Dummy Variables in Multiple Regression Analysis


Multiple Regression Analysis

y = β0 + β1x1 + β2x2 + ... + βkxk + u

5. Dummy Variables


Dummy Variables

- A dummy variable (or “indicator variable”) is a variable that takes on the value 1 or 0.
- Examples:
  - male (=1 if male, 0 otherwise)
  - south (=1 if in the south, 0 otherwise)
  - year70 (=1 if year = 1970, 0 otherwise)
- Dummy variables are also called binary variables, for obvious reasons.

A Dummy Independent Variable

- Dummy variables can be quite useful in regression analysis.
- Consider the simple model with one continuous variable (x) and one dummy (d):
  y = β0 + δ0d + β1x + u
- We can interpret d as an intercept shift:
  - If d = 0, then y = β0 + β1x + u
  - If d = 1, then y = (β0 + δ0) + β1x + u
- Think of the case where d = 0 as the base group (or reference group).

2

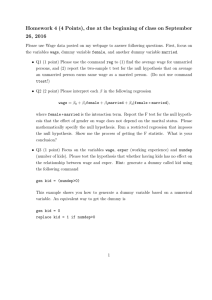

Example of δ0 > 0

y

y=(β0 + δ0) + β1x

d= 1

δ0

{

d=0

} β0

slope = β1

for both!

y = β0 + β1x

x

3
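You can see the intercept-shift interpretation in numbers by simulating data with known parameters. OLS should then return one common slope and two intercepts. This is a minimal sketch that assumes NumPy is available. The parameter values (β0 = 1, δ0 = 2, β1 = 0.5), the seed and the helper name `ols` are illustrative choices, not part of the original notes.

```python
import numpy as np

def ols(X, y):
    """Least-squares coefficients for y = X b (X already includes the intercept column)."""
    coef, *_ = np.linalg.lstsq(X, y, rcond=None)
    return coef

# Simulated data with assumed values: beta0 = 1, delta0 = 2, beta1 = 0.5
rng = np.random.default_rng(0)
n = 500
x = rng.uniform(0, 10, n)
d = rng.integers(0, 2, n)          # dummy: 1 for the shifted group, 0 for the base group
u = rng.normal(0, 1, n)
y = 1.0 + 2.0 * d + 0.5 * x + u

X = np.column_stack([np.ones(n), d, x])   # intercept, dummy, continuous regressor
b0, delta0, b1 = ols(X, y)
print(f"base-group intercept  {b0:.2f}")
print(f"d=1 group intercept   {b0 + delta0:.2f}")
print(f"common slope          {b1:.2f}")
```

The estimate of δ0 should come out close to 2 and the slope close to 0.5. The single slope is shared by both groups, as in the figure.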

Dummy Trap

- Suppose we include a dummy variable called female (=1 if female, 0 otherwise).
- We could not also include a dummy variable called male (=1 if male, 0 otherwise).
- This is because female = 1 − male for every observation i. The intercept column therefore equals female + male, which is perfect collinearity.
- Intuitively, it wouldn’t make sense to have both: we only need 2 intercepts for the 2 groups.
- In general, with n groups, use n − 1 dummies (plus the intercept).
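You can check the collinearity directly. With an intercept column, `female` and `male = 1 - female`, the design matrix loses a rank, so X'X is singular and OLS has no unique solution. A minimal NumPy sketch with made-up data:

```python
import numpy as np

# Hypothetical 0/1 data for six people
female = np.array([1, 0, 1, 1, 0, 0])
male = 1 - female                       # male is an exact linear function of female

intercept = np.ones(len(female))

X_trap = np.column_stack([intercept, female, male])   # intercept + both dummies
X_ok = np.column_stack([intercept, female])           # intercept + one dummy (base group: male)

# intercept = female + male, so X_trap has only 2 linearly independent columns
print(np.linalg.matrix_rank(X_trap))   # 2 (three columns but rank 2: perfect collinearity)
print(np.linalg.matrix_rank(X_ok))     # 2 (full column rank)
```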

Dummies With log(y)

- Not surprisingly, when y is in log form, the coefficients have a percentage interpretation.
- Suppose the model is:
  log(y) = β0 + δ0d + β1x + u
- 100·δ0 is (very roughly) the percentage difference in y between the two groups, holding x fixed.
- Example: a regression of the log of housing prices on a dummy for “heritage” status and square feet.

Interpreting coefficient on dummy with logs

Actually, it’s not correct to say that 100·δ0 is the percent change in y associated with a one-unit change in d. Because the model is nonlinear in y, we have to think of the RHS parameters as partial derivatives of the LHS (log y) with respect to the RHS variables of interest.

But when we take a derivative, we are asking what the change in the LHS is as we make a small change in one of the RHS variables, holding the others constant.

Changing a dummy variable from 0 to 1 is not a small change. It’s a discrete jump.

Interpreting coefficient on dummy with log(y)

Changing d from 0 to 1 leads to a $100\,(e^{\delta_0} - 1)\%$ change in y.

Changing d from 1 to 0 leads to a $100\,(e^{-\delta_0} - 1)\%$ change in y.

For instance, if δ0 = 0.2, changing d from 0 to 1 leads to a 22% increase in y, since $100\,(e^{0.2} - 1) \approx 22.1$. Changing d from 1 to 0 leads to an 18% decrease in y, since $100\,(e^{-0.2} - 1) \approx -18.1$.

For small values of δ0, the “100·δ0 %” approximation will be close; not so for larger values.
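The short sketch below compares the rough 100·δ0 approximation with the exact formulas above for a few illustrative values of δ0. The chosen values are arbitrary.

```python
import math

def pct_change_exact(delta0):
    """Exact % change in y when d goes from 0 to 1 in log(y) = b0 + delta0*d + ..."""
    return 100 * (math.exp(delta0) - 1)

def pct_change_reverse(delta0):
    """Exact % change in y when d goes from 1 to 0."""
    return 100 * (math.exp(-delta0) - 1)

for delta0 in (0.02, 0.2, 0.5):
    approx = 100 * delta0
    print(f"delta0={delta0:<5} approx {approx:6.1f}%  "
          f"exact 0->1 {pct_change_exact(delta0):6.1f}%  "
          f"exact 1->0 {pct_change_reverse(delta0):6.1f}%")
```

The gap between the approximation and the exact values widens as δ0 grows. The 0→1 and 1→0 changes are also not symmetric.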

Interpreting coefficient on dummy with log(y)

Things get slightly more complicated when we obtain an estimate of δ0. You can’t just plug the OLS estimate of δ0 into the formula on the previous slide, as this will produce a biased estimate of the effect of changing d from 0 to 1.

Instead, for an estimate of the effect of changing d from 0 to 1, use the formula:

$$100\left(e^{\hat{\delta}_0 - \frac{1}{2}\left[\mathrm{se}(\hat{\delta}_0)\right]^2} - 1\right)\%$$
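The adjusted formula is easy to compute once you have δ̂0 and its standard error from the regression output. This is a minimal sketch; the inputs (δ̂0 = 0.2, se = 0.05) and the function name are hypothetical.

```python
import math

def dummy_effect_pct(delta_hat, se_delta_hat):
    """Adjusted estimate of the % change in y when d goes from 0 to 1:
    100 * (exp(delta_hat - 0.5 * se^2) - 1)."""
    return 100 * (math.exp(delta_hat - 0.5 * se_delta_hat ** 2) - 1)

# Hypothetical OLS output: delta_hat = 0.2 with standard error 0.05
naive = 100 * (math.exp(0.2) - 1)          # plug-in value (biased)
adjusted = dummy_effect_pct(0.2, 0.05)
print(f"naive plug-in: {naive:.2f}%   adjusted: {adjusted:.2f}%")
```

The adjustment is small when se(δ̂0) is small. Setting the standard error to zero reproduces the plug-in value.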

For more on quirks involving dummy variables, see http://davegiles.blogspot.ca/2011/03/dummies-fordummies.html

Dummies for Multiple Categories

1. Several dummy variables can be included in the same equation, e.g.,
   y = β0 + δ0d0 + δ1d1 + β1x + u
   - Here the base case is whatever is implied by d0 = d1 = 0 (i.e., when all the dummy variables are zero).
   - Example:
     log(hprice) = β0 + δ0heritage + δ1pool + β1sqft + u
     - The base case is a non-heritage house with no pool.
     - δ0 gives information about the effect of heritage status (vs. not) on house price; δ1 gives information about how a pool changes house value.

Dummies for Multiple Categories

2. We can use dummy variables to control for one variable with more than two categories.
   - Suppose everyone in your data is either a HS dropout, a HS grad only, or a college grad (at least).
   - To compare HS and college grads to HS dropouts, include 2 dummy variables:
     hsgrad = 1 if HS grad only, 0 otherwise; and
     colgrad = 1 if college grad, 0 otherwise

Multiple Categories (cont)

- Any categorical variable can be turned into a set of dummy variables.
- However, because the base group is represented by the intercept, if there are n categories there should be n − 1 dummy variables.
  - Example: could turn # of bedrooms into dummies.
- If there are a lot of categories, it may make sense to group some of them together.
  - Example: age, usually divided into age categories.
- In fact, we can convert continuous variables as well.
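Turning a categorical variable into n − 1 dummies can be sketched in plain Python as follows. The function name `make_dummies` and the sample data are hypothetical. The category named as `base` becomes the reference group absorbed by the intercept.

```python
def make_dummies(values, base):
    """Turn a list of category labels into a dict of 0/1 dummy columns,
    one per category except the base (reference) category."""
    categories = sorted(set(values))
    if base not in categories:
        raise ValueError(f"base category {base!r} not found in data")
    return {
        cat: [1 if v == cat else 0 for v in values]
        for cat in categories
        if cat != base
    }

# Hypothetical education data with three categories -> two dummies
educ = ["dropout", "hsgrad", "colgrad", "hsgrad", "dropout", "colgrad"]
dummies = make_dummies(educ, base="dropout")
for name, column in dummies.items():
    print(name, column)
```

If you work in pandas, `pandas.get_dummies(..., drop_first=True)` does a similar job. It drops the first level rather than a base category you name explicitly.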

Interactions Among Dummies

- Interacting dummies is like subdividing the group.
  - Example: have dummies for male, as well as hsgrad and colgrad.
- Could include the new variables male*hsgrad and male*colgrad.
- We now have a total of 5 dummy variables, or 6 categories.

Base group is female HS dropouts.

Dummy          Category
hsgrad         female HS grads
male*hsgrad    male HS grads
colgrad        female college grads
male*colgrad   male college grads
male           male HS dropouts

More on Dummy Interactions

Formally, the model is
y = β0 + δ1male + δ2hsgrad + δ3colgrad + δ4male*hsgrad + δ5male*colgrad + β1x + u,
then, for example:
1. If male = 0, hsgrad = 0, and colgrad = 0:
   y = β0 + β1x + u
2. If male = 0, hsgrad = 1, and colgrad = 0:
   y = β0 + δ2hsgrad + β1x + u = (β0 + δ2) + β1x + u
3. If male = 1, hsgrad = 0, and colgrad = 1:
   y = β0 + δ1male + δ3colgrad + δ5male*colgrad + β1x + u = (β0 + δ1 + δ3 + δ5) + β1x + u
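Plugging 0/1 values into the interacted model gives each of the six groups its own intercept. The sketch below does this mechanically, so you can check cases 1 to 3 above. The coefficient values (β0, δ1, ..., δ5) are made up for illustration.

```python
from itertools import product

# Hypothetical coefficient values, for illustration only
beta0, d1, d2, d3, d4, d5 = 1.0, 0.3, 0.4, 0.9, 0.1, -0.2

def group_intercept(male, hsgrad, colgrad):
    """Intercept of y = b0 + d1*male + d2*hsgrad + d3*colgrad
    + d4*male*hsgrad + d5*male*colgrad + b1*x for one group."""
    return (beta0 + d1 * male + d2 * hsgrad + d3 * colgrad
            + d4 * male * hsgrad + d5 * male * colgrad)

# Education categories are mutually exclusive: at most one of hsgrad, colgrad is 1
educ_levels = {"HS dropout": (0, 0), "HS grad": (1, 0), "college grad": (0, 1)}
for male, (label, (hs, col)) in product((0, 1), educ_levels.items()):
    sex = "male" if male else "female"
    print(f"{sex:6} {label:12} intercept = {group_intercept(male, hs, col):.2f}")
```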

Other Interactions with Dummies

- Can also consider interacting a dummy variable, d, with a continuous variable, x:
  y = β0 + δ0d + β1x + δ1d*x + u
  - If d = 0, then y = β0 + β1x + u
  - If d = 1, then y = (β0 + δ0) + (β1 + δ1)x + u
  - i.e., the dummy variable allows both the intercept and the slope to change depending on whether it is “switched” on or off.
  - Example: suppose δ0 > 0 and δ1 < 0.

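Example of δ0 > 0 and δ1 < 0

[Figure: y plotted against x. The d = 0 line is y = β0 + β1x. The d = 1 line is y = (β0 + δ0) + (β1 + δ1)x, which has a higher intercept (δ0 > 0) but a smaller slope (δ1 < 0).]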
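As with the intercept shift, a simulation with known parameters shows OLS recovering a separate intercept and slope for each group. The values assumed here are β0 = 1, δ0 = 2, β1 = 0.8 and δ1 = −0.5. This is a NumPy sketch, not a full estimation routine.

```python
import numpy as np

# Simulated data with assumed values: beta0=1, delta0=2, beta1=0.8, delta1=-0.5
rng = np.random.default_rng(1)
n = 1000
x = rng.uniform(0, 10, n)
d = rng.integers(0, 2, n)
y = 1.0 + 2.0 * d + 0.8 * x - 0.5 * d * x + rng.normal(0, 1, n)

# Columns: intercept, d, x, d*x
X = np.column_stack([np.ones(n), d, x, d * x])
(b0, delta0, b1, delta1), *_ = np.linalg.lstsq(X, y, rcond=None)

print(f"d=0 line: intercept {b0:.2f}, slope {b1:.2f}")
print(f"d=1 line: intercept {b0 + delta0:.2f}, slope {b1 + delta1:.2f}")
```

Here δ0 > 0 and δ1 < 0, matching the figure: the d = 1 line starts higher but rises more slowly.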

Testing for Differences Across Groups

- Sometimes we will want to test whether a regression function (intercept and slopes) differs across groups.
  - Example: wage equation
    log(wage) = β0 + β1educ + β2exper + u
- We might think that the intercept and slopes will differ for men and women (e.g., because of discrimination), so
  log(wage) = β0 + δ0male + β1educ + δ1male*educ + β2exper + δ2male*exper + u

Testing Across Groups (cont)

- This can be thought of as simply testing for the joint significance of the dummy and its interactions with all the other x variables.
- So, we could estimate the model with all the interactions (unrestricted) and without them (restricted), and form the F statistic.
- However, forming all of the interaction variables etc. can be unwieldy.
- There is an alternative way to form the F statistic.

The Chow Test

- It turns out that we can compute the proper F statistic without estimating the unrestricted model.
- In the general model with an intercept and k continuous variables, we can test for differences across two groups as follows:
  1. Run the restricted model for group one to get SSR1, then for group two to get SSR2.
  2. Run the restricted model for all observations to get SSR, then compute

$$F = \frac{SSR - (SSR_1 + SSR_2)}{SSR_1 + SSR_2} \cdot \frac{n - 2(k+1)}{k+1}$$
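The recipe above can be sketched with NumPy. The function names `ssr` and `chow_f` and the simulated wage-style data are hypothetical. The function assumes the design matrix already contains the intercept, so it has k + 1 columns.

```python
import numpy as np

def ssr(X, y):
    """Sum of squared OLS residuals from regressing y on X."""
    coef, *_ = np.linalg.lstsq(X, y, rcond=None)
    resid = y - X @ coef
    return float(resid @ resid)

def chow_f(X, y, group):
    """Chow F statistic for equality of all k+1 coefficients across two groups.
    X must include the intercept column; group is a boolean array."""
    n, k_plus_1 = X.shape
    ssr_pooled = ssr(X, y)                  # restricted: one regression for everyone
    ssr_1 = ssr(X[group], y[group])         # group one on its own
    ssr_2 = ssr(X[~group], y[~group])       # group two on its own
    ssr_ur = ssr_1 + ssr_2                  # SSR of the unrestricted model
    return ((ssr_pooled - ssr_ur) / ssr_ur) * ((n - 2 * k_plus_1) / k_plus_1)

# Simulated data (assumed values): groups differ in both intercept and slope
rng = np.random.default_rng(2)
n = 400
educ = rng.uniform(8, 20, n)
male = rng.integers(0, 2, n).astype(bool)
logwage = 0.5 + 0.08 * educ + 0.2 * male + 0.02 * male * educ + rng.normal(0, 0.3, n)

X = np.column_stack([np.ones(n), educ])     # intercept + k = 1 regressor
print(f"Chow F = {chow_f(X, logwage, male):.2f} with df = (2, {n - 4})")
```

Because SSRur = SSR1 + SSR2, this value matches the F statistic from the fully interacted model (dummy plus all its interactions) estimated on the pooled sample. With k = 1, the denominator df is n − 2(k + 1) = n − 4.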

The Chow Test (continued)

- Notice that the Chow test is really just a simple F test for exclusion restrictions; we’ve just realized that SSRur = SSR1 + SSR2.
- The unrestricted model is exactly the same as running the restricted model for the groups separately.
- Note that we have k + 1 restrictions (each of the slope coefficients and the intercept).
- Note also that the unrestricted model estimates 2 different intercepts and 2 different sets of k slope coefficients, so the denominator df is n − 2(k + 1) = n − 2k − 2.

Linear Probability Model

- Binary variables can also be introduced on the LHS of an equation,
  - e.g., y = 1 if employed, 0 otherwise.
- In this setting the βj’s have a different interpretation.
- As long as E(u|x) = 0, then
  E(y|x) = β0 + β1x1 + … + βkxk, or, since E(y|x) = P(y = 1|x) when y is binary,
  P(y = 1|x) = β0 + β1x1 + … + βkxk
- The probability of “success” is a linear function of the x’s.

Linear Probability Model (cont)

- So, the interpretation of βj is the change in the probability of success (y = 1) when xj changes, holding the other x’s fixed.
  - If xj is education and y is as above, βj is the change in the probability of being employed when education increases by 1 year.
- Predicted y is the predicted probability of success.
- One potential problem is that our predictions can be outside [0, 1].
  - How can a probability be greater than 1?
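A quick simulation shows how LPM fitted values can escape [0, 1]. The data-generating process and all the numbers are assumptions for illustration; the logistic curve in education is used only to draw y. The LPM itself is just OLS on the 0/1 outcome.

```python
import numpy as np

# Simulated binary outcome (assumed process): employment probability rises
# with education and saturates near 1 for high education
rng = np.random.default_rng(3)
n = 2000
educ = rng.uniform(6, 20, n)
p_true = 1 / (1 + np.exp(-(educ - 10)))      # used only to generate y
employed = (rng.uniform(size=n) < p_true).astype(float)

# LPM: ordinary OLS of the 0/1 outcome on education
X = np.column_stack([np.ones(n), educ])
coef, *_ = np.linalg.lstsq(X, employed, rcond=None)
p_hat = X @ coef                              # fitted values = predicted probabilities

print(f"slope: each extra year changes P(employed) by {coef[1]:.3f}")
print(f"fitted values range from {p_hat.min():.2f} to {p_hat.max():.2f}")
print(f"share of predictions outside [0, 1]: {np.mean((p_hat < 0) | (p_hat > 1)):.1%}")
```

Because the true probability flattens out near 1 while the fitted line keeps rising, the most educated observations get predicted "probabilities" above 1.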

Linear Probability Model (cont)

- Even without predictions outside of [0, 1], we may estimate marginal effects that are greater than 1 or less than −1.
  - Usually best to use values of independent variables near the sample means.
- This model will violate the assumption of homoskedasticity, because Var(y|x) = P(y = 1|x)[1 − P(y = 1|x)] depends on x.
  - Problem? The usual standard errors are not valid (correctable, e.g., with heteroskedasticity-robust standard errors).
- Despite these drawbacks, it’s usually a good place to start when y is binary.
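In the LPM, Var(y|x) = p(x)[1 − p(x)], so the usual OLS standard errors are not valid. One standard correction is heteroskedasticity-robust (White) standard errors. The sketch below computes the HC1 variant by hand with NumPy on simulated data. The function name and the data-generating values are assumptions.

```python
import numpy as np

def ols_with_robust_se(X, y):
    """OLS coefficients with conventional and heteroskedasticity-robust (HC1)
    standard errors. X must include an intercept column."""
    n, k = X.shape
    XtX_inv = np.linalg.inv(X.T @ X)
    coef = XtX_inv @ X.T @ y
    resid = y - X @ coef
    # Conventional SEs assume Var(u|x) is constant
    sigma2 = resid @ resid / (n - k)
    se_conv = np.sqrt(np.diag(sigma2 * XtX_inv))
    # Robust "sandwich": (X'X)^-1 X' diag(u^2) X (X'X)^-1, scaled by n/(n-k)
    meat = X.T @ (X * resid[:, None] ** 2)
    cov_hc1 = XtX_inv @ meat @ XtX_inv * n / (n - k)
    se_robust = np.sqrt(np.diag(cov_hc1))
    return coef, se_conv, se_robust

# Simulated LPM data (assumed): P(y=1|x) = 0.1 + 0.08x, so Var(y|x) varies with x
rng = np.random.default_rng(4)
n = 1000
x = rng.uniform(0, 10, n)
p = 0.1 + 0.08 * x
y = (rng.uniform(size=n) < p).astype(float)
X = np.column_stack([np.ones(n), x])

coef, se_conv, se_robust = ols_with_robust_se(X, y)
print("coef      ", np.round(coef, 3))
print("usual SE  ", np.round(se_conv, 4))
print("robust SE ", np.round(se_robust, 4))
```

In statsmodels, `sm.OLS(y, X).fit(cov_type="HC1")` should give the same robust standard errors.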

Nonlinear Alternatives to LPM

- If you go on to practice econometrics with limited dependent variables (such as dummies), you will learn about some nonlinear models that may be more appropriate,
  - e.g., logit and probit models.
  - These are a little more complicated to work with than the LPM (marginal effects differ from coefficient estimates, as in other nonlinear models).
  - Advantages: they restrict predicted values to lie between 0 and 1, and they don’t have built-in heteroskedasticity like the LPM.
  - EViews and other packages can estimate such models.
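The sketch below shows why logit marginal effects differ from the coefficients. The logit coefficients (b0 = −5, b1 = 0.5) are hypothetical and not estimated from any data. The predicted probability stays strictly between 0 and 1, and the marginal effect b1·p·(1 − p) changes with x.

```python
import math

def logit_prob(b0, b1, x):
    """Logit P(y=1|x) = 1 / (1 + exp(-(b0 + b1*x))); always strictly between 0 and 1."""
    return 1 / (1 + math.exp(-(b0 + b1 * x)))

def logit_marginal_effect(b0, b1, x):
    """dP/dx for the logit model: b1 * p * (1 - p), which depends on x."""
    p = logit_prob(b0, b1, x)
    return b1 * p * (1 - p)

# Hypothetical logit coefficients, for illustration only
b0, b1 = -5.0, 0.5
for x in (0, 10, 20, 40):
    print(f"x={x:>2}  P(y=1)={logit_prob(b0, b1, x):.3f}  "
          f"dP/dx={logit_marginal_effect(b0, b1, x):.4f}")
```

The marginal effect peaks where p = 0.5 and shrinks towards 0 in the tails. In the LPM, by contrast, it is the same constant everywhere.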

Policy Analysis Using Dummy Variables

- Policy analysts often use dummies to measure the effect of a government program.
  - e.g., What’s the effect of raising the minimum wage?
  - A simple before/after comparison could be done like this:
    - Take a sample of low-wage firms (say, fast food restaurants) in a state or province that is changing its minimum wage law.
    - Sample restaurants before the minimum wage increase, then again after it (we could use the same restaurants or different ones, as long as sampling is random).
    - Estimate a model of staffing levels, where Post is a dummy indicating whether the observation is for a firm in the post-increase regime or the pre-increase regime (=1 if post):

$$\#\,employees_{it} = \beta_0 + \beta_1 Post_{it} + u_{it}$$
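With only a Post dummy on the right-hand side, OLS simply compares means. β̂0 is the pre-increase average and β̂1 is the post-minus-pre difference. A NumPy sketch with hypothetical staffing numbers:

```python
import numpy as np

# Hypothetical staffing levels (employees per restaurant), for illustration only
pre = np.array([20.0, 18.5, 22.0, 19.0, 21.5])
post = np.array([19.5, 18.0, 21.0, 20.0, 20.5, 19.0])

employees = np.concatenate([pre, post])
post_dummy = np.concatenate([np.zeros(len(pre)), np.ones(len(post))])

# OLS of employees on an intercept and the Post dummy
X = np.column_stack([np.ones(len(employees)), post_dummy])
(b0, b1), *_ = np.linalg.lstsq(X, employees, rcond=None)

print(f"b0 = {b0:.3f} (pre-increase mean {pre.mean():.3f})")
print(f"b1 = {b1:.3f} (post mean - pre mean {post.mean() - pre.mean():.3f})")
```

Because β̂1 is just a difference in means, anything else that shifted average staffing between the two periods ends up in it too.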

Policy Analysis Using Dummy Variables

- If the zero conditional mean assumption holds, then β̂1 gives us the effect of increasing the minimum wage on employment levels at these firms. What sign do you expect?
  - Do you see any reason the zero conditional mean assumption might fail?
  - What if something else changes as the policy regime changes?
    - The economy gets better or worse.
    - Taxes on businesses change.
    - Immigration increases the size of the local labour force.
  - Any of these changes, if not controlled for, would be captured in u and would be correlated with Post: a violation of the zero conditional mean assumption.
  - Is there a way (other than controlling for all possible things that might change simultaneously with the minimum wage law) we can deal with this problem?

Policy Analysis Using Dummy Variables

- It would be nice if we had a “control group”: a bunch of similar firms existing under similar circumstances that didn’t experience the minimum wage increase.
  - How about fast food restaurants on the other side of the state/provincial border?
    - Similar economic circumstances
    - Similar labour supply issues

$$\#\,employees_{it} = \beta_0 + \beta_1 Post_{it} + \beta_2 NJ + \beta_3 (Post \times NJ)_{it} + u_{it}$$

- NJ = 1 if the firm is in NJ; = 0 if the firm is in Pennsylvania.
- Post*NJ is an interaction of the two dummies.

Policy Analysis Using Dummy Variables

- What this analysis does is look at the effect of an increase in the minimum wage in NJ, using fast food restaurants in Pennsylvania as a control group.
  - We have to assume that other determinants of employment that change at the same time as the minimum wage are changing in both states, and with equal magnitudes (recessions, booms, etc.).
  - One way to reassure ourselves (other than looking at data on the local economies) is to look at employment trends in fast food (FF) restaurants in both states. If they tend to move together closely, that will provide some reassurance about our “identifying assumption”.

Policy Analysis Using Dummy Variables

- Note that the ideal experiment would let us observe NJ at one point in time, simultaneously in 2 states of the world:
  - the state with no minimum wage increase, and
  - the state with the minimum wage increase.
- Unfortunately we can’t observe these parallel universes; with this approach we’re trying to do the next best thing.
  - We’re inferring a counterfactual (a parallel universe where NJ experiences no minimum wage increase) by observing what happens in PA.
  - The counterfactual for NJ assumes NJ follows the same trend as PA. We then compare the actual outcome in NJ (with the minimum wage increase) to the (inferred) counterfactual for NJ.
  - This gives us our estimate of the effect of increasing the minimum wage.

Policy Analysis Using Dummy Variables

- Focus on this picture and see if you can deduce for yourself what the effect of a minimum wage increase in NJ is (assuming common trends in employment in PA and NJ).

Policy Analysis Using Dummy Variables

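$$\#\,employees_{it} = \beta_0 + \beta_1 Post_{it} + \beta_2 NJ + \beta_3 (Post \times NJ)_{it} + u_{it}$$

[Figure: average employment in PA and NJ before and after the minimum wage increase, with the estimated coefficients β̂0, β̂1, β̂2 and β̂3 marked on the graph; in the example drawn, β̂1, β̂2 < 0 and β̂0, β̂3 > 0.]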

Policy Analysis Using Dummy Variables

$$\#\,employees_{it} = \beta_0 + \beta_1 Post_{it} + \beta_2 NJ + \beta_3 (Post \times NJ)_{it} + u_{it}$$

- Given this model, average employment at a firm is
  - β0 in PA before the min wage increase
  - β0 + β1 in PA after the min wage increase
  - β0 + β2 in NJ before the min wage increase
  - β0 + β1 + β2 + β3 in NJ after the min wage increase
- Change in employment in NJ: β1 + β3
- Change in employment in PA: β1
  - Note: this is the counterfactual change in employment for NJ.
- Diff-in-diff (ΔNJ − ΔPA): β3
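The four cell averages above map one-to-one onto the regression coefficients. The diff-in-diff can therefore be computed either from means or from OLS with the interaction term. A NumPy sketch with hypothetical employment numbers (not Card and Krueger's data):

```python
import numpy as np

# Hypothetical restaurant-level employment data, for illustration only
# Each row: (nj, post, employees)
data = np.array([
    (0, 0, 23.0), (0, 0, 21.0), (0, 0, 22.0),   # PA, before
    (0, 1, 20.0), (0, 1, 21.0), (0, 1, 19.0),   # PA, after
    (1, 0, 20.0), (1, 0, 19.0), (1, 0, 21.0),   # NJ, before
    (1, 1, 20.5), (1, 1, 21.0), (1, 1, 20.0),   # NJ, after
])
nj, post, emp = data[:, 0], data[:, 1], data[:, 2]

def cell_mean(nj_val, post_val):
    """Average employment in one state/period cell."""
    return emp[(nj == nj_val) & (post == post_val)].mean()

# Diff-in-diff from the four cell means: (change in NJ) - (change in PA)
did_means = (cell_mean(1, 1) - cell_mean(1, 0)) - (cell_mean(0, 1) - cell_mean(0, 0))

# Same number from OLS: employees = b0 + b1*Post + b2*NJ + b3*Post*NJ + u
X = np.column_stack([np.ones(len(emp)), post, nj, post * nj])
b, *_ = np.linalg.lstsq(X, emp, rcond=None)

print(f"diff-in-diff from means: {did_means:.3f}")
print(f"OLS coefficient b3:      {b[3]:.3f}")
```

With these made-up numbers, β̂1 and β̂2 are negative and β̂0 and β̂3 are positive, the same sign pattern as the example in the picture.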

Policy Analysis Using Dummy Variables

- This is called a “difference-in-differences” analysis.
  - β̂3 gives the effect of the minimum wage.
- Card and Krueger (1994) studied a minimum wage law change in NJ in 1992, using fast food restaurants in NJ and eastern PA as the sample.
  - They famously found no evidence that the minimum wage increase reduced employment.
  - Some follow-up studies have disputed their findings, though there is not much evidence of a large effect of the minimum wage on employment.
  - One hypothesis: the minimum wage was so low to begin with that it was close to the equilibrium (market-clearing) wage.
  - This remains a point of empirical study and argument.

Policy Analysis Using Dummy Variables

- We can denote a generic diff-in-diff specification as

$$Outcome_{it} = \beta_0 + \beta_1 Post_{it} + \beta_2 Treat + \beta_3 (Post \times Treat)_{it} + u_{it}$$

- It’s not hard to think of dozens of cases in policy analysis where this type of approach could be used:
  - Effect of changes in welfare generosity on
    - employment
    - fertility
    - marriage
    - school performance of kids of recipients
  - Effect of changes in divorce laws on
    - divorce
    - child outcomes
    - domestic violence incidents

Policy Analysis Using Dummy Variables

- Note the crucial assumption: common trends.
  - If employment in NJ and PA always moves together, or (more generally) if the outcome variable for Treat = 1 and Treat = 0 always moves together, then this is likely a valid assumption.
  - If employment in NJ and PA often moves together but sometimes randomly deviates (for reasons other than changes in minimum wage laws), then the assumption is more suspect.
- Does the minimum wage really increase employment?
  - Seems unlikely theoretically.
  - A more sensible conclusion would be one of two things. Either the minimum wage has no effect or a small effect on employment, OR something else changed in NJ (or PA) at the same time as the minimum wage law change and is driving their finding (i.e., their results are subject to bias).

Caveats on Policy Analysis When Those Studied Choose Whether to be Treated

- Consider a job training program that unemployed workers have the option of signing up for.
- If individuals choose whether to participate in a program, this may lead to a self-selection problem.
  - e.g., those who apply for job training may be more diligent, or may have had to qualify.

Self-selection Problems

- If we can control for everything that is correlated with both participation and the outcome of interest, then it’s not a problem.
- Often, though, there are unobservables that are correlated with participation (and with the outcome).
- In this case, the estimate of the program effect is biased, and we don’t want to set policy based on it!