Class Notes 10: MULTI-PARAMETER ITERATION(CONTINUED

advertisement

Class Notes 10: MULTI-PARAMETER ITERATION(CONTINUED)

AND THE STEEPEST DESCENT METHOD.

Math 639d

Due Date: Nov. 6

(updated: October 30, 2014)

We continue the analysis of the multi-parameter iteration in the case when

A is symmetric and positive definite and we have bounds on the spectrum,

0 < λ0 ≤ λ ≤ λ∞ , for all λ ∈ σ(A).

In the last class, we used the Chebyshev polynomials to develop a polynomial for multi-parameter iteration. Specifically, we set

(10.1)

RM (x) =

where

eM (λ) = TM (x(λ))

R

(10.2)

and

(10.3)

eM (x)

R

= 1 + xQM −1 (x)

eM (0)

R

x(λ) = −1 +

2

(λ − λ0 ).

λ∞ − λ0

In this class, we shall show that this is the optimal choice (assuming that

we only know information about the largest and smallest eigenvalue) and

estimate the resulting spectral radius ρ(GM ). That this is the optimal choice

is the conclusion of the following theorem.

Theorem 1. Let QM −1 be as above and set

(10.4)

ρ=

max

λ∈[λ0 ,λ∞ ]

|1 + λQM −1 (λ)|.

If Q is any other polynomial of degree M − 1, then

η=

max

λ∈[λ0 ,λ∞ ]

|1 + λQ(λ)| ≥ ρ.

Proof. Suppose to the contrary that η is less than ρ. Consider the polynomial

P (λ) = (1 + λQM −1 (λ)) − (1 + λQ(λ)) =: RM (λ) − R(λ). Now RM (λ) has

M + 1 extreme points in the interval [λ0 , λ∞ ] with oscillating sign. As η

is less than ρ, the sign of P (λ) = RM (λ) − R(λ) is the same as RM (λ) at

each of these points. This implies that P (λ) has at least M distinct zeroes

in the interval [λ0 , λ∞ ]. It also vanishes at λ = 0, i.e., PM has at least

M + 1 distinct zeroes. This implies that PM is the zero polynomial and is a

contradiction to the assumption that η is strictly less than ρ.

1

2

Remark 1. To implement the multi-parameter iteration, we need to compute

the parameters {τ1 , τ2 , . . . , τM } corresponding to our optimal QM −1 . Recall

from the previous class that τi = 1/ri , where ri is the i’th root of RM . The

roots of RM can be computed from those of PM because of (10.1)-(10.3). The

roots of PM can be computed from the zeroes of cos, indeed, we recall that

TM (x) = cos(M cos−1 (x)) for x ∈ [−1, 1]

and is zero when

M cos−1 (xj ) = (j − 1/2)π or xj = cos((j − 1/2)π/M ),

j = 1, 2, . . . , M.

To compute the corresponding values of λ, we simply use the inverse of the

linear map λ → x(λ) given in (10.3), specifically,

λ(x) = λ0 +

and obtain

λ∞ − λ0

(x + 1)

2

λ∞ − λ0

(xj + 1)

2

λ∞ − λ0

(cos((j − 1/2)π/M ) + 1).

= λ0 +

2

ri = λ0 +

Remark 2. (Warning) Once we have the parameters {τ1 , τ2 , . . . , τM }, the

multi-parameter iteration is as given by (9.1) of the previous class. This leads

to an effective iteration method provided that M is not too large. However,

stability problems have been observed using this implementation and large

M for certain applications. Alternatively, it is possible the develop an implementation based on the three term recurrence satisfied by the Chebyschev

polynomials. This implementation avoids explicit use of the zeroes of RM

and appears to be more stable. The recurrence implementation was popular

until the discovery of the conjugate gradient method (CG) (developed in the

next class). By in large, in practice, the above method has been replaced

by CG. We study the multi-parameter method here as the results which we

obtain for it will form the basis for the analysis of CG.

The above theorem shows that QM −1 is the best polynomial given only

the bounds λ0 , λ∞ on the spectrum. We are left to estimate ρ(GM ) for this

choice of QM −1 . In the previous class we observed that

ρ(GM ) ≤

max

λ∈[λ0 ,λ∞ ]

|1 + Q̃M −1 (λ)| =

with

x(0) = −1 −

1

eM (0)|

|R

=

1

|TM (x(0))|

2

λ∞ + λ0

λ0 = −

=: −γ.

λ∞ − λ0

λ∞ − λ0

3

Since the highest order term in TM has a positive coefficient, TM (x) is strictly

increasing for x > 1 so TM (x) > TM (1) = 1 (we already observed that TM (x)

was monotone on either side of (−1, 1)). Moreover, TM is either an even or

an odd polynomial so |TM (−γ)| = TM (γ) (note that γ > 1). We need a

more convenient expression for TM (γ) when γ > 1 ( (9.7) of the previous

class is not valid for such values of γ). Instead, we consider the formula

(10.5)

TM (γ) = cosh(M cosh−1 (γ)).

That this is a valid expression for TM (γ) when γ > 1 is shown by showing

that it gives the right polynomials for M = 0 and M = 1 and satisfies the

recurrence relation (9.8), i.e., one checks that

cosh(0 cosh−1 (x)) = 1

cosh(cosh

−1

(x)) = x

(obvious),

(obvious),

cosh((M + 1) cosh−1 (x)) = 2x cosh(M cosh−1 (x))

− cosh((M − 1) cosh−1 (x)).

The derivation of the recurrence relation above is similar to the argument

used in showing that the formula (9.7) satisfies the recurrence (9.8) but uses

the analogous identities involving hyperbolic functions.

Using (10.5) gives for θ = cosh−1 (γ),

1

1

eM θ + e−M θ

≥ eM θ = (eθ )M

2

2

2

p

M

1

1

M

= (cosh(θ) + sinh(θ)) = γ + γ 2 − 1 ,

2

2

where we used the identity

q

sinh(θ) = cosh2 (θ) − 1.

TM (γ) = cosh(M θ) =

Let K = λ∞ /λ0 then γ = (K + 1)/(K − 1) so

√

√

p

K

K + 1)2

(

K

+

1

+

2

√

= √

γ + γ2 − 1 =

K −1

( K + 1)( K − 1)

√

K +1

=√

.

K −1

Combining the above inequalities gives

√

K −1 M

1

≤2 √

ρ(GM ) =

.

TM (γ)

K +1

We have proved the following theorem.

4

Theorem 2. (Multi-Parameter) Let A be a symmetric and positive definite

n × n real matrix whose spectrum satisfies 0 < λ0 ≤ λ ≤ λ∞ , for all λ ∈

σ(A). Let RM be defined by (10.1)-(10.3) and τi , i = 1, . . . , M be defined

by Remark 1. Then the multi-parameter iteration defined by (9.1) has a

reduction matrix GM satisfying

√

K −1 M

ρ(GM ) ≤ 2 √

K +1

where K = λ∞ /λ0 . Moreover,

Y

√

M

K −1 M

(10.6)

kGM k = (I − τj A) ≤ 2 √

K +1

j=1

where k · k is the operator norm induced by either of the norms k · kℓ2 or k · kA

on Rn .

Remark 3. The convergence rate depends on the bounds for the spectrum.

Better bounds (i.e., λ0 closer to λ1 and λ∞ closer to λn ) give rise to smaller

K and a faster rate of convergence.

Remark 4. Suppose that we actually use λ0 = λ1 and λ∞ = λn . Then K

is the spectral condition number of A. The optimal one parameter iteration

has a reduction bound given by

K −1

2

ρ=

≈1− .

K +1

K

We saw that this required O(K) iterative steps to obtain a fixed error reduction ǫ. In contrast, the reduction per iteration for the multi-parameter

iteration limits to

√

K −1

2

≈1− √ .

ρ= √

K +1

K

√

Thus, only O( K) iterations are required in this case.

We saw that the matrices A3 and A5 had condition numbers which were

on the order of h−2 . Accordingly, a one parameter iteration would require

on the order of h−2 iterations for a fixed reduction while a multi-parameter

iteration would only require on the order of h−1 (at least in theory). This

convergence acceleration results in a significant reduction in the number of

iterations when h is small.

Remark 5. As mentioned above, numerical stability is an issue with multiparameter iteration. However, the above results will be used to provide convergence estimates for a much more numerically stable algorithm, the conjugate gradient method. Specifically, we shall use the result (10.6) with the

A-norm.

5

The above theorem carries over to the preconditioned case. Let B be

a symmetric and positive definite matrix. We consider the preconditioned

equations

(10.7)

BAx = Bb

and apply the multi-parameter method (9.1) to it. The reduction matrix is

now of the form

Y

M

(10.8)

GM =

(I − τj BA) .

j=1

The spectrum of GM is related to that of BA as in the simpler case, i.e.,

Y

M

σ(GM ) =

(I − τj λi ) : λi ∈ σ(BA) .

j=1

The eigenvalues of BA are all positive and can be reordered λ1 ≤ λ2 ≤

· · · ≤ λn . Given bounds on λ0 and λ∞ on the spectrum of BA, the multiparameter optimization proceeds exactly as in the simpler case. We have

the following theorem:

Theorem 3. (Multi-Parameter-Preconditioned) Let A and B be symmetric

and positive definite n × n real matrices and assume that the spectrum of

BA satisfies 0 < λ0 ≤ λ ≤ λ∞ , for all λ ∈ σ(BA). Let RM be defined by

(10.1)-(10.3) and τi , i = 1, . . . , M be defined by Remark 1. Then the multiparameter iteration for (10.7) has an reduction matrix GM defined by (10.8)

satisfying

√

K −1 M

ρ(GM ) ≤ 2 √

K +1

where K = λ∞ /λ0 . Moreover,

Y

√

M

K −1 M

√

≤

2

(I

−

τ

A)

(10.9)

kGM k = j

K +1

j=1

where k · k is the operator norm induced by either of the norms k · kA or

k · kB −1 on Rn .

Remark 6. We used the fact that GM is self adjoint in either the A-inner

product or the B −1 inner product and Corollary 1 of Class Notes 7 to conclude the estimates in (10.9).

STEEPEST DESCENT:

Suppose that A is a SPD n × n real matrix and, as usual, we consider

iteratively solving Ax = b. By now, you should understand that the goal of

6

any iterative method is to drive down (a norm of) the error ei = x − xi as

rapidly as possible. We consider a method of the following form:

xi+1 = xi + αi pi .

Here pi ∈ Rn is a “search direction” while αi is a real number which we are

free to choose. It is immediate (subtract this equation from x = x) that

(10.10)

ei+1 = ei − αi pi .

We also introduce the “residual” ri = b − Axi . A simple manipulation shows

that

ri = Aei and ri+1 = ri − αi Api .

The first method that we shall develop takes pi to be the residual ri . The

idea is then to try to find the best possible choice for αi . Ideally, we should

choose αi so that it results in the maximum error reduction, i.e., kei+1 k

should be as small as possible. For arbitrary norms, this goal is not a viable

one. The problem is that we cannot assume that we know ei at any step

of the iteration. Indeed, since we always have xi available, knowing ei is

tantamount to knowing the solution since x = xi + ei .

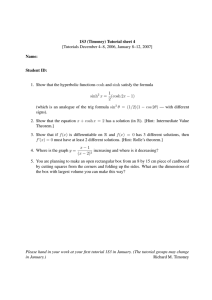

It is instructive to see what happens with the wrong choice of norm.

Suppose that we attempt to choose αi so that kei+1 kℓ2 is minimal, i.e.,

kei+1 kℓ2 = min kei − αri kℓ2 .

α∈R

The above problem can be solved geometrically and its solution is illustrated

in Figure 1. Clearly, αi should be chosen so that the error ei+1 is orthogonal

to ri , i.e.,

(ei+1 , ri ) = 0.

A simple algebraic manipulation using the properties of the inner product

and (10.10) gives

(10.11)

(ei − αi ri , ri ) = (ei , ri ) − αi (ri , ri ) = 0 or αi =

(ei , ri )

.

(ri , ri )

Of course, this method is not computable as we do not know ei during the

iteration so the numerator in the definition of αi in (10.11) is not available.

We can fix up the above method by introducing a different norm, actually,

we introduce a different inner product. Recall, that from earlier classes, inner

products not only provide norms but they give rise to a (different) notion

of angle. We shall get a computable algorithm by replacing the ℓ2 inner

product above with the A-inner product, i.e., we define

(10.12)

kei+1 kA = min kei − αri kA .

α∈R

7

ei

e i+1

αi r i

Figure 1. Minimal error

The solution of this problem is to make ei+1 A-orthogonal to ri , i.e.,

(ei+1 , ri )A = 0.

Repeating the above computations (but with the A-inner product) gives

(10.13)

(ei − αi ri , ri )A = 0 or αi =

αi =

(Aei , ri )

(ei , ri )A

, i.e.,

=

(ri , ri )A

(Ari , ri )

(ri , ri )

.

(Ari , ri )

We have now obtained a computable method. Clearly, the residual ri =

b − Axi and αi are computable without using x or ei . We can easily check

that this choice of αi solves (10.12). Indeed, by A-orthogonality and the

Schwarz inequality,

(10.14)

kei+1 k2A = (ei+1 , ei+1 )A = (ei+1 , ei − αri + (αi − α)ri )A

= (ei+1 , ei − αri )A ≤ kei+1 kA kei − αri kA

holds for any α ∈ R. Clearly if kei+1 kA = 0 then (10.12) holds. Otherwise,

(10.12) follows by dividing (10.14) by kei+1 kA .

The algorithm which we have just derived is known as the steepest descent

method and is summarized in the following:

8

Algorithm 1. (Steepest Descent). Let A be a SPD n × n matrix. Given an

initial iterate x0 , define for i = 0, 1, . . .,

xi+1 = xi + αi ri ,

ri = b − Axi ,

and

(10.15)

αi =

(ri , ri )

.

(Ari , ri )

Proposition 1. Let A be a SPD n × n matrix and {ei } be the sequence of

errors corresponding to the steepest descent algorithm. Then

K −1

kei+1 kA ≤

kei kA

K +1

where K is the spectral condition number of A.

Proof. Since ei+1 is the minimizer

kei+1 kA ≤ k(I − τ A)ei kA

for any real τ . Taking τ = 2/(λ1 + λn ) as in the proposition of Class Notes

7 and applying that proposition completes the proof.

Remark 7. Note that λ1 and λn only appear in the analysis. We do not need

any eigenvalue estimates for implementation of the steepest descent method.

Remark 8. As already mentioned, it is not practical to attempt to make

the optimal choice with respect to other norms as the error is not explicitly known. Alternatively, at least one application of A can eliminate this

drawback, for example, one could design a method which minimized

kAei kℓ2 .

One could also propose to minimize some other norm, i.e.,

kAei kℓ∞ .

Although this is feasible, since the ℓ∞ norm does not come from an inner

product, the computation of the parameter αi ends up being a difficult nonlinear problem.

It is interesting to note that the Steepest Descent Method is the first

example (in this course) of an iterative method that is not a linear iterative method. Note that ei+1 can be theoretically expressed directly from ei

(without knowing xi or b) since one simply substitutes ri = Aei to compute

αi and uses

ei+1 = ei − αi ri .

9

Thus, there is a mapping ei → ei+1 however it is not given by matrix multiplication. This can be illustrated by considering the 2 × 2 matrix

1 0

A=

.

0 2

For either e10 = (1, 0)t or e20 = (0, 1)t , a direct computation gives ej1 = (0, 0)t ,

for j = 1, 2 where ej1 is the error after one step of steepest descent is applied

to ej0 . For example, for e0 = e10 , r0 = Ar0 = (1, 0)t , α0 = 1 and e1 = e0 −r0 =

(0, 0)t . In contrast, for e0 = e10 + e20 = (1, 1)t , we find

5

1

1

Ae0 = r0 =

,

Ar0 =

,

α0 =

2

4

9

4/9

0

0

e1 =

6=

+

= e11 + e21 .

−1/9

0

0