Adaptive Technologies

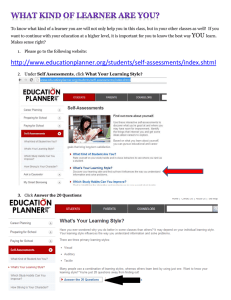
