Reading Computer Printouts

advertisement

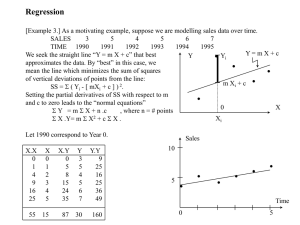

READING COMPUTER PRINTOUTS

Variable

price (Y)

size (X)

N

93 (total sample size)

93 (“)

Mean

99.533 (Y-bar)

1.650 (X-bar)

Std. Dev.

44.184 (sY)

.525 (sX)

Source

Model

DF

1 (# of explanatory

variables)

Sum of Squares

145097 (TSS-SSE;

numerator of r2)

Mean Square

145097

Error (cond. distrib)

Total (marginal dist.)

91 (n-(k+1))

92 (model + error df)

34508 (SSE)

179605 (TSS)

379

Variable

DF

Parameter Estimator

Standard Error

Intercept (α or a)

1

-25 (α)

6.7 ( σ

)

a

Size (β or b)

1

75 (β)

3.9 ( σ

)

b

Root MSE (σ-hat)

19.473 ( ˆ = estimated

standard deviation,

estimated variability of

Y values)

σ

T for Ho:

Parameter = 0

R Square

.8 (r2=coefficient of

determination, because

.8 is close to 1, Y-hat is

a good predictor of

correlation, strong +

association)

Prob > |T|

-3.8

.0003 (very low, reject

the null of Ho: β = 0

.0001 (“ )

19.6 (t-statistic)

***F Value: tells you if the model is doing any good; Prob>F If bigger than .05, all the coefficients are probably zero so your explanatory variables are independent of

your response variable.

E(Y)= α + β X “regression function”; Y = α + βX “linear function”; Y-hat = a + b1X1+ b2X2 “prediction equation” or “least squares line”; the best equation

according to the least squares criterion for estimating a linear regression because it has the smallest SSE. note: a and b, α and β are “regression coefficients”

a=(Y-bar)=b(X-bar) & b=the sum of all {(X-(X-bar)(Y-(Y-bar))} divided by the sum of all (X-(X-bar)2, also note: Y-hat = a + b1X1+ b2X2+e = prediction model

Interpreting regression coefficients: Every 1 unit increase in the explanatory variable has an increase in b (units).

ex. equation Y-hat = -498.7 + 32.6(X1)+9.1(X2); Y = crime (deaths/100,000); X1=poverty rate; Interp: every 1% in poverty rate has an increase in 32 deaths

per100,000 people.

SSE = ∑ (Y − Yˆ ) 2 = “sum of squared errors” or “residual sum of squares” = sum of all errors from the prediction equation E(Y) = a+bX; the variation of the

observed points around the prediction line (Y-hat = a +bX); note: a residual is the vertical distance between the observed point and the prediction line = Y – Y-hat;

note that the sum of all residuals (Y – Y-hat) = 0

TSS = ∑ (Y − Y ) 2 = “total sum of squares” = sum of all errors from the mean

Model Sum of Squares=(TSS-SSE) = “regression sum of squares” or “explained sum of squares” = the numerator of r2, and represents the amount of the total

variation in TSS in Y that is explained by X using the least squares line. (Y=a+bX)

MSE = σˆ =

SSE = “mean square error” or “root square error” or “estimated conditional standard deviation” where df for the

(Y − Yˆ ) 2

=

n −2

n −2

estimate =(n-2) ; Measures the variability of Y-values for all fixed Xs. note: with p-unknown parameters, df = n-p)

σˆ

σb =

∑(X − X )

2

sY= standard deviation of the marginal distribution of Y (σ Y if a population); sY is usually greater than the conditionsal distribution because the errors of margeral are

greater than the errors of conditional w/ correlation.

r=

sx

sy

when

sx =

∑ (X − X )

2

and

n −1

sx =

∑ (Y − Y )

n −1

2

=

TSS “pearsons correlation coefficient” or “standardized regression coefficient” =standardized slope to test

n −1

strengths of association with a linear equation; r is always between -1 and +1; at r=0, b=0 and there is independence between the two variables being tested; at

r=± 1 it is called a “perfect positive/negative linear association” because all points are on the prediction line; The prediction equation using Y to predict X is equal

to that of X to predict Y, r ≠ unit dependent

r2 = TSS − SSE ∑ (Y − Y ) 2 − ∑ (Y − Yˆ ) 2 - “the coefficient of determination”—r2 is always between 0 and 1; if SSE = 0, r2=0; if r2=1, all points fall on the prediction line;

TSS

=

∑ (Y − Y )

2

no prediction error if SSE=TSS, because that is when r2=0; closer r2 is to 1, the better Y-hat (Y=hat=a+bX) as a prediction compared with Y-bar (mean); r2 is not

unit dependent.

Regression toward the mean: a 1 s.d. in X = an r s.d. in Y, where r = pearson’s correlation coefficient. If correlation = r=.5, an x-value being sx above the mean is

predicted to have a Y-value ½ sY above its mean; since r<1, X’s will be farther from their means than their Y’s are from theirs.

MULTIVARIATE REGRESSIONS

Regression Sum of Squares: RSS = TSS-SSE

SSE

R2= proportion of the total variation in Y explained by the multiple regression model now called “coefficient of mult. determination.”; 2 TSS − SSE

R =

Ra2= 1-n-1/n-(k+1)* n-1/n-(k+1)

multicollinearity: when explanatory variables are highly correlated and only slightly boost R2.

r = “multiple correlation coefficient” = square root of R squared

TSS

= 1−

TSS

Q5: There are 5 kids who are patients, ages 6, 8, 10, 12 & 14, 2 kids picked at random. Find the sampling distribution of the mean age of the two children:

(6+8)/2=7 (8+10)/2=9 (10+12)/2=11 (12+14)/2=13

.1 if m = 7, 8, 12 or 13

(6+10)/2=8 (8+12)/2=10 (10+14)/2=12

sampling distrib: p(m) = .2 if m = 9, 10, or 11

(6+12)/2=9 (8+14)/2=11

0 otherwise

(6+14)/2=10

sample mean: E[X-bar]=[(7+8+12+13)*1/10]+[(9+10+11)*2/10)=10

standard error of sample mean: Var[X-bar] = .1{(7-10)2+(8-10)2+(12-10)2+(13-10)2}+ .2*{(9-10)2+(10-10)2+(11-10)2}=3

Q1: Prior opinion was even split between pro and con on abortion. On first day in office, 8 of 10 letters received were anti abortion. If letters = representative, what are chances of getting

this? “I am concerned a majority of my constituency does not support my pro-choice stand.”

hypothesis test: Ho: π = .5 Ha: π>.5 (proportion of .. is greater than .5). What is the Prob of 8 or worse anti letters given the null hypothesis? Prob(# anti abortion letters ≥ 8| π=.5) =

Prob(8) + Prob(9) + Prob(10); n = 10, π = .5; so ((10x9)/2)*(510) + 10(510) + (510) = .055. The latter is the P-value for the test statistic (of 8 observed opposed to abortion letters) so with a

significance level of 5%, the assumption of the proportion of anti abortion voters = ½ would not be rejected. sample sizes: 99% sure of public opinion w/in 2% points. $20 per persion

interviewed: How much will the survey cost? .02 = 2.576*([(π(1-π))/n]. π = .5 so largest value (conservative) for n = 4,148, with cost estimated at $82,960.