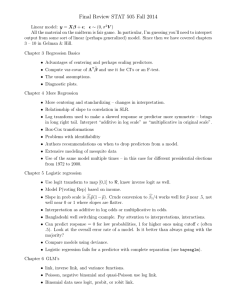

Chapter 10: Causal inference using more advanced models
