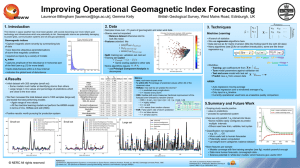

The risk of geomagnetic storms to the grid
