

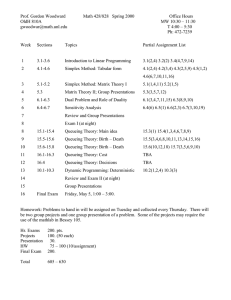

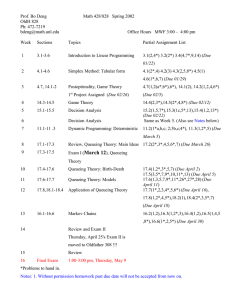

Dissecting Bufferbloat: Measurement and Per-Application Breakdown of Queueing Delay

Andrea Araldo, LRI-Université Paris-Sud, 91405 Orsay, France, araldo@lri.fr
Dario Rossi, Telecom ParisTech, 75013 Paris, France, dario.rossi@enst.fr

ABSTRACT

We propose a passive methodology to estimate the queueing delay incurred by TCP traffic, and additionally leverage DPI classification to break down the delay across different applications. Ultimately, we correlate the queueing delay to the performance perceived by the users of those applications, depending on their delay-sensitivity. We implement our methodology in Tstat, and make it available¹ as open source software to the community. We validate and tune the tool, and run a preliminary measurement campaign based on a real ISP traffic trace, showing interesting yet partly counter-intuitive results.

¹ http://perso.telecom-paristech.fr/~araldo

Categories and Subject Descriptors

C.2.5 [Computer Communication Network]: Internet; C.4 [Performance of Systems]: Measurement Techniques; C.2.3 [Network Operations]: Network Monitoring

Keywords

Bufferbloat; Queueing delay; Passive Measurement; TCP; Queues; Traffic classification

Permission to make digital or hard copies of all or part of this work for personal or classroom use is granted without fee provided that copies are not made or distributed for profit or commercial advantage and that copies bear this notice and the full citation on the first page. Copyrights for components of this work owned by others than ACM must be honored. Abstracting with credit is permitted. To copy otherwise, or republish, to post on servers or to redistribute to lists, requires prior specific permission and/or a fee. Request permissions from permissions@acm.org. CoNEXT Student Workshop’13, December 9, 2013, Santa Barbara, CA, USA. Copyright 2013 ACM 978-1-4503-2575-2/13/12 ...$15.00. http://dx.doi.org/10.1145/2537148.2537785.

1. INTRODUCTION

Despite the steady growth of link capacity, Internet performance may still be laggy, as confirmed by the recent resonance of the “bufferbloat” buzzword [13]. In short, excessive buffer delays (up to a few seconds) are possible in today’s Internet due to the combination of loss-based TCP congestion control with excessive buffer sizes (e.g., in user AP and modem routers, end-host software stack and network interfaces) in front of slow access links. This can cause long packet queues in those buffers and hamper user QoE.

Recent effort has focused on measuring the queueing delay experienced by end-users, mostly employing active techniques [16, 9, 15, 20, 4, 5, 1, 17], with few exceptions employing passive measurements [3, 12, 7, 8], like we do. Yet, most work so far has focused only on measuring the maximum bufferbloat, while it is unclear how often bufferbloat occurs in practice, and which applications are most affected. To the best of our knowledge, this work is the first to report a detailed per-application view of Internet queueing delay, which depends on the traffic mix and user behavior of each household.

2. QUEUEING DELAY ESTIMATION

Since severe queueing is likely to occur at the bottlenecks, like broadband links [10], we focus on inferring the queueing delay of the uplink of hosts (customers), as in Fig. 1. Our monitor (the eye in the figure) runs close to host A, whose queueing we want to measure, e.g., close to the DSLAM of its ISP network. Assume the local queue of A contains packets directed to remote hosts B, C and D. A data segment directed to A is captured by the monitor at $t_{rx,i}$ (packets are timestamped at the measurement point via Endace DAG cards) and, as soon as it is received, the TCP receiver issues an acknowledgement that will be serviced after the already queued data segments. For simplicity, we neglect delayed-acknowledgement timers (which are in any case small compared to the bufferbloat magnitude reported in [16]). The monitor captures the ack at $t_{tx,i+1}$ and estimates the queueing delay $q_{i+1}$ incurred by the $(i+1)$-th ack at A as the difference between the current RTT sample $t_{tx,i+1} - t_{rx,i}$ and the minimum among the previously observed RTT samples of that flow (which represents the propagation delay and, as the monitor is close to the user, is expected to be small):

$$q_{i+1} = (t_{tx,i+1} - t_{rx,i}) - \min_{j<i} (t_{tx,j+1} - t_{rx,j})$$

Figure 1: Synopsis of our passive methodology

To gather reliable results, we filter out RTT samples of reordered or retransmitted segments. If we considered queueing delay samples for all packets of any given flow, we could introduce biases in the measurement process, as flows with higher data rates would be more represented in the analysis. To avoid this risk, we instead consider the aggregated queueing delay, i.e., the average queueing delay in windows of 1 second. This metric is closer to the user perspective, for it describes what happens to a flow during the typical second of its lifetime, rather than to all of its packets.

Other related work, such as [3], does not stress the importance of validating and tuning the measuring tool. On the contrary, we validate our methodology in a local testbed under different link data rates, from 1 Mbps (typical of an ADSL uplink) to 100 Mbps. Specifically, Tstat keeps sequence numbers in a structure named quad (inherited from tcptrace). If the number of outstanding segments grows larger than the quad size, sequence numbers are overwritten, the related queueing delay samples cannot be calculated, and the estimation becomes coarse. This happens especially for high queueing delay, and may result in an undesirable underestimation of bufferbloat.
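As an illustration of the estimation described in this section, the following Python sketch computes per-ack queueing delay samples as the current RTT sample minus the minimum of the previously observed ones, and then averages them in 1-second windows. It is a minimal sketch under stated assumptions, not Tstat's actual code: the class `QueueingDelayEstimator`, the function `aggregate`, the parameter `max_outstanding` (a hypothetical stand-in for the quad size), and the clamping of negative values to zero are all introduced here for illustration, and the filter for reordered or retransmitted segments is omitted.

```python
from collections import OrderedDict, defaultdict


class QueueingDelayEstimator:
    """Per-flow queueing delay estimator (illustrative sketch only)."""

    def __init__(self, max_outstanding=64):
        # Hypothetical stand-in for the fixed-size "quad" structure.
        self.max_outstanding = max_outstanding
        self.outstanding = OrderedDict()  # expected ack number -> t_rx
        self.min_rtt = None               # minimum over previous RTT samples
        self.samples = []                 # (t_tx, queueing delay) pairs

    def on_data(self, t_rx, expected_ack):
        """Record a data segment heading to the monitored host."""
        if len(self.outstanding) >= self.max_outstanding:
            # Table full: the oldest entry is overwritten, its sample is lost.
            self.outstanding.popitem(last=False)
        self.outstanding[expected_ack] = t_rx

    def on_ack(self, t_tx, ack):
        """Match an ack sent by the monitored host; return q or None."""
        t_rx = self.outstanding.pop(ack, None)
        if t_rx is None:
            return None  # no matching data segment (e.g. overwritten)
        rtt = t_tx - t_rx
        q = None
        if self.min_rtt is not None:
            # q_{i+1} = current RTT sample - min over previous RTT samples.
            # Clamping at zero is an assumption of this sketch.
            q = max(0.0, rtt - self.min_rtt)
            self.samples.append((t_tx, q))
        self.min_rtt = rtt if self.min_rtt is None else min(self.min_rtt, rtt)
        return q


def aggregate(samples, window=1.0):
    """Average the queueing delay samples falling in each time window."""
    sums = defaultdict(float)
    counts = defaultdict(int)
    for t, q in samples:
        idx = int(t // window)
        sums[idx] += q
        counts[idx] += 1
    return {idx: sums[idx] / counts[idx] for idx in sorted(sums)}


# Toy flow: (t_rx of data, t_tx of its ack, ack number), times in seconds.
est = QueueingDelayEstimator(max_outstanding=2)
for t_rx, t_tx, ack in [(0.0, 0.25, 100), (0.5, 1.0, 200), (1.0, 1.75, 300)]:
    est.on_data(t_rx, ack)
    est.on_ack(t_tx, ack)
print(aggregate(est.samples))  # {1: 0.375}
```

In this sketch, when `max_outstanding` is too small relative to the number of segments in flight, data timestamps are overwritten before their acks arrive and the corresponding samples are silently lost, which mirrors the effect that makes the quad size a parameter to tune.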
Clearly, the quad size needed to achieve an accurate estimation increases with the link rate (we precisely tune it for our experiments). As a side note, our tool can be directly applied to offline traffic traces, but is also suitable for online processing. We empirically observe that the queueing delay analysis of offline traces imposes an 8% overhead in terms of Tstat running time (in addition to doubling the storage requirements, as we log the queueing delay of all active flows every second).

3. PER-APPLICATION VIEW

We report experimental results of the inference, analyzing an 8-hour busy-period trace gathered during 2009² at a vantage point close to the DSLAM of an ISP network participating in the FP7 NapaWine [18] project. Overall, we monitor over 93000 hosts and gather about $10^7$ individual per-flow aggregated queueing delay samples. We consider each internal IP as a single³ host. To infer the applications running on the hosts, we leverage the Tstat DPI and behavioral classification capabilities [11], extensively validated by ourselves as well as by the scientific community at large [19]. We cluster similar applications into classes depending on their service: namely, Mail, Web, Multimedia, P2P, SSH, VoIP, Chat (we consider non-video chat) and other uncategorized (or unclassified) applications.

We present our results in Fig. 2. For most applications, 75% of the 1-second windows experience less than 100 ms of queueing delay. The only exceptions are, rather unsurprisingly, P2P applications and, somewhat more surprisingly, Chat applications, with median delay exceeding 100 ms.

Figure 2: Jittered density maps and (5,25,50,75,95)-th percentiles of the aggregated queueing delay. Table reports the percentage in each region.

| Region | Other | Mail | P2P | Web | Media | Chat | SSH | VoIP |
|---|---|---|---|---|---|---|---|---|
| low (below 100 ms) [%] | 89.7 | 93.2 | 58.4 | 91.9 | 86.8 | 45.7 | 98.6 | 97.8 |
| mid (100 ms to 1 s) [%] | 10.2 | 6.2 | 38.7 | 8.0 | 13.2 | 54.1 | 1.4 | 2.2 |
| high (above 1 s) [%] | 0.1 | 0.6 | 2.9 | 0.1 | 0.0 | 0.1 | 0.0 | 0.0 |

We can map the queueing delay into a coarse indication of QoE for the user. Based on [5, 20, 14, 6, 21, 7], we set two thresholds at 100 ms and 1 second (thus deriving 3 regions of low, middle and high queueing delay) such that: (i) performance of interactive multimedia (e.g., VoIP, video-conference and live-streaming) or data-oriented (e.g., remote terminal or cloud editing of text documents) applications significantly degrades when the first threshold is crossed [14, 6]; (ii) performance of mildly-interactive applications (e.g., Web, chat, etc.) significantly degrades when the second threshold is crossed [5, 20]. In the table of Fig. 2, boldface highlights possible QoE degradation: notice that even the performance of non-interactive applications degrades when the second threshold is crossed [13, 21, 7]. Overall, bufferbloat impact appears to be modest: Web and Chat are rarely impacted by high delay, and VoIP and SSH rarely experience middle delay. P2P stands out with the highest odds of high delay, followed by Mail, though with likely minor impact for the users.

4. CONCLUSION

Though preliminary, this work already conveys several useful insights on queueing delay estimation, e.g., the correct setting of monitoring tools, metrics more representative of the user perspective, and a per-application assessment of likely QoE impact. We provide the community with a ready-to-use tool and we plan to deploy it on operational networks [2], in order to gather more statistically significant results.

Our per-application study of the queueing delay opens new interesting questions to investigate as future work. As we have seen, Chat users experience middle delay; at the same time, such middle delay is likely not self-inflicted by the Chat application itself, but rather tied to applications that run in parallel with Chat and that generate cross-traffic.
A root cause analysis, based on standard data mining techniques, would make it possible to grasp these correlations and pinpoint the applications (or combinations of them) that are more likely to induce high queueing delays.

² Our focus here is to build a solid methodology, rather than to provide a full-blown measurement campaign, which is the aim of our ongoing work. Hence results should be contrasted with those of more recent datasets for an up-to-date view of Internet bufferbloat.

³ With NAT devices the same IP is shared by multiple hosts, but this has no impact on our methodology, since these potentially multiple hosts share the same access bottleneck link.

Acknowledgements

This work was carried out at LINCS (http://www.lincs.fr) and funded by the European Union under the FP7 Grant Agreement n. 318627 (Integrated Project "mPlane").

5. REFERENCES

[1] http://internetcensus2012.bitbucket.org/.
[2] http://www.ict-mplane.eu.
[3] M. Allman. Comments on bufferbloat. SIGCOMM Comput. Commun. Rev., 43(1), Jan 2012.
[4] Z. Bischof, J. Otto, M. Sánchez, J. Rula, D. Choffnes, and F. Bustamante. Crowdsourcing ISP characterization to the network edge. In ACM SIGCOMM Workshop on Measurements Up the STack (W-MUST’11), 2011.
[5] Z. S. Bischof, J. S. Otto, and F. E. Bustamante. Up, down and around the stack: ISP characterization from network intensive applications. In ACM SIGCOMM Workshop on Measurements Up the STack (W-MUST’12), 2012.
[6] K.-T. Chen, C.-Y. Huang, P. Huang, and C.-L. Lei. Quantifying skype user satisfaction. 36(4):399–410, 2006.
[7] C. Chirichella and D. Rossi. To the moon and back: are internet bufferbloat delays really that large. In IEEE INFOCOM Workshop on Traffic Measurement and Analysis (TMA), 2013.
[8] C. Chirichella, D. Rossi, C. Testa, T. Friedman, and A. Pescape. Remotely gauging upstream bufferbloat delays. In PAM, 2013.
[9] M. Dhawan, J. Samuel, R. Teixeira, C. Kreibich, M. Allman, N. Weaver, and V. Paxson. Fathom: a browser-based network measurement platform. In ACM IMC, 2012.
[10] M. Dischinger, A. Haeberlen, K. P. Gummadi, and S. Saroiu. Characterizing residential broadband networks. In Internet Measurement Conference, pages 43–56, 2007.
[11] A. Finamore, M. Mellia, M. Meo, M. Munafo, and D. Rossi. Experiences of internet traffic monitoring with tstat. IEEE Network Magazine, May 2011.
[12] S. Gangam, J. Chandrashekar, I. Cunha, and J. Kurose. Estimating TCP latency approximately with passive measurements. In PAM, 2013.
[13] J. Gettys and K. Nichols. Bufferbloat: Dark buffers in the internet. Communications of the ACM, 55(1):57–65, 2012.
[14] O. Holfeld, E. Pujol, F. Ciucu, A. Feldmann, and P. Barford. BufferBloat: how relevant? a QoE perspective on buffer sizing. Technical report, 2012.
[15] H. Jiang, Y. Wang, K. Lee, and I. Rhee. Tackling bufferbloat in 3G/4G networks. In ACM IMC, 2012.
[16] C. Kreibich, N. Weaver, B. Nechaev, and V. Paxson. Netalyzr: Illuminating the edge network. In ACM IMC, 2010.
[17] D. Leonard and D. Loguinov. Demystifying service discovery: implementing an internet-wide scanner. In ACM IMC, 2010.
[18] E. Leonardi, M. Mellia, A. Horvath, L. Muscariello, S. Niccolini, and D. Rossi. Building a cooperative P2P-TV application over a Wise Network: the approach of the European FP-7 STREP NAPA-WINE. IEEE Communication Magazine, 64(6), April 2008.
[19] M. Pietrzyk, J.-L. Costeux, G. Urvoy-Keller, and T. En-Najjary. Challenging statistical classification for operational usage: the adsl case. In Proceedings of the 9th ACM SIGCOMM conference on Internet measurement conference, pages 122–135. ACM, 2009.
[20] S. Sundaresan, W. de Donato, N. Feamster, R. Teixeira, S. Crawford, and A. Pescapè. Broadband internet performance: a view from the gateway. In ACM SIGCOMM, 2011.
[21] C. Testa and D. Rossi. The impact of uTP on BitTorrent completion time. In IEEE P2P, 2011.