
Time Series Analysis

Spring 2016

Assignment 1

Solutions

Kaiji Motegi

Waseda University

Reading: Chapter 2 of Enders (2014) Applied Econometric Time Series.

Background Knowledge

Definition 1: A scalar random variable $X$ follows the (univariate) normal distribution with mean $\mu$ and variance $\sigma^2$, written as $X \sim N(\mu, \sigma^2)$, if the probability density function (p.d.f.) of $X$ is given by

$$
f(x) = \frac{1}{\sqrt{2\pi\sigma^2}} \exp\left[ -\frac{(x - \mu)^2}{2\sigma^2} \right], \qquad -\infty < x < \infty. \tag{1}
$$

Definition 2: A $T \times 1$ vector of random variables $X = [X_1, X_2, \ldots, X_T]'$ follows the multivariate normal distribution with mean $\mu$ and covariance matrix $\Sigma$, written as $X \sim N(\mu, \Sigma)$, if the probability density function (p.d.f.) of $X$ is given by

$$
f(x) = (2\pi)^{-T/2} |\Sigma|^{-1/2} \exp\left[ -\frac{1}{2} (x - \mu)' \Sigma^{-1} (x - \mu) \right], \qquad x \in \mathbb{R}^T, \tag{2}
$$

where $|\cdot|$ denotes the determinant. (Remark: Eq. (2) reduces to Eq. (1) when $T = 1$.)
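As a numerical check of the Remark, here is a minimal pure-Python sketch of Eq. (2), assuming $\Sigma$ is symmetric positive definite and supplied as a list of lists. The helper names `cholesky`, `mvn_pdf`, and `normal_pdf` are hypothetical. Rather than forming $\Sigma^{-1}$ and $|\Sigma|$ explicitly, it factors $\Sigma = LL'$ (Cholesky), uses $|\Sigma| = \prod_t L_{tt}^2$, and computes $(x - \mu)'\Sigma^{-1}(x - \mu) = z'z$ with $Lz = x - \mu$. This is a standard numerical substitute, not a method taken from the text.

```python
import math


def cholesky(sigma):
    """Lower-triangular L with sigma = L L' (sigma must be symmetric positive definite)."""
    n = len(sigma)
    L = [[0.0] * n for _ in range(n)]
    for i in range(n):
        for j in range(i + 1):
            s = sum(L[i][k] * L[j][k] for k in range(j))
            if i == j:
                L[i][j] = math.sqrt(sigma[i][i] - s)
            else:
                L[i][j] = (sigma[i][j] - s) / L[j][j]
    return L


def mvn_pdf(x, mu, sigma):
    """Multivariate normal density, Eq. (2), evaluated through a Cholesky factor."""
    T = len(x)
    L = cholesky(sigma)
    # Forward substitution: solve L z = x - mu, so that z'z = (x - mu)' Sigma^{-1} (x - mu).
    z = [0.0] * T
    for i in range(T):
        z[i] = (x[i] - mu[i] - sum(L[i][k] * z[k] for k in range(i))) / L[i][i]
    quad = sum(zi * zi for zi in z)
    det = math.prod(L[i][i] ** 2 for i in range(T))  # |Sigma| = product of L_ii^2
    return (2 * math.pi) ** (-T / 2) * det ** (-0.5) * math.exp(-0.5 * quad)


def normal_pdf(x, mu, var):
    """Univariate normal density, Eq. (1)."""
    return math.exp(-((x - mu) ** 2) / (2 * var)) / math.sqrt(2 * math.pi * var)


# With T = 1, Eq. (2) should coincide with Eq. (1).
print(mvn_pdf([0.7], [0.2], [[2.5]]), normal_pdf(0.7, 0.2, 2.5))
```

The two printed numbers should agree up to floating-point rounding.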

Problems

Problem-1: Consider MA(q): $y_t = \sum_{j=0}^{q} \beta_j \epsilon_{t-j}$, where $\beta_0 = 1$ and $\epsilon_t$ is a white noise with variance $\sigma^2$.

(a) Compute $\mu \equiv E[y_t]$.

(b) Compute $\gamma_0 \equiv E[(y_t - \mu)^2]$.

(c) Compute $\gamma_j \equiv E[(y_t - \mu)(y_{t-j} - \mu)]$ for $j \ge 1$. (Remark: It is important to observe that $\gamma_j = 0$ for $j \ge q + 1$.)

(d) Conclude that $\{y_t\}$ is covariance stationary regardless of the values of $\beta_1, \ldots, \beta_q$.


(e) Compute $\rho_j \equiv \gamma_j / \gamma_0$.

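Solution-1: (a) $\mu = \sum_{j=0}^{q} \beta_j E[\epsilon_{t-j}] = 0$.

(b) $\gamma_0 = \sigma^2 \sum_{k=0}^{q} \beta_k^2$.

(c) $\gamma_j = \sigma^2 \sum_{k=0}^{q-j} \beta_{k+j} \beta_k$ for $j = 1, \ldots, q$, and $\gamma_j = 0$ for $j \ge q + 1$.

(d) Mean $\mu$ and variance $\gamma_0$ are constant over time, as shown in parts (a) and (b). Autocovariance $\gamma_j$ depends on the lag $j$ but not on time $t$, as shown in part (c). Hence, $\{y_t\}$ is covariance stationary.

(e) $\rho_j = \sum_{k=0}^{q-j} \beta_{k+j} \beta_k \big/ \sum_{k=0}^{q} \beta_k^2$ for $j = 1, \ldots, q$, and $\rho_j = 0$ for $j \ge q + 1$.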
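The formulas in parts (b), (c), and (e) translate directly into code. Below is a minimal sketch, assuming the coefficients are supplied as a list `beta = [1, beta_1, ..., beta_q]` (so `beta[0]` plays the role of $\beta_0 = 1$); the function names `ma_autocov` and `ma_autocorr` are hypothetical.

```python
def ma_autocov(beta, sigma2, j):
    """gamma_j of the MA(q) process y_t = sum_{k=0}^q beta_k eps_{t-k}."""
    q = len(beta) - 1
    if j > q:
        return 0.0  # gamma_j = 0 for j >= q + 1
    # Part (c): gamma_j = sigma^2 * sum_{k=0}^{q-j} beta_{k+j} beta_k (j = 0 gives part (b)).
    return sigma2 * sum(beta[k + j] * beta[k] for k in range(q - j + 1))


def ma_autocorr(beta, j):
    """Part (e): rho_j = gamma_j / gamma_0; sigma^2 cancels, so it is set to 1."""
    return ma_autocov(beta, 1.0, j) / ma_autocov(beta, 1.0, 0)


# Illustrative MA(2) with beta_1 = 0.5, beta_2 = -0.4 and sigma^2 = 2.
beta = [1.0, 0.5, -0.4]
print([ma_autocov(beta, 2.0, j) for j in range(4)])
print([ma_autocorr(beta, j) for j in range(1, 4)])
```

Here $\gamma_3 = 0$ because $3 \ge q + 1$, illustrating the Remark in Problem-1(c).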

Problem-2: In class we covered AR(1) and MA(1) processes. Here we consider a more general process called ARMA(1, 1):

$$
y_t = \phi y_{t-1} + \epsilon_t + \beta \epsilon_{t-1}, \tag{3}
$$

where $\epsilon_t$ is a white noise with variance $\sigma^2$. Assume $|\phi| < 1$ so that $\{y_t\}$ is covariance stationary. (Remark: $\beta$ does not play any role in covariance stationarity.) Let us compute the autocorrelation function $\rho_j$ using the Yule-Walker equations.

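(a) Show that $\gamma_0 = \phi\gamma_1 + \sigma^2 + \beta(\phi + \beta)\sigma^2$.

(b) Show that $\gamma_1 = \phi\gamma_0 + \beta\sigma^2$.

(c) Combining (a) and (b), solve for $\gamma_0$ and $\gamma_1$.

(d) Show that

$$
\rho_1 = \frac{(\phi + \beta)(1 + \phi\beta)}{1 + \phi\beta + \beta(\phi + \beta)}.
$$

(e) Show that $\gamma_j = \phi\gamma_{j-1}$ for $j \ge 2$.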


(f) Show that

$$
\rho_j = \frac{\phi^{j-1}(\phi + \beta)(1 + \phi\beta)}{1 + \phi\beta + \beta(\phi + \beta)}, \qquad j \ge 1. \tag{4}
$$

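Solution-2: Since $E[y_t] = 0$ under stationarity, $\gamma_j = E[y_t y_{t-j}]$.

(a) Multiply both sides of Eq. (3) by $y_t$ and take expectations to get

$$
\begin{aligned}
\gamma_0 &= \phi\gamma_1 + E[(\phi y_{t-1} + \epsilon_t + \beta\epsilon_{t-1})\epsilon_t] + \beta E[(\phi y_{t-1} + \epsilon_t + \beta\epsilon_{t-1})\epsilon_{t-1}] \\
&= \phi\gamma_1 + \sigma^2 + \beta\phi E[(\phi y_{t-2} + \epsilon_{t-1} + \beta\epsilon_{t-2})\epsilon_{t-1}] + \beta^2\sigma^2 \\
&= \phi\gamma_1 + \sigma^2 + \beta\phi\sigma^2 + \beta^2\sigma^2 \\
&= \phi\gamma_1 + \sigma^2 + \beta(\phi + \beta)\sigma^2.
\end{aligned}
$$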

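(b) Multiply both sides of Eq. (3) by $y_{t-1}$ and take expectations to get

$$
\gamma_1 = \phi\gamma_0 + \beta E[(\phi y_{t-2} + \epsilon_{t-1} + \beta\epsilon_{t-2})\epsilon_{t-1}] = \phi\gamma_0 + \beta\sigma^2,
$$

where $E[\epsilon_t y_{t-1}] = 0$ because $y_{t-1}$ depends only on past shocks.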

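(c) Substituting (b) into (a) gives $\gamma_0 = \phi^2\gamma_0 + \phi\beta\sigma^2 + \sigma^2 + \beta(\phi + \beta)\sigma^2$, i.e. $(1 - \phi^2)\gamma_0 = \sigma^2[1 + \phi\beta + \beta(\phi + \beta)]$. Hence

$$
\gamma_0 = \frac{\sigma^2[1 + \phi\beta + \beta(\phi + \beta)]}{1 - \phi^2}, \qquad \gamma_1 = \phi\gamma_0 + \beta\sigma^2 = \frac{\sigma^2(\phi + \beta)(1 + \phi\beta)}{1 - \phi^2}.
$$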

(d) Since $\rho_1 = \gamma_1 / \gamma_0$, the common factor $\sigma^2/(1 - \phi^2)$ in part (c) cancels and we get the desired result.

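(e) Let $j \ge 2$. Multiply both sides of Eq. (3) by $y_{t-j}$ and take expectations to get $\gamma_j = \phi\gamma_{j-1}$. The moving-average terms drop out because $y_{t-j}$ depends only on $\epsilon_{t-j}, \epsilon_{t-j-1}, \ldots$, which are uncorrelated with $\epsilon_t$ and $\epsilon_{t-1}$ when $j \ge 2$.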

(f) We have from part (d) that $\rho_1 = (\phi + \beta)(1 + \phi\beta)/[1 + \phi\beta + \beta(\phi + \beta)]$. In view of part (e), $\rho_j = \phi\rho_{j-1}$ for $j \ge 2$, and thus $\rho_j = \phi^{j-1}(\phi + \beta)(1 + \phi\beta)/[1 + \phi\beta + \beta(\phi + \beta)]$.
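Parts (c) and (f) can be cross-checked numerically without the Yule-Walker equations. When $|\phi| < 1$, the ARMA(1,1) process has the MA($\infty$) representation $y_t = \sum_{k=0}^{\infty} \psi_k \epsilon_{t-k}$ with $\psi_0 = 1$ and $\psi_k = \phi^{k-1}(\phi + \beta)$ for $k \ge 1$ (a standard result, not derived in this assignment). Truncating it after many terms and applying the MA(q) formula of Solution-1(c) should reproduce $\gamma_0$, $\gamma_1$, and Eq. (4). A minimal sketch; the truncation length `n_terms` and the function names are hypothetical choices.

```python
def closed_form(phi, beta, sigma2):
    """gamma_0 and gamma_1 from Solution-2(c)."""
    g0 = sigma2 * (1 + phi * beta + beta * (phi + beta)) / (1 - phi ** 2)
    g1 = sigma2 * (phi + beta) * (1 + phi * beta) / (1 - phi ** 2)
    return g0, g1


def rho_eq4(phi, beta, j):
    """Population autocorrelation rho_j from Eq. (4), for j >= 1."""
    return (phi ** (j - 1) * (phi + beta) * (1 + phi * beta)
            / (1 + phi * beta + beta * (phi + beta)))


def gamma_truncated(phi, beta, sigma2, j, n_terms=2000):
    """gamma_j from the MA(infinity) weights psi_k, truncated after n_terms weights."""
    psi = [1.0] + [phi ** (k - 1) * (phi + beta) for k in range(1, n_terms)]
    # Solution-1(c) with q = n_terms - 1: gamma_j = sigma^2 * sum_k psi_{k+j} psi_k.
    return sigma2 * sum(psi[k + j] * psi[k] for k in range(n_terms - j))


phi, beta, sigma2 = 0.5, -0.3, 2.0
print(closed_form(phi, beta, sigma2),
      (gamma_truncated(phi, beta, sigma2, 0), gamma_truncated(phi, beta, sigma2, 1)))
for j in range(1, 6):
    print(j, rho_eq4(phi, beta, j),
          gamma_truncated(phi, beta, sigma2, j) / gamma_truncated(phi, beta, sigma2, 0))
```

Because $|\psi_k|$ decays geometrically, 2000 terms leave a truncation error far below floating-point rounding for the moderate values of $\phi$ used here.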

Problem-3: Consider a specific form of ARMA(1,1):

$$
y_t = 0.3 y_{t-1} + \epsilon_t + 0.8 \epsilon_{t-1}, \qquad \sigma^2 = 1. \tag{5}
$$

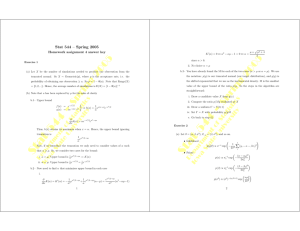

(a) Compute $\rho_1, \ldots, \rho_5$ and replicate Figure 1. (Hint: Use Eq. (4). You can use any software, including Excel, R, EViews, Matlab, etc.)


(b) The Excel spreadsheet ARMA.xlsx contains two simulated samples from Eq. (5). $\{\epsilon_t\}$ is approximated by independent draws from $N(0, 1)$. The sample size is $T = 100$ in the first sample and $T = 1000$ in the second. Plot each series to replicate Figure 2.

(c) For each sample, compute the sample autocorrelations $\hat\rho_1, \ldots, \hat\rho_5$ and replicate Figure 3.

(d) Compare Figures 1 and 3 and comment on the results.

[Figure 1: Population Autocorrelations of ARMA(1,1). Horizontal axis: lag j = 1, ..., 5.]

Solution-3: (a) $(\rho_1, \rho_2, \rho_3, \rho_4, \rho_5) = (0.643, 0.193, 0.058, 0.017, 0.005)$.

(b) See Figure 2.

(c) For $T = 100$, $(\hat\rho_1, \hat\rho_2, \hat\rho_3, \hat\rho_4, \hat\rho_5) = (0.625, 0.078, -0.128, -0.076, 0.050)$. For $T = 1000$, $(\hat\rho_1, \hat\rho_2, \hat\rho_3, \hat\rho_4, \hat\rho_5) = (0.674, 0.223, 0.036, -0.006, 0.042)$.

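(d) When $T = 100$, the sample autocorrelations underestimate $(\rho_2, \rho_3, \rho_4)$ by roughly 0.09 to 0.19. When $T = 1000$, the approximation is much closer: every $\hat\rho_j$ lies within about 0.04 of its population value $\rho_j$.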
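The spreadsheet ARMA.xlsx is not reproduced here, so the sketch below draws its own samples from Eq. (5) and will not reproduce the exact numbers in Solution-3(c); it only illustrates the mechanics of parts (a) to (d) in Python. Assumptions not taken from the text: the recursion starts at $y = 0$, $\epsilon = 0$ with 200 discarded burn-in observations, the seed is arbitrary, and the sample autocorrelation uses the common estimator $\hat\rho_j = \sum_{t=j+1}^{T}(y_t - \bar y)(y_{t-j} - \bar y) / \sum_{t=1}^{T}(y_t - \bar y)^2$. The function names are hypothetical.

```python
import random


def simulate_arma11(T, phi=0.3, beta=0.8, burn_in=200, seed=0):
    """Simulate y_t = phi*y_{t-1} + eps_t + beta*eps_{t-1} with eps_t ~ iid N(0, 1)."""
    rng = random.Random(seed)
    y_prev, eps_prev, out = 0.0, 0.0, []
    for t in range(burn_in + T):
        eps = rng.gauss(0.0, 1.0)
        y = phi * y_prev + eps + beta * eps_prev
        if t >= burn_in:  # drop the burn-in so the arbitrary start-up values matter little
            out.append(y)
        y_prev, eps_prev = y, eps
    return out


def sample_acf(y, max_lag):
    """Sample autocorrelations rho_hat_1, ..., rho_hat_max_lag."""
    T = len(y)
    ybar = sum(y) / T
    d = [v - ybar for v in y]
    denom = sum(v * v for v in d)
    return [sum(d[t] * d[t - j] for t in range(j, T)) / denom for j in range(1, max_lag + 1)]


def population_acf(phi, beta, max_lag):
    """Population rho_1, ..., rho_max_lag from Eq. (4)."""
    rho1 = (phi + beta) * (1 + phi * beta) / (1 + phi * beta + beta * (phi + beta))
    return [phi ** (j - 1) * rho1 for j in range(1, max_lag + 1)]


print("population:", [round(r, 3) for r in population_acf(0.3, 0.8, 5)])
# population: [0.643, 0.193, 0.058, 0.017, 0.005]
for T in (100, 1000):
    print(f"T = {T}:", [round(r, 3) for r in sample_acf(simulate_arma11(T), 5)])
```

Rerunning with different seeds gives a feel for how much $\hat\rho_j$ varies around $\rho_j$ at each sample size, which is the point of part (d).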

[Figure 2: Simulated ARMA(1,1) Processes. Panels: 1. T = 100 (horizontal axis 0 to 100); 2. T = 1000 (horizontal axis 0 to 1000).]

[Figure 3: Sample Autocorrelations of ARMA(1,1). Panels: 1. T = 100; 2. T = 1000. Horizontal axis: lag j = 1, ..., 5.]

Problem-4: Consider a $T \times 1$ vector of random variables $X = [X_1, X_2, \ldots, X_T]'$. In class we learned that independence implies uncorrelatedness but the converse is not true in general.¹ In this problem we learn that uncorrelatedness implies independence when $X$ follows a multivariate normal distribution.

¹ Let $\mu_t$ be the $t$-th element of $\mu = E[X]$. Then uncorrelatedness is defined as $E[(X_t - \mu_t)(X_s - \mu_s)] = 0$ for any $t \neq s$. Independence is equivalent to $E[g(X_t - \mu_t)h(X_s - \mu_s)] = E[g(X_t - \mu_t)]E[h(X_s - \mu_s)]$ for any functions $g$ and $h$ and for any $t \neq s$. Taking the identity function for $g$ and $h$ shows that independence implies uncorrelatedness.
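The footnote's claim that uncorrelatedness does not imply independence in general can be checked with a small discrete example, chosen here for illustration and not taken from the text: let $X$ be uniform on $\{-1, 0, 1\}$ and $Y = X^2$. Then $\mathrm{Cov}(X, Y) = E[X^3] - E[X]E[X^2] = 0$, yet $P(X = 0, Y = 0) = 1/3 \neq P(X = 0)P(Y = 0) = 1/9$. A minimal exact-arithmetic sketch (the helper `expect` is hypothetical):

```python
from fractions import Fraction

# X is uniform on {-1, 0, 1}; Y = X^2 is a deterministic function of X.
support = [-1, 0, 1]
p = Fraction(1, 3)


def expect(g):
    """E[g(X)] under the uniform distribution on `support`."""
    return sum(p * g(x) for x in support)


cov_xy = expect(lambda x: x * x ** 2) - expect(lambda x: x) * expect(lambda x: x ** 2)
p_joint = expect(lambda x: 1 if (x == 0 and x ** 2 == 0) else 0)  # P(X = 0, Y = 0)
p_x0 = expect(lambda x: 1 if x == 0 else 0)                      # P(X = 0)
p_y0 = expect(lambda x: 1 if x ** 2 == 0 else 0)                 # P(Y = 0)
print(cov_xy, p_joint, p_x0 * p_y0)  # 0 1/3 1/9
```

Note that $(X, Y)$ here is not jointly normal, which is exactly why the result of Problem-4 does not apply to it.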


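(a) Assume that $X \sim N(\mu, \Sigma)$ with

$$
\mu = \begin{bmatrix} \mu_1 \\ \mu_2 \\ \vdots \\ \mu_{T-1} \\ \mu_T \end{bmatrix},
\qquad
\Sigma = \begin{bmatrix}
\sigma_1^2 & 0 & \cdots & \cdots & 0 \\
0 & \sigma_2^2 & \ddots & & \vdots \\
\vdots & \ddots & \ddots & \ddots & \vdots \\
\vdots & & \ddots & \sigma_{T-1}^2 & 0 \\
0 & \cdots & \cdots & 0 & \sigma_T^2
\end{bmatrix}.
$$

(Remark: The diagonality of $\Sigma$ means uncorrelatedness.) Compute $\Sigma^{-1}$ and $|\Sigma|$.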

(b) Show that

$$
(x - \mu)' \Sigma^{-1} (x - \mu) = \sum_{t=1}^{T} \frac{(x_t - \mu_t)^2}{\sigma_t^2}.
$$

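(c) Show that the p.d.f. of $X$ is written as

$$
f(x) = (2\pi)^{-T/2} \left( \prod_{t=1}^{T} \sigma_t^2 \right)^{-1/2} \exp\left[ -\frac{1}{2} \sum_{t=1}^{T} \frac{(x_t - \mu_t)^2}{\sigma_t^2} \right], \tag{6}
$$

where $\prod_{t=1}^{T} \sigma_t^2 \equiv \sigma_1^2 \times \sigma_2^2 \times \cdots \times \sigma_T^2$. (Hint: Use Eq. (2).)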

(d) Show that Eq. (6) can be rewritten as

$$
f(x) = \prod_{t=1}^{T} f_t(x_t), \tag{7}
$$

where $f_t(\cdot)$ denotes the p.d.f. of the univariate normal distribution with mean $\mu_t$ and variance $\sigma_t^2$ (cf. Eq. (1)). (Important Remark: Eq. (7) indicates that $X_1, \ldots, X_T$ are mutually independent, since the joint p.d.f. of $X$ is expressed as the product of the marginal p.d.f.'s. Hence, independence and uncorrelatedness are equivalent under the multivariate normal distribution.)


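Solution-4: (a) We have that

$$
\Sigma^{-1} = \begin{bmatrix}
1/\sigma_1^2 & 0 & \cdots & \cdots & 0 \\
0 & 1/\sigma_2^2 & \ddots & & \vdots \\
\vdots & \ddots & \ddots & \ddots & \vdots \\
\vdots & & \ddots & 1/\sigma_{T-1}^2 & 0 \\
0 & \cdots & \cdots & 0 & 1/\sigma_T^2
\end{bmatrix}
\quad \text{and} \quad
|\Sigma| = \prod_{t=1}^{T} \sigma_t^2.
$$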

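(b) We have that

$$
(x - \mu)' \Sigma^{-1} (x - \mu) = \left[ \frac{x_1 - \mu_1}{\sigma_1^2}, \ldots, \frac{x_T - \mu_T}{\sigma_T^2} \right] \begin{bmatrix} x_1 - \mu_1 \\ \vdots \\ x_T - \mu_T \end{bmatrix} = \sum_{t=1}^{T} \frac{(x_t - \mu_t)^2}{\sigma_t^2}.
$$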

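(c) We have from parts (a) and (b) that

$$
\begin{aligned}
f(x) &= (2\pi)^{-T/2} |\Sigma|^{-1/2} \exp\left[ -\frac{1}{2} (x - \mu)' \Sigma^{-1} (x - \mu) \right] \\
&= (2\pi)^{-T/2} \left( \prod_{t=1}^{T} \sigma_t^2 \right)^{-1/2} \exp\left[ -\frac{1}{2} \sum_{t=1}^{T} \frac{(x_t - \mu_t)^2}{\sigma_t^2} \right].
\end{aligned}
$$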

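(d) Since $(2\pi)^{-T/2} = \prod_{t=1}^{T} (2\pi)^{-1/2}$, $\left( \prod_{t=1}^{T} \sigma_t^2 \right)^{-1/2} = \prod_{t=1}^{T} (\sigma_t^2)^{-1/2}$, and the exponential of a sum is the product of exponentials, Eq. (6) can be rewritten as

$$
\begin{aligned}
f(x) &= \left\{ (2\pi)^{-1/2} (\sigma_1^2)^{-1/2} \exp\left[ -\frac{1}{2} \frac{(x_1 - \mu_1)^2}{\sigma_1^2} \right] \right\} \times \cdots \times \left\{ (2\pi)^{-1/2} (\sigma_T^2)^{-1/2} \exp\left[ -\frac{1}{2} \frac{(x_T - \mu_T)^2}{\sigma_T^2} \right] \right\} \\
&= \prod_{t=1}^{T} f_t(x_t).
\end{aligned}
$$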
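A quick numerical check of Eqs. (6) and (7): with a diagonal $\Sigma$, evaluating the joint density through the closed-form $|\Sigma|$ and quadratic form from parts (a) and (b), and separately multiplying the univariate densities of Eq. (1), should give the same number. A minimal sketch; the values of $x$, $\mu$, and $\sigma_t^2$ are arbitrary illustrative choices, and the function names are hypothetical.

```python
import math


def joint_pdf_diag(x, mu, var):
    """Eq. (6): multivariate normal density when Sigma = diag(var)."""
    T = len(x)
    det = math.prod(var)  # part (a): |Sigma| = product of sigma_t^2
    quad = sum((xt - mt) ** 2 / v for xt, mt, v in zip(x, mu, var))  # part (b)
    return (2 * math.pi) ** (-T / 2) * det ** (-0.5) * math.exp(-0.5 * quad)


def product_of_marginals(x, mu, var):
    """Eq. (7): product of univariate N(mu_t, sigma_t^2) densities from Eq. (1)."""
    return math.prod(
        math.exp(-((xt - mt) ** 2) / (2 * v)) / math.sqrt(2 * math.pi * v)
        for xt, mt, v in zip(x, mu, var)
    )


x, mu, var = [0.5, -1.2, 2.0], [0.0, -1.0, 1.5], [1.0, 0.25, 4.0]
print(joint_pdf_diag(x, mu, var), product_of_marginals(x, mu, var))
```

The two printed values should agree up to floating-point rounding, mirroring the algebra in part (d).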