AUTODESK® WHITEPAPER

The New Art of Virtual Moviemaking

Virtual Production processes are set to transform the way filmmakers and their teams create high quality entertainment.

New digital technologies are providing filmmakers and their production teams with real-time, interactive environments and breakthrough workflows, transforming the way they plan and create movies. Using the latest 3D software, gaming and motion capture technology, they can explore, define, plan and communicate their creative ideas in new, more intuitive, visual ways, helping them reduce risk, eliminate confusion and address production unknowns much earlier in the filmmaking process. Innovators – from production companies like Lightstorm to previsualization specialists like The Third Floor – are already using Virtual Production technologies to push creative boundaries and save costs.

Sidebar: Virtual Production, or Virtual Moviemaking, is a new, visually dynamic, non-linear workflow. It blends virtual camera systems, advanced motion and performance capture, 3D software and practical 3D assets with realtime render display technology, enabling filmmakers to interactively visualize and explore digital scenes for the production of feature films and game cinematics.

CONTENTS

“Through the [Virtual Moviemaking] process we are able to optimize the entire film, to

make it more creative, and to insure that more of the budget ends up on screen. That’s

Introduction ......................... 1

where all the technology – all the hardware and software – comes into play: to make

Historical Perspective ......... 2

sure that the vision of the director is preserved throughout the entire process.”

Virtual Production ............... 3

Chris Edwards, CEO The Third Floor – Previs Studio

Applications of

Virtual Moviemaking ........... 5

The Technology of

Virtual Moviemaking ........... 6

Camera Systems ................ 7

Performance Capture .......... 9

Real-Time 3D Engines ...... 11

Art Directed 3D Assets ...... 13

Integrated Workflow .......... 15

Previsualization .................16

Stereoscopic 3D ................16

Post Production .................17

Looking Ahead ..................18

References, Resources

and Acknowledgements ...19

®

®

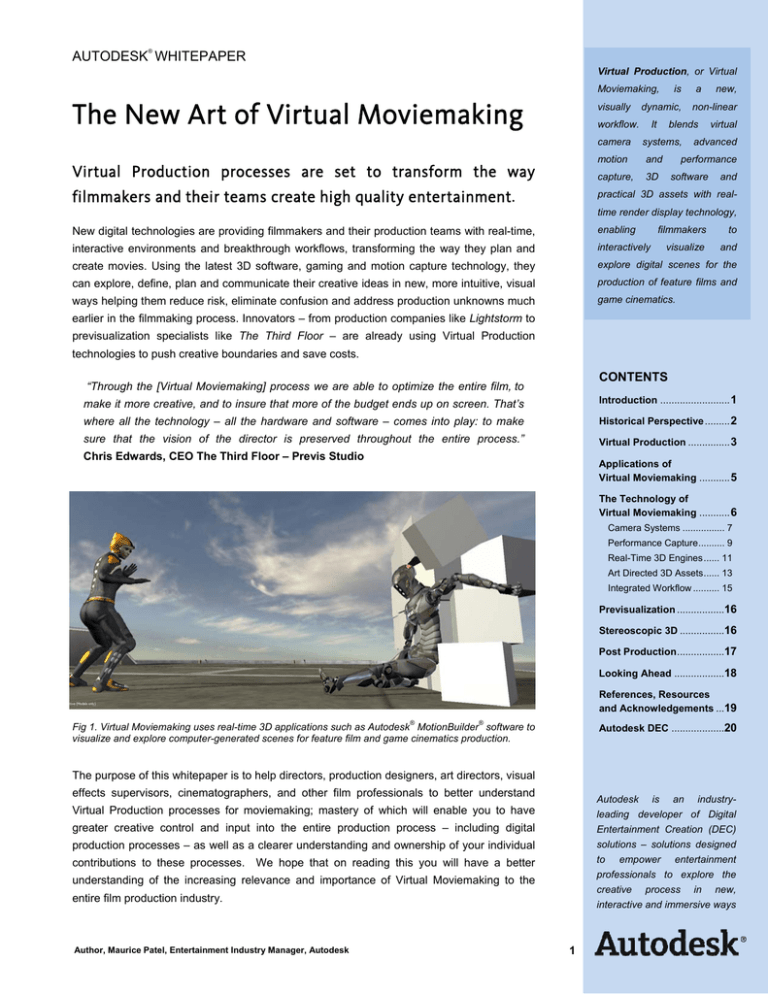

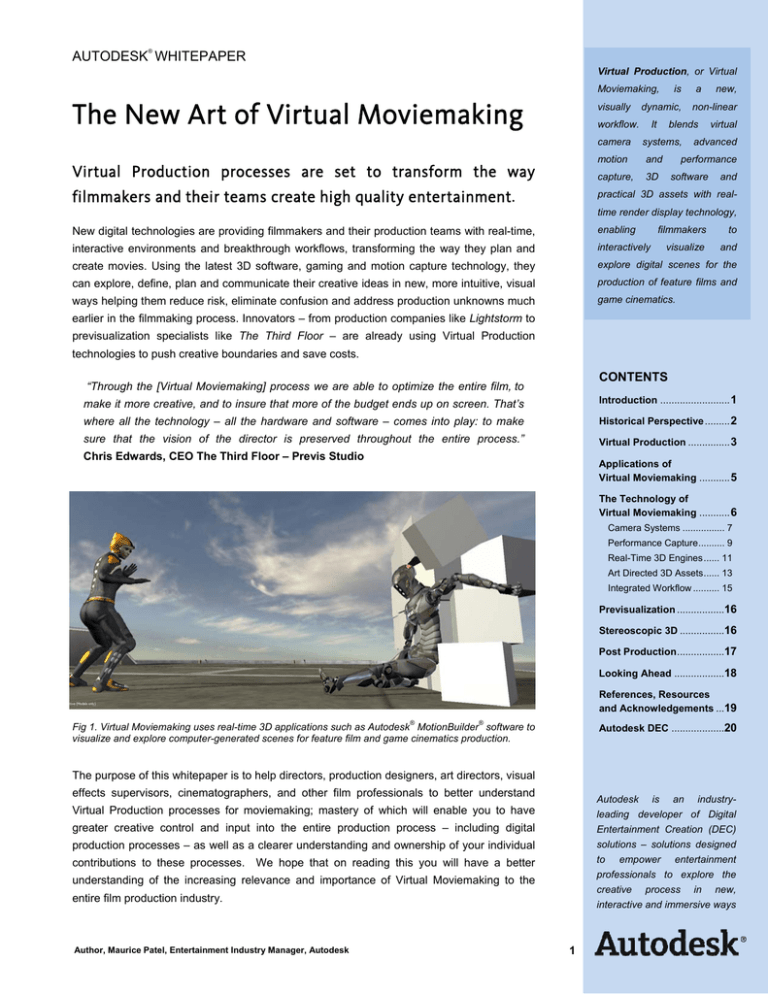

Fig 1. Virtual Moviemaking uses real-time 3D applications such as Autodesk MotionBuilder software to

visualize and explore computer-generated scenes for feature film and game cinematics production.

Autodesk DEC ...................20

The purpose of this whitepaper is to help directors, production designers, art directors, visual effects supervisors, cinematographers, and other film professionals better understand Virtual Production processes for moviemaking. Mastering these processes will give you greater creative control and input into the entire production process – including digital production processes – as well as a clearer understanding and ownership of your individual contributions to these processes. We hope that on reading this you will have a better understanding of the increasing relevance and importance of Virtual Moviemaking to the entire film production industry.

Author, Maurice Patel, Entertainment Industry Manager, Autodesk

Sidebar: Autodesk is an industry-leading developer of Digital Entertainment Creation (DEC) solutions – solutions designed to empower entertainment professionals to explore the creative process in new, interactive and immersive ways.

The New Art of Virtual Moviemaking – An Autodesk Whitepaper

Historical Perspective

The film production process is constantly evolving as technologies emerge that enable new types of creativity. Today, the use of digital technology is becoming pervasive in filmmaking. Its adoption has been marked by significant tipping points, one of the first of which was the introduction of non-linear editing (NLE) systems in the late 80s. All of a sudden the craft of the editor changed radically. Editors could experiment more and be far more creative. Because their edits became virtual they could change them at any time; and so today editors begin editing from the first storyboards and keep on editing until the film is ready to print for distribution – sometimes even afterwards. The digital edit became the first virtual representation of a movie, albeit a rather low quality one.

Fig 2. Digital non-linear editing radically changed the craft of editing

Another tipping point occurred when it became feasible (and cost effective) to scan the entire movie at high resolution. This paved the way for Digital Intermediates (DI) and changed the way movies are color graded. The DI process enabled the creation of a high-quality, high-resolution, virtual representation of the movie – a digital master. Between these events use of digital visual effects had also rapidly expanded and full length CG animated movies had become highly popular (Sidebar).

Sidebar: Computer Generated Imagery (CGI) in Movies

The last decade has seen a rapid rise in the demand for computer generated imagery in movies, and this has been a major driver behind box office success.

[Charts: 2007 Share of Box Office Gross (US) and 2009 Share of Box Office Gross (US), each divided into VFX Blockbusters*, Animation and Other. Percentages shown: 12%, 43%, 45% (2007) and 12%, 49%, 39% (2009).]

*Visual Effects

[Graph: Increasing Use of Digital Visual Effects 1998-2008 (VFX shots per minute)]

The graph above shows the trend for increasing numbers of digital visual effects shots per minute of movie based on the Academy Award winners for Best Visual Effects. Note: by 2006 almost every shot in the winning movies involved some level of digital visual effects.

Editors and cinematographers needed to develop new knowledge and skills to take advantage and control of the new technology. In return they got greater creative freedom, but also greater responsibility. One advantage of digital technology is that it removes physical constraints. While this is truly liberating in terms of creativity it is also a source of potential error, especially if the scope and impact of the technology is not fully understood. Avoiding pitfalls requires effective collaboration between crafts, respect for the different skills and requirements of key stakeholders, and a solid understanding of how to guide technology to produce the desired creative outcome – a problem sometimes referred to as maintaining creative intent.

Virtual Moviemaking is another such tipping point, impacting a broader range of film production professionals, from directors, art directors and cinematographers to visual effects supervisors and other production teams. But why is it so important? The answer lies in the increasing use of computer generated characters and visual effects in movies. A director wants to (and should be able to) scout locations or direct the performance of characters irrespective of whether they are real or virtual; but today much of computer graphics production is treated as a post-production process for review and approval and not as a hands on directive experience. Virtual Moviemaking processes help change that dynamic, enabling filmmakers to have hands on creative input into what is an increasingly important part of the movie making experience.

Image (Fig 2): Steenbeck film editing machine by Andrew Lih, courtesy Wikipedia, the free encyclopedia, under Creative Commons Attribution-ShareAlike 2.0 license

Full Circle? The Promise of Virtual Production

Not so long ago, if a director wanted to shoot a special effect or add a monster to the scene he or she would have to figure out a way to shoot it on camera. This obviously imposed some limitations as to what could realistically be done, but at least he or she could interact directly with the process and see it unfold before their own eyes on set. They could direct the performance of the monster and control the execution of the special effect on set. Then along came digital technology.

[Diagram: production (on set)]

By the late 1980s high-resolution image scanners and high-performance computers made it possible and feasible to create digital visual effects. Suddenly the director’s imagination was given free rein. It was possible to create the impossible: pyrotechnic explosions that defy the laws of physics, dinosaurs and alien creatures and planets. CGI was born but in the process the director lost the ability to get involved directly in the creative process, which was now done after production in post-production. The director’s role became primarily a role of review and approval at scheduled screenings of pre-rendered shots. The process could be compared to reviewing dailies without actually ever going on set.

[Diagram: production (on set), post production (off set)]

However as digital effects shots became more common, increasing from a few shots to tens, then hundreds, of shots, and finally in some cases the entire movie, effective planning became crucial and previsualization became important. Previsualization enabled the director to at least plan the visual effects shots better and make up-front decisions that would affect the final look and feel.

But there was a discontinuity between previsualization and post-production processes and still no real possibility for the director to interact directly with the creative process itself. As CGI tools moved into preproduction the on set production processes were increasingly isolated from the digital processes that surrounded them. This became problematic for directors working on productions that involved large amounts of CG for visual effects and digital character creation.

[Diagram: previs, production, post production]

As leading filmmakers and their teams tried to tackle the creative problems inherent to the traditional production processes it became increasingly clear that they needed tools that would enable them to direct the CG processes as effectively as they could direct live action. It was from this need that virtual production techniques were born.

Virtual production is an interactive, iterative and integrative process that starts from pre-production and continues till the final frame is printed to film (or packaged electronically) for distribution.

[Diagram: VIRTUAL PRODUCTION spanning preproduction, production and postproduction] Virtual Production is an iterative, collaborative process that starts at preproduction and continues through production and into postproduction. Digital technology blurs the boundaries between these processes, enabling a single virtual production space where the story continuously evolves, fed by elements from both CGI and live action shoots.

Virtual production processes leverage the proliferation of CG technology upstream and downstream of the production process, together with the latest breakthroughs in gaming technology and real-time graphics performance, to provide filmmakers with the instant feedback and the ‘in the moment’ interaction that they have long experienced on live action shoots. Creating CG animation and visual effects shots can be experienced more like a live action shoot, yet still offer the creative flexibility that is impossible on the real world set. When virtual production becomes interactive it feels much more like traditional moviemaking, hence the term Virtual Moviemaking.

Virtual Moviemaking also differs from traditional CGI in that it is driven by the lead creative team members themselves. The Director and Cinematographer actually hold the camera and perform the shots as opposed to this being done secondhand by computer animators and previs artists. Powerful real time rendering engines make it possible to create visually appealing images that are worthy of the highest quality concept art. Filmmakers no longer have to wait hours, days or even weeks to see CG shots presented in a visually appealing way that is representative of the images they have visualized in their mind’s eye.

“Virtual Moviemaking can liberate filmmakers from the often stifling visual effects and animation process by allowing them to experience the production in much the same way they do while shooting live action. They are immersed in the world of their film and the entire production benefits immensely from that kind of immediate creative discovery and problem solving.”

Rob Powers, Pioneered the first Virtual Art Department for Avatar

[Diagram: VIRTUAL PRODUCTION linking Art Dept., Production Design, Set Construction, Director, Cinematography, Virtual Art Dept. and Visual effects] The improved accessibility offered by the Virtual Moviemaking process fosters greater collaboration and communication throughout the entire production team, encouraging productive conversations between team members that would previously have had little or no contact.

The Virtual Moviemaking forum as a conduit for increased collaboration and a hub for communication throughout the production is arguably one of its strongest benefits for the inherently collaborative medium of moviemaking.

Applications of Virtual Moviemaking

Virtual Moviemaking represents a significant metamorphosis in the use of digital technology for film production, enabling greater creative experimentation and broader collaboration between crafts. It transforms previously cumbersome, complex and alienating digital processes into more interactive and immersive ones, allowing them to be driven by the filmmakers themselves. As a result, Virtual Moviemaking is helping filmmakers to bridge the gap between the real world and the world of digital performers, props, sets and locations. It gives them the freedom to directly apply their skills in the digital world – whether for the hands-on direction of computer generated characters, or to plan a complex shoot more effectively. Being digital, Virtual Moviemaking technology is highly flexible and can be easily molded to fit the exact needs of a given production. As a result its applications vary extensively in scope, ranging from previsualization to virtual cinematography (Sidebar).

The term virtual cinematography was popularized in the early 2000s with the digital techniques used to create the computer generated scenes in The Matrix Reloaded although, arguably, both the term and many of its concepts and techniques predated the movie. In its broadest sense, virtual cinematography refers to any cinematographic technique applied to computer graphics, from virtual cameras, framing and composition to lighting and depth of field. In this broadest sense virtual cinematography is a key part of effective digital visual effects creation and essential to creating imagery that serves to develop and enhance the story. More recently the term has been specifically applied to real-time tools used during production to make directive decisions.

Virtual Moviemaking technology provides significant benefits to live action visual effects production. The technology can be used to immediately visualize how the shot will look after post-production. Camera moves can be planned and tested in real-time and both creative and technical aspects of the shoot carefully evaluated and tested. The director can see and understand the full context of the shot including the visual effects that will be added later, enabling him, or her, to direct performance appropriately.

Another application can be found in the way top production designers are using Virtual Moviemaking to design and explore virtual movie sets before they are built by the construction crews. At first glance this appears to be a fairly logical evolution of the previsualization process commonly used by many film productions to plan and trouble shoot the production. However, Virtual Moviemaking offers much more flexibility by providing an infinitely customizable real-time stage within which to work.

The real-time aspects of Virtual Moviemaking technology are also highly beneficial for CG animated movies and videogame cinematic sequences where real-time virtual cameras and lighting help simplify and accelerate the camera animation and layout process.

CG and CGI

CG = Computer Graphics
CGI = Computer Generated Imagery

CGI is a specific application of CG in that it refers to the use of computer graphics to create synthetic imagery. However, the two terms are sometimes used interchangeably in the visual effects industry. In a broad sense, computer-generated is an adjective that describes any element created by a computer – as opposed to shot live on a set or location.

Sidebar: Definitions

Previsualization*: Previsualization (previs) is the use of digital technology to explore creative ideas, plan technical solutions to production problems or communicate creative intent and vision to the broader production team.

On-Set Previsualization*: The use of interactive technologies on set to produce (near) real-time visualizations that can help the director and other crew members quickly evaluate captured imagery and guide performance.

Virtual Cinematography: Virtual Cinematography involves the digital capture, simulation and manipulation of cinematographic elements using computers – in particular the properties of cameras and lighting.

Technical Previsualization*: Technical previsualization focuses on technical accuracy in order to better understand and plan real-world production scenarios. Dimensions and physical properties must be accurately modeled in order to determine feasibility on set.

Pitchvis and Postvis*: Pitchvis and Postvis describe the use of visualization in either the early conceptual development (for project greenlighting) or the later post-production stages of film production.

* Definitions based on the recommendation of the joint previsualization subcommittee comprising representatives of the American Society of Cinematographers (ASC), the Art Directors Guild (ADG), the Visual Effects Society (VES) and …

Today, Virtual Moviemaking technology is being deployed by pioneering directors working on large-scale, blockbuster, science fiction and animation projects. Examples include James Cameron on Avatar, Steven Spielberg and Peter Jackson on Tintin and Robert Zemeckis on A Christmas Carol. These directors and their production companies are already designing and building special virtual cinematography sound stages and it is not a stretch to envisage a future in which most major sound stages will have Virtual Moviemaking capabilities. Today the technology is the preserve of large-budget productions but, given the rapid evolution of computer performance and capability, there is no reason not to expect that, as with Digital Intermediates (DI), Virtual Moviemaking will become accessible to a broader range of production budgets.

So profound is the impact of Virtual Moviemaking on the creative process that it will most certainly be felt across all departments and all levels of production by offering greater creative options and fostering a new collaborative environment to explore the moviemaking process.

In the next section we will take a more detailed look at the actual technology used for Virtual Moviemaking.

The Technology of Virtual Moviemaking

Virtual Moviemaking blends several, previously distinct, technologies and workflows from the film production and gaming industries. When used together, the combination becomes far greater than the sum of its individual parts, creating a revolutionary new interactive workflow.

Core components of the Virtual Moviemaking workflow include sophisticated hardware devices that allow direct input into the computer programs without having to use a keyboard or mouse, advanced motion capture systems, virtual asset kits or libraries and real-time rendering and display technologies (Sidebar).

As a result, the director can control the virtual camera in the computer using a familiar device such as a real but modified camera body. The movements of the camera and the actors performing can then be captured using special motion sensing devices and the results displayed in real-time on a computer screen. This allows actors and crew to shoot digital performances in ways that are intuitively similar to live action shooting.

Virtual Moviemaking workflows consist of several distinct but related components designed to make this all possible. The following sections describe these components in more detail.

Sidebar: Virtual Moviemaking in Action

Computer applications like Autodesk® MotionBuilder® allow directors to control a virtual camera using hand-held devices such as the mock camera shown below. The camera movement is tracked in real-time and translated directly into the computer. MotionBuilder also allows real time capture and display of actors’ performances applied to digital characters. This allows the director to shoot a computer generated (virtual) scene by simply directing the actors and moving the camera in the same way they would during a live-action shoot.

Fig 3. Exploring a computer generated scene using virtual cameras and actors. Chris Edwards at the Autodesk User Group, SIGGRAPH

[Diagram label: Integrated Workflow]

Motion Capture Camera Systems

The core of any Virtual Moviemaking workflow is the camera system. The camera system consists of two key components: a virtual camera and a device to control it. Basic systems use standard computer peripherals (a keyboard and mouse) to control the virtual camera. More sophisticated systems use specialized devices, or tracking controllers, that an operator can manipulate much like a physical camera. Depending on the user’s preference such controllers can range from gaming controllers to flat panel viewfinders to full camera bodies (Sidebar).

In many cases the virtual camera is controlled by the operator moving a tracking controller within a tracking volume – a volume of space defined by a series of remote sensing devices capable of detecting the exact orientation and movement of the tracking controller (Figure 4). A computer translates the data from these sensors into instructions that control (animate) the virtual camera, causing it to behave exactly like the controller. The sensing devices and the tracking controllers form an integrated system known as a motion capture camera system.

Fig 4. Schematic representation of a basic motion capture camera system. Sensing Devices (SD) are arranged in a room or on a soundstage to create a tracking volume (red box). These devices detect and analyze the movement of special objects, known as tracking controllers, within the defined volume. The detected movement is then applied to a virtual camera in a computer.

Sophisticated systems combine a range of technologies for greater precision. The InterSense VCam, for example, uses a combination of ultrasonic sensing devices combined with acoustic speakers, accelerometers and gyroscopes, mounted in a camera body, to track the position and orientation of the camera with millimeter precision and to within one degree of angular rotation.

Other systems feed data directly from the controller into the computer and do not require special sensing devices. These include games controllers and several other types of hand held devices. They have an advantage in that they can be used anywhere where the device can be hooked up to a computer. However, the camera is often ‘tethered’ to the computer which can affect mobility.

Fig 5a. What You See is What You Get: Here the display device acts as the camera controller. Its movements are tracked and applied to a virtual camera, allowing the camera operator to see exactly what the CG scene will look like as they move the camera. Image Courtesy of The Third Floor

Sidebar: Virtual Camera Devices

Advanced Virtual Moviemaking techniques use specialized hardware devices to control a virtual camera (CG camera). Some of these devices are based on familiar camera form factors and include controls for focal length, zoom, and depth of field as well as common film-specific setups such as virtual dolly and crane rigs. Other devices have unique and unconventional forms geared more for extended hours of hand held operation.

Sidebar: The InterSense VCam

The InterSense VCam offers a simple and natural way for camera operators to create virtual camera moves with realism. The VCam device controls the motion, camera angle and point of view so that shots can be set in a natural and intuitive manner. Visualization of virtual content occurs in real-time, providing the director with a high degree of flexibility and creativity.
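The tracking-volume arrangement described for motion capture camera systems (sensing devices report the controller's position and orientation, and a computer applies them to the virtual camera) can be illustrated with a minimal Python sketch. Everything here is an assumption made for illustration: the `TrackedPose` and `VirtualCamera` names, the yaw/pitch angle convention and the right-handed, Y-up coordinate system are hypothetical and are not taken from MotionBuilder, the InterSense VCam or any other product named in this whitepaper.

```python
import math
from dataclasses import dataclass


@dataclass
class TrackedPose:
    """One sample from a hypothetical tracking system (metres and degrees)."""
    x: float
    y: float
    z: float
    yaw_deg: float    # rotation about the vertical (Y) axis
    pitch_deg: float  # tilt up (+) or down (-)


@dataclass
class VirtualCamera:
    """Minimal stand-in for a CG camera; not a real application API."""
    position: tuple = (0.0, 0.0, 0.0)
    forward: tuple = (0.0, 0.0, -1.0)  # assumed to look down -Z at rest

    def apply_pose(self, pose: TrackedPose) -> None:
        """Make the virtual camera behave exactly like the tracked controller."""
        yaw = math.radians(pose.yaw_deg)
        pitch = math.radians(pose.pitch_deg)
        self.position = (pose.x, pose.y, pose.z)
        # Viewing direction derived from yaw and pitch (assumed convention).
        self.forward = (
            -math.sin(yaw) * math.cos(pitch),
            math.sin(pitch),
            -math.cos(yaw) * math.cos(pitch),
        )


def follow_controller(camera: VirtualCamera, samples) -> VirtualCamera:
    """Apply each tracked sample in order, as a real-time loop would per frame."""
    for pose in samples:
        camera.apply_pose(pose)
    return camera
```

In a production system the samples would arrive continuously from the sensing devices and the camera would live inside the 3D application; the sketch only shows the one-to-one mapping from controller pose to camera pose.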

Sidebar: Virtual Camera Devices

Another method of controlling a virtual camera is by using visual display systems such as the Gamecaster® GCS3™ virtual camera controller, which does not require sensing devices arranged in a room or soundstage to create a tracking volume. It allows the director to view the CG scene, as if through the lens of a camera. The GCS3 can be mounted to a tripod fluidhead and panbars, or a shoulder mount for handheld operation, enabling users to film CG scenes the same way they would shoot live action – by looking through the GCS3 "viewfinder" and panning, tilting, trucking, craning and zooming the virtual camera in real-time with real-life camera hardware.

Fig 5b. What You See is What You Get: The MotionBuilder virtual camera (light-green icon) in the CG scene is controlled by a tracking controller, providing the director with a viewfinder perspective (inset) of the set and characters during shooting. Image Courtesy of InterSense

The devices used to control the virtual camera can be completely customized to reflect the unique working style of the director. Not only can they take the different physical form factors discussed above, each one of the device’s various buttons, knobs and other controls can be customized to match the individual preferences of the operator. Customization of the camera’s virtual features can be as simple as using a special software application with an easy-to-use graphical user interface (GUI) to modify and set the different CG parameters controlled by the camera. The camera parameters are then integrated into software drivers which plug into realtime 3D animation applications such as Autodesk® MotionBuilder®. These applications react to the camera input, allowing the director to have complete cinematographic control over how they shoot the CG world.
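As a rough illustration of how a controller's knobs and thumbsticks might be bound to CG camera parameters for a particular operator, the following Python sketch uses a small lookup table. The control names, parameter names and value ranges are all hypothetical; the whitepaper does not describe the actual driver format used by MotionBuilder or by any camera controller.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Binding:
    """Maps one physical control to one CG camera parameter (hypothetical)."""
    parameter: str
    low: float    # parameter value when the control reads 0.0
    high: float   # parameter value when the control reads 1.0


# Hypothetical operator profile: which control drives which parameter.
OPERATOR_PROFILE = {
    "knob_1": Binding("focal_length_mm", 18.0, 200.0),
    "thumbstick_y": Binding("focus_distance_m", 0.5, 20.0),
}


def apply_input(params: dict, profile: dict, control: str, value: float) -> dict:
    """Return updated camera parameters for a normalized control reading."""
    binding = profile.get(control)
    if binding is None:
        return params  # control is not mapped in this operator's profile
    value = min(1.0, max(0.0, value))  # clamp the reading to the 0..1 range
    updated = dict(params)
    updated[binding.parameter] = binding.low + value * (binding.high - binding.low)
    return updated
```

Swapping in a different profile dictionary would change which control drives which parameter without touching the rest of the code, which is the kind of per-operator customization the paragraph above describes.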

Sidebar: The Gamecaster GCS3

The GCS3 features include a stylized form factor, internal motion sensors, an HD color LCD viewfinder, and two thumbstick controls. It takes only 15 minutes to set up, plug into a computer via USB and start filming.

While the goal of a virtual camera system is to replicate the functionality of a real world camera, such systems offer the additional advantage of being able to extend the camera’s functionality, and therefore the Director’s creative options, far beyond the physical limits of real cameras. For example, a virtual camera system can be scaled to any size – enabling the Director to view a full-size set as if it were a miniature model in order to quickly cover a large area for exploration and initial decision making. Once a specific shooting location and approach have been determined the camera can then be scaled back to actual size to shoot the scene correctly. This type of instant adjustment of scale is just one of many additional creative options provided by virtual camera systems.
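One simple way to picture the scaling of a virtual camera system is as a multiplier on the operator's physical movement. The Python sketch below assumes this interpretation; the function name, the `anchor` concept and the example numbers are illustrative assumptions rather than a description of how any particular system implements scale.

```python
def scaled_camera_position(anchor, controller_offset, scale):
    """Map a physical controller offset (metres) to a virtual camera position.

    anchor: hypothetical point in the CG set that corresponds to the centre
        of the physical working space.
    controller_offset: how far the operator has moved from that centre.
    scale: 1.0 shoots at actual size; larger values let a small physical
        move cover a large area of the set, as if it were a miniature.
    """
    if scale <= 0:
        raise ValueError("scale must be positive")
    return tuple(a + scale * o for a, o in zip(anchor, controller_offset))


# Scouting: a 1 m step sideways and 2 m forward covers 50 m and 100 m of the set.
scouting = scaled_camera_position((0.0, 0.0, 0.0), (1.0, 0.0, 2.0), 50.0)

# Shooting: the same physical move at actual size.
shooting = scaled_camera_position((0.0, 0.0, 0.0), (1.0, 0.0, 2.0), 1.0)
```

Changing only the `scale` argument switches between exploring the set and shooting it, which mirrors the instant adjustment of scale described above.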

The field of motion capture is rapidly evolving. Every year new systems emerge which offer greater flexibility at lower cost. The price of motion capture has dropped significantly in the past few years as the technology has improved. Previously, even basic systems were complex, cumbersome and very expensive to set up. Today newer, more compact systems are simpler to set up, more flexible, and start at only a few thousand dollars for basic motion capture functionality.

Fig 5c. Halon Previs Supervisor Justin Denton films a CG scene in Maya in real-time with the Gamecaster GCS3 virtual camera controller. Image Courtesy of Halon Entertainment

Real-Time Performance Capture

Good performance capture is as important as the motion capture camera system when it comes to creating believable CG characters. In and of itself, performance capture is not a new phenomenon and has long been used for visual effects creation. Early uses of performance capture date as far back as Titanic in 1997, and it has been essential to the successful completion of a diverse range of projects – from X-Men to Beowulf – as well as the creation of some truly memorable movie characters – such as Gollum in The Lord of the Rings.

Modern performance capture systems are designed to capture more of the nuances and personality of the actors’ performance required to create more realistic – or at least more believable – CG characters. To that end, it has become increasingly common to capture the actors’ facial expressions along with their body movements to enhance the CG performance. Recent examples of innovations in this technique include the Robert Zemeckis films Polar Express and Beowulf, where computer-generated facial animations were layered on top of captured facial performances from popular actors such as Tom Hanks and Angelina Jolie.

The talent and creativity of the actor is critical to driving believable performance from the CG character. Technology is no substitute for personality, and whether the creative talent is that of an inspiring actor or a highly skilled animator, the human element is essential to creating engaging digital characters. However, it is through the advanced combination of body, facial and vocal performance capture technologies that the invaluable craft of the actor is being integrated into the Virtual Moviemaking pipeline. Recent movies such as The Curious Case of Benjamin Button have demonstrated just how believable performance capture can be and the advances the industry has made in overcoming the uncanny valley effect.

The

Uncanny Valley of Eeriness – Or why does some animation look so creepy?

In the 1970s, Masahiro Mori, a Japanese researcher in robotics, introduced the uncanny valley hypothesis to describe a human observer’s empathy – or lack of it – for non-human anthropomorphic entities. Although the concept was originally applied to how humans relate emotionally to robots, the phenomenon also applies to an audience’s empathy for computer generated characters.

In its simplest form the relationship states that, at first, a human observer’s empathy will increase as an anthropomorphic entity becomes more humanlike but will drop sharply when the entity becomes close to human, but not quite, effectively becoming creepy. This decrease in empathy is called the uncanny valley effect. The phenomenon is magnified three-fold when observing entities in movement (as opposed to still objects/images) and appears to be intrinsic to the way the human brain processes information related to human interaction.

Neuroscientists have used fMRI scans to observe the human brain’s response to various types of computer-animated characters, confirming both the effect and its correlation to increasing anthropomorphism; observing, for instance, that the same motion capture data will often appear less biological, or real, the more humanlike the character. Our understanding of the uncanny valley phenomenon and how to overcome it has dramatically improved in the past few years, enabling the creation of ever more believable digital characters – although many challenges still lie ahead.

Figure: The Uncanny Valley. Adapted from Masahiro Mori and Karl MacDorman. Adapted image subject to GNU Free Documentation License.


The worlds of performance capture for visual effects and Virtual Moviemaking are fast converging as technological improvements enable filmmakers to capture actors’ performances and apply them to CG characters in real-time. Today an actor’s performance can be captured using body, facial and vocal capture systems, all of which can be simultaneously remapped onto the virtual character in real time. This allows the director to see the live actor performing in front of them and simultaneously see the virtual character performing the same actions through the virtual camera.

Similar to the additional creative options offered by the virtual camera systems, virtual performance systems also allow the actor and director to explore some unique creative possibilities by eliminating the real-world constraints of the human body, prosthetics and make-up. Actors can play an infinite range of character types and no longer need to be typecast based on physical appearance, race, sex, age or even species – as evidenced by recent motion capture performances by actors such as Andy Serkis (playing Gollum as well as a giant ape in King Kong), Tom Hanks (playing multiple characters including a child in The Polar Express) and Brad Pitt (aging in reverse in The Curious Case of Benjamin Button).

Virtual Moviemaking offers filmmakers a powerful, new, non-linear, story-telling methodology – a virtual production space in which a story can be developed with as much creative freedom – and perhaps more – than in the non-linear editing suite. Since each ‘take’ can be recorded, saved and replayed on set, the director can start to literally build the story ‘in 3D’, cutting together takes and replaying them live ‘in camera,’ changing only the elements that need to be changed to enhance the story. A rough cut can therefore be built on set, walking the director through the story as it is being shot.

In addition the director can easily combine multiple takes into a single shot. The best takes from each actor can be selected and combined into a master scene that the director can re-camera and do coverage on as many times as needed. In each new take the actors’ performance will be as flawless as the last, selected, recording and the director can focus on only the things that really need to change – such as camera movement, composition, or other aspects of the shot sequence: if the performance was perfect but the camera angle wrong the actor will not have to try and rematch his or her ‘perfect’ performance while the director reshoots. The scene is simply reshot using the previously captured performance.

As with motion capture camera systems, performance capture systems are fast evolving and becoming more accessible. At the bleeding edge new advanced systems are capable of recording both live action and motion capture performances simultaneously and blending them in real-time, enabling the director to film CG characters interacting with live actors. This is a significant breakthrough. Previously CG characters had to be composited with live action after production.

Sidebar: Xsens

Cameraless motion capture suits like the Xsens MVN suit offer filmmakers an easy-to-use, cost efficient system for full-body human motion capture. Since these suits do not require external cameras, emitters or markers they don’t have lighting restrictions and avoid problems such as occlusion or missing markers. They are therefore well suited to capturing body movement for outside action shots that require extensive freedom of movement – from climbing to complex fight scenes.

MVN is based on state-of-the-art miniature inertial sensors, sophisticated biomechanical models and sensor fusion algorithms. The result is an output of high quality kinematics* that can be applied to a CG character to create realistic animation.

* Kinematics describes how a chain of interconnected objects – such as the jointed bones of a human limb – move together.

Fig 7. New motion capture suits offer actors and stuntmen the freedom to perform complex action sequences easily on location as well as on set. Images courtesy of Xsens
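The kinematics mentioned in the Xsens sidebar can be illustrated with a small forward-kinematics sketch. This is not how MVN or any particular capture system works internally; it is a minimal 2D example, with hypothetical bone lengths and joint angles, showing how rotating one joint in a chain moves every bone below it.

```python
import math

def forward_kinematics(bone_lengths, joint_angles_deg):
    """Return the 2D position of every joint in a chain rooted at the origin.

    Each angle is measured relative to the parent bone, so a rotation at one
    joint carries through to every bone further down the chain.
    """
    x, y, heading = 0.0, 0.0, 0.0
    positions = [(x, y)]
    for length, angle in zip(bone_lengths, joint_angles_deg):
        heading += math.radians(angle)  # accumulate the parent rotations
        x += length * math.cos(heading)
        y += length * math.sin(heading)
        positions.append((x, y))
    return positions

# Hypothetical arm: upper arm 0.30 m raised 90 degrees, forearm 0.25 m bent back 90 degrees
arm = forward_kinematics([0.30, 0.25], [90.0, -90.0])
wrist_x, wrist_y = arm[-1]
```

A capture system would supply the joint angles for every frame; applying them to a CG character's skeleton in this way is what lets the character repeat the actor's movement.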


Real-Time 3D Animation Engines

In addition to motion capture systems, the Virtual Moviemaking process also requires a robust 3D animation engine for the real-time render and display of the CG characters in the 3D scene.

The animation engine provides necessary visual feedback for the director to see the action in context, make creative decisions and interact with the actors and scene. The quality of the visual feedback is determined by the quality of the animation engine.

Rendering 3D scenes with any kind of visual fidelity is computationally expensive and requires significant resources and time. Even today, it can take hours, or days, for a large render farm with hundreds of processors to render a single photo-real frame of a complex 3D scene – and every second of film requires 24 frames. Complex visual effects and 3D animated movies like Shrek and Wall-E are all created this way – where each image is rendered frame by frame for a period lasting many months. However recent technologies, many deriving from the requirements of the video-game industry, are rapidly improving the quality and capability of real-time rendering systems, making advanced Virtual Moviemaking techniques feasible.

Computer processing capacity has increased dramatically in recent years and low-cost, high-performance graphics cards have become common (Sidebar). In addition, gaming technology is driving real-time rendering towards ever increasing photo-realism at frame rates that provide the viewer with immediate, interactive feedback. This is enabling the high-quality real-time (24 or 30 frames/second) rendering and display needed to eliminate undesirable side effects such as strobing and stuttering that make timing hard to judge.

Fig 7. Autodesk MotionBuilder uses a real-time render engine to display animated characters in a 3D scene

Applications like Autodesk MotionBuilder provide real-time 3D animation engines capable of processing large amounts of 3D data in real-time and displaying them at high resolution so the director can see and judge the performance being captured. Given the importance of lighting in filmmaking, the quality and capability of the virtual lighting kit provided with the 3D animation engine is critical to effective visualization. The virtual lighting kit determines exactly how the system will render and display lights, gobos, shadows, object transparency, reflections and, in advanced systems, even certain types of atmospheric effects.

Sidebar: Computer Performance

Several trends in computer performance are facilitating real time calculation and display of 3D computer scenes and animation:

Central Processing Unit

The Central Processing Unit (CPU) of the computer has increased in speed. CPU performance is measured in terms of clock speed (Hertz) – which is the speed at which the processor can change state from 0 to 1. At the time of writing processor performance exceeded 3.0 GHz or 3 billion cycles a second.

Multi-Core Processors

However there are physical limits to how far you can push processor speeds until you start getting undesirable quantum effects. As a result chip manufacturers have started developing multi-core processors instead of trying to increase clock speed. These processors offer more efficient and faster processing to highly multi-threaded applications – software where the processing algorithms have been specifically designed to run in parallel.

Graphics Processing Unit

The Graphics Processing Unit (GPU) is a specialized processor for rendering 3D graphics. Although many PC motherboards have GPUs built-in, high-end graphics cards from NVIDIA and ATI offer better performance. GPU efficiency and parallelization enable significant performance increases to rendering applications whose algorithms have been GPU optimized.
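As a rough worked example of the frame-rate arithmetic in this section, the sketch below compares the time a real-time engine has to draw one frame with the machine time an offline render might consume. The shot length and the hours-per-frame figure are hypothetical values chosen only for illustration.

```python
def frame_budget_ms(fps):
    """Time available to render one frame at a given playback rate, in milliseconds."""
    return 1000.0 / fps

def offline_render_hours(shot_seconds, hours_per_frame, fps=24):
    """Total machine time needed to render a shot offline, one frame at a time."""
    return shot_seconds * fps * hours_per_frame

budget_24 = frame_budget_ms(24)  # roughly 41.7 ms per frame
budget_30 = frame_budget_ms(30)  # roughly 33.3 ms per frame

# Hypothetical shot: 10 seconds of film at an assumed 2 hours per frame
shot_hours = offline_render_hours(10, 2.0)  # 240 frames * 2 hours = 480 hours
```

The gap between a few tens of milliseconds and several hours per frame is why real-time engines trade some image fidelity for immediate feedback.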


Accurate display of properties like reflection and transparency can be critical to shooting certain types of sets, vehicles, or props virtually. For example, when shooting a scene through a car windshield or a window the director will not be able to frame the shot properly if the system cannot display transparent objects correctly and he/she is unable to see the actors through the glass.

Today, 3D animation engines are capable of rendering very sophisticated, realistic visualizations of the scene. However filmmakers typically want to push creative boundaries and try things that have not been tried before. To do this the real-time animation engine needs to be customizable. The development of custom shaders (Sidebar) allows the production team to create specific visual looks or styles and apply them to the 3D scene, giving the director a much more accurate depiction of the scene in terms of art-direction and cinematography.

Real time render engines will continue to improve with time, delivering real-time images that are increasingly photo real. Current real time render engines such as the one built into Autodesk MotionBuilder provide filmmakers with extensive creative tools to implement compelling art-directed representations of locations, sets, and other virtual assets.

Sidebar: 3D Shaders

3D shaders are software instructions describing how 3D objects should be rendered. They are executed on the GPU (graphics processing unit) of a computer graphics card.

Each shader provides precise instructions on how the 3D data should be rendered and displayed. Common types of shader include pixel, vertex and geometry shaders.

Shaders can be created using one of several specialized programming languages:

- HLSL (High Level Shader Language)
- GLSL (OpenGL Shading Language)
- Cg (C for Graphics)

Recent developments in programmable graphics such as GPGPU (general purpose computing on GPUs) enable very sophisticated rendering pipelines to be implemented; and companies like Nvidia are further expanding graphics programming capabilities with technologies like CUDA (Compute Unified Device Architecture).

One of the biggest challenges for developers remains the hardware specificity of many software development kits (SDKs) and application programming interfaces (APIs). This can cause unpredictability in results and might require work to be done twice when working with different graphics pipelines (e.g. Direct3D versus OpenGL, Nvidia versus ATI).

However the fact remains that continued progress in this field will drive increasing image quality and photo-realism in real-time graphics display.

Art Directed 3D Assets

In previous chapters we have discussed how 3D data is captured on set and applied to both cameras and characters in the virtual environment using a real-time animation engine. This gives the director and production crew the ability to interact live with the virtual CG environment and performers. However for the experience to be compelling and authentic the virtual environment should resemble the real thing. The virtual set or location should look like a real set or location, and a prop – whether it’s a chair or a gun – should look like a real prop. The closer these virtual elements resemble the intention of the Production Designer and Art Department the more realistic the virtual production experience becomes. 3D assets which resemble the visual intent of the Production Designer and Art Director are termed Art Directed 3D Assets.

Fig 8. Real-time Art Directed 3D Assets such as the jungle scene above improve esthetics and increase realism. Virtual Art Departments bring Art Direction to Virtual Production workflows by focusing on lighting and atmosphere to evoke appealing environments. MotionBuilder image courtesy of Rob Powers

The visceral exploration and discovery of a set or a location helps inspire the Director, Cinematographer, and other members of the creative team. This inspiration happens in impromptu ways and helps uncover new and better ways to tell the story. Art directed 3D assets can help facilitate a similar creative process in the virtual world.


However, most of the 3D assets that are used today in early production and in previs tend to be fairly basic in form and of limited visual appeal. They are often just simple blocked out shapes with flat, grey shading. Their primary purpose has been to help provide purely technical information to the production team – primarily to help solve problems related to motion, scale, composition, and timing. It has been argued that simpler assets simplify the process, allowing the team to better focus on only what is important when making a decision. Additionally, it has been difficult for visual effects pipelines to generate polished, high-quality CG assets with proper texturing and lighting without significant prior pipeline setup and development.

While working with grey shaded model assets can be a valid workflow there are times when it can prove to be less helpful. After all it is rare to hear a director ask for the live action set to be painted a flat grey with no obvious lighting cues so that he or she can more clearly understand it. Today, the grey shaded workflow is an imposed limitation of the CGI asset creation process that has grown to be accepted by the production community at large. If given the option most directors would choose to work with assets that are in a form that resembles how they should be experienced in production: with all their relevant colors, textures, shading, and lighting.

This is all the more true given that CGI assets evaluated in the unnatural void of a grey shaded world and in an inaccurately lit environment can sometimes send false cues and be creatively misleading. Fortunately, new digital technologies can help take things much further by enabling greater fidelity in the real-time assets used. Using advanced techniques such as baking texture and lighting into the assets the Production Designers and Art Directors can actively drive the asset creation process to preserve conceptual design esthetics at every step of the process.
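To make the idea of baking lighting into an asset more concrete, the sketch below precomputes simple diffuse shading for each vertex and stores the result as a color, so that nothing needs to be lit at playback time. It is an illustrative assumption rather than the method used by any particular tool; the function name, the Lambert shading model and the ambient term are all hypothetical choices.

```python
def bake_vertex_lighting(normals, base_colors, light_dir, ambient=0.2):
    """Precompute diffuse (Lambert) shading per vertex and return the lit colors.

    normals     -- unit-length vertex normals as (x, y, z) tuples
    base_colors -- unlit vertex colors as (r, g, b) tuples in the range 0..1
    light_dir   -- direction pointing from the surface towards the light
    """
    length = sum(c * c for c in light_dir) ** 0.5
    lx, ly, lz = (c / length for c in light_dir)
    baked = []
    for (nx, ny, nz), (r, g, b) in zip(normals, base_colors):
        diffuse = max(0.0, nx * lx + ny * ly + nz * lz)  # surfaces facing away get no direct light
        k = min(1.0, ambient + (1.0 - ambient) * diffuse)
        baked.append((r * k, g * k, b * k))
    return baked

# Two hypothetical vertices: one facing the light, one facing away from it
normals = [(0.0, 1.0, 0.0), (0.0, -1.0, 0.0)]
colors = [(0.8, 0.6, 0.4), (0.8, 0.6, 0.4)]
baked_colors = bake_vertex_lighting(normals, colors, light_dir=(0.0, 2.0, 0.0))
```

Because the shading is stored with the asset, the look approved by the Production Designer travels with it; the trade-off is that the lighting cannot change during the shoot without baking again.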

Art directed 3D assets are the basic building blocks of Virtual Moviemaking worlds. Innovative production teams use a Virtual Art Department to create and optimize 3D assets for virtual production. The Virtual Art Department will work closely with the Director, Production Designer, traditional Art Department, and other production team members to create compelling virtual assets that allow for efficient production and development. The highly visual virtual assets, when used dynamically within a real time display engine, provide the production team a sense of “in the moment” discovery and improved problem solving. Having said that, it is important to point out that the Virtual Moviemaking workflow is inherently flexible enough to offer both grey shaded and fully art directed assets as desired.

Sidebar: Virtual Art Department

The Virtual Art Department (VAD) is responsible for translating Art Department designs and digital matte paintings into virtual 3D assets which match the design work as closely as possible and which are optimized, organized, and assembled into flexible kits providing the best realtime performance and maximum flexibility while shooting.

Practical Asset Kits

Practical asset kits need to be easily modifiable, modular, quick to deploy, and have corresponding practical and

virtual counterparts.

Deploying assets on set, whether live for a Broadway production or digitally for Virtual Moviemaking, needs to be

fast and efficient for production to proceed smoothly. Cumbersome assets that take too long to set up or tear

down are as unusable in the virtual world as they would be on a live stage. To that end, a successful Virtual

Production workflow requires a modular asset kit that has been carefully planned for easy deployment – without

such a kit the immediacy of Virtual Moviemaking cannot be fully realized and the benefit of a real-time interaction

will be lost.

Developing virtual asset kits needs to be carefully coordinated with that of the real-world production assets (i.e.

the practical assets). This is required to ensure that the virtual interaction between CG characters and a set or

prop accurately mimics the real-world interaction on set. Without such co-ordination it will be difficult to provide

proper cues to the Director and actors on the virtual capture stage, which in turn makes it harder for them to

deliver a compelling performance.


Therefore, during preproduction, special attention needs to be paid to coordinating the work of the Virtual Art Department with that of the traditional Art Department: production design, art direction, set design and construction and props. It is important to note that there are almost no physical limitations in the virtual world. Anything is possible, even behavior that defies the laws of physics. CG cameras can move and shoot in ways that are impossible on set. Without careful co-ordination between the different departments during production, results might be unpredictable or even undesirable.

For example, it is very easy to plan a virtual shoot with fly-out removable apartment walls, or a foreground tree whose limbs can be repositioned perfectly to frame a desired shot. However unless one also takes into account the physical, on-set reality during planning and development, then when it comes to capturing the performance the shoot will not go according to plan. The real camera will not be able to move around the set as planned and pieces of the set like the foreground tree will not be as easily configurable as they were on the computer.

To develop effective asset kits, the virtual and traditional Art Departments need to work together. The Virtual Art team needs to fully understand the real-world limitations of practical assets, including such constraints as budget and logistics, while the Traditional Art team needs to understand the objectives of the virtual production team in order to develop practical assets that better support them. Working together these teams can generally find better, more innovative, solutions to production problems than if they work separately.

Sidebar: Asset Types

The term 3D Asset is used to broadly describe any 3D object (geometry) created using 3D modeling and animation software such as Autodesk Maya, Softimage or 3ds Max. 3D assets are used in a wide range of production pipelines for visual effects, CG animated movies and previs.

Virtual Assets are 3D objects that have been optimized for real-time manipulation and display in virtual moviemaking. In many ways, they are similar to video game assets but differ in organization, metadata tags and naming conventions.

Video Game Assets are 3D assets that have been optimized so that game engines can render them in real-time during game play.

Pre-Animated Assets are 3D objects that have been pre-animated and “banked” so that they can be called up without delay when needed on the virtual stage. They are often derived from 3D animations created at postproduction houses. Like virtual assets, they require optimization as well as translation into formats that are compatible with the real time render engine being used in the virtual production.

Practical Assets are real-world objects of wood, steel etc, as well as painted stage pieces, that have been designed by the art director and built by the film construction team to easily match – and be interchanged with – the 3D virtual assets on the motion capture stage.

Pre-animated Asset Handling and Real Time Effects

While virtual and practical asset kits most often refer to set construction and props, pre-animated assets and real time effects typically refer to animated characters and visual effects. Pre-animated assets and real-time effects further enhance the Virtual Moviemaking experience by speeding processes and increasing realism. For example, as two characters fight one can be shoved into a stack of crates and automatically send the crates tumbling down in real time. Rather than have to manually animate this behavior, advanced workflows integrate pre-animated assets and sophisticated simulation capabilities.

Fig 9. Animated behavior such as falling crates can be simulated automatically in advanced systems.

Interoperable tools such as Autodesk® Maya®, Autodesk® 3ds Max® and MotionBuilder software help provide efficient workflows for incorporating pre-animated data into a virtual production. For example, data caches, such as geometry caches, allow 3D assets created within Maya to be streamlined and shared in a more efficient form for real time playback within the MotionBuilder render engine. Techniques like geometry caching help reduce the processing overhead inherent in the complex rigging setups and hierarchies typical of most animation today and are a necessary optimization for virtual production – without them it would not be possible to achieve efficient real time playback of diverse asset types.

Autodesk MotionBuilder has also recently added groundbreaking real-time simulation tools for rigid-body dynamics as well as a real time rag doll character rig solver to its feature set. These capabilities help filmmakers achieve more realistic, real-time effects on the Virtual Moviemaking stage.

These innovations offer a rich and stimulating, interactive, Virtual Moviemaking experience and add to the Director’s creative toolkit.

Today much of the development of these production assets occurs concurrently in different departments or even within different companies. The process of integrating pre-animated assets into production therefore requires careful coordination and collaboration between Visual Effects, the Previs Department, the Virtual Art Department and others in order to develop an efficient pipeline.

Progress in animation technique, whether through innovation or refinement, tends to originate within one department or entity first – such as the Visual Effects facility or the in-house Previs Department. Therefore, to be effective the Virtual Moviemaking pipeline needs to be highly efficient at incorporating these changes and applying them throughout the production process. It must be able to quickly integrate the latest developments in animation, rigging, blocking, or other CG assets so that the Director and production team can make full use of them on set.

Sidebar: Geometry Caching

Geometry caching enables efficient exchange of 3D data (assets) between applications, improving workflow efficiency and overall performance.

3D assets can be created using a variety of modeling techniques such as polygon meshes, NURBS surfaces or subdivision surface deformations. Once modeled, complex animation techniques are often applied in order to get the asset to behave as required. The resulting data files can be quite large and complex and therefore time consuming to render.

Geometry caches help solve this problem. They are special files that store the 3D asset as simple vertex transformation data and are therefore often referred to as point caches. They help improve rendering performance by reducing the number of calculations required to play back scenes that contain many animated objects. They also allow object deformations to be more easily combined and edited.

The cache file can be saved to a local hard drive, or better yet to a central server where it can be easily shared with other applications.

Integration with Current Art Department Development and Design Workflows

Art Departments are already making extensive use of 3D modeling and CAD applications, offering the potential for better integration with Virtual Moviemaking and previs processes.

The use of CG modeling and design has become relatively widespread within feature film Art Departments. There is no question that a CG-based design workflow is proving to be extremely useful, even indispensable, to modern production design, or that it is often used to develop extremely sophisticated visuals. This workflow is increasingly being used by Set Designers, Art Directors, Concept Artists, Production Designers, and Directors during the early stages of visual development and design. However, this CG-based design workflow currently only produces static frames or rendered sequences that are consumed passively by the creative team. They can watch but they cannot interact directly with the CG environment.

Virtual Moviemaking requires pushing beyond typical CG approaches to production design to create an interactive and immersive experience that can be driven by the Art Director, Production Designer or Director themselves. This requires packaging the concepts created into virtual assets that can be loaded into a real-time system that offers the creative team control of the camera. This, for example, would give them the option to walk around a set on a ‘virtual location scout’ as opposed to relying on a computer artist to move a CG camera while they view the results. This can be more easily achieved if the virtual and traditional Art Departments, Previs Department and other stakeholders collaborate on developing a more interoperable and integrated workflow for developing and sharing assets.

So, while design elements such as sets and props can currently be evaluated with real world camera lenses in mind, the Virtual Moviemaking workflow makes a much more “production relevant” evaluation easier. It allows virtual assets to be seen in a likely shooting scenario and lighting environment so that they can be experienced in the same way that a real world set would be.

Sidebar: Autodesk® FBX® data interchange technology provides an open framework for software applications such as Autodesk Maya, 3ds Max, Softimage and MotionBuilder, as well as third party applications, to exchange point data as geometry caches with each other.
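The geometry (point) cache idea described above can be sketched in a few lines. This is not the Maya, MotionBuilder or FBX cache format; it is a minimal illustration in which `evaluate_rig` is a hypothetical stand-in for an expensive rig evaluation, and the cache simply stores the resulting vertex positions for each frame so that playback becomes a lookup.

```python
import math

def evaluate_rig(rest_points, frame):
    """Hypothetical stand-in for a costly rig evaluation.

    Here every point is simply swung about the origin by 10 degrees per frame.
    """
    angle = math.radians(10.0 * frame)
    c, s = math.cos(angle), math.sin(angle)
    return [(x * c - y * s, x * s + y * c, z) for x, y, z in rest_points]

def bake_point_cache(rest_points, first_frame, last_frame):
    """Evaluate the rig once per frame and keep only the final vertex positions."""
    return {f: evaluate_rig(rest_points, f) for f in range(first_frame, last_frame + 1)}

def play_back(cache, frame):
    """Playback reads stored positions; no rig evaluation happens here."""
    return cache[frame]

rest = [(1.0, 0.0, 0.0), (0.0, 1.0, 0.5)]
cache = bake_point_cache(rest, 0, 9)
frame_9 = play_back(cache, 9)  # positions after a 90 degree swing
```

The cost of the rig is paid once at bake time, which is the reason a cache can make real-time playback feasible for assets whose rigs are too heavy to evaluate live.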


The increased fidelity and detail in visual and spatial information provided by the Virtual Moviemaking workflow helps the creative team find answers to production questions much earlier in the process. The process inherently allows for much more thorough vetting and preparation for the Art Department in general prior to full scale production, and can help the team make more informed production design decisions earlier. It also helps ensure that concept art, previs and even final post-production assets are more closely aligned in look and feel.

Previsualization

Previsualization is defined fairly accurately on Wikipedia as any technique, whether hand drawn or digital, that

attempts to visualize movie scenes before filming begins for the purpose of planning. Its primary purpose is to

allow the director “to experiment with different staging and art direction options such as lighting, camera

placement and movement, stage direction and editing – without having to incur the costs of actual production1”.

So, where does previs end and Virtual Moviemaking begin?

The answer is that previs blends into the broader Virtual Moviemaking process. Virtual Moviemaking is a natural

expansion and extension of the previsualization process, tying pre-production and production processes together

into a consistent virtual production environment where the director and broader creative team can not only plan

but also execute on key production elements. Ground-breaking new productions like Avatar and Tintin are clear

demonstrations of this new, broader approach to Virtual Moviemaking.

Virtual production techniques have evolved from the merger of several unique creative processes and

technologies. These include previs, production design, art direction, cinematography, performance and motion

capture, 3D animation, visual effects and real-time gaming technology to name a few. Today sophisticated

previsualization uses virtual production techniques, and virtual cinematography uses previsualization techniques.

Properly designed Virtual Moviemaking pipelines should enable previs teams to share assets bi-directionally with

the virtual production environment. This requires greater awareness of the virtual production needs early on in

the planning process; but if the groundwork is properly laid for flexible asset interchange overall production will be

more cost effective and efficient. If the asset interchange is bi-directional the Previs department can benefit

directly from the large number of assets generated by the Virtual Art Department and virtual shoots. At the core

of current file interchange standards are robust new formats such as Autodesk® FBX® technology that allow 3D

asset interchange between various different software programs. This makes it possible for all departments and

creative teams to benefit from the development of CG assets created anywhere within the production process.

Stereoscopic 3D (S3D)

Dynamic stereoscopic interocular and convergence adjustment

The demands of Stereoscopic 3D (S3D) film production are novel and complex. Creating a compelling

entertainment experience requires careful planning and significant attention needs to be paid to the 3D depth

aspects of the story. If the depth characteristics of each scene (and every shot) are not fully understood the

director will not be able to engage the audience’s emotions appropriately. 3D depth planning needs to begin right

from the start – from early storyboard and production design – and continue through production where special

care and attention needs to be paid to capturing the scene depth correctly.

If not, the final result will not have the desired effect on the big screen: it might be too aggressive, too subtle, or even disorienting to the viewer. Problems such as incorrect framing for screen surround – where objects that should appear to be in front of the screen plane are abruptly cut off by the edge of the screen (making them appear to be both in front of and within the screen plane at the same time) – need to be avoided.

1 Quoted from: Wikipedia contributors, "Previsualization," Wikipedia, The Free Encyclopedia, Oct 2009. Original source: Bill Ferster, Idea Editing: Previsualization for Feature Films, POST Magazine, April 1998.

While it is relatively easy to change camera properties such as interocular separation and convergence in CG animated movies and then re-render the shot, changing these properties in post-production on live action shots is time consuming and costly and the quality of the stereo effect may suffer. It is best to avoid such an eventuality through careful planning. Previs and virtual production techniques are highly valuable tools in stereo production for exactly these reasons.

By allowing the director to view a set dynamically, virtual production technologies provide immediate feedback and direct interaction to the production crew. The director can experiment with 3D depth and feel on the fly, changing camera properties such as position, orientation, interocular distance and convergence. Special S3D viewing systems allow him/her to experience changes to depth immediately, get a better feel for how the final production will look, and make informed creative decisions on how to shoot a scene before production begins. He/she will no longer be “shooting blind” when it comes to live production and can therefore reduce the margin of error as well as the need for expensive retakes, reshoots or post-production fixes.
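To illustrate how camera separation (the interocular or interaxial distance) and convergence shape perceived depth, the following is a minimal sketch of the standard parallax relationship for a parallel stereo rig converged by horizontal image shift. It is not taken from any Autodesk tool: the function name, the 25 mm sensor width and the 10 m screen width are assumptions chosen purely for illustration.

```python
def screen_parallax_mm(interaxial_mm, focal_mm, convergence_m, object_m,
                       sensor_width_mm=25.0, screen_width_m=10.0):
    """Horizontal screen parallax (mm) of a point at distance object_m.

    Assumes a parallel rig converged by horizontal image shift.
    Positive values place the point behind the screen plane, negative
    values in front of it, and zero exactly on the screen plane.
    sensor_width_mm and screen_width_m are illustrative assumptions.
    """
    convergence_mm = convergence_m * 1000.0
    object_mm = object_m * 1000.0
    # Disparity on the sensor: f * t * (1/C - 1/Z)
    sensor_disparity_mm = focal_mm * interaxial_mm * (
        1.0 / convergence_mm - 1.0 / object_mm
    )
    # Scale from sensor size up to screen size.
    magnification = (screen_width_m * 1000.0) / sensor_width_mm
    return sensor_disparity_mm * magnification


if __name__ == "__main__":
    # Compare two camera separations for objects nearer than, at,
    # and farther than a 5 m convergence distance (35 mm lens).
    for interaxial in (30.0, 65.0):
        for distance in (2.5, 5.0, 10.0):
            parallax = screen_parallax_mm(interaxial, 35.0, 5.0, distance)
            print(f"interaxial={interaxial:.0f} mm, object at {distance} m: "
                  f"parallax {parallax:+.1f} mm")
```

Under these assumptions, widening the camera separation scales the parallax of every object proportionally, while moving the convergence distance changes which objects sit on the screen plane. This is the kind of adjustment a director can evaluate interactively during previs rather than correcting it in post-production.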

A more detailed description of Stereoscopic 3D production can be found in the Autodesk

Whitepaper, The Business and Technology of Stereoscopic Filmmaking.

This whitepaper examines the S3D business case, the current state of the industry and the technical

and creative considerations faced by those looking to make compelling stereoscopic movies. It also

provides background information on stereopsis and perception, the science underlying stereoscopy.

It is hoped that increased knowledge of the science and technology of S3D will help empower the

reader to create more effective and compelling movie entertainment.

Post Production

Virtual Moviemaking ties pre-production and production more directly to post-production. By creating a virtual production environment with shared assets, the different teams, from the previs team to the visual effects crew, can better collaborate and share insights and expertise. When managed well, the result is better planning, reduced costs and greater consistency: the virtual and practical production design elements are consistent; previsualization processes are consistent with production design; and previsualization and production design are more consistent with visual effects.

Production assets captured on the virtual sound stage can be shared with visual effects facilities. Although virtual production is not without its own problems in terms of data quality, consistency and interoperability, overall the process enables filmmakers to be more involved in directing the creation of CG content in ways that can still directly influence post-production. It is important to point out that although the real-time imagery of Virtual Moviemaking can be extremely appealing visually, the current generation of real-time engines cannot produce the high image quality achieved in post-production by the visual effects teams. In post-production, extensive attention is paid to each minute detail in modeling, animating, texturing, lighting, rendering and compositing the CG elements to create the final image seen on the big screen. The files produced during virtual production are sent to post-production primarily to provide motion capture data and guidance to the visual effects team.

Getting input from the visual effects team early in the Virtual Moviemaking process is crucial to making informed decisions and can eliminate production errors which, when propagated down the production pipeline, become costly to fix. The entire team can avoid a lot of wasted effort trying to second-guess the final look and feel if information that more closely represents the desired final look is shared throughout the production early on. It also allows for more immediate and spontaneous creative exploration by the Director and the creative production team.

Looking Ahead

The movie industry is currently in the very early adoption stages of virtual production workflows. The technology is still nascent and the processes far from standard. It requires skill, talent, patience and a taste for risk to embark upon the path of virtual production, but the rewards are rich. However, as with Digital Intermediates (DI) and visual effects, the steady march of technological progress will make the technologies discussed in this whitepaper ever more accessible. Already today lower budget productions are using many of these techniques to improve the quality of their CG production.

As virtual production workflows become more commonplace and more and more film production professionals become comfortable with the new technology, it will disseminate throughout all levels of the film production pipeline. All departments stand to benefit from real-time, immersive digital toolsets and the added level of discovery and preparation which they provide. The impact is already clear on high profile film projects such as Avatar and Tintin, not only with the Directing Team but with Cinematographers, Visual Effects Supervisors, Actors, and throughout the Art Department, as Production Designers, Art Directors, Set Designers, and Costume professionals get exposure to the benefits of the technology. Virtual Moviemaking technology allows every one of these professionals to vet their various creative contributions and experience them in the same way that the filmmaker and ultimately the audience will see them.

Already it is no longer necessary to invest in extremely expensive motion capture technology to start virtual production. Affordable solutions exist, allowing smaller productions and Art departments working on projects pre-greenlight to explore virtual workflows. As the project evolves, the technology can be fairly easily scaled to meet increasing production requirements. As the technology continues to become more scalable and more accessible, a broader range of creative professionals will be able to adopt Virtual Moviemaking techniques.

Fortunately, research and development in interactive devices, graphics processing and powerful software tools is at an all-time high, fuelled in great part by the videogame and mobile device industry. Software development for devices ranging from the Xbox 360® and Sony PlayStation® 3 game consoles to the Nintendo® Wii® and iPhone® entertainment devices offers interesting insights as to the future potential for applications on the next generations of these devices. Another interesting breakthrough would be the emergence of more modular motion tracking systems that can be scaled from a small portable virtual capture volume to a full large scale production stage – this would allow Virtual Moviemaking technology to be implemented at every stage of the production process without a heavy budgetary burden early on.

Virtual Moviemaking

Virtual Moviemaking will continue to evolve and in the future move closer toward a nonlinear, completely immersive production workflow connecting all departments.

This innovative workflow is being pioneered at the highest levels of Hollywood film production to facilitate the more complex visual-effects-heavy and animated productions. However, the benefits of virtual moviemaking workflows are potentially useful to many other types of film production, especially considering the large number of animation and effects shots present in films not traditionally categorized as visual effects or CG animation movies.

By fostering a ‘production relevant’ environment for creative exploration and problem solving early in the moviemaking process, and by creating a central hub for team-wide interchange between departments, Virtual Moviemaking enhances the collaborative nature of the moviemaking process. This is important as it is the individual members of the creative team, and not the technology, that drive the Virtual Moviemaking workflow.

Ultimately, Virtual Moviemaking can be seen as the next evolution of previous technologies, workflows,