Super Wide Viewing for Tele-operation

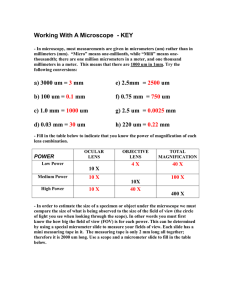

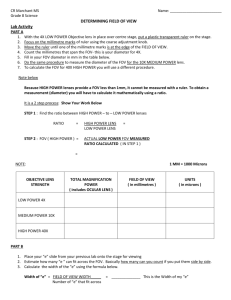

Hajime Nagahara*, Yasushi Yagi**, and Masahiko Yachida*

*Graduate School of Engineering Science, Osaka University, 1-3, Machikaneyama-cho, Toyonaka, Osaka, 560-8531, Japan
**The Institute of Scientific and Industrial Research, Osaka University, 8-1, Mihogaoka, Ibaraki, Osaka, 567-0047, Japan

Abstract: - In this paper, we propose a tele-operation system with a super wide field of view (FOV). Our tele-operation system has an omnidirectional image sensor for capturing a panoramic image and a personal spherical screen display for projecting a wide-angle image. The first original contribution is applying our system to navigating a mobile robot by tele-operation and evaluating the efficiency of a wide FOV. The second original contribution is a new proposal of a super wide FOV. Generally, the FOVs of a common display system and an HMD system are restricted, and the operator cannot see outside the FOV of these displays. The idea of the super wide FOV is that the wide FOV is nonlinearly compressed to the display FOV. Therefore, the operator can look behind even if the display is not a panoramic large screen display.

Key-Words: - Wide field of view, Tele-operation, Robot, Navigation.

1 Introduction

Tele-operation, in which a human operator directs a robot from a remote site, has been studied and put into practice [1], because it spares humans from entering hazardous environments such as space, volcanoes, the sea, and nuclear plants. To complete tasks effectively and safely, tele-operation systems, called tele-presence [2][3] and tele-existence [4][5], are required to provide high-quality and realistic feedback information, as if the operator were actually present at the robot site. Visual feedback is the most important factor of tele-presence. Important factors of visual reality are plasticity, related to vergence and focus control; texture, related to the resolution and dynamic range of images; time delay, caused by camera rotation and communication; and extensity, determined by the field of view of the display.
In this paper, we focus on extensity, which is determined by the field of view (FOV) of the display. It is well known that peripheral vision, which a wide FOV includes, influences human postural control [6]. In addition, a FOV of over 80 degrees is required for a human operator to feel immersion and reality [7]. Takahashi et al. [8] measured the influence of a wide-FOV head mounted display on human attitude control. Their comparison used a 140-degree FOV. This research suggests that a wide FOV is one of the important factors for human attitude control. Caldwell et al. [9] reported that a narrow FOV degraded task efficiency and reality in a navigation task. However, they compared only 30-degree and 60-degree FOVs.

Most previous visual tele-presence systems use an ordinary narrow-FOV display such as a CRT. The view field can be changed by rotating a camera to obtain information over a large FOV. However, rotating the camera introduces a time delay. One way to enlarge the FOV without such a time delay is to use an omnidirectional image sensor in the tele-presence system. Onoe et al. [10] proposed a tele-operation system with an omnidirectional image sensor. The system presents a transformed perspective image on a common Head Mounted Display (HMD) according to the operator's head motion. The operator controlled the mobile robot in an indoor environment. The FOV of a common HMD is narrower than 60 degrees, which is not wide enough to present peripheral vision information, and the report did not evaluate the ease of use of the system. However, they also built a virtual tour system [11] by projecting panoramic movies on a large cylindrical screen named CYLINDRA and on a cubic screen system like CAVE [12]. The first study suggests that a 360-degree panoramic image is suitable for a tele-operation system. The second suggests that a wide field of view increases the sense of existence and presence.

In this paper, we propose a tele-operation system with a super wide FOV.
Our tele-operation system has an omnidirectional image sensor named HyperOmni Vision [13] for capturing a panoramic image and a personal spherical screen display for projecting a wide-angle image. The first original contribution is applying our system to navigating a mobile robot by tele-operation and evaluating the efficiency of a wide FOV by experiments. The second original contribution is a new proposal of a super wide FOV. Generally, the FOVs of a common display system and an HMD system are restricted, and the operator cannot see outside the FOV of these displays. Therefore, we propose to project super wide FOV information that exceeds the display capacity. Specifically, the FOV of the input image is nonlinearly transformed to that of the display system. The operator can look behind even if the display is not a panoramic large screen display such as CYLINDRA and CAVE.

Figure 1. System configuration. Slave system: omnidirectional image sensor, NTSC transmitter, robot, RS232C radio modem. Master system: spherical display, joystick, NTSC receiver, RS232C radio modem, PC for imaging (Pentium II 400 MHz), PC for robot control (Pentium II 266 MHz).

Figure 2. Tele-operation system for navigation (a: slave system, b: master system).

2 Tele-operation system

Fig. 1 shows the configuration of the tele-operation system used in our experiments. The slave system shown in Fig. 2-a is a mobile robot (B12: Real World Interface Inc.) with an omnidirectional image sensor, HyperOmni Vision. The omnidirectional image sensor is composed of a hyperboloidal mirror and a common NTSC video camera unit (EVI-310: Sony). It can capture a 360-degree FOV in a single input image. The input image is transmitted to the master system by a wireless NTSC video transmitter. The mobile robot B12 has three-wheel steering and three-wheel drive. The three wheels are driven synchronously and remain parallel at all times. The robot is controlled by the joystick on the master system through a wireless RS232C modem.

The master system shown in Fig. 2-b consists of a spherical screen display (VisionStation: Elumens Inc.), a joystick, PCs for image processing and robot control, a wireless RS232C modem, and a wireless NTSC video receiver. The display can project a 120hx65v FOV image at SXGA (1280x1024 pixels) resolution. Here, 120hx65v means that the horizontal and vertical fields of view are 120 and 65 degrees, respectively. We use this notation for horizontal and vertical fields of view throughout the paper. The input image is transformed to a spherical image by the PC on the master system, and the transformed spherical image is projected on the spherical screen display. The omnidirectional image sensor has a single center of projection, so the input image can be transformed to any desired coordinates without distortion. Therefore, a completely distortion-free image is shown to the operator on the spherical screen display.

Equations 2-4 give the relationship between the input image coordinates of the omnidirectional image sensor and the spherical image coordinates. Here, p(x, y) and P(θ, α) denote the input image coordinates and the spherical coordinates (longitude θ and latitude α) around the sensor, respectively. b and c are coefficients of the hyperboloidal mirror shape described in Equation 1. f is the focal length of the camera. More details of the image formation are given in reference [13].

$$\frac{X^2 + Y^2}{a^2} - \frac{Z^2}{b^2} = -1 \quad (Z > 0), \qquad a^2 + b^2 = c^2 \qquad (1)$$

$$r = \frac{(c^2 - b^2)\, f}{(b^2 + c^2) \tan\alpha + 2bc\sqrt{\tan^2\alpha + 1}} \qquad (2)$$

$$x = r \cos\theta \qquad (3)$$

$$y = r \sin\theta \qquad (4)$$

Figs. 3 and 4 show the input image of the omnidirectional image sensor and the projected image on the spherical screen display, respectively.

Figure 3. An omnidirectional image
Figure 4. 120hx65v degree FOV
Figure 5. 270hx65v degree FOV with linear transformation
Figure 6. 270hx65v degree FOV with nonlinear transformation

3 Wide field of view over the display capacity

Generally, the FOVs of a common display system and an HMD system are restricted, and the operator cannot see outside the FOV of such a display.
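As a rough sketch of the image formation described in Section 2, the following Python function evaluates Equations 2-4 for one viewing direction. It assumes that Equation 2 reads r = (c^2 - b^2) f / ((b^2 + c^2) tan α + 2bc sqrt(tan^2 α + 1)). The function name `sphere_to_image` and the mirror values used in the example (b = 3, c = 5, f = 1) are hypothetical and are not the parameters of the actual sensor.

```python
import math


def sphere_to_image(theta_deg, alpha_deg, b, c, f):
    """Map a viewing direction (theta, alpha) to image coordinates (x, y).

    Assumed reading of Equations 2-4; b and c are the mirror coefficients
    of Equation 1 and f is the focal length of the camera.
    """
    t = math.tan(math.radians(alpha_deg))
    # Equation 2: radial distance from the image center.
    denom = (b ** 2 + c ** 2) * t + 2.0 * b * c * math.sqrt(t * t + 1.0)
    r = (c ** 2 - b ** 2) * f / denom
    # Equations 3 and 4: polar to Cartesian image coordinates.
    theta = math.radians(theta_deg)
    return r * math.cos(theta), r * math.sin(theta)


if __name__ == "__main__":
    # Hypothetical mirror: b = 3, c = 5 (so a = 4), focal length f = 1.
    print(sphere_to_image(0.0, 0.0, b=3.0, c=5.0, f=1.0))
    print(sphere_to_image(90.0, 0.0, b=3.0, c=5.0, f=1.0))
```

In this sketch, all directions with the same α fall on a circle of radius r around the image center, so a spherical image can be filled by evaluating the function once per output pixel and sampling the input image at the returned (x, y). The denominator vanishes at the limiting angle of the mirror, so a practical implementation would need to restrict α to the visible range.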
A new proposal of a super wide FOV is that the FOV of the input image is nonlinearly transformed to the display FOV, which amounts to compressing the input image to the display size. Therefore, the operator can look behind even if the display is not a panoramic large screen display such as CYLINDRA and CAVE.

A linear transformation, described in Equation 5, is a simple way to transform the FOV. Here, θ and θ' are the azimuth angles on the input image and the output image. H_view and H_disp are the horizontal sizes of the input FOV and the display screen FOV, respectively. Fig. 5 shows a sample of a linearly transformed image. However, the operator would be confused about which way the robot is heading in the remote environment, because of the discordance between the real observed azimuth and the transformed direction on the display.

$$\theta = \frac{H_{view}}{H_{disp}}\, \theta' \qquad (5)$$

The operator would not be confused in the peripheral area, however, because human peripheral vision is usually used not for detailed analysis but for rough sensing such as motion detection. Therefore, our system nonlinearly transforms the input image to the display image by Equation 6. In this system, the central area is mapped by a linear transformation and the peripheral area by a nonlinear transformation. Here, θ_c denotes the azimuth at which the central area ends. From preliminary experiments, the central area of 60 degrees should keep direction accordance. The outer area is transformed by the quadratic curve shown in Fig. 7. In this figure, the horizontal FOV of the display screen H_disp is set to 120 [degree] and the horizontal FOV of the input H_view is set to 180, 270 and 360 [degree]. The figure also shows the azimuth-accordant case, in which the horizontal FOV of the input is within that of the display screen (120 [degree]). Fig. 6 shows an example of an image transformed by the nonlinear transformation.

$$\theta = \begin{cases} \theta' - c\,(\theta' + \theta_c)^2 & (\theta' \le -\theta_c) \\ \theta' & (-\theta_c < \theta' < \theta_c) \\ \theta' + c\,(\theta' - \theta_c)^2 & (\theta' \ge \theta_c) \end{cases} \qquad c = \frac{2\,(H_{view} - H_{disp})}{(H_{disp} - 2\theta_c)^2} \qquad (6)$$

Figure 7. FOV transformation

4 Experiments on real environments

We evaluated the efficiency of a wide FOV for robot navigation. We prepared four navigation courses: a slalom, a crank, and two types of parking. The robot is 35 [cm] wide. The degrees of freedom of the mobile robot are translation and rotation. The maximum speeds of rotation and translation are set to 31.6 [deg/s] and 14.1 [cm/s], respectively. In the slalom and crank courses, the robot can pinwheel, that is, rotate in place. In the two parking tasks, however, the robot moves like a car, by steering and driving.

We used nine different FOV setups (six sizes of FOV for the linear transformation and three sizes for the nonlinear transformation). 60hx45v is the setup that assumes the FOV of a common video camera. The transformed image is projected on the spherical screen display, which has a 120hx65v FOV. The setups wider than 120hx65v are presented with the nonlinear transformation and also with the linear transformation, in order to compare them. The angular resolutions are the same in all FOV setups. Figs. 4-6 show sample images displayed on the spherical screen display. Ten subjects tried every combination of task and FOV. Fig. 8 shows the experimental scene in the crank course. The time taken to complete the course and the number of collisions were recorded.

Figure 8. The experimental scene

Figure 9. Results in real environments

Fig. 9 shows the total result of all experiments. In this figure, the horizontal axis indicates the field of view and the vertical axis indicates the average and standard deviation of the completion time and the average number of collisions. Thin and bold lines indicate the linear transformation and the proposed nonlinear transformation, respectively. The averages and standard deviations of the completion time and the average number of collisions decreased as the FOV became larger. This means that task efficiency was improved by a wide FOV. It shows the advantage of using a wide-FOV display and an image sensor such as an omnidirectional image sensor.
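The linear and nonlinear FOV transformations of Section 3 (Equations 5 and 6) can be sketched in Python as follows. This is only an illustrative sketch under two assumptions that are not stated explicitly in the text: θ_c is taken as 30 degrees (half of the 60-degree central area), and the outer branch for positive azimuths uses (θ' - θ_c)^2, so that the curve is continuous at θ_c and reaches H_view/2 at the edge of the display. The function names are hypothetical.

```python
def linear_fov(theta_disp, h_view, h_disp):
    """Equation 5: display azimuth -> input azimuth by uniform scaling."""
    return h_view / h_disp * theta_disp


def nonlinear_fov(theta_disp, h_view, h_disp, theta_c=30.0):
    """Equation 6: identity inside +/-theta_c, quadratic expansion outside.

    theta_c = 30 degrees is an assumption (half of the 60-degree center area).
    """
    c = 2.0 * (h_view - h_disp) / (h_disp - 2.0 * theta_c) ** 2
    if theta_disp <= -theta_c:
        return theta_disp - c * (theta_disp + theta_c) ** 2
    if theta_disp >= theta_c:
        return theta_disp + c * (theta_disp - theta_c) ** 2
    return theta_disp


if __name__ == "__main__":
    # Azimuths in degrees on a 120-degree display showing a 270-degree view.
    for theta_disp in (0.0, 15.0, 30.0, 45.0, 60.0):
        print(theta_disp,
              linear_fov(theta_disp, 270.0, 120.0),
              nonlinear_fov(theta_disp, 270.0, 120.0))
```

With these assumptions, the nonlinear mapping leaves azimuths within 30 degrees of the center unchanged, while the edge of the display (60 degrees) shows the input azimuth of about 135 degrees in the 270hx65v setup. The linear mapping scales every azimuth by H_view/H_disp, so in the 360hx65v setup a point drawn 30 degrees from the display center actually lies 90 degrees from the robot heading.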
Over the 120hx65v setup, the average and the standard deviation increased for the linear FOV transformation method. This was caused by the direction discordance. For example, if the robot rotates 90 [degree] in the remote environment, the transformed image shows only a 30 [degree] rotation on the display in the 360hx65v setup. Many subjects reported that linearly transformed images were unnatural and that it was difficult to understand the relation between the robot heading direction and the obstacles around the robot in the remote environment. Next, the completion time of the proposed nonlinear FOV transformation method decreased up to the 270hx65v setup, and it was shorter than that of the linear FOV transformation. This shows that the nonlinear transformation method improved the tasks further, because it keeps direction accordance while presenting wide FOV information beyond the display capacity. As shown in Fig. 9, 270hx65v with the nonlinear FOV transformation was overall the best setup in terms of both time and collisions among the tested setups and tasks.

5 Experiments on virtual environments

In this section, we also evaluate the efficiency of a wide FOV for robot navigation in virtual environments. Virtual environments are effective for evaluation, because it is easy to set up complicated environments and tasks, such as 3D navigation and spacious environments. We constructed a simulator of robot navigation with a wide field of view for the experiments. The simulator was built with the OpenGL graphics library. Fig. 10 shows a view of the simulator with the 270hx65v FOV setup as an example.

Figure 10. Simulator view

We use two types of tasks: a 2D navigation task (similar to the experiments in real environments in Section 4) and a 3D navigation task (modeled on exploration by a submarine). Both tasks are to move from a start point to a goal point while avoiding obstacles. The 3D navigation task required the robot to pass over and under obstacle objects, so elevation motion was added in the 3D navigation task. We employed eight subjects for the 2D and 3D navigation tasks. Experiments were carried out with different views and FOVs: 60hx45v, 120hx65v, nonlinear 180hx65v and 270hx65v, and 360hx65v. Additionally, the omnidirectional input image was also compared in these experiments, because some previous research [14] directly used the omnidirectional input image for tele-presence applications.

Figs. 11 and 12 show the resulting time to perform the tasks and the number of collisions for the 2D and 3D navigation tasks. The times are shown with standard deviations to indicate the scattering of the samples. These results also show a short completion time and a small number of collisions for 180hx65v and 270hx65v in both tasks. This means that a wide FOV is effective for a remote navigation task. Operation with the omnidirectional input image is also good for the 2D task. However, the result with the omnidirectional image is the worst in the 3D task. This shows that, with the omnidirectional input image, it is easy to recognize the environment around the robot in the 2D task, which only requires motion in the horizontal plane. On the other hand, it is difficult to operate with the omnidirectional input image in the 3D task, because object height is hard to recognize in the omnidirectional image. Thus, the proposed wide FOV display method is effective in more general cases.

Figure 11. Results of 2D navigation task in virtual environments (horizontal axis: field of view [deg])

Figure 12. Results of 3D navigation task in virtual environments (horizontal axis: field of view [deg])

6 Conclusions

To accomplish tasks effectively and safely in tele-operation, high-quality and realistic feedback information should be displayed to the remote operator, as if the operator were at the robot site.
We think that the FOV is one of the important factors of reality in a visual feedback system for tele-operation. In this paper, we focused on the effect of the FOV on operating a mobile robot. We constructed a tele-operation system with a wide field of view. The slave robot system has an omnidirectional image sensor to take a panoramic image, and the master system has a spherical display that can show a distortion-free image with a wide 120hx65v field of view. Moreover, we proposed a FOV transformation method to present super wide field of view information beyond the display capacity. We evaluated the efficiency of the tele-operation system and the nonlinear FOV transformation method. From experimental results in both simulation and real environments, we confirmed that a wide field of view helps to accomplish navigation tasks effectively and safely. The proposed nonlinear FOV transformation method was also effective at the 270hx65v setup. However, we ignored other problems of tele-operation systems, such as communication delay. In future work, we will evaluate more realistic situations and tasks.

References:

[1] C. R. Weisbin and D. Lavery: "NASA Rover and Telerobotics Technology Program", IEEE Robotics and Automation Magazine, pp.14-21, December, 1994.
[2] S. S. Fisher et al.: "Virtual environment display system", ACM Workshop on Interactive 3D Graphics, pp.1-11, 1986.
[3] J. D. Hightower, E. H. Spain et al.: "Telepresence: A Hybrid approach to high-performance robots", Proc. Int. Conf. Advanced Robotics, pp.563-573, 1987.
[4] S. Tachi et al.: "Tele-existence (I) -Design and evaluation of a visual display with sensation of presence-", Proc. 5th Symposium Theory and Practice of Robots and Manipulators, pp.245-254, 1984.
[5] S. Tachi and H. Arai: "Study on tele-existence (II) -Three dimensional color display with sensation of presence-", Proc. Int. Conf. Advanced Robotics, pp.345-352, 1985.
[6] J. D. Dickinson and J. A. Leonard: "The role of peripheral vision in static balancing", Ergonomics, Vol.10, pp.421-429, 1967.
[7] T. A. Furness: "Creating better virtual worlds", Technical Report HITL-M-89-3, 1989.
[8] M. Takahashi, K. Arai and K. Yamamoto: "Wide field of view using a 4LCD HMD is effective for postural control", Second International Conference on Psychophysiology in Ergonomics, 1998.
[9] D. G. Caldwell, K. Reddy, O. Kocak and A. Wardle: "Sensory Requirements and Performance Assessment of Tele-Presence Controlled Robots", Proc. IEEE Int. Conf. Robotics and Automation, pp.1375-1380, 1996.
[10] Y. Onoe, K. Yamazawa, H. Takemura and N. Yokoya: "Telepresence by real-time view dependent image generation from omnidirectional video stream", Computer Vision and Image Understanding, Vol.71, No.2, pp.154-165, 1998.
[11] Y. Manabe, K. Sato, K. Yamazawa, N. Yokoya and K. Chihara: "Reproduction of motion for immersive mixed environments", Proc. 1st Int. Conf. Image and Graphics, pp.673-676, 2000.
[12] C. Cruz-Neira, D. J. Sandin and T. A. DeFanti: "Surround-screen Projection-based Virtual Reality: The Design and Implementation of the CAVE", Proc. ACM SIGGRAPH'93, pp.135-142, 1993.
[13] K. Yamazawa, Y. Yagi and M. Yachida: "New real-time omnidirectional image sensor with hyperboloidal mirror", Proc. 8th Scandinavian Conf. Image Analysis, Vol.2, pp.1381-1387, 1993.
[14] J. Baldwin, A. Basu, and H. Zhang: "Panoramic Video with Predictive Windows for Telepresence Applications", Proc. IEEE Int. Conf. Robotics and Automation, pp.1922-1927, 1999.