9 Log-linear Models for 3

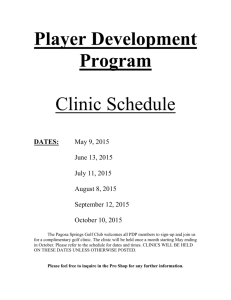

advertisement

© 2005, Anthony C. Brooms

Statistical Modelling and Data Analysis

9 Log-linear Models for 3-way Contingency Tables

9.1 Introduction

We extend our techniques from the previous chapter to deal with tables of count data classified according to 3 factors. Extensions to more than 3 factors follow by analogy with the 2- and 3-factor cases, and are left to you as an exercise!

There are 4 possibilities to consider: (i) nothing fixed by design; (ii) only the sample size fixed; (iii) the totals of one factor fixed, i.e. two response variables and one explanatory variable; (iv) the joint totals of two factors fixed, i.e. one response variable and two explanatory variables. Case (i) rarely occurs in practice; in any case, we would usually want to fit a constant term in the linear predictor, and so the log-linear models for case (ii) would suffice.

We consider cases (ii) to (iv) in turn, stating the relevant probability model for the realizations of the table, the expressions for the expected cell counts, and the corresponding log-linear models. We shall also indicate which terms must be included in the linear predictor in order to justify fitting a GLM with Poisson error structure and log link.

9.2 Notation

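The data are classified according to 3 factors, A, B and C, with J, K and L levels respectively.

Let $Y_{jkl}$ be the count for the cell in the $j$-th row, $k$-th column and $l$-th layer of the table, and let $y_{jkl}$ be its realization.

Let $\theta_{jkl}$ be the probability for the cell in the $j$-th row, $k$-th column and $l$-th layer of the table.

A dot in place of a subscript denotes summation over that subscript; for example, $\theta_{jk\cdot} = \sum_{l=1}^{L}\theta_{jkl}$ and $y_{\cdot\cdot l} = \sum_{j=1}^{J}\sum_{k=1}^{K} y_{jkl}$.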
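In R (the language used in Section 9.6), margins such as $y_{jk\cdot}$ and $y_{\cdot\cdot l}$ are obtained by summing the array of counts over the dotted subscripts. The minimal sketch below assumes the counts of Section 9.6 arranged as a $2\times 2\times 2$ array with A = clinic, B = care and C = surv; the object names `y_jk.`, `y_j.l` and `y_..l` are illustrative only.

```r
# Counts from the example in Section 9.6 (A = clinic, B = care, C = surv)
health <- data.frame(count  = c(176, 293, 197, 23, 3, 4, 17, 2),
                     clinic = rep(c("A", "A", "B", "B"), 2),
                     care   = rep(c("L", "H"), 4),
                     surv   = rep(c("S", "D"), each = 4))

# J x K x L array of counts y_jkl (factor levels are sorted alphabetically)
y <- xtabs(count ~ clinic + care + surv, data = health)

# A dot in place of a subscript means "sum over that subscript"
y_jk. <- apply(y, c(1, 2), sum)  # y_{jk.}: clinic x care totals
y_j.l <- apply(y, c(1, 3), sum)  # y_{j.l}: clinic x surv totals
y_..l <- apply(y, 3, sum)        # y_{..l}: layer (surv) totals
n     <- sum(y)                  # y_{...} = n

print(y_..l)
```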

9.3 Three Response Variables

With just the sample size fixed, the appropriate distribution is multinomial with parameters $n$ and $\{\theta_{jkl}\}$:

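$$f(\mathbf{y};\boldsymbol{\theta}) = n!\prod_{j=1}^{J}\prod_{k=1}^{K}\prod_{l=1}^{L}\frac{\theta_{jkl}^{\,y_{jkl}}}{y_{jkl}!}$$

where $\sum_{j=1}^{J}\sum_{k=1}^{K}\sum_{l=1}^{L}\theta_{jkl} = 1$ and $\sum_{j=1}^{J}\sum_{k=1}^{K}\sum_{l=1}^{L} y_{jkl} = n$.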

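Thus

$$E[Y_{jkl}] = n\,\theta_{jkl} \tag{1}$$

Taking the logarithm of (1) yields

$$\log E[Y_{jkl}] = \log n + \log\theta_{jkl}$$

which corresponds to the log-linear model

$$\eta_{jkl} = \mu + \alpha_j + \beta_k + \gamma_l + (\alpha\beta)_{jk} + (\alpha\gamma)_{jl} + (\beta\gamma)_{kl} + (\alpha\beta\gamma)_{jkl} \tag{2}$$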

The constant term $\mu$ 'corresponds' to the $\log n$ term; since $n$ is fixed by design, we must always include the constant term, even when considering smaller, more parsimonious models.
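Two facts justify fitting a Poisson GLM here: independent Poisson counts, conditioned on their total, are multinomial, and a Poisson log-linear model that includes the constant term reproduces the observed total $n$. The R sketch below checks both numerically; the counts `y` and means `mu` are hypothetical values chosen only for illustration.

```r
# Hypothetical counts and Poisson means for the 8 cells of a 2 x 2 x 2 table
y  <- c(3, 1, 2, 0, 4, 1, 2, 3)
mu <- c(2.5, 1.0, 3.0, 0.5, 4.0, 1.5, 2.0, 2.5)

# P(Y = y | sum(Y) = n) for independent Poisson counts ...
p_cond <- prod(dpois(y, mu)) / dpois(sum(y), sum(mu))
# ... equals the multinomial probability with theta = mu / sum(mu)
p_mult <- dmultinom(y, prob = mu / sum(mu))

# A Poisson log-linear model with a constant term reproduces the total n
cells <- expand.grid(A = factor(1:2), B = factor(1:2), C = factor(1:2))
cells$count <- y
fit <- glm(count ~ A + B + C, family = poisson, data = cells)

c(p_cond = p_cond, p_mult = p_mult)
c(fitted_total = sum(fitted(fit)), n = sum(y))
```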

The number of independent parameters is equal to

$$1 + (J-1) + (K-1) + (L-1) + (J-1)(K-1) + (J-1)(L-1) + (K-1)(L-1) + (J-1)(K-1)(L-1) = JKL.$$

Partial Association Model

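Here, the association between each pair of factors is the same at every level of the third factor, and so

$$E[Y_{jkl}] = n\,\phi_{jk}\,\psi_{jl}\,\omega_{kl} \tag{3}$$

where $\phi_{jk}$, $\psi_{jl}$ and $\omega_{kl}$ are positive terms depending only on the subscripts shown. Unlike the models that follow, these terms cannot in general be taken to be the marginal probabilities $\theta_{jk\cdot}$, $\theta_{j\cdot l}$ and $\theta_{\cdot kl}$, and the fitted values have no closed form. Taking the logarithm of (3) yields

$$\log E[Y_{jkl}] = \log n + \log\phi_{jk} + \log\psi_{jl} + \log\omega_{kl}$$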

which corresponds to

$$\eta_{jkl} = \mu + \alpha_j + \beta_k + \gamma_l + (\alpha\beta)_{jk} + (\alpha\gamma)_{jl} + (\beta\gamma)_{kl} \tag{4}$$

This has $JKL - (J-1)(K-1)(L-1)$ independent parameters.
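Model (4) generally has no closed-form fitted values, so it is fitted iteratively. As a sketch, R's `loglin()` (iterative proportional fitting of the three two-way margins) and a Poisson `glm()` should give the same deviance on the data of Section 9.6. The tolerance settings `eps` and `iter` are our own choices, not values from the notes.

```r
health <- data.frame(count  = c(176, 293, 197, 23, 3, 4, 17, 2),
                     clinic = rep(c("A", "A", "B", "B"), 2),
                     care   = rep(c("L", "H"), 4),
                     surv   = rep(c("S", "D"), each = 4))
y <- xtabs(count ~ clinic + care + surv, data = health)

# Model (4) as a Poisson GLM: main effects plus all two-factor interactions
fit_glm <- glm(count ~ (clinic + care + surv)^2, family = poisson, data = health)

# The same model by iterative proportional fitting of the AB, AC and BC margins
fit_ipf <- loglin(y, margin = list(c(1, 2), c(1, 3), c(2, 3)),
                  fit = TRUE, eps = 1e-8, iter = 1000, print = FALSE)

c(glm_deviance = deviance(fit_glm), ipf_lrt = fit_ipf$lrt)
# Residual df: (J-1)(K-1)(L-1) = 1 for a 2 x 2 x 2 table
c(glm_df = df.residual(fit_glm), ipf_df = fit_ipf$df)
```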

Conditional Independence Model

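Suppose, for concreteness, that given the level of the first variable/factor, the other two are independent. Then $\theta_{jkl}/\theta_{j\cdot\cdot} = (\theta_{jk\cdot}/\theta_{j\cdot\cdot})(\theta_{j\cdot l}/\theta_{j\cdot\cdot})$, i.e. $\theta_{jkl} = \theta_{jk\cdot}\,\theta_{j\cdot l}/\theta_{j\cdot\cdot}$. In this case, the expectation of the cell counts is given by

$$E[Y_{jkl}] = \frac{n\,\theta_{jk\cdot}\,\theta_{j\cdot l}}{\theta_{j\cdot\cdot}} \tag{5}$$

Upon taking the logarithm of (5), we obtain

$$\log E[Y_{jkl}] = \log n + \log\theta_{jk\cdot} + \log\theta_{j\cdot l} - \log\theta_{j\cdot\cdot}$$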

This corresponds to the log-linear model

$$\eta_{jkl} = \mu + \alpha_j + \beta_k + \gamma_l + (\alpha\beta)_{jk} + (\alpha\gamma)_{jl} \tag{6}$$

This has

$$1 + (J-1) + (K-1) + (L-1) + (J-1)(K-1) + (J-1)(L-1) = J(K + L - 1)$$

independent parameters.
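Model (6) has closed-form fitted values: the maximum likelihood fitted counts are $\hat m_{jkl} = y_{jk\cdot}\,y_{j\cdot l}/y_{j\cdot\cdot}$. The R sketch below (using the Section 9.6 data, with clinic playing the role of the first factor) compares them with the fitted values of a Poisson `glm()`.

```r
health <- data.frame(count  = c(176, 293, 197, 23, 3, 4, 17, 2),
                     clinic = rep(c("A", "A", "B", "B"), 2),
                     care   = rep(c("L", "H"), 4),
                     surv   = rep(c("S", "D"), each = 4))
y <- xtabs(count ~ clinic + care + surv, data = health)

# Closed form: m_jkl = y_{jk.} * y_{j.l} / y_{j..}
y_jk <- apply(y, c(1, 2), sum)
y_jl <- apply(y, c(1, 3), sum)
y_j  <- apply(y, 1, sum)
m_closed <- array(0, dim = dim(y), dimnames = dimnames(y))
for (j in seq_len(dim(y)[1])) {
  m_closed[j, , ] <- outer(y_jk[j, ], y_jl[j, ]) / y_j[j]
}

# Model (6) as a Poisson GLM, fitted values rearranged into the same array layout
fit   <- glm(count ~ clinic * care + clinic * surv, family = poisson, data = health)
m_glm <- xtabs(fitted(fit) ~ clinic + care + surv, data = health)

round(m_closed, 3)
```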

One variable independent of the other two variables

Suppose that the first variable is independent of the other two.

Clearly $\theta_{jkl} = \theta_{j\cdot\cdot}\,\theta_{\cdot kl}$, which implies that

$$E[Y_{jkl}] = n\,\theta_{j\cdot\cdot}\,\theta_{\cdot kl} \tag{7}$$

The logarithm of this expression is given by

$$\log E[Y_{jkl}] = \log n + \log\theta_{j\cdot\cdot} + \log\theta_{\cdot kl}$$

The corresponding log-linear model is

$$\eta_{jkl} = \mu + \alpha_j + \beta_k + \gamma_l + (\beta\gamma)_{kl} \tag{8}$$

This has

$$1 + (J-1) + (K-1) + (L-1) + (K-1)(L-1) = J + KL - 1$$

independent parameters.
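Here too the fitted values have a closed form, $\hat m_{jkl} = y_{j\cdot\cdot}\,y_{\cdot kl}/n$. A short R check on the Section 9.6 data, again taking clinic as the first factor (an arbitrary choice for illustration):

```r
health <- data.frame(count  = c(176, 293, 197, 23, 3, 4, 17, 2),
                     clinic = rep(c("A", "A", "B", "B"), 2),
                     care   = rep(c("L", "H"), 4),
                     surv   = rep(c("S", "D"), each = 4))
y <- xtabs(count ~ clinic + care + surv, data = health)

# Closed form: m_jkl = y_{j..} * y_{.kl} / n (outer() returns a J x K x L array)
m_closed <- outer(apply(y, 1, sum), apply(y, c(2, 3), sum)) / sum(y)

# Model (8) as a Poisson GLM
fit   <- glm(count ~ clinic + care * surv, family = poisson, data = health)
m_glm <- xtabs(fitted(fit) ~ clinic + care + surv, data = health)

round(m_closed, 3)
```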

Complete Independence

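Clearly $\theta_{jkl} = \theta_{j\cdot\cdot}\,\theta_{\cdot k\cdot}\,\theta_{\cdot\cdot l}$, and so

$$E[Y_{jkl}] = n\,\theta_{j\cdot\cdot}\,\theta_{\cdot k\cdot}\,\theta_{\cdot\cdot l} \tag{9}$$

This implies that

$$\log E[Y_{jkl}] = \log n + \log\theta_{j\cdot\cdot} + \log\theta_{\cdot k\cdot} + \log\theta_{\cdot\cdot l}$$

and so the corresponding log-linear model is

$$\eta_{jkl} = \mu + \alpha_j + \beta_k + \gamma_l \tag{10}$$

with

$$1 + (J-1) + (K-1) + (L-1) = J + K + L - 2$$

independent parameters.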
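Under complete independence the fitted values are $\hat m_{jkl} = y_{j\cdot\cdot}\,y_{\cdot k\cdot}\,y_{\cdot\cdot l}/n^2$, so the deviance can be computed without any iterative fitting. A minimal R sketch on the Section 9.6 data; it should reproduce the residual deviance 211.482 reported there.

```r
health <- data.frame(count  = c(176, 293, 197, 23, 3, 4, 17, 2),
                     clinic = rep(c("A", "A", "B", "B"), 2),
                     care   = rep(c("L", "H"), 4),
                     surv   = rep(c("S", "D"), each = 4))
y <- xtabs(count ~ clinic + care + surv, data = health)
n <- sum(y)

# Closed form: m_jkl = y_{j..} * y_{.k.} * y_{..l} / n^2
m_closed <- outer(outer(apply(y, 1, sum), apply(y, 2, sum)), apply(y, 3, sum)) / n^2

# Deviance 2 * sum(y * log(y / m)): there are no zero counts here, and the
# sum(y - m) term vanishes because the fitted total equals n
G2 <- 2 * sum(y * log(y / m_closed))

fit <- glm(count ~ clinic + care + surv, family = poisson, data = health)
c(closed_form = G2, glm = deviance(fit))
```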

Not every log-linear model will have an interpretation as obvious as those of the models examined in this section.
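The parameter counts quoted in this section can be checked by computing the rank of each model's design matrix, which does not depend on the choice of contrasts. The R sketch below uses hypothetical numbers of levels $J=2$, $K=3$, $L=4$, chosen so that the formulas give distinct values; the model labels are our own.

```r
J <- 2; K <- 3; L <- 4   # hypothetical numbers of levels
grid <- expand.grid(A = factor(seq_len(J)), B = factor(seq_len(K)), C = factor(seq_len(L)))

# Number of independent parameters = rank of the model matrix
n_par <- function(formula) qr(model.matrix(formula, data = grid))$rank

counts <- c(
  saturated     = n_par(~ A * B * C),        # model (2)
  partial_assoc = n_par(~ (A + B + C)^2),    # model (4)
  cond_indep    = n_par(~ A * B + A * C),    # model (6)
  A_indep_BC    = n_par(~ A + B * C),        # model (8)
  complete      = n_par(~ A + B + C)         # model (10)
)
formulas <- c(
  saturated     = J * K * L,
  partial_assoc = J * K * L - (J - 1) * (K - 1) * (L - 1),
  cond_indep    = J * (K + L - 1),
  A_indep_BC    = J + K * L - 1,
  complete      = J + K + L - 2
)
cbind(counts, formulas)
```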

9.4 Two Response Variables + One Explanatory Variable

Let us suppose, for concreteness, that the margin corresponding to C is fixed, i.e. the layer totals $\{y_{\cdot\cdot l}\}$ are fixed by design. Then the appropriate distribution for the realizations of the table is the product multinomial distribution:

$$f(\mathbf{y};\boldsymbol{\theta}) = \prod_{l=1}^{L} y_{\cdot\cdot l}!\prod_{j=1}^{J}\prod_{k=1}^{K}\frac{\theta_{jkl}^{\,y_{jkl}}}{y_{jkl}!}$$

where $\sum_{j=1}^{J}\sum_{k=1}^{K}\theta_{jkl} = 1$ for $l = 1,\dots,L$.

The expected values of the cell counts take the form

$$E[Y_{jkl}] = y_{\cdot\cdot l}\,\theta_{jkl} \tag{11}$$

Taking the logarithm of this expression yields

$$\log E[Y_{jkl}] = \log y_{\cdot\cdot l} + \log\theta_{jkl}$$

Thus the corresponding log-linear model, which is also the saturated model, is:

$$\eta_{jkl} = \mu + \alpha_j + \beta_k + \gamma_l + (\alpha\beta)_{jk} + (\alpha\gamma)_{jl} + (\beta\gamma)_{kl} + (\alpha\beta\gamma)_{jkl} \tag{12}$$

with $JKL$ independent parameters. The term $\log y_{\cdot\cdot l}$ necessitates the inclusion of the terms $\mu + \gamma_l$.

Independence of Responses at each level of the explanatory variable

Expectation:

$$E[Y_{jkl}] = y_{\cdot\cdot l}\,\theta_{j\cdot l}\,\theta_{\cdot kl} \tag{13}$$

log-linear model:

$$\eta_{jkl} = \mu + \alpha_j + \beta_k + \gamma_l + (\alpha\gamma)_{jl} + (\beta\gamma)_{kl} \tag{14}$$

No. of Independent Parameters: $L(J + K - 1)$

Homogeneity Model

Here the joint distribution of the two response variables is the same at each level of the explanatory variable.

Expectation:

$$E[Y_{jkl}] = y_{\cdot\cdot l}\,\theta_{jk\cdot} \tag{15}$$

log-linear model:

$$\eta_{jkl} = \mu + \alpha_j + \beta_k + \gamma_l + (\alpha\beta)_{jk} \tag{16}$$

No. of Independent Parameters: $JK + L - 1$
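With the layer totals fixed, any Poisson log-linear model containing $\mu + \gamma_l$ reproduces them exactly, because the likelihood equations set each fitted margin corresponding to an included term equal to the observed margin. The R sketch below treats clinic as if its totals had been fixed by design (a hypothetical choice, since Section 9.6 fixes only the sample size), with care and surv as the two responses, and fits models (14) and (16).

```r
health <- data.frame(count  = c(176, 293, 197, 23, 3, 4, 17, 2),
                     clinic = rep(c("A", "A", "B", "B"), 2),
                     care   = rep(c("L", "H"), 4),
                     surv   = rep(c("S", "D"), each = 4))

# Models (14) and (16) with A = care, B = surv, C = clinic
fit14 <- glm(count ~ care * clinic + surv * clinic, family = poisson, data = health)
fit16 <- glm(count ~ care * surv + clinic, family = poisson, data = health)

# Observed and fitted layer totals y_{..l}
obs_layer   <- tapply(health$count, health$clinic, sum)
fit14_layer <- tapply(fitted(fit14), health$clinic, sum)
fit16_layer <- tapply(fitted(fit16), health$clinic, sum)
rbind(observed = obs_layer, model14 = fit14_layer, model16 = fit16_layer)

# Number of parameters: L(J + K - 1) = 6 and JK + L - 1 = 5 when J = K = L = 2
c(model14 = length(coef(fit14)), model16 = length(coef(fit16)))
```

Note that, with these role assignments, model (14) is the same model as the one endorsed in Section 9.6.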

9.5 One Response Variable + Two Explanatory Variables

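Suppose, for concreteness, that the first variable, A, is a response, with the other two being explanatory variables, so that the totals $\{y_{\cdot kl}\}$ are fixed by design. Then the appropriate distribution is the product multinomial distribution

$$f(\mathbf{y};\boldsymbol{\theta}) = \prod_{k=1}^{K}\prod_{l=1}^{L} y_{\cdot kl}!\prod_{j=1}^{J}\frac{\theta_{jkl}^{\,y_{jkl}}}{y_{jkl}!}$$

where $\sum_{j=1}^{J}\theta_{jkl} = 1$ for each $k = 1,\dots,K$ and $l = 1,\dots,L$.

The expectations of the cell counts take the form

$$E[Y_{jkl}] = y_{\cdot kl}\,\theta_{jkl}$$

Taking the logarithm of this equation yields

$$\log E[Y_{jkl}] = \log y_{\cdot kl} + \log\theta_{jkl}$$

This corresponds to the (saturated) log-linear model

$$\eta_{jkl} = \mu + \alpha_j + \beta_k + \gamma_l + (\alpha\beta)_{jk} + (\alpha\gamma)_{jl} + (\beta\gamma)_{kl} + (\alpha\beta\gamma)_{jkl} \tag{17}$$

The term $\log y_{\cdot kl}$ necessitates the inclusion of the terms $\mu + \beta_k + \gamma_l + (\beta\gamma)_{kl}$.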

Same distribution for all columns of each subtable¹

Expectation:

$$E[Y_{jkl}] = y_{\cdot kl}\,\theta_{j\cdot l} \tag{18}$$

log-linear model:

$$\eta_{jkl} = \mu + \alpha_j + \beta_k + \gamma_l + (\alpha\gamma)_{jl} + (\beta\gamma)_{kl} \tag{19}$$

No. of Independent Parameters: $L(J + K - 1)$

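Same distribution for all columns of every subtable (i.e. the same for all columns and all layers)

Expectation:

$$E[Y_{jkl}] = y_{\cdot kl}\,\theta_{j\cdot\cdot} \tag{20}$$

log-linear model:

$$\eta_{jkl} = \mu + \alpha_j + \beta_k + \gamma_l + (\beta\gamma)_{kl} \tag{21}$$

No. of Independent Parameters: $KL + J - 1$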
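Similarly, when the margins $y_{\cdot kl}$ are fixed, the terms $\mu + \beta_k + \gamma_l + (\beta\gamma)_{kl}$ make the fitted margins equal the observed ones, and model (21) has the closed form $\hat m_{jkl} = y_{\cdot kl}\,y_{j\cdot\cdot}/n$. The R sketch below hypothetically treats surv as the response A, with care and clinic as the explanatory variables B and C.

```r
health <- data.frame(count  = c(176, 293, 197, 23, 3, 4, 17, 2),
                     clinic = rep(c("A", "A", "B", "B"), 2),
                     care   = rep(c("L", "H"), 4),
                     surv   = rep(c("S", "D"), each = 4))

# Models (19) and (21) with A = surv, B = care, C = clinic
fit19 <- glm(count ~ surv * clinic + care * clinic, family = poisson, data = health)
fit21 <- glm(count ~ surv + care * clinic, family = poisson, data = health)

# Fixed margins y_{.kl}: observed and fitted (care x clinic) totals under (21)
obs_kl   <- xtabs(count ~ care + clinic, data = health)
fit21_kl <- xtabs(fitted(fit21) ~ care + clinic, data = health)

# Closed form for (21): m_jkl = y_{.kl} * y_{j..} / n, as a surv x care x clinic array
y_j       <- xtabs(count ~ surv, data = health)
m21       <- outer(y_j, obs_kl) / sum(health$count)
fit21_arr <- xtabs(fitted(fit21) ~ surv + care + clinic, data = health)

# Number of parameters: L(J + K - 1) = 6 and KL + J - 1 = 5 when J = K = L = 2
c(model19 = length(coef(fit19)), model21 = length(coef(fit21)))
```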

• For the sake of exposition, we have designated particular variables as either response or explanatory. These roles can often be permuted, with a corresponding change in the log-linear model. Once you understand the basic principle for deriving these expressions, such changes and extensions should not pose any problem.

• In deciding which model to endorse out of a number of candidates that fit the data adequately, we should try to adhere to the principle (or law) of parsimony: go for the simplest adequate model. It is considered preferable to endorse a simpler model that describes the data adequately rather than a more complicated one that leaves very little of the variability unexplained.

¹ Or layer.

9.6 An Example

Consider the data of Bishop (1969), Table 2 of Chapter 8. Let us assume that only the sample size, 715, has been fixed by design. We shall try to identify whether there is any association between the three response variables, which we label clinic, care and surv.

The data can be read in as follows:

```
> count <- c(176, 293, 197, 23, 3, 4, 17, 2)
> clinic <- rep(c("A", "A", "B", "B"), 2)
> care <- rep(c("L", "H"), 4)
> surv <- c(rep("S", 4), rep("D", 4))
> health <- data.frame(count, clinic, care, surv)
> health
  count clinic care surv
1   176      A    L    S
2   293      A    H    S
3   197      B    L    S
4    23      B    H    S
5     3      A    L    D
6     4      A    H    D
7    17      B    L    D
8     2      B    H    D
```

The code (with abbreviated output) for testing mutual independence of the three variables is given below.

```
> health.glm <- glm(count ~ clinic + care + surv, data = health, family = poisson)
> summary(health.glm)

Call: glm(formula = count ~ clinic + care + surv, family = poisson, data = health)
Deviance Residuals:
        1       2        3         4         5         6        7         8
-5.071824 5.65378 5.781575 -9.599746 -2.470493 -1.500944 4.325865 -1.068839

Coefficients:
                  Value Std. Error   t value
(Intercept)  3.44724027 0.10046682 34.312228
     clinic -0.34447476 0.03959526 -8.699898
       care  0.09962809 0.03755684  2.652729
       surv  1.63855973 0.09954335 16.460766

(Dispersion Parameter for Poisson family taken to be 1 )

    Null Deviance: 1066.428 on 7 degrees of freedom
Residual Deviance: 211.482 on 4 degrees of freedom
```

The (scaled) deviance is 211.482 on 4 degrees of freedom; this is highly significant (the 5% point of $\chi^2_4$ is 9.49), and so we reject the hypothesis of mutual independence of the three response variables.
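To make the significance judgement explicit, the deviance can be referred to its approximate $\chi^2_4$ distribution. A minimal R sketch using the values printed above:

```r
dev <- 211.482   # residual deviance of the mutual independence model
df  <- 4         # residual degrees of freedom
p_value  <- pchisq(dev, df, lower.tail = FALSE)
critical <- qchisq(0.95, df)   # 5% critical value of chi-squared on 4 df
c(p_value = p_value, critical = critical)
```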

Adding terms until we find a model that fits the data adequately leads to the following:

```
> health.glm <- glm(count ~ clinic + care + surv + clinic:care + clinic:surv, data = health,
+   family = poisson)
> summary(health.glm)

Call: glm(formula = count ~ clinic + care + surv + clinic:care + clinic:surv, family = poisson,
  data = health)
Deviance Residuals:
          1          2            3           4       5          6            7           8
-0.02769317 0.02148716 0.0008943334 -0.00261686 0.22161 -0.1784756 -0.003043631 0.008894454

Coefficients:
                 Value Std. Error   t value
(Intercept)  3.1541966 0.12006527 26.270682
     clinic -0.1692010 0.12006527 -1.409242
       care  0.4101885 0.05789349  7.085227
       surv  1.6634703 0.11240663 14.798685
clinic:care  0.6633616 0.05789349 11.458312
clinic:surv -0.4388760 0.11240663 -3.904361

(Dispersion Parameter for Poisson family taken to be 1 )

    Null Deviance: 1066.428 on 7 degrees of freedom
Residual Deviance: 0.0822892 on 2 degrees of freedom
```

The deviance of 0.0822892 on 2 degrees of freedom is not significant, and so we endorse this model. (The other models containing all main effects and two of the three two-factor interactions were not found to fit adequately, and neither were the smaller models.) This corresponds to the conditional independence model (6): given the clinic, survival and the standard of antenatal care are independent.
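The comparison described in parentheses above can be reproduced with a short R sketch that fits the three models containing all main effects and two of the three two-factor interactions, and reports each deviance with its $\chi^2_2$ p-value. The list names are our own labels.

```r
health <- data.frame(count  = c(176, 293, 197, 23, 3, 4, 17, 2),
                     clinic = rep(c("A", "A", "B", "B"), 2),
                     care   = rep(c("L", "H"), 4),
                     surv   = rep(c("S", "D"), each = 4))

candidates <- list(
  "clinic:care + clinic:surv" = count ~ clinic * care + clinic * surv,
  "clinic:care + care:surv"   = count ~ clinic * care + care * surv,
  "clinic:surv + care:surv"   = count ~ clinic * surv + care * surv
)
fits <- lapply(candidates, function(f) glm(f, family = poisson, data = health))

dev <- sapply(fits, deviance)
df  <- sapply(fits, df.residual)
p   <- pchisq(dev, df, lower.tail = FALSE)
round(cbind(deviance = dev, df = df, p = p), 4)
```

Only the first model, the one endorsed above, should have a large p-value.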

The estimates for the parameters in the linear predictor, under the corner-point constraints,

can be obtained as follows.

```
> options(contrasts = c("contr.treatment", "contr.poly"))
> health.glm <- glm(count ~ clinic + care + surv + clinic:care + clinic:surv, data = health,
+   family = poisson)
> dummy.coef(health.glm)
$"(Intercept)":
(Intercept)
   1.474224

$clinic:
 A          B
 0 -0.7873732

$care:
 H          L
 0 -0.5063463

$surv:
 D        S
 0 4.204693

$"clinic:care":
 AH BH AL       BL
  0  0  0 2.653447

$"clinic:surv":
 AD BD AS        BS
  0  0  0 -1.755504
```
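Because model (6) has closed-form fitted values $\hat m_{jkl} = y_{jk\cdot}\,y_{j\cdot l}/y_{j\cdot\cdot}$, the corner-point estimates above are simply contrasts of $\log\hat m_{jkl}$ with the reference cell (clinic A, care H, surv D). A sketch in R, which also compares the result with the coefficients of a `glm()` fit under R's default treatment contrasts (the corner-point parametrisation); the helper `log_m` is hypothetical.

```r
health <- data.frame(count  = c(176, 293, 197, 23, 3, 4, 17, 2),
                     clinic = rep(c("A", "A", "B", "B"), 2),
                     care   = rep(c("L", "H"), 4),
                     surv   = rep(c("S", "D"), each = 4))
y <- xtabs(count ~ clinic + care + surv, data = health)
y_jk <- apply(y, c(1, 2), sum)
y_jl <- apply(y, c(1, 3), sum)
y_j  <- apply(y, 1, sum)

# Log of the closed-form fitted value for cell (j, k, l)
log_m <- function(j, k, l) log(y_jk[j, k] * y_jl[j, l] / y_j[[j]])

# Corner-point estimates as contrasts with the reference cell (A, H, D)
est <- c(
  "(Intercept)"   = log_m("A", "H", "D"),
  "clinicB"       = log_m("B", "H", "D") - log_m("A", "H", "D"),
  "careL"         = log_m("A", "L", "D") - log_m("A", "H", "D"),
  "survS"         = log_m("A", "H", "S") - log_m("A", "H", "D"),
  "clinicB:careL" = log_m("B", "L", "D") - log_m("B", "H", "D") -
                    log_m("A", "L", "D") + log_m("A", "H", "D"),
  "clinicB:survS" = log_m("B", "H", "S") - log_m("B", "H", "D") -
                    log_m("A", "H", "S") + log_m("A", "H", "D")
)

fit <- glm(count ~ clinic + care + surv + clinic:care + clinic:surv,
           family = poisson, data = health)
round(cbind(closed_form = est, glm = coef(fit)), 6)
```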