1 Gaussian Elimination


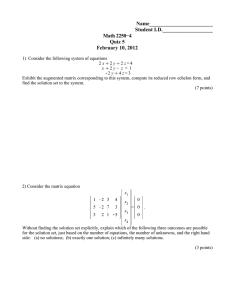

Math1300:MainPage/GaussianElimination

Contents

- 1 Gaussian Elimination
  - 1.1 Elementary Row Operations
  - 1.2 Some matrices whose associated system of equations are easy to solve
  - 1.3 Gaussian Elimination
  - 1.4 Gauss-Jordan reduction and the Reduced Row Echelon Form
  - 1.5 Homogeneous equations
    - 1.5.1 Theorem (Homogeneous equations)

1.1 Elementary Row Operations

Gaussian elimination is an algorithm (a well-defined procedure for computation that eventually completes) that finds all solutions to any system of linear equations. It is defined via certain operations carried out on the augmented matrix. These operations change the matrix (and hence the system of linear equations associated with it), but they leave the set of solutions unchanged. There are three of them, which we now describe.

1. Interchanging two rows

Exchanging two rows in the augmented matrix is the same as writing the equations in a different order. The equations themselves are unchanged, as is the set of all solutions. When we interchange row $i$ and row $j$, we denote it by $R_i \leftrightarrow R_j$.

Example: starting from a system and its augmented matrix, we interchange row 1 and row 3 ($R_1 \leftrightarrow R_3$). The new augmented matrix corresponds to the same equations, listed in a different order.

2. Multiplying a row by a nonzero constant

If we have a linear equation $a_1x_1 + a_2x_2 + \cdots + a_nx_n = b$ and multiply both sides of the equation by a nonzero constant $\lambda$ (the Greek letter lambda is the traditional name for the constant), then the linear equation becomes $\lambda(a_1x_1 + a_2x_2 + \cdots + a_nx_n) = \lambda b$, and so $\lambda a_1x_1 + \lambda a_2x_2 + \cdots + \lambda a_nx_n = \lambda b$. This would apply to any equation in a system, of course, so when we apply this operation to row $i$ we denote it by $R_i \leftarrow \lambda R_i$ (the symbol $\leftarrow$ means "is replaced by"). As long as $\lambda$ is nonzero, this operation leaves the set of solutions unchanged: every solution of the original equation satisfies the new one, and multiplying the new equation by $1/\lambda$ recovers the original.

Example: multiplying one row of an augmented matrix by a nonzero constant multiplies every term of the corresponding equation, coefficients and constant alike, by that constant; the other equations are untouched.
3. Adding a multiple of one row to another

Recall that one of our basic techniques for solving a system of equations is to pick a variable, make the coefficients of that variable equal in two equations, and then take the difference of the equations to create a new one with the variable eliminated. In terms of the associated augmented matrix, this means we get a new row formed by subtracting the corresponding entries in the rows of the two equations. In other words, we replace one row by the difference of two rows. We use the notation $R_i \leftarrow R_i - R_j$ to indicate that row $i$ is replaced by the difference of row $i$ and row $j$. The set of solutions is unchanged. We have already seen that multiplying a row by a nonzero constant leaves the set of solutions unchanged; it is often convenient to combine these two steps as one: $R_i \leftarrow R_i + \lambda R_j$, with $i \neq j$. Note that $R_i \leftarrow R_i - R_j$ is the case $\lambda = -1$, that is, subtracting one row from another.

Example (eliminating a variable from the second equation): starting from a system and its augmented matrix, we replace row 2 by the sum of row 2 and twice row 1 ($R_2 \leftarrow R_2 + 2R_1$). The new second row corresponds to an equation in which that variable no longer appears.

In summary, here is a table of the three elementary row operations:

Table of Elementary Row Operations

| Elementary operation | Notation |
|---|---|
| Interchange rows $i$ and $j$ | $R_i \leftrightarrow R_j$ |
| Multiply row $i$ by a constant $\lambda$ | $R_i \leftarrow \lambda R_i$, $\lambda \neq 0$ |
| Add a multiple of row $j$ to row $i$ | $R_i \leftarrow R_i + \lambda R_j$ |

Each operation changes the matrix and the associated system of linear equations, but it leaves the set of solutions unchanged.

1.2 Some matrices whose associated system of equations are easy to solve

The elementary row operations allow us to change matrices and their associated systems of linear equations without changing the solutions of those equations. The goal is to end up with matrices that make these common solutions obvious. Here are some examples.

1. An augmented matrix such as $\left[\begin{array}{ccc|c} 1 & 0 & 0 & c_1 \\ 0 & 1 & 0 & c_2 \\ 0 & 0 & 1 & c_3 \end{array}\right]$ corresponds to the system of linear equations $x_1 = c_1$, $x_2 = c_2$, $x_3 = c_3$. The equations themselves actually describe the unique solution! Notice the structure of the coefficient matrix that makes this possible.
There is only one nonzero entry in each row of the coefficient matrix, its value is 1, and as you proceed down from one row to the next, the nonzero entry moves one column to the right. When the first nonzero entry of a row is 1, it is called a leading one.

2. In the second example, the augmented matrix corresponds to a system whose last equation involves only one variable. The last row is easy to solve, and gives the value of that variable. Using this value, it is also easy to solve the remaining equation.

3. In the third example, the last row again gives the value of one variable directly. We still have two variables undetermined: we assign a parameter to the second one. Using this value, we can solve for the remaining variable in terms of the parameter, so every variable is now known in terms of the parameter. We can check this result by substituting it into the first equation. The compact way of writing this solution is as a single vector whose entries depend on the parameter.

It's clear from these examples that having lots of zeros in the coefficient matrix is helpful for computing the solutions. It's also clear that leading ones can make the computation easier. Our plan is to use elementary row operations to change a given coefficient matrix into one with these properties, and then to describe all the solutions. Here are some observations that will help us:

1. If a column has some nonzero entry, we can always make the top entry nonzero by interchanging rows, if necessary.
2. If the first nonzero entry of row $i$ is $a \neq 0$, we can turn it into a 1 by using $R_i \leftarrow \frac{1}{a}R_i$.
3. If two rows $i$ and $j$ have nonzero entries $a_{ik}$ and $a_{jk}$ in some column $k$, we can turn the entry $a_{jk}$ into a zero using $R_j \leftarrow R_j - \frac{a_{jk}}{a_{ik}}R_i$.

1.3 Gaussian Elimination

The plan is now to start with the augmented matrix and, by using a sequence of elementary row operations, change it to a new matrix where it is easy to identify the solutions of the associated system of linear equations. Since every elementary row operation leaves the solutions unchanged, the solutions of the final system of linear equations will be identical to the solutions of the original one.
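Each step of this plan is one of the three elementary row operations from the table in 1.1. The following is a minimal Python sketch of them, not part of the original notes: the function names `swap_rows`, `scale_row`, `add_multiple` and `satisfies` are hypothetical, rows are 0-based, and exact arithmetic with `fractions.Fraction` is our own choice to avoid floating-point round-off. The usage at the end checks that a known solution of a small made-up system survives each operation.

```python
from fractions import Fraction


def to_fractions(rows):
    """Copy a matrix, converting every entry to an exact Fraction."""
    return [[Fraction(x) for x in row] for row in rows]


def swap_rows(m, i, j):
    """R_i <-> R_j: interchange rows i and j (0-based) in place."""
    m[i], m[j] = m[j], m[i]


def scale_row(m, i, lam):
    """R_i <- lam * R_i in place; lam must be nonzero."""
    if lam == 0:
        raise ValueError("multiplying a row by 0 is not an elementary row operation")
    m[i] = [lam * x for x in m[i]]


def add_multiple(m, i, j, lam):
    """R_i <- R_i + lam * R_j in place, for distinct rows i and j."""
    if i == j:
        raise ValueError("rows i and j must be different")
    m[i] = [a + lam * b for a, b in zip(m[i], m[j])]


def satisfies(m, x):
    """True if the vector x solves every equation of the augmented matrix m."""
    return all(sum(a * v for a, v in zip(row[:-1], x)) == row[-1] for row in m)


# Hypothetical system: x + y = 3, x - y = 1, with solution x = 2, y = 1.
aug = to_fractions([[1, 1, 3], [1, -1, 1]])
sol = [2, 1]
swap_rows(aug, 0, 1)                # R_1 <-> R_2 (indices 0 and 1)
scale_row(aug, 0, Fraction(1, 2))   # R_1 <- (1/2) R_1
add_multiple(aug, 1, 0, -2)         # R_2 <- R_2 - 2 R_1
print(satisfies(aug, sol))          # True: the solution is unchanged
```

The operations mutate the matrix in place, mirroring the "is replaced by" reading of the notation $R_i \leftarrow \cdots$.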
We work on the columns of the matrix from left to right and change the matrix in the following way:

1. Start with the first column. If it has all entries equal to zero, move on to the next column to the right.
2. If the column has nonzero entries, interchange rows, if necessary, to get a nonzero entry on top.
3. For any nonzero entry below the top one, use an elementary row operation to change it to zero.
4. Now consider the part of the matrix below the top row and to the right of the column under consideration: if there are no such rows or columns, stop, since the procedure is finished. Otherwise, carry out the same procedure on the new matrix.

Here are some examples.

The first example is a system of two linear equations in four unknowns. We don't need to exchange rows to make the top entry of the first column nonzero, so we proceed to make all entries below the top one in that column equal to zero. There is, of course, only one such entry, and so, using an operation of the form $R_2 \leftarrow R_2 + \lambda R_1$ with a suitable $\lambda$, we make it zero. We're now done with the first column, so we delete that column and the top row and carry out the same procedure on the remaining matrix. Since it has only one row, there is nothing to do, and since there are no further rows, the procedure stops.

Now we can determine all of the solutions. The first nonzero entry in the first row is in the first column, the column associated with the first variable. The first nonzero entry in the second row, similarly, is associated with another variable. We assign parameters to the other two variables. The second row then expresses its variable in terms of the parameters. Now that we know it, we can use the first row to find the first variable, again in terms of the parameters. In summary, every solution has this form, where the two parameters are any real numbers. Conversely, for any assignment of real numbers to the two parameters, we get a solution to the system of linear equations. It is easy (and worthwhile) to check that substituting these values into the two equations does indeed give a solution.
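The four-step procedure above can be sketched in Python. This is an illustrative implementation under our own assumptions, not the notes' code: the function name `row_echelon` is hypothetical, indices are 0-based, entries are exact `Fraction`s, and the last column of the augmented matrix is treated like any other column. Entries below the top one are cleared with $R_r \leftarrow R_r - \frac{a_{rc}}{a_{tc}}R_t$, as in observation 3 of Section 1.2.

```python
from fractions import Fraction


def row_echelon(rows):
    """Return a row echelon form of an augmented matrix via Gaussian elimination.

    Columns are processed left to right; `top` is the index of the top row of
    the part of the matrix still under consideration.
    """
    m = [[Fraction(x) for x in row] for row in rows]  # exact working copy
    n_rows, n_cols = len(m), len(m[0])
    top = 0
    for col in range(n_cols):
        if top == n_rows:          # no rows left: the procedure is finished
            break
        # Step 1: look for a nonzero entry in this column at or below `top`.
        pivot = next((r for r in range(top, n_rows) if m[r][col] != 0), None)
        if pivot is None:
            continue               # all zero: move on to the next column
        # Step 2: interchange rows so that the nonzero entry is on top.
        m[top], m[pivot] = m[pivot], m[top]
        # Step 3: change every nonzero entry below the top one to zero.
        for r in range(top + 1, n_rows):
            if m[r][col] != 0:
                factor = m[r][col] / m[top][col]
                m[r] = [a - factor * b for a, b in zip(m[r], m[top])]
        # Step 4: repeat on the rows below `top` and the columns right of `col`.
        top += 1
    return m


# Hypothetical system: x + y + z = 6, 2x + y - z = 1, x - y + 2z = 5.
print(row_echelon([[1, 1, 1, 6], [2, 1, -1, 1], [1, -1, 2, 5]]))
```

For this made-up system the result has rows $[1, 1, 1, 6]$, $[0, -1, -3, -11]$ and $[0, 0, 7, 21]$. Working from the bottom row up gives $z = 3$, then $-y - 9 = -11$ so $y = 2$, then $x = 6 - 2 - 3 = 1$.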
Now consider a system of four equations in three unknowns and its corresponding augmented matrix as it is changed by Gaussian elimination.

The last row, for any choice of values of the unknowns, reduces to $0 = 0$, so any solution of the first three equations will also be a solution of the last one. In other words, the last row of the matrix has no effect on the solution set, and can actually be dropped from the matrix. The third row gives the value of one variable, the second row then gives a second, and the first row gives the remaining one. Hence there is exactly one solution.

Now change the equations from the last example very slightly. The Gaussian elimination is almost identical, but now the last row says that $0$ equals a nonzero number, which can never be true. This means the original system of equations has no solutions, that is, the system is inconsistent.

We can make a useful observation here. If a row of the augmented matrix is of the form $[0 \; 0 \; \cdots \; 0 \mid b]$, where $b$ is either zero or nonzero, then one of two things happens:

1. $b = 0$, in which case the row may be dropped from the matrix;
2. $b \neq 0$, in which case there is no solution.

Notice the pattern of zero and nonzero entries after Gaussian elimination: all entries below and to the left of the first nonzero entry of each row are zero, so these first nonzero entries form a staircase that moves to the right as you go down.

Example: Consider a system of linear equations that contains two constants. We wish to know the values of these constants for which there are no solutions, one solution, or more than one solution. To solve this problem, we apply Gaussian elimination to the augmented matrix. An analysis of the last row tells us everything: if its last coefficient entry is nonzero, then there is exactly one solution; if that entry is zero but the last entry of the row is nonzero, then there are no solutions; otherwise (when both are zero) there are infinitely many solutions.

1.4 Gauss-Jordan reduction and the Reduced Row Echelon Form

Gauss-Jordan reduction is an extension of the Gaussian elimination algorithm.
It produces a matrix, called the reduced row echelon form, in the following way: take the first nonzero entry in any row and change it to a 1 by multiplying the row by the reciprocal of that entry; then use this 1 to change all the entries above it to zero. The resulting matrix has the following properties:

Properties of the reduced row echelon form

1. The first nonzero entry in any row is a 1. It is called a leading one.
2. All entries above and below a leading one are zero.
3. As you go down the rows of the matrix, the leading ones move to the right.
4. Any all-zero rows are at the bottom of the matrix.

For completeness, we'll describe Gauss-Jordan reduction in detail. As with Gaussian elimination, the columns of the matrix are processed from left to right.

1. Start with the first column. If it has all entries equal to zero, move on to the next column to the right.
2. If, to the contrary, the column has nonzero entries, interchange rows, if necessary, to get a nonzero entry on top.
3. Multiply the top row by a constant to change the nonzero entry to a (leading) one.
4. If there is any nonzero entry above or below this leading one, use an elementary row operation to change it to zero.
5. Now consider the part of the matrix below the top row and to the right of the column under consideration: if there are no such rows or columns, stop, since the procedure is finished. Otherwise, carry out the same procedure on the new matrix.

Consider the following example; the last matrix obtained is the reduced row echelon form of the original matrix.
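The detailed five-step procedure can be sketched in Python. As before, this is a minimal illustration under our own assumptions (exact `Fraction` arithmetic, 0-based indices, and the hypothetical function name `rref`), not code from the notes. Because step 4 clears entries both above and below each leading one as soon as it is created, a single left-to-right pass over the columns is enough.

```python
from fractions import Fraction


def rref(rows):
    """Return the reduced row echelon form of a matrix (Gauss-Jordan reduction)."""
    m = [[Fraction(x) for x in row] for row in rows]  # exact working copy
    n_rows, n_cols = len(m), len(m[0])
    top = 0
    for col in range(n_cols):
        if top == n_rows:
            break
        # Steps 1-2: find a nonzero entry at or below `top` and move it on top.
        pivot = next((r for r in range(top, n_rows) if m[r][col] != 0), None)
        if pivot is None:
            continue
        m[top], m[pivot] = m[pivot], m[top]
        # Step 3: R_top <- (1 / a) R_top turns the entry a into a leading one.
        lead = m[top][col]
        m[top] = [x / lead for x in m[top]]
        # Step 4: change every other entry in this column, above and below, to 0.
        for r in range(n_rows):
            if r != top and m[r][col] != 0:
                factor = m[r][col]
                m[r] = [a - factor * b for a, b in zip(m[r], m[top])]
        # Step 5: continue with the rows below and the columns to the right.
        top += 1
    return m


# Hypothetical system: x + y + z = 6, 2x + y - z = 1, x - y + 2z = 5.
print(rref([[1, 1, 1, 6], [2, 1, -1, 1], [1, -1, 2, 5]]))
```

For this made-up system the result has rows $[1, 0, 0, 1]$, $[0, 1, 0, 2]$ and $[0, 0, 1, 3]$, so the unique solution $x = 1$, $y = 2$, $z = 3$ can be read off directly, with no back substitution.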
Here is another example:

Notice the pattern of zeros, leading ones and other nonzero entries after Gauss-Jordan reduction: each leading one is the only nonzero entry in its column.

Now that we can put a matrix in reduced row echelon form, let's see what this implies for finding all solutions to the associated system of linear equations. Remember that the first $n$ columns correspond to the coefficients of the variables $x_1, \ldots, x_n$, and the last column corresponds to the constants on the right side of the equations. Each of the first $n$ columns either contains a leading one or it does not. If it does, the corresponding variable is called constrained; if not, the variable is called free. Each free variable is assigned a parameter, which may take on any value when finding solutions. The values of the constrained variables are then determined by the reduced row echelon form.

As an example, suppose we have a system of linear equations whose augmented matrix has a reduced row echelon form with three rows and six columns. This means that our system has three equations and five unknowns. The leading ones are in columns one, three and five, so $x_1$, $x_3$ and $x_5$ are constrained variables. This leaves $x_2$ and $x_4$ as free variables. We assign parameters to the free variables: $x_2 = s$ and $x_4 = t$. The rows of the matrix then determine the constrained variables:

- $x_5$ from the bottom row,
- $x_3$ from the middle row,
- $x_1$ from the top row.

Notice the role of the zeros above and below each leading one: the evaluation of a constrained variable involves only free variables.

In summary, we can say the following:

1. If the reduced row echelon form has a row of the form $[0 \; 0 \; \cdots \; 0 \mid 1]$, then the system of linear equations has no solution.
2. If the reduced row echelon form has no free variables (and no row of the form above), then it looks like
$$\left[\begin{array}{cccc|c} 1 & 0 & \cdots & 0 & c_1 \\ 0 & 1 & \cdots & 0 & c_2 \\ \vdots & \vdots & \ddots & \vdots & \vdots \\ 0 & 0 & \cdots & 1 & c_n \end{array}\right]$$
(possibly followed by all-zero rows), and there is a unique solution, namely $x_1 = c_1, x_2 = c_2, \ldots, x_n = c_n$.
3. If the reduced row echelon form has free variables (and no row of the form $[0 \; 0 \; \cdots \; 0 \mid 1]$), then there are an infinite number of solutions. Indeed, the parameter assigned to any one free variable can take on an infinite number of values.

Here is a longer example:

Here is an animation of a matrix being put into reduced row echelon form:

1.5 Homogeneous equations

A linear equation $a_1x_1 + a_2x_2 + \cdots + a_nx_n = b$ is called homogeneous if $b = 0$. A system of linear equations is called homogeneous if each of its equations is homogeneous. In other words, the system looks like
$$\begin{aligned} a_{11}x_1 + a_{12}x_2 + \cdots + a_{1n}x_n &= 0 \\ a_{21}x_1 + a_{22}x_2 + \cdots + a_{2n}x_n &= 0 \\ &\;\;\vdots \\ a_{m1}x_1 + a_{m2}x_2 + \cdots + a_{mn}x_n &= 0 \end{aligned}$$
and so the augmented matrix is of the form
$$\left[\begin{array}{cccc|c} a_{11} & a_{12} & \cdots & a_{1n} & 0 \\ a_{21} & a_{22} & \cdots & a_{2n} & 0 \\ \vdots & \vdots & & \vdots & \vdots \\ a_{m1} & a_{m2} & \cdots & a_{mn} & 0 \end{array}\right]$$

The last column is all zero; note that under any elementary row operation it remains all zero. In particular, this means that the reduced row echelon form will never have a row of the form $[0 \; 0 \; \cdots \; 0 \mid 1]$, and so there will always be a solution. This is hardly surprising, since if we set $x_1 = x_2 = \cdots = x_n = 0$, we get what is called the trivial solution. Any other solution is nontrivial.

1.5.1 Theorem (Homogeneous equations)

Suppose a homogeneous system of equations has more unknowns than equations. Then it has an infinite number of solutions.

Proof: Let $m$ be the number of rows and $n$ the number of columns of the coefficient matrix, so the system has $m$ equations and $n > m$ unknowns. Put the augmented matrix in reduced row echelon form. Then the number of leading ones is at most $m$ (since there is at most one leading one per row). In addition, since $n > m$, there is some column corresponding to a variable that has no leading one, and so the variable of that column is free. Since a homogeneous system is always consistent, case 3 above applies, and hence there are an infinite number of solutions.
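The argument in the proof can be checked on small cases with a short Python sketch. It is an illustration under our own assumptions, not the notes' method: the names `rref` and `nontrivial_solution` are hypothetical and arithmetic is exact with `Fraction`. It reduces only the coefficient matrix (the zero last column of the augmented matrix never changes), finds the columns without a leading one, and builds a nontrivial solution by setting one free variable to 1 and the others to 0.

```python
from fractions import Fraction


def rref(rows):
    """Gauss-Jordan reduction: return the reduced row echelon form of a matrix."""
    m = [[Fraction(x) for x in row] for row in rows]
    top = 0
    for col in range(len(m[0])):
        if top == len(m):
            break
        pivot = next((r for r in range(top, len(m)) if m[r][col] != 0), None)
        if pivot is None:
            continue                          # no leading one in this column
        m[top], m[pivot] = m[pivot], m[top]
        lead = m[top][col]
        m[top] = [x / lead for x in m[top]]   # make a leading one
        for r in range(len(m)):               # clear the rest of the column
            if r != top and m[r][col] != 0:
                factor = m[r][col]
                m[r] = [a - factor * b for a, b in zip(m[r], m[top])]
        top += 1
    return m


def nontrivial_solution(coeffs):
    """Return a nontrivial solution of the homogeneous system A x = 0, or None."""
    n = len(coeffs[0])
    r = rref(coeffs)
    leads = {}                                # column of a leading one -> its row
    for i, row in enumerate(r):
        for j, a in enumerate(row):
            if a != 0:                        # first nonzero entry is a leading one
                leads[j] = i
                break
    free = [j for j in range(n) if j not in leads]
    if not free:
        return None                           # only the trivial solution exists
    x = [Fraction(0)] * n
    x[free[0]] = Fraction(1)                  # one free parameter is 1, the rest 0
    for j, i in leads.items():
        # Row i reads x_j + (sum of r[i][k] * x_k over free k) = 0.
        x[j] = -sum(r[i][k] * x[k] for k in free)
    return x


# Hypothetical system: x1 + 2 x2 - x3 = 0, 2 x1 + 4 x2 + x3 = 0.
print(nontrivial_solution([[1, 2, -1], [2, 4, 1]]))
```

For this made-up system of two equations in three unknowns, the reduced coefficient matrix has rows $[1, 2, 0]$ and $[0, 0, 1]$, so $x_2$ is free and the sketch returns $(-2, 1, 0)$. Every multiple $(-2t, t, 0)$ is also a solution, as the theorem predicts.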