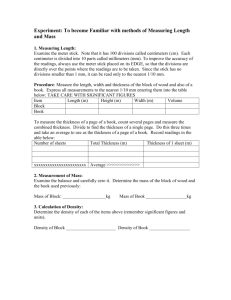

Experimentation and Measurement - National Institute of Standards
