

Lecture

General broadcast channels

(Reading: NIT ., .)

∙ Marton’s inner bound

∙ Gaussian vector broadcast channel

∙ Marton’s inner bound with common message

∙ Nair–El Gamal outer bound

© Copyright – Abbas El Gamal and Young-Han Kim

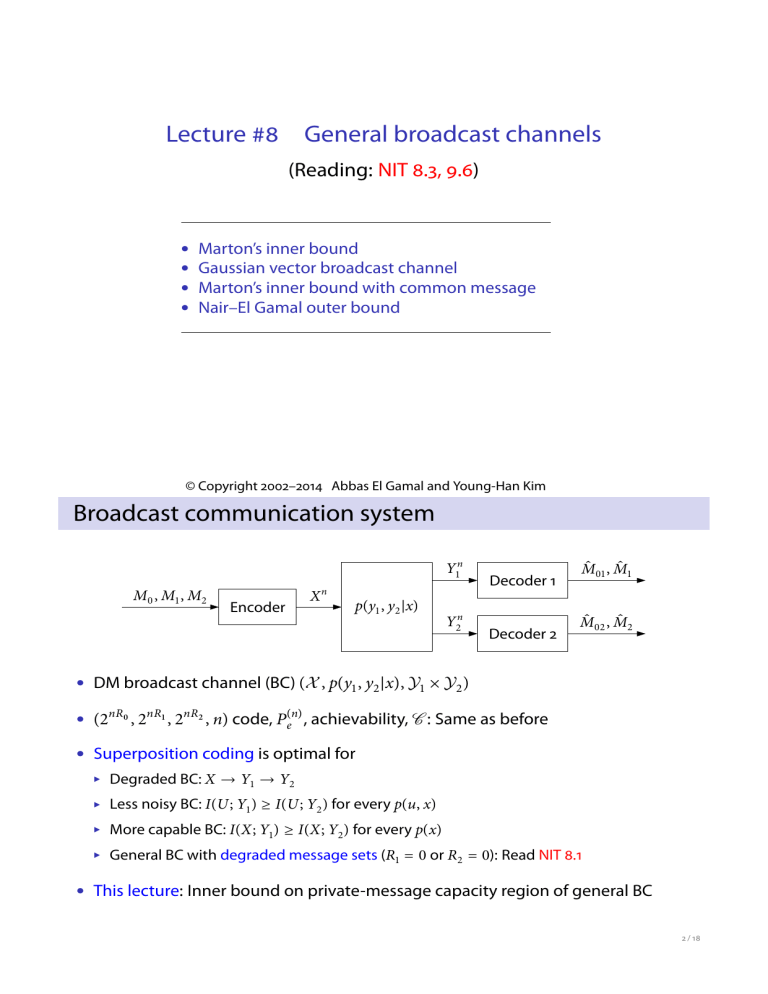

Broadcast communication system

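[Figure: block diagram of the broadcast communication system. $(M_0, M_1, M_2)$ → Encoder → $X^n$ → $p(y_1, y_2|x)$ → $Y_1^n$ → Decoder 1 → $(\hat M_{01}, \hat M_1)$, and $Y_2^n$ → Decoder 2 → $(\hat M_{02}, \hat M_2)$]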

∙ DM broadcast channel (BC) $(\mathcal{X}, p(y_1, y_2|x), \mathcal{Y}_1 \times \mathcal{Y}_2)$

∙ $(2^{nR_0}, 2^{nR_1}, 2^{nR_2}, n)$ code, $P_e^{(n)}$, achievability, capacity region $\mathscr{C}$: Same as before

∙ Superposition coding is optimal for

Degraded BC: $X \to Y_1 \to Y_2$

Less noisy BC: $I(U; Y_1) \ge I(U; Y_2)$ for every $p(u, x)$

More capable BC: $I(X; Y_1) \ge I(X; Y_2)$ for every $p(x)$

General BC with degraded message sets ($R_1 = 0$ or $R_2 = 0$): Read NIT .

∙ This lecture: Inner bound on private-message capacity region of general BC

Marton’s inner bound

∙ A simple inner bound: $(R_1, R_2)$ is achievable for the DM-BC $p(y_1, y_2|x)$ if

$R_1 < I(U_1; Y_1)$,

$R_2 < I(U_2; Y_2)$

for some pmf $p(u_1)p(u_2)$ and function $x(u_1, u_2)$

∙ Marton’s coding scheme: Allows $U_1$ and $U_2$ to be dependent

Theorem (Marton)

$(R_1, R_2)$ is achievable if

$R_1 < I(U_1; Y_1)$,

$R_2 < I(U_2; Y_2)$,

$R_1 + R_2 < I(U_1; Y_1) + I(U_2; Y_2) - I(U_1; U_2)$

for some pmf $p(u_1, u_2)$ and function $x(u_1, u_2)$

∙ Optimal for semideterministic BC ($Y_1 = y_1(X)$) and Gaussian vector BC

Semideterministic BC

∙ Semideterministic BC ($Y_1 = y_1(X)$): Set $U_1 = Y_1$ and write $U = U_2$

The capacity region is the set of $(R_1, R_2)$ such that

$R_1 \le H(Y_1)$,

$R_2 \le I(U; Y_2)$,

$R_1 + R_2 \le H(Y_1|U) + I(U; Y_2)$

for some $p(u, x)$

∙ Deterministic BC ($Y_1 = y_1(X)$, $Y_2 = y_2(X)$): Further set $U_2 = Y_2$

The capacity region is the set of $(R_1, R_2)$ such that

$R_1 \le H(Y_1)$,

$R_2 \le H(Y_2)$,

$R_1 + R_2 \le H(Y_1, Y_2)$

for some $p(x)$
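As a small numerical illustration of the deterministic BC bounds, the following Python sketch evaluates $H(Y_1)$, $H(Y_2)$, and $H(Y_1, Y_2)$ for a given input pmf. The channel mapping used here is an assumption: it is one common way of writing the Blackwell channel (ternary input, binary outputs), and the function names are hypothetical. Entropies are in bits.

```python
import math
from collections import defaultdict


def entropy(pmf):
    """Entropy in bits of a pmf given as a dict {outcome: probability}."""
    return -sum(p * math.log2(p) for p in pmf.values() if p > 0)


def deterministic_bc_bounds(p_x, y1, y2):
    """Return (H(Y1), H(Y2), H(Y1, Y2)) for a deterministic BC and input pmf p_x."""
    p1, p2, p12 = defaultdict(float), defaultdict(float), defaultdict(float)
    for x, p in p_x.items():
        p1[y1(x)] += p
        p2[y2(x)] += p
        p12[(y1(x), y2(x))] += p
    return entropy(p1), entropy(p2), entropy(p12)


# Assumed Blackwell-type mapping: x = 0 -> (0, 0), x = 1 -> (1, 0), x = 2 -> (1, 1).
def blackwell_y1(x):
    return 0 if x == 0 else 1


def blackwell_y2(x):
    return 1 if x == 2 else 0


uniform = {0: 1 / 3, 1: 1 / 3, 2: 1 / 3}
h1, h2, h12 = deterministic_bc_bounds(uniform, blackwell_y1, blackwell_y2)
print(f"R1 <= {h1:.4f}, R2 <= {h2:.4f}, R1 + R2 <= {h12:.4f}")
```

For the uniform input, each output is a binary variable with a 1/3 to 2/3 split, and the output pair takes three equally likely values, so the sum-rate bound is $\log_2 3$ bits. The full region is the union of such pentagons over all $p(x)$.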


Example: Blackwell channel

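[Figure: transition diagram of the Blackwell channel from $X$ to $(Y_1, Y_2)$, and a plot of its capacity region in the $(R_1, R_2)$ plane]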

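Proof of achievability

∙ Use multicoding and the mutual covering lemma

[Figure: the sequences $u_1^n(l_1)$, grouped into subcodebooks $\mathcal{C}_1(1), \ldots, \mathcal{C}_1(2^{nR_1})$ of $2^{n(\tilde R_1 - R_1)}$ sequences each ($2^{n\tilde R_1}$ in total), against the sequences $u_2^n(l_2)$, grouped likewise into $\mathcal{C}_2(1), \ldots, \mathcal{C}_2(2^{nR_2})$ of $2^{n(\tilde R_2 - R_2)}$ sequences each; the jointly typical pairs in $\mathcal{T}_{\epsilon'}^{(n)}(U_1, U_2)$ are marked]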

Proof of achievability

∙ Codebook generation: Fix $p(u_1, u_2)$ and $x(u_1, u_2)$

For each $m_1 \in [1 : 2^{nR_1}]$ generate a subcodebook $\mathcal{C}_1(m_1)$ consisting of $2^{n(\tilde R_1 - R_1)}$ sequences

$u_1^n(l_1) \sim \prod_{i=1}^n p_{U_1}(u_{1i})$, $l_1 \in [(m_1 - 1)2^{n(\tilde R_1 - R_1)} + 1 : m_1 2^{n(\tilde R_1 - R_1)}]$

Similarly, generate $\mathcal{C}_2(m_2)$, $m_2 \in [1 : 2^{nR_2}]$

For each $(m_1, m_2)$, find $(l_1, l_2)$ such that $u_1^n(l_1) \in \mathcal{C}_1(m_1)$, $u_2^n(l_2) \in \mathcal{C}_2(m_2)$, and

$(u_1^n(l_1), u_2^n(l_2)) \in \mathcal{T}_{\epsilon'}^{(n)}$

If no such pair exists, choose $(l_1, l_2) = (1, 1)$

Generate $x^n(m_1, m_2)$ as $x_i(m_1, m_2) = x(u_{1i}(l_1), u_{2i}(l_2))$, $i \in [1 : n]$


∙ Encoding:

To send a message pair $(m_1, m_2)$, transmit $x^n(m_1, m_2)$

∙ Decoding:

Decoder $j = 1, 2$ finds the unique $\hat m_j$ such that $(u_j^n(l_j), y_j^n) \in \mathcal{T}_{\epsilon}^{(n)}$ for some $u_j^n(l_j) \in \mathcal{C}_j(\hat m_j)$
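The codebook generation and encoding steps above can be sketched in Python as follows. This is a toy illustration under stated assumptions, not the asymptotic scheme itself: subcodebook sizes are passed in directly instead of being set to $2^{n(\tilde R_j - R_j)}$, messages and indices are 0-based and local to each subcodebook, joint typicality is checked with the robust typicality condition, and all function names and the mapping `x_of` are hypothetical.

```python
import random


def joint_type(u1, u2):
    """Empirical joint pmf of the symbol pairs (u1[i], u2[i])."""
    n = len(u1)
    counts = {}
    for pair in zip(u1, u2):
        counts[pair] = counts.get(pair, 0) + 1
    return {pair: c / n for pair, c in counts.items()}


def is_jointly_typical(u1, u2, pmf, eps):
    """Check |type(a, b) - p(a, b)| <= eps * p(a, b) for every pair (a, b)."""
    t = joint_type(u1, u2)
    if any(pmf.get(pair, 0.0) == 0.0 for pair in t):
        return False  # a pair of probability zero occurred
    return all(abs(t.get(pair, 0.0) - p) <= eps * p for pair, p in pmf.items())


def marginal(pmf, axis):
    """Marginal pmf of coordinate `axis` (0 or 1) of a joint pmf."""
    out = {}
    for pair, p in pmf.items():
        out[pair[axis]] = out.get(pair[axis], 0.0) + p
    return out


def generate_subcodebooks(num_messages, sub_size, n, pmf_u, rng):
    """One subcodebook per message, each holding sub_size i.i.d. length-n sequences."""
    symbols = list(pmf_u)
    weights = [pmf_u[s] for s in symbols]
    return [[tuple(rng.choices(symbols, weights, k=n)) for _ in range(sub_size)]
            for _ in range(num_messages)]


def encode(m1, m2, book1, book2, pmf, eps, x_of):
    """Search C1(m1) x C2(m2) for a jointly typical pair; return ((l1, l2), x^n, found)."""
    for l1, u1 in enumerate(book1[m1]):
        for l2, u2 in enumerate(book2[m2]):
            if is_jointly_typical(u1, u2, pmf, eps):
                return (l1, l2), tuple(x_of(a, b) for a, b in zip(u1, u2)), True
    u1, u2 = book1[m1][0], book2[m2][0]  # no typical pair: fall back to the first pair
    return (0, 0), tuple(x_of(a, b) for a, b in zip(u1, u2)), False


rng = random.Random(0)
pmf = {(0, 0): 0.4, (0, 1): 0.1, (1, 0): 0.1, (1, 1): 0.4}  # dependent (U1, U2)
n = 20
book1 = generate_subcodebooks(4, 16, n, marginal(pmf, 0), rng)
book2 = generate_subcodebooks(4, 16, n, marginal(pmf, 1), rng)
(l1, l2), xn, found = encode(2, 3, book1, book2, pmf, 0.5, lambda a, b: 2 * a + b)
print(l1, l2, found)
```

The point of the sketch is that each codebook is drawn from its own marginal, independently of the other, and the dependence between $U_1$ and $U_2$ is created only by the search over the product of the two subcodebooks. Larger subcodebooks make the search more likely to succeed, which is what the mutual covering lemma quantifies.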


Analysis of the probability of error

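∙ Consider $P(\mathcal{E})$ conditioned on $(M_1, M_2) = (1, 1)$

∙ Let $(L_1, L_2)$ denote the pair of chosen indices

∙ Error events for decoder 1:

$\mathcal{E}_0 = \{(U_1^n(l_1), U_2^n(l_2)) \notin \mathcal{T}_{\epsilon'}^{(n)} \text{ for all } (U_1^n(l_1), U_2^n(l_2)) \in \mathcal{C}_1(1) \times \mathcal{C}_2(1)\}$,

$\mathcal{E}_{11} = \{(U_1^n(L_1), Y_1^n) \notin \mathcal{T}_{\epsilon}^{(n)}\}$,

$\mathcal{E}_{12} = \{(U_1^n(l_1), Y_1^n) \in \mathcal{T}_{\epsilon}^{(n)}(U_1, Y_1) \text{ for some } l_1 \notin [1 : 2^{n(\tilde R_1 - R_1)}]\}$

Thus, by the union of events bound,

$P(\mathcal{E}_1) \le P(\mathcal{E}_0) + P(\mathcal{E}_0^c \cap \mathcal{E}_{11}) + P(\mathcal{E}_{12})$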

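Mutual covering lemma ($U_0 = \emptyset$)

∙ Let $(U_1, U_2) \sim p(u_1, u_2)$ and $\epsilon' < \epsilon$

∙ For $j = 1, 2$, let $U_j^n(m_j) \sim \prod_{i=1}^n p_{U_j}(u_{ji})$, $m_j \in [1 : 2^{nr_j}]$, be pairwise independent

∙ Assume that $\{U_1^n(m_1)\}$ and $\{U_2^n(m_2)\}$ are independent

[Figure: the sequences $U_1^n(m_1)$ and $U_2^n(m_2)$ laid out along the $U_1^n$ and $U_2^n$ axes]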


Lemma (Mutual covering lemma)

There exists $\delta(\epsilon) \to 0$ as $\epsilon \to 0$ such that

$\lim_{n \to \infty} P\{(U_1^n(m_1), U_2^n(m_2)) \notin \mathcal{T}_{\epsilon}^{(n)} \text{ for all } m_1 \in [1 : 2^{nr_1}],\ m_2 \in [1 : 2^{nr_2}]\} = 0$

if $r_1 + r_2 > I(U_1; U_2) + \delta(\epsilon)$

∙ Proof: See NIT Appendix A

∙ This lemma extends the covering lemma:

For a single $U_1^n$ sequence ($r_1 = 0$), $r_2 > I(U_1; U_2) + \delta(\epsilon)$ as in the covering lemma

Pairwise independence: linear codes for finite field models

Analysis of the probability of error

∙ By the mutual covering lemma (with $r_1 = \tilde R_1 - R_1$ and $r_2 = \tilde R_2 - R_2$),

$P(\mathcal{E}_0) \to 0$ if $(\tilde R_1 - R_1) + (\tilde R_2 - R_2) > I(U_1; U_2) + \delta(\epsilon')$

∙ Since $\mathcal{E}_0^c = \{(U_1^n(L_1), U_2^n(L_2), X^n) \in \mathcal{T}_{\epsilon'}^{(n)}\}$,

by the conditional typicality lemma, $P(\mathcal{E}_0^c \cap \mathcal{E}_{11}) \to 0$

∙ By the packing lemma, $P(\mathcal{E}_{12}) \to 0$ if $\tilde R_1 < I(U_1; Y_1) - \delta(\epsilon)$

∙ Similarly, for decoder 2, $P(\mathcal{E}_2) \to 0$ if $\tilde R_2 < I(U_2; Y_2) - \delta(\epsilon)$

∙ Using Fourier–Motzkin to eliminate $\tilde R_1$ and $\tilde R_2$ completes the proof
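The elimination step can be written out as follows. This sketch drops the $\delta$ terms (they vanish as $\epsilon \to 0$) and makes explicit the constraints $\tilde R_j \ge R_j$, which are implicit in the construction because each subcodebook must contain at least one sequence.

```latex
\begin{align*}
\text{System in } (\tilde R_1, \tilde R_2):\quad
  & R_1 \le \tilde R_1 < I(U_1;Y_1), \qquad
    R_2 \le \tilde R_2 < I(U_2;Y_2), \\
  & \tilde R_1 + \tilde R_2 > R_1 + R_2 + I(U_1;U_2). \\[4pt]
\text{Eliminate } \tilde R_1:\quad
  & R_1 < I(U_1;Y_1), \qquad
    \tilde R_2 > R_1 + R_2 + I(U_1;U_2) - I(U_1;Y_1). \\[4pt]
\text{Eliminate } \tilde R_2:\quad
  & R_2 < I(U_2;Y_2), \qquad
    R_1 + R_2 < I(U_1;Y_1) + I(U_2;Y_2) - I(U_1;U_2).
\end{align*}
```

Each elimination pairs every lower bound on the variable with every upper bound; the three surviving inequalities are the conditions of Marton's inner bound.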


Relationship to Gelfand–Pinsker

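∙ Fix $p(u_1, u_2)$ and $x(u_1, u_2)$

∙ Consider $R_1 = I(U_1; Y_1) - I(U_1; U_2) - \delta(\epsilon)$ and $R_2 = I(U_2; Y_2) - \delta(\epsilon)$

∙ Marton scheme for communicating $M_1$ is equivalent to G–P for $p(y_1|u_1, u_2)p(u_2)$

[Figure: Gelfand–Pinsker view of Marton coding. $U_2^n \sim p(u_2)$ plays the role of the state known at the encoder; $M_1$ → Encoder → $U_1^n$ → $x(u_1, u_2)$ → $X^n$ → $p(y_1|x)$ → $Y_1^n$ → Decoder → $\hat M_1$]

∙ The corner point ($R_1 = I(U_1; Y_1)$, $R_2 = I(U_2; Y_2) - I(U_1; U_2)$) achieved similarly

∙ The rest of the pentagon region is achieved by time sharing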

Application: Gaussian BC

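[Figure: $M_2$ → $M_2$-encoder → $X_2^n$; $M_1$ → $M_1$-encoder, which also sees $X_2^n$, → $X_1^n$; $X^n = X_1^n + X_2^n$ is sent, and $Y_1^n = X^n + Z_1^n$, $Y_2^n = X^n + Z_2^n$]

∙ Decompose $X$ into the sum of independent $X_1 \sim \mathrm{N}(0, \alpha P)$ and $X_2 \sim \mathrm{N}(0, \bar\alpha P)$

∙ Send $M_2$ to $Y_2 = X_2 + X_1 + Z_2$: $R_2 < C(\bar\alpha P/(\alpha P + N_2))$ (treat $X_1$ as noise)

∙ Send $M_1$ to $Y_1 = X_1 + X_2 + Z_1$: $R_1 < C(\alpha P/N_1)$ (writing on dirty paper)

Substitute $U_2 = X_2$ and $U_1 = \beta U_2 + X_1$, $\beta = \alpha P/(\alpha P + N_1)$ in Marton

∙ This coding scheme works even when $N_1 > N_2$ (unlike superposition coding)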
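The two rate expressions above are easy to evaluate numerically. The sketch below assumes the usual Gaussian capacity function $C(x) = \frac{1}{2}\log_2(1 + x)$ (bits per transmission) and writes $\bar\alpha = 1 - \alpha$; the function name `marton_gaussian_bc` is hypothetical.

```python
import math


def C(x):
    """Gaussian capacity function C(x) = (1/2) log2(1 + x), in bits per transmission."""
    return 0.5 * math.log2(1 + x)


def marton_gaussian_bc(P, N1, N2, alpha):
    """Rate pair for power split alpha in [0, 1], plus the dirty paper coefficient beta."""
    if not 0 <= alpha <= 1:
        raise ValueError("alpha must lie in [0, 1]")
    R1 = C(alpha * P / N1)                      # M1: writing on dirty paper, X2 is cancelled
    R2 = C((1 - alpha) * P / (alpha * P + N2))  # M2: X1 is treated as noise
    beta = alpha * P / (alpha * P + N1)         # coefficient in U1 = beta * U2 + X1
    return R1, R2, beta


# Receiver 1 is the noisier one here (N1 > N2); the rate expressions still apply.
for alpha in (0.0, 0.25, 0.5, 0.75, 1.0):
    R1, R2, beta = marton_gaussian_bc(P=10.0, N1=4.0, N2=1.0, alpha=alpha)
    print(f"alpha={alpha:.2f}  R1={R1:.3f}  R2={R2:.3f}  beta={beta:.3f}")
```

Sweeping `alpha` from 0 to 1 traces the boundary of the region achieved by this order of encoding; swapping the roles of the two messages gives the other order.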


Gaussian vector broadcast channel

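[Figure: $(M_1, M_2)$ → Encoder → $X^n$; $Y_1^n$ is $X^n$ passed through $G_1$ plus noise $Z_1^n$, decoded by Decoder 1 into $\hat M_1$; $Y_2^n$ is $X^n$ passed through $G_2$ plus noise $Z_2^n$, decoded by Decoder 2 into $\hat M_2$]

∙ Average power constraint: $\sum_{i=1}^n x^T(m_1, m_2, i)\, x(m_1, m_2, i) \le nP$

∙ $Z_1, Z_2 \sim \mathrm{N}(0, I_r)$

∙ Channel is not degraded in general (superposition coding not optimal)

∙ Marton coding (vector writing on dirty paper) is optimal, however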

Capacity region

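∙ $\mathscr{R}_1$: $(R_1, R_2)$ such that

$R_1 < \frac{1}{2} \log \frac{|G_1 K_1 G_1^T + G_1 K_2 G_1^T + I_r|}{|G_1 K_2 G_1^T + I_r|}$,

$R_2 < \frac{1}{2} \log |G_2 K_2 G_2^T + I_r|$

for some $K_1, K_2 \succeq 0$ with $\mathrm{tr}(K_1 + K_2) \le P$

∙ $\mathscr{R}_2$: $(R_1, R_2)$ such that

$R_1 < \frac{1}{2} \log |G_1 K_1 G_1^T + I_r|$,

$R_2 < \frac{1}{2} \log \frac{|G_2 K_2 G_2^T + G_2 K_1 G_2^T + I_r|}{|G_2 K_1 G_2^T + I_r|}$

for some $K_1, K_2 \succeq 0$ with $\mathrm{tr}(K_1 + K_2) \le P$

[Figure: the regions $\mathscr{R}_1$ and $\mathscr{R}_2$ and the capacity region $\mathscr{C}$ in the $(R_1, R_2)$ plane]

Theorem (Weingarten–Steinberg–Shamai)

$\mathscr{C}$ is the convex closure of $\mathscr{R}_1 \cup \mathscr{R}_2$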
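As a numerical illustration, the following Python sketch evaluates the corner rate pair of the first region for given channel gain matrices and input covariances. It assumes the log-determinant form $R_1 < \frac{1}{2}\log\frac{|G_1 K_1 G_1^T + G_1 K_2 G_1^T + I_r|}{|G_1 K_2 G_1^T + I_r|}$, $R_2 < \frac{1}{2}\log|G_2 K_2 G_2^T + I_r|$ with logarithms to base 2. It uses only the standard library, so the small matrix helpers and the name `rates_region_1` are hypothetical, and positive semidefiniteness of $K_1$, $K_2$ is not checked.

```python
import math


def transpose(A):
    return [list(col) for col in zip(*A)]


def matmul(A, B):
    return [[sum(a * b for a, b in zip(row, col)) for col in zip(*B)] for row in A]


def add(*Ms):
    """Entrywise sum of matrices of equal shape."""
    return [[sum(vals) for vals in zip(*rows)] for rows in zip(*Ms)]


def identity(r):
    return [[1.0 if i == j else 0.0 for j in range(r)] for i in range(r)]


def det(A):
    """Determinant by Gaussian elimination with partial pivoting."""
    M = [row[:] for row in A]
    n, d = len(M), 1.0
    for c in range(n):
        p = max(range(c, n), key=lambda r: abs(M[r][c]))
        if M[p][c] == 0:
            return 0.0
        if p != c:
            M[c], M[p] = M[p], M[c]
            d = -d
        d *= M[c][c]
        for r in range(c + 1, n):
            f = M[r][c] / M[c][c]
            for k in range(c, n):
                M[r][k] -= f * M[c][k]
    return d


def half_log_det(A):
    return 0.5 * math.log2(det(A))


def received_cov(G, K):
    """G K G^T, the covariance of G X when X has covariance K."""
    return matmul(matmul(G, K), transpose(G))


def rates_region_1(G1, G2, K1, K2, P):
    """Corner rate pair (R1, R2) of region 1 for covariances K1, K2 with tr(K1 + K2) <= P."""
    if sum(K1[i][i] + K2[i][i] for i in range(len(K1))) > P:
        raise ValueError("power constraint tr(K1 + K2) <= P violated")
    I = identity(len(G1))
    R1 = (half_log_det(add(received_cov(G1, K1), received_cov(G1, K2), I))
          - half_log_det(add(received_cov(G1, K2), I)))
    R2 = half_log_det(add(received_cov(G2, K2), I))
    return R1, R2


eye2 = identity(2)
K1 = [[1.0, 0.0], [0.0, 0.0]]
K2 = [[0.0, 0.0], [0.0, 3.0]]
print(rates_region_1(eye2, eye2, K1, K2, P=4.0))
```

In this example the two signals occupy orthogonal directions, so neither interferes with the other. The second region is obtained by swapping the roles of the two users.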


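Proof of achievability for $\mathscr{R}_2$

[Figure: $M_2$ → $M_2$-encoder → $X_2^n$; $M_1$ → $M_1$-encoder, which also sees $X_2^n$, → $X_1^n$; $X^n = X_1^n + X_2^n$ is sent, and $Y_1^n = G_1 X^n + Z_1^n$, $Y_2^n = G_2 X^n + Z_2^n$]

∙ Decompose $X$ into the sum of independent $X_1 \sim \mathrm{N}(0, K_1)$ and $X_2 \sim \mathrm{N}(0, K_2)$

∙ Send $M_2$ to $Y_2 = G_2 X_2 + G_2 X_1 + Z_2$: $R_2 < \frac{1}{2} \log \frac{|G_2 K_2 G_2^T + G_2 K_1 G_2^T + I_r|}{|G_2 K_1 G_2^T + I_r|}$

∙ Send $M_1$ to $Y_1 = G_1 X_1 + G_1 X_2 + Z_1$: $R_1 < \frac{1}{2} \log |G_1 K_1 G_1^T + I_r|$ (vector WDP)

∙ $\mathscr{R}_1$ is achieved similarly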

Marton’s inner bound with common message

Theorem (Marton, Liang)

$(R_0, R_1, R_2)$ is achievable if

$R_0 + R_1 < I(U_0, U_1; Y_1)$,

$R_0 + R_2 < I(U_0, U_2; Y_2)$,

$R_0 + R_1 + R_2 < I(U_0, U_1; Y_1) + I(U_2; Y_2|U_0) - I(U_1; U_2|U_0)$,

$R_0 + R_1 + R_2 < I(U_1; Y_1|U_0) + I(U_0, U_2; Y_2) - I(U_1; U_2|U_0)$,

$2R_0 + R_1 + R_2 < I(U_0, U_1; Y_1) + I(U_0, U_2; Y_2) - I(U_1; U_2|U_0)$

for some $p(u_0, u_1, u_2)$ and function $x(u_0, u_1, u_2)$

∙ Proof of achievability: Superposition coding + Marton’s coding

∙ Tight for all classes of BCs with known capacity regions

∙ Even for $R_0 = 0$, larger than Marton’s inner bound with $U_0 = \emptyset$ (the private-message theorem above)
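As a consistency check, setting $U_0 = \emptyset$ and $R_0 = 0$ in the five inequalities recovers the private-message bound. The following is a short worked specialization, assuming the form $2R_0 + R_1 + R_2$ on the left side of the last inequality:

```latex
\begin{align*}
R_0 + R_1 < I(U_0,U_1;Y_1) \;&\Longrightarrow\; R_1 < I(U_1;Y_1), \\
R_0 + R_2 < I(U_0,U_2;Y_2) \;&\Longrightarrow\; R_2 < I(U_2;Y_2), \\
\text{each of the last three inequalities} \;&\Longrightarrow\;
  R_1 + R_2 < I(U_1;Y_1) + I(U_2;Y_2) - I(U_1;U_2).
\end{align*}
```

The three sum-rate constraints collapse into one because conditioning on a constant $U_0$ changes nothing. The gain noted in the last bullet comes from allowing a nontrivial $U_0$ even when no common message is sent.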


Nair–El Gamal outer bound

Theorem (Nair–El Gamal)

If $(R_0, R_1, R_2)$ is achievable, then

$R_0 \le \min\{I(U_0; Y_1), I(U_0; Y_2)\}$,

$R_0 + R_1 \le I(U_0, U_1; Y_1)$,

$R_0 + R_2 \le I(U_0, U_2; Y_2)$,

$R_0 + R_1 + R_2 \le I(U_0, U_1; Y_1) + I(U_2; Y_2|U_0, U_1)$,

$R_0 + R_1 + R_2 \le I(U_1; Y_1|U_0, U_2) + I(U_0, U_2; Y_2)$

for some $p(u_1)p(u_2)p(u_0|u_1, u_2)$ and function $x(u_0, u_1, u_2)$

∙ Tight for all BCs with known capacity regions that we discussed so far

∙ Does not coincide with Marton’s inner bound (Jog–Nair)

∙ Not tight in general (Geng–Gohari–Nair–Yu)

Summary

∙ Marton’s inner bound:

Multidimensional subcodebook generation

Generating correlated codewords for independent messages

∙ Mutual covering lemma

∙ Connection between Marton coding and Gelfand–Pinsker coding

∙ Writing on dirty paper achieves the capacity region of the Gaussian vector BC

∙ Nair–El Gamal outer bound


References

Geng, Y., Gohari, A. A., Nair, C., and Yu, Y. (). The capacity region for two classes of product broadcast channels. In Proc. IEEE Int. Symp. Inf. Theory, Saint Petersburg, Russia.

Jog, V. and Nair, C. (). An information inequality for the BSSC channel. In Proc. UCSD Inf. Theory Appl. Workshop, La Jolla, CA.

Liang, Y. (). Multiuser communications with relaying and user cooperation. Ph.D. thesis, University of Illinois, Urbana-Champaign, IL.

Marton, K. (). A coding theorem for the discrete memoryless broadcast channel. IEEE Trans. Inf. Theory, (), –.

Nair, C. and El Gamal, A. (). An outer bound to the capacity region of the broadcast channel. IEEE Trans. Inf. Theory, (), –.

Weingarten, H., Steinberg, Y., and Shamai, S. (). The capacity region of the Gaussian multiple-input multiple-output broadcast channel. IEEE Trans. Inf. Theory, (), –.