Boolean Functions - Carnegie Mellon School of Computer Science


Klaus Sutner

Carnegie Mellon University

Fall 2013

Outline

1. Boolean Functions
2. Clones and Post
3. Circuits and Log-Space

Boolean Functions

Let us return to the two-element Boolean algebra B = {ff, tt}. To lighten notation we will overload and write 2 = {0, 1} instead.

Definition

A Boolean function of arity n is any function of the form $2^n \to 2$.

Note that we only consider single outputs here, $f : 2^n \to 2$ rather than $f : 2^n \to 2^m$.

Slightly more vexing is the question of whether one should include n = 0. We’ll fudge things a bit.

There Are Lots

Obviously there are $2^{2^n}$ Boolean functions of arity n.

| n | $2^{2^n}$ |
|---|---|
| 1 | 4 |
| 2 | 16 |
| 3 | 256 |
| 4 | 65536 |
| 5 | $4.3 \times 10^{9}$ |
| 6 | $1.8 \times 10^{19}$ |
| 7 | $3.4 \times 10^{38}$ |
| 8 | $1.2 \times 10^{77}$ |

For n = 20 we get $2^{2^{20}} \approx 6.7 \times 10^{315652}$.

Infinite, for all practical intents and purposes. Since there are so many, it is a good idea to think about how to describe and construct these functions.

Formulae and Functions

Given a Boolean formula $\varphi(x_1, x_2, \dots, x_n)$ we can naturally define an n-ary Boolean function $\hat{\varphi} : 2^n \to 2$ by $\hat{\varphi}(a) = \varphi[a/x]$: replace the variables by actual Boolean values and evaluate the resulting expression.

Obviously two formulae are equivalent iff the corresponding Boolean functions are identical; so there are $2^{2^n}$ classes of mutually inequivalent Boolean formulae with n variables.
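As a small illustration of $\hat{\varphi}$, the sketch below models a formula as a Python function on 0/1 values (an assumption made here; the helper names are hypothetical) and tabulates it over all $2^n$ assignments. Two formulae are equivalent exactly when their tables agree, and the number of possible tables is $2^{2^n}$.

```python
from itertools import product
from math import log10
from typing import Callable, Tuple

def truth_table(phi: Callable[..., int], n: int) -> Tuple[int, ...]:
    """The function phi-hat: evaluate phi on all 2^n assignments in lexicographic order."""
    return tuple(int(phi(*a)) for a in product((0, 1), repeat=n))

def equivalent(phi: Callable[..., int], psi: Callable[..., int], n: int) -> bool:
    """Two formulae are equivalent iff their Boolean functions (truth tables) coincide."""
    return truth_table(phi, n) == truth_table(psi, n)

def count_functions(n: int) -> int:
    """Number of n-ary Boolean functions: one per possible output column of length 2^n."""
    return 2 ** (2 ** n)

# Exclusive or, written as a DNF and as "or but not and".
xor_dnf = lambda x, y: (x and not y) or (not x and y)
xor_alt = lambda x, y: (x or y) and not (x and y)

print(truth_table(xor_dnf, 2))             # (0, 1, 1, 0)
print(equivalent(xor_dnf, xor_alt, 2))     # True
print(int((2 ** 20) * log10(2)))           # 315652, the decimal exponent of 2^(2^20)
```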

Lindenbaum-Tarski Algebra

In fact, we obtain a Boolean algebra: points are the equivalence classes of formulae and the operations are determined by the logical operations “and,” “or” and “not.”

Moreover, this algebra is the free Boolean algebra generated by the corresponding propositional variables.

This may seem a bit unexciting, but it establishes a strong connection between logic and algebra and is actually quite important.

For example, if we were to deal with intuitionistic logic instead we would wind up with a Heyting algebra.

Zero or One Inputs

For small arities n we can easily enumerate all Boolean functions.

Case n = 0: All we get is 2 constant functions 0 and 1.

Case n = 1: 2 constants, plus $H_{id}(x) = x$, the identity, and $H_{not}(x) = 1 - x$, negation.

Incidentally, the latter two are reversible.

Binary Boolean Functions

Case n = 2: This is much more interesting: here all the well-known operations from propositional logic appear.

| x | y | $H_{and}(x, y)$ | $H_{or}(x, y)$ | $H_{xor}(x, y)$ | $H_{equ}(x, y)$ | $H_{imp}(x, y)$ | $H_{nand}(x, y)$ |
|---|---|---|---|---|---|---|---|
| 0 | 0 | 0 | 0 | 0 | 1 | 1 | 1 |
| 0 | 1 | 0 | 1 | 1 | 0 | 1 | 1 |
| 1 | 0 | 0 | 1 | 1 | 0 | 0 | 1 |
| 1 | 1 | 1 | 1 | 0 | 1 | 1 | 0 |

Via composition we can generate all Boolean functions from these.
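The table of binary functions can be regenerated mechanically. In the sketch below (the names `NAMED`, `column` and `ALL_BINARY` are illustrative, not from the notes) each binary function is identified with its output column over the rows (0,0), (0,1), (1,0), (1,1); there are $2^4 = 16$ possible columns, and the six named operations are among them.

```python
from itertools import product

ROWS = list(product((0, 1), repeat=2))      # (0,0), (0,1), (1,0), (1,1)

NAMED = {
    "and":  lambda x, y: x & y,
    "or":   lambda x, y: x | y,
    "xor":  lambda x, y: x ^ y,
    "equ":  lambda x, y: 1 - (x ^ y),
    "imp":  lambda x, y: (1 - x) | y,
    "nand": lambda x, y: 1 - (x & y),
}

def column(f) -> tuple:
    """Output column of a binary Boolean function over ROWS."""
    return tuple(f(x, y) for x, y in ROWS)

ALL_BINARY = list(product((0, 1), repeat=len(ROWS)))   # every possible column

for name, f in NAMED.items():
    print(f"{name:5} {column(f)}")
print(len(ALL_BINARY), "binary Boolean functions in total")
```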

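Expressing Boolean Functions

It is well-known that some Boolean functions can be expressed in terms of others. For example, one can write “exclusive or” ⊕ in disjunctive normal form, conjunctive normal form, or using only nand $\overline{\land}$ or nor $\overline{\lor}$ as follows:

$$
\begin{aligned}
x \oplus y &= (x \land \overline{y}) \lor (\overline{x} \land y) \\
&= (x \lor y) \land (\overline{x} \lor \overline{y}) \\
&= (x \mathbin{\overline{\land}} (x \mathbin{\overline{\land}} y)) \mathbin{\overline{\land}} ((x \mathbin{\overline{\land}} y) \mathbin{\overline{\land}} y) \\
&= ((x \mathbin{\overline{\lor}} x) \mathbin{\overline{\lor}} (y \mathbin{\overline{\lor}} y)) \mathbin{\overline{\lor}} (x \mathbin{\overline{\lor}} y)
\end{aligned}
$$

A set of Boolean functions is a basis or functionally complete if it produces all Boolean functions by composition.

Propositional Bases

As it turns out, there are lots of bases.

Theorem (Basis Theorem)

Any Boolean function $f : 2^n \to 2$ can be obtained by composition from the basic functions (unary) negation and (binary) disjunction.

Proof.

Consider the truth table for f. For every truth assignment α such that f(α) = 1 define a conjunction $C_\alpha = z_1 \land \dots \land z_n$ by $z_i = x_i$ whenever $\alpha(x_i) = 1$, $z_i = \overline{x_i}$ otherwise.

Then $f(x) \equiv C_{\alpha_1} \lor \dots \lor C_{\alpha_r}$, where $\alpha_1, \dots, \alpha_r$ are all the assignments with $f(\alpha) = 1$; if there are none, f is the constant 0, which we can write as $x_1 \land \overline{x_1}$.

Use De Morgan’s law, $u \land v = \overline{\overline{u} \lor \overline{v}}$, to eliminate the conjunctions from this disjunctive normal form. ✷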
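The proof of the Basis Theorem is effectively an algorithm. Here is a minimal Python sketch, assuming f is given as a function on 0/1 values and n ≥ 1; all helper names are hypothetical. Conjunction is never used directly: `AND` is defined from `NOT` and `OR` via De Morgan’s law, as in the last step of the proof.

```python
from itertools import product
from typing import Callable, List, Tuple

Assignment = Tuple[int, ...]

def NOT(u: int) -> int:
    return 1 - u

def OR(u: int, v: int) -> int:
    return u | v

def AND(u: int, v: int) -> int:
    # De Morgan: u ∧ v = ¬(¬u ∨ ¬v), so only negation and disjunction are used.
    return NOT(OR(NOT(u), NOT(v)))

def satisfying(f: Callable[..., int], n: int) -> List[Assignment]:
    """The assignments alpha_1, ..., alpha_r with f(alpha) = 1."""
    return [a for a in product((0, 1), repeat=n) if f(*a)]

def literal(xi: int, ai: int) -> int:
    """z_i = x_i if alpha(x_i) = 1, else the negation of x_i."""
    return xi if ai == 1 else NOT(xi)

def eval_dnf(alphas: List[Assignment], x: Assignment) -> int:
    """Evaluate C_{alpha_1} ∨ ... ∨ C_{alpha_r} at x."""
    value = AND(x[0], NOT(x[0]))            # constant 0 = x_1 ∧ ¬x_1 (covers r = 0)
    for alpha in alphas:
        conj = literal(x[0], alpha[0])
        for xi, ai in zip(x[1:], alpha[1:]):
            conj = AND(conj, literal(xi, ai))
        value = OR(value, conj)
    return value

xor = lambda x, y: x ^ y
print(satisfying(xor, 2))                   # [(0, 1), (1, 0)]
```

Comparing `eval_dnf(satisfying(f, 3), a)` with `f(*a)` over all 256 ternary truth tables is a cheap way to confirm that the construction never fails, including for the all-zero table.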

Other Bases

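Here are some more bases:

$\{\land, \neg\}$, $\{\Rightarrow, \neg\}$, $\{\Rightarrow, 0\}$, $\{\lor, \Leftrightarrow, 0\}$, $\{\overline{\land}\}$, $\{\overline{\lor}\}$

On the other hand, $\{\lor, \Leftrightarrow\}$ does not work: both functions map $(1, \dots, 1)$ to 1, so every composition of them does too, and negation can never be obtained.

One might be tempted to ask when a set of Boolean functions is a basis. To tackle this problem we need to be a little more careful about what is meant by basis.

Clones and Post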

Clones

Definition (Clones)

A family F of Boolean functions is a clone if it contains all projections $P^n_i$ and is closed under composition.

Recall from our discussion of primitive recursive functions that a projection is a map

$$ P^n_i : B^n \to B, \qquad P^n_i(x_1, \dots, x_n) = x_i $$

and closure under composition means that $f : B^m \to B$,

$$ f(x) = h(g_1(x), \dots, g_n(x)) $$

is in F, given functions $g_i : B^m \to B$ for $i = 1, \dots, n$, and $h : B^n \to B$, all in F. More succinctly, we can write $h \circ (g_1, \dots, g_n)$.

Write BF for all Boolean functions and $BF_n$ for Boolean functions of arity n.

Characterization of Clones

One can rephrase the definition of a clone into a longer list of simpler conditions (that may be easier to work with). Let $f, g \in F$ be arbitrary functions of suitable arity. The following functions h must also be in F.

1. monadic identity: $P^1_1$
2. cylindrification: $h(x_1, \dots, x_n) = f(x_1, \dots, x_{n-1})$
3. diagonalization: $h(x_1, \dots, x_n) = f(x_1, \dots, x_n, x_n)$
4. rotation: $h(x_1, \dots, x_n) = f(x_2, x_3, \dots, x_n, x_1)$
5. transposition: $h(x_1, \dots, x_n) = f(x_1, \dots, x_{n-2}, x_n, x_{n-1})$
6. composition: $h(x_1, \dots, x_{n+m}) = f(x_1, \dots, x_n, g(x_{n+1}, \dots, x_{n+m}))$

According to rules (2), (3), (4), (5) we can construct h from f via

$$ h(x_1, \dots, x_n) = f(x_{\pi(1)}, \dots, x_{\pi(m)}) $$

for any function $\pi : [m] \to [n]$. Together with the last rule this suffices to get arbitrary compositions.

Exercise

Show that this characterization of clones is correct.

Simple Clones

We need to identify clones. This may seem hopeless at first, but at least a few simple ones we can deal with easily.

It is clear that BF is a clone; we write ⊤ for this clone. At the other end, the smallest clone is the set of all projections; we write ⊥ for this clone.

The collection of all clones forms a lattice under set inclusion. Operation ⊓ is just intersection, but ⊔ requires closure under composition.

Self-Dual Functions

For any Boolean function f we can define its dual $\hat{f}$ by

$$ \hat{f}(x) = \overline{f(\overline{x})} $$

where $\overline{x}$ negates every component of x. For example, $\hat{\lor} = \land$ and $\hat{\neg} = \neg$.

Then for any clone F the set of all duals is again a clone:

$$ \hat{F} = \{ \hat{f} \mid f \in F \} $$

Of course, for the trivial clones ⊤ and ⊥ duality does not buy us anything. But later we will find a nice symmetric structure.

Note that some functions are their own dual: ¬ is an example.

Claim

The collection D of all self-dual functions is a clone.

0/1-Preserving Functions

To find more interesting examples of clones, consider the following.

Definition

Let f be a Boolean function.

f is 0-preserving if $f(0, \dots, 0) = 0$.

f is 1-preserving if $f(1, \dots, 1) = 1$.

f is bi-preserving if f is 0-preserving and 1-preserving.

For example n-ary disjunction and conjunction are both bi-preserving.

Claim

The collections $P_0$, $P_1$ and P of all 0-preserving, 1-preserving and bi-preserving Boolean functions are clones.
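Duality and the preservation properties are easy to test by brute force. The sketch below represents an n-ary Boolean function as a Python function on 0/1 arguments plus its arity; `dual`, `compose` and the predicates are illustrative helpers. The last usage line checks, on one example, the identity behind the claim that the duals of a clone form a clone: the dual of a composition is the composition of the duals.

```python
from itertools import product
from typing import Callable

BoolFn = Callable[..., int]

def dual(f: BoolFn) -> BoolFn:
    """hat f(x) = not f(not x): negate every argument and the result."""
    return lambda *x: 1 - f(*(1 - xi for xi in x))

def compose(h: BoolFn, *gs: BoolFn) -> BoolFn:
    """h o (g_1, ..., g_n)."""
    return lambda *x: h(*(g(*x) for g in gs))

def same(f: BoolFn, g: BoolFn, n: int) -> bool:
    return all(f(*a) == g(*a) for a in product((0, 1), repeat=n))

def is_self_dual(f: BoolFn, n: int) -> bool:
    return same(f, dual(f), n)

def preserves_0(f: BoolFn, n: int) -> bool:
    return f(*([0] * n)) == 0

def preserves_1(f: BoolFn, n: int) -> bool:
    return f(*([1] * n)) == 1

OR = lambda x, y: x | y
AND = lambda x, y: x & y
NOT = lambda x: 1 - x
NOT_FIRST = lambda x, y: 1 - x

print(same(dual(OR), AND, 2))                                          # True
print(is_self_dual(NOT, 1), is_self_dual(OR, 2))                       # True False
print(preserves_0(OR, 2), preserves_1(OR, 2), preserves_0(NOT, 1))    # True True False
h = compose(OR, AND, NOT_FIRST)
print(same(dual(h), compose(dual(OR), dual(AND), dual(NOT_FIRST)), 2))  # True
```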

Monotone Functions

For Boolean vectors a and b of the same length write $a \le b$ iff $a_i \le b_i$ for all i (the product order on $2^n$).

Definition

A Boolean function f is monotone if $a \le b$ implies $f(a) \le f(b)$.

For example n-ary disjunction and conjunction are both monotone.

One can define a topology on $B^n$ so that the monotone functions are precisely the continuous ones.

Claim

The collection M of all monotone Boolean functions is a clone.

Dedekind Numbers

Counting the number of n-ary monotone Boolean functions is not easy; the problem was first introduced by R. Dedekind in 1897.

Here are the first few Dedekind numbers $D_n$, for arities n = 0, …, 8:

2, 3, 6, 20, 168, 7581, 7828354, 2414682040998, 56130437228687557907788

One can show that $D_n$ is the number of anti-chains in the powerset of [n]. For example, there are 6 anti-chains over [2]:

∅, {∅}, {{1}}, {{2}}, {{1, 2}}, {{1}, {2}}
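The first few Dedekind numbers can be recomputed by brute force. The sketch below (helper names hypothetical) uses a standard splitting argument that is not taken from the notes: f is monotone iff its restrictions to $x_n = 0$ and $x_n = 1$ are both monotone and the first lies pointwise below the second. Truth tables are stored as bitmasks. A second, independent count enumerates the anti-chains in the powerset of [n] directly.

```python
from itertools import combinations
from typing import List

def monotone_tables(n: int) -> List[int]:
    """All monotone n-ary Boolean functions as 2^n-bit truth-table masks.

    Bit k of a mask is f(a), where bit j of k is the value of x_{j+1}; so the rows
    with x_n = 0 form the low half and the rows with x_n = 1 the high half.
    """
    if n == 0:
        return [0, 1]                             # the two constants
    half = 1 << (n - 1)                           # number of rows with x_n = 0
    prev = monotone_tables(n - 1)
    # f is monotone iff both halves are monotone and the low half is <= the high half.
    return [lo | (hi << half) for lo in prev for hi in prev if lo & ~hi == 0]

def count_antichains(n: int) -> int:
    """Brute-force count of anti-chains in the powerset of [n] (subsets as bitmasks)."""
    subsets = range(1 << n)
    count = 0
    for family in range(1 << (1 << n)):           # every family of subsets of [n]
        members = [s for s in subsets if family >> s & 1]
        # a and b are incomparable iff neither a ⊆ b nor b ⊆ a
        if all(a & b not in (a, b) for a, b in combinations(members, 2)):
            count += 1
    return count

print([len(monotone_tables(n)) for n in range(6)])   # [2, 3, 6, 20, 168, 7581]
print([count_antichains(n) for n in range(4)])       # [2, 3, 6, 20]
```

The recursion squares the previous count at each step, so it is only practical up to about n = 5; the anti-chain enumeration is doubly exponential and only meant for n ≤ 3.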

More Anti-Chains

And 20 anti-chains over [3]:

∅, {∅}, {{1}}, {{2}}, {{3}}, {{1, 2}}, {{1, 3}}, {{2, 3}}, {{1, 2, 3}},
{{1}, {2}}, {{1}, {3}}, {{1}, {2, 3}}, {{2}, {3}}, {{2}, {1, 3}}, {{3}, {1, 2}},
{{1, 2}, {1, 3}}, {{1, 2}, {2, 3}}, {{1, 3}, {2, 3}}, {{1}, {2}, {3}},
{{1, 2}, {1, 3}, {2, 3}}

Make sure you understand how the anti-chains correspond to monotone functions: every set S in an anti-chain over [n] defines the conjunction $\bigwedge_{i \in S} x_i$ of the corresponding variables among $x_1, \dots, x_n$, and f is the disjunction of these conjunctions. In particular, the empty anti-chain yields the constant 0 and {∅} yields the constant 1.

Quasi-Monadic Functions

These are functions that are essentially unary: they are n-ary but depend on only one argument.

Definition

A Boolean function f is quasi-monadic if there is some index i such that $x_i = y_i$ implies $f(x) = f(y)$.

Claim

The collection U of all quasi-monadic Boolean functions is a clone.

Quasi-Monadic Clones

Quasi-monadic functions are rather simple, so it is not too surprising that we can actually determine all clones containing only quasi-monadic functions: there are exactly 6 of them.

- ⊥, the trivial clone of projections,
- 0-preserving quasi-monadic functions,
- 1-preserving quasi-monadic functions,
- monotone quasi-monadic functions,
- self-dual quasi-monadic functions,
- all quasi-monadic functions.

The first 4 contain only monotone functions.

It is not hard to see that each of these classes of functions is in fact a clone. The hard part is to make sure that each quasi-monadic clone appears on the list.

Exercise

Figure out exactly what these clones look like.

Relations Between Quasi-Monadic Functions

[Diagram: the six quasi-monadic clones, labelled ⊥, 0, 1, M, D and U, ordered by inclusion]

The arrows are precise in the sense that there are no other clones in between.

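Self-Dual Functions

A function is self-dual if $\hat{f} = f$. Note that negation is self-dual. Here is another important example.

Definition (Majority)

The ternary majority function maj is defined by

$$ \mathrm{maj}(x, y, z) = (x \land y) \lor (x \land z) \lor (y \land z) $$

Then majority is self-dual:

$$
\begin{aligned}
\widehat{\mathrm{maj}}(x, y, z) &= \overline{(\overline{x} \land \overline{y}) \lor (\overline{x} \land \overline{z}) \lor (\overline{y} \land \overline{z})} \\
&= \overline{(\overline{x} \land \overline{y})} \land \overline{(\overline{x} \land \overline{z})} \land \overline{(\overline{y} \land \overline{z})} \\
&= (x \lor y) \land (x \lor z) \land (y \lor z) \\
&= \mathrm{maj}(x, y, z)
\end{aligned}
$$

Threshold Functions

Majority is an important special case of a broader class of functions.

Definition

A threshold function $\mathrm{thr}^n_m$, $0 \le m \le n$, is an n-ary Boolean function defined by

$$
\mathrm{thr}^n_m(x) =
\begin{cases}
1 & \text{if } \#(i \mid x_i = 1) \ge m, \\
0 & \text{otherwise.}
\end{cases}
$$

In particular, $\mathrm{maj} = \mathrm{thr}^3_2$.

Lots of Boolean functions can be defined in terms of threshold functions.

- $\mathrm{thr}^n_0$ is the constant tt.
- $\mathrm{thr}^n_1$ is n-ary disjunction.
- $\mathrm{thr}^n_n$ is n-ary conjunction.
- $\mathrm{thr}^n_k(x) \land \neg\,\mathrm{thr}^n_{k+1}(x)$ is the counting function: “exactly k out of n.”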
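The threshold constructions are easy to check exhaustively for small n. A sketch with hypothetical helper names; following the counting formula, `thr(n, n + 1)` is allowed and is simply the constant 0, so that `exactly(n, n)` coincides with n-ary conjunction.

```python
from itertools import product
from math import comb

def thr(n: int, m: int):
    """thr^n_m: 1 iff at least m of the n inputs are 1 (m = n + 1 gives the constant 0)."""
    def f(*x: int) -> int:
        assert len(x) == n, "wrong arity"
        return int(sum(x) >= m)
    return f

def exactly(n: int, k: int):
    """Counting function thr^n_k(x) ∧ ¬thr^n_{k+1}(x): "exactly k out of n"."""
    at_least_k, at_least_k1 = thr(n, k), thr(n, k + 1)
    return lambda *x: at_least_k(*x) & (1 - at_least_k1(*x))

maj = thr(3, 2)
cube = lambda n: product((0, 1), repeat=n)

# maj agrees with (x ∧ y) ∨ (x ∧ z) ∨ (y ∧ z) and is self-dual.
print(all(maj(x, y, z) == (x & y) | (x & z) | (y & z) for x, y, z in cube(3)))
print(all(maj(*a) == 1 - maj(*(1 - v for v in a)) for a in cube(3)))

# With 8 variables, "exactly k out of 8" holds on C(8, k) of the 256 inputs.
counts = [sum(exactly(8, k)(*a) for a in cube(8)) for k in range(9)]
print(counts)                                    # [1, 8, 28, 56, 70, 56, 28, 8, 1]
print(counts == [comb(8, k) for k in range(9)])  # True
```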

[Figure: Counting with 8 Variables]

Affine Functions

Definition

An affine Boolean function has the form

$$ f(x) = a_0 \oplus \bigoplus_i a_i x_i $$

where the $a_i \in B$ are fixed.

Here ⊕ denotes exclusive or (equivalently, addition modulo 2). If $a_0 = 0$ we have a linear Boolean function. Note that linear functions are additive:

$$ f(x \oplus y) = f(x) \oplus f(y) $$

Claim

The collection A of all affine functions is a clone.
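Since the coefficients of an affine function are forced by its values at $0$ and at the unit vectors, affinity can be tested by reading off the candidate coefficients and then checking every input. A sketch of that approach (helper names hypothetical); the last usage line counts affine functions for small arities, which comes out as $2^{n+1}$.

```python
from itertools import product
from typing import Callable, Optional, Tuple

def affine_coefficients(f: Callable[..., int], n: int) -> Optional[Tuple[int, ...]]:
    """Return (a_0, a_1, ..., a_n) with f(x) = a_0 ⊕ a_1 x_1 ⊕ ... ⊕ a_n x_n, or None."""
    a0 = f(*([0] * n))
    # The coefficients are forced by the values on the unit vectors e_i.
    coeffs = [f(*[int(j == i) for j in range(n)]) ^ a0 for i in range(n)]
    for x in product((0, 1), repeat=n):
        predicted = a0
        for ai, xi in zip(coeffs, x):
            predicted ^= ai & xi
        if f(*x) != predicted:
            return None                     # f is not affine
    return (a0, *coeffs)

def table_fn(t: Tuple[int, ...]) -> Callable[..., int]:
    """Function (arity >= 1) whose outputs, in lexicographic order of the inputs, are t."""
    return lambda *x: t[int("".join(map(str, x)), 2)]

print(affine_coefficients(lambda x, y: 1 - (x ^ y), 2))   # (1, 1, 1): x ↔ y = 1 ⊕ x ⊕ y
print(affine_coefficients(lambda x, y: x & y, 2))         # None
print([sum(affine_coefficients(table_fn(t), n) is not None
           for t in product((0, 1), repeat=2 ** n)) for n in (1, 2, 3)])   # [4, 8, 16]
```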

Emil Post

The same Post as in Post’s problem and Post tag systems.

Post found a classification of all clones, published in 1941.

Classification

[Figure: diagram of the classification of all clones]

Generators

Careful inspection shows that all the clones in the diagram have a finite set of generators: for any clone C there are finitely many Boolean functions $f_1, \dots, f_r$ so that the least clone containing all $f_i$ is C.

Here are some examples:

| Clone | Generators |
|---|---|
| ⊤ | $\lor, \neg$ |
| $P_0$ | $\lor, \oplus$ |
| $P_1$ | $\lor, \to$ |
| P | if-then-else |
| M | $\lor, \land, 0, 1$ |
| D | $\mathrm{maj}, \neg$ |
| A | $\leftrightarrow, 0$ |
| U | $\neg, 0$ |

Since our diagram contains all clones, it follows that all Boolean clones are finitely generated.

Aside: Finite-Basis Theorem

However, one can also directly show that Boolean clones must be finitely generated. The proof rests on the following strange property.

Definition

An n-ary Boolean function f is a rejection function if for all $a \in B$:

$$ \#(i \mid x_i = a) \ge n - 1 \quad \text{implies} \quad f(x) = a $$

For example, majority is a rejection function.

Lemma

A clone containing a rejection function is finitely generated.
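The rejection property can be checked directly by brute force. A sketch with a hypothetical helper name; for n = 3 every input has at least two equal coordinates, so the condition pins f down on every input, and the check confirms that majority is the only ternary rejection function. For n = 2 no function qualifies, since (0, 1) would have to map to both values.

```python
from itertools import product
from typing import Callable

def is_rejection(f: Callable[..., int], n: int) -> bool:
    """Check: whenever at least n - 1 of the n inputs equal a, f returns a (a = 0 and a = 1)."""
    for x in product((0, 1), repeat=n):
        for a in (0, 1):
            if x.count(a) >= n - 1 and f(*x) != a:
                return False
    return True

maj = lambda x, y, z: (x & y) | (x & z) | (y & z)
print(is_rejection(maj, 3))                          # True
print(is_rejection(lambda x, y, z: x | y | z, 3))    # False
print(sum(is_rejection(lambda x, y, z, t=t: t[4 * x + 2 * y + z], 3)
          for t in product((0, 1), repeat=8)))       # 1
```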

Alas, there are infinitely many . . .

One can generalize the property of being 0-preserving:

Definition

A Boolean function f is m-fold 0-preserving if

$$ x_1 \land \dots \land x_m = 0 \quad \text{implies} \quad f(x_1) \land \dots \land f(x_m) = 0 $$

for all argument vectors $x_1, \dots, x_m$ (the conjunction on the left is taken componentwise).

Call this class $Z_m$ and let $Z_\infty = \bigcap_m Z_m$.

Claim

$Z_m$ and $Z_\infty$ are clones.

Note that $Z_m$ is properly contained in $Z_{m-1}$. For $m \ge 2$, $Z_m$ is generated by $\mathrm{thr}^{m+1}_m$ and $\neg\!\to$, that is, $x \land \overline{y}$. As it turns out, $f \in Z_\infty$ iff there is some i such that $f(x) \le x_i$ for all x.

There also are variants by adding monotonicity and/or 1-preservation.

And we can do the same for 1-preserving functions.

Post’s Lattice

The largest clones other than ⊤ are M, D, A, $P_0$ and $P_1$.

There are eight infinite families of clones.

There are a few “sporadic” clones.

That’s it, no other clones exist.

The original proof was supposedly 1000 pages long; the published version is still around 100 pages.
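For small arities the classes $Z_m$ and $Z_\infty$ can be explored by brute force. The sketch below (hypothetical helper names) tests the contrapositive of the definition: any m inputs on which f is 1 must share a coordinate that is 1 in all of them. The usage lines show that $\mathrm{thr}^3_2$ lies in $Z_2$ but not in $Z_3$, a witness for the proper containment $Z_3 \subsetneq Z_2$, and that $x \land \overline{y}$ lies in $Z_\infty$.

```python
from itertools import combinations_with_replacement, product
from typing import Callable

def in_Z(f: Callable[..., int], n: int, m: int) -> bool:
    """Brute-force test for m-fold 0-preservation of an n-ary f.

    Contrapositive form of the definition: any m inputs (repetitions allowed) on
    which f is 1 must share a coordinate that is 1 in all of them.
    """
    ones = [x for x in product((0, 1), repeat=n) if f(*x)]
    return all(any(all(x[i] for x in group) for i in range(n))
               for group in combinations_with_replacement(ones, m))

def in_Z_infinity(f: Callable[..., int], n: int) -> bool:
    """f is in Z_inf iff there is an i with f(x) <= x_i for all x."""
    return any(all(f(*x) <= x[i] for x in product((0, 1), repeat=n)) for i in range(n))

maj = lambda x, y, z: (x & y) | (x & z) | (y & z)    # thr^3_2
nonimp = lambda x, y: x & (1 - y)                    # x ∧ ¬y

print(in_Z(maj, 3, 2), in_Z(maj, 3, 3))              # True False
print(in_Z(nonimp, 2, 5), in_Z_infinity(nonimp, 2))  # True True
```

For binary functions f is 1 on at most four inputs, so membership in $Z_4$ already coincides with membership in $Z_\infty$; the tests below check this for all 16 binary functions.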

Satisfiability and Post’s Lattice

Suppose we have a finite set C of logical connectives.

How hard is Satisfiability if the formula is written in terms of the connectives in C?

Theorem (H. R. Lewis 1979)

Satisfiability for C is NP-complete if the clone generated by C contains $Z_\infty$.

Otherwise the problem is in polynomial time.

Back to Bases

There are exactly two functionally complete sets consisting of a single binary function: NAND and NOR, discovered by Peirce around 1880 and rediscovered by Sheffer in 1913 (Peirce arrow and Sheffer stroke).

It follows from Post’s result that a set of Boolean functions is a basis iff for each of the following 5 classes, it contains at least one function that fails to lie in the class:

- monotonic
- affine
- self-dual
- truth-preserving
- false-preserving

The proof exploits Post’s lattice from above.
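Post’s criterion turns the basis question into five finite checks. The following brute-force sketch applies it to some of the sets discussed earlier; the (function, arity) representation, with constants as nullary functions, and all helper names are assumptions made here. It also checks that exactly two binary functions, NOR and NAND, form a basis on their own.

```python
from itertools import product
from typing import Callable, Dict, List, Tuple

Fn = Tuple[Callable[..., int], int]          # (function, arity)

def table(f: Callable[..., int], n: int) -> Dict[Tuple[int, ...], int]:
    return {x: f(*x) for x in product((0, 1), repeat=n)}

def monotone(f, n):
    t = table(f, n)
    return all(t[a] <= t[b] for a in t for b in t
               if all(ai <= bi for ai, bi in zip(a, b)))

def affine(f, n):
    t = table(f, n)
    a0 = t[(0,) * n]
    coeffs = [t[tuple(int(j == i) for j in range(n))] ^ a0 for i in range(n)]
    return all(t[x] == a0 ^ (sum(c & xi for c, xi in zip(coeffs, x)) % 2) for x in t)

def self_dual(f, n):
    t = table(f, n)
    return all(t[x] == 1 - t[tuple(1 - xi for xi in x)] for x in t)

def preserves_1(f, n):
    return f(*([1] * n)) == 1

def preserves_0(f, n):
    return f(*([0] * n)) == 0

POST_CLASSES = [monotone, affine, self_dual, preserves_1, preserves_0]

def is_basis(fs: List[Fn]) -> bool:
    """Post's criterion: for each of the five classes some member of fs lies outside it."""
    return all(any(not in_class(f, n) for f, n in fs) for in_class in POST_CLASSES)

OR   = (lambda x, y: x | y, 2)
AND  = (lambda x, y: x & y, 2)
EQU  = (lambda x, y: 1 - (x ^ y), 2)
IMP  = (lambda x, y: (1 - x) | y, 2)
NAND = (lambda x, y: 1 - (x & y), 2)
NOT  = (lambda x: 1 - x, 1)
ZERO = (lambda: 0, 0)                         # the constant 0 as a nullary function

print(is_basis([NAND]), is_basis([IMP, ZERO]), is_basis([OR, EQU, ZERO]))   # True True True
print(is_basis([OR, EQU]), is_basis([AND, OR]))                              # False False
sheffer = [t for t in product((0, 1), repeat=4)
           if is_basis([(lambda x, y, t=t: t[2 * x + y], 2)])]
print(sheffer)            # [(1, 0, 0, 0), (1, 1, 1, 0)]: NOR and NAND
```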

Boolean Circuits

38

Another way to interpret (the composition of) Boolean functions are digital

circuits, at least the ones without feedback.

Boolean Functions

Clones and Post

3

Circuits and Log-Space

Boolean Circuits

39

The connection between electronic circuits and Boolean algebra was first clearly

recognized by C. Shannon in 1938. Here is a rather famous digital circuit:

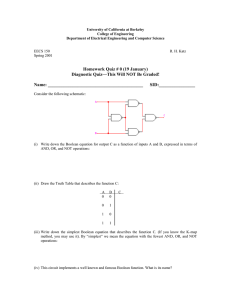

40

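Half Adder

[Figure: half adder with inputs x, y and outputs c (carry) and s (sum)]

Building a 2-bit Adder

We can use Boolean algebra to construct an actual circuit. Here inputs are x, y and z and the outputs are s (sum) and c (carry); a circuit with this behavior is usually called a full adder. The table describes the functionality of the circuit.

| x | y | z | s | c |
|---|---|---|---|---|
| 0 | 0 | 0 | 0 | 0 |
| 0 | 0 | 1 | 1 | 0 |
| 0 | 1 | 0 | 1 | 0 |
| 0 | 1 | 1 | 0 | 1 |
| 1 | 0 | 0 | 1 | 0 |
| 1 | 0 | 1 | 0 | 1 |
| 1 | 1 | 0 | 0 | 1 |
| 1 | 1 | 1 | 1 | 1 |

[Figure: 2-bit adder assembled from half adders (HA) with inputs x, y, z and outputs s, c]

From the table we can read off immediately that

$$ s = \overline{x}\,\overline{y}\,z + \overline{x}\,y\,\overline{z} + x\,\overline{y}\,\overline{z} + x\,y\,z $$

$$ c = \overline{x}\,y\,z + x\,\overline{y}\,z + x\,y\,\overline{z} + x\,y\,z $$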
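Reading formulae off a table is error-prone, so a quick mechanical check helps. The sketch below is an illustration only, with `1 - v` standing for the complement $\overline{v}$ and a hypothetical function name. It evaluates the two disjunctive normal forms on all eight rows and confirms that (c, s) is the binary representation of x + y + z.

```python
from itertools import product

def adder_dnf(x: int, y: int, z: int):
    """Sum and carry exactly as read off the truth table (DNF)."""
    nx, ny, nz = 1 - x, 1 - y, 1 - z
    s = (nx & ny & z) | (nx & y & nz) | (x & ny & nz) | (x & y & z)
    c = (nx & y & z) | (x & ny & z) | (x & y & nz) | (x & y & z)
    return s, c

for x, y, z in product((0, 1), repeat=3):
    s, c = adder_dnf(x, y, z)
    # Arithmetic meaning: the two output bits encode the number of 1s among the inputs.
    assert x + y + z == 2 * c + s
    print(x, y, z, "->", s, c)
```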

Simplify

These expressions are rather complicated, but we can simplify them considerably using equational reasoning in Boolean algebra.

To simplify, let

$$ u = \overline{x}\,y + x\,\overline{y} = (x + y)\,\overline{xy} $$

then

$$ s = \overline{u}\,z + u\,\overline{z}, \qquad c = u\,z + x\,y $$

Not too bad. u is essentially the half-adder from above, and the last two formulae represent the 2-bit adder.

Note that this simplification process would be rather difficult using circuit diagrams!

Innocent Question

How hard is it to evaluate a Boolean circuit?

We assume we have values for all the inputs and want to calculate the value of the output(s).

[Figure: a circuit of ∧ and ∨ gates with inputs a, b, c, d]

Obviously Fast

43

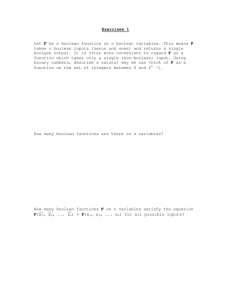

Input/Output Tapes

44

work tape

b a c a b a a a

The natural algorithm propagates the values from the leaves to the top(s) of

the acyclic graph.

0 0 1 0 1

Clearly this can be done in a breadth-first-like fashion and should be linear in

the size of the circuit.

output tape

input tape

1 1 1 0

But note that the evaluation is inherently parallel, one can propagate values

upward in parallel.

So, the statement that one can evaluate in linear time is not all that

interesting, one should take a more careful look.

M

First, we need to look for something more precise than polynomial time

reductions (which are appropriate for P versus NP).

Note that sublinear complexity classes make sense with respect to space: we

can separate the input, output and work tapes and only charge for the work

space.

Log-Space Reductions

Language A is log-space reducible to B if there is a function $f : \Sigma^\star \to \Sigma^\star$ that is computable by a log-space Turing machine such that

$$ x \in A \iff f(x) \in B $$

We will write $A \le_{\log} B$.

We note in passing that SAT is NP-complete with respect to log-space reductions rather than just polynomial time reductions as claimed earlier.

This can be seen by careful inspection of the proof for Cook-Levin: in order to construct the Boolean formula that codes the computation of the nondeterministic Turing machine one never needs more than logarithmic memory.

Key Properties

Lemma

1. Log-space reducibility implies polynomial time reducibility.
2. $\le_{\log}$ is a pre-order (reflexive and transitive).

Proof. To see part (1) note that a log-space machine has only polynomially many configurations, so the reduction can be computed in time $O(2^{c \log n}) = O(n^c)$.

Transitivity in part (2) is not trivial: one cannot simply combine two log-space transducers by identifying the output tape of the first machine with the input tape of the second machine: this space would count towards the work space and may be too large.

Therefore we do not compute the whole output, rather we keep a pointer to the current position of the input head of the second machine.

Whenever the head moves, compute the corresponding symbol from scratch using the first machine. The pointer needs only $\log(n^c) = O(\log n)$ bits. ✷
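The pointer trick in the transitivity proof can be mimicked in Python. This is a model, not a Turing machine: a tape is a function from positions to symbols (None past the end), a transducer is a generator that emits its output one symbol at a time, and the names `compose`, `double` and `evens_upper` are hypothetical. The composed machine never stores the intermediate string; each time the second machine reads position i, the first machine is rerun from scratch and only a position counter is kept, which is what costs $O(\log n)$ bits in the real construction.

```python
from typing import Callable, Iterator, Optional

Tape = Callable[[int], Optional[str]]       # read-only tape: position -> symbol, None past the end
Machine = Callable[[Tape], Iterator[str]]   # transducer writing its output symbol by symbol

def string_tape(x: str) -> Tape:
    return lambda i: x[i] if 0 <= i < len(x) else None

def compose(m1: Machine, m2: Machine) -> Machine:
    """Run m2 on the output of m1 without ever storing that output."""
    def m(tape: Tape) -> Iterator[str]:
        def virtual(i: int) -> Optional[str]:
            # Recompute from scratch: rerun m1 and discard everything before position i.
            for pos, sym in enumerate(m1(tape)):
                if pos == i:
                    return sym
            return None
        return m2(virtual)
    return m

def double(tape: Tape) -> Iterator[str]:
    """Toy transducer: write every input symbol twice."""
    i = 0
    while (c := tape(i)) is not None:
        yield c
        yield c
        i += 1

def evens_upper(tape: Tape) -> Iterator[str]:
    """Toy transducer: copy the symbols at even positions, in upper case."""
    i = 0
    while (c := tape(i)) is not None:
        if i % 2 == 0:
            yield c.upper()
        i += 1

print("".join(compose(double, evens_upper)(string_tape("abc"))))   # ABC
```

The price of not storing the intermediate output is time: every read by the second machine restarts the first one, which still stays polynomial.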

Circuits and Languages

A single circuit represents a Boolean function $f : 2^n \to 2$ (or several such functions).

In order to use circuits to recognize languages over 2 we need a family of circuits: a circuit $C_n$ with n inputs for each n.

And the circuit should be easy to construct, more about this later.

We can then define the language of this family of circuits as the set of words x such that $C_{|x|}$ on input x evaluates to true.

For example, if $C_n$ computes the negation of the exclusive or of all the input bits then we have a parity checker: it accepts exactly the words with an even number of 1s.

Nick’s Class

Unconstrained circuits are not too interesting, but if we add a few bounds we obtain interesting classes.

Definition (NC)

A language L is in $NC^d$ if there is a circuit family $(C_n)$ that decides L and such that the size of $C_n$ is polynomial in n and the depth of $C_n$ is $O(\log^d n)$.

NC is the union of all $NC^d$.

The key idea is that, given enough processors, we can evaluate $C_n$ in $O(\log^d n)$ steps.

It is reasonable to assume that a problem admits an efficient parallel algorithm iff it lies in NC.

For example, it is easy to see that parity testing is in $NC^1$.
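To make “evaluate $C_n$ in $O(\log^d n)$ steps” concrete, here is a sketch that builds a parity checker as a balanced tree of XOR gates followed by a negation, and evaluates it in synchronous rounds where every gate whose inputs are known fires in the same round. The circuit encoding and function names are assumptions for illustration. XOR is treated as a single gate; replacing each XOR by a constant-depth ∧/∨/¬ subcircuit changes the depth only by a constant factor. The number of rounds equals the depth, here $\lceil \log_2 n \rceil + 1$.

```python
from typing import Dict, List, Tuple, Union

Gate = Tuple[str, Union[int, List[str]]]     # ("in", k) reads input bit k; (op, [args]) applies op
OPS = {"xor": lambda a, b: a ^ b, "not": lambda a: 1 - a}

def parity_circuit(n: int) -> Tuple[Dict[str, Gate], str]:
    """Balanced tree of binary XOR gates plus a final NOT: output 1 iff an even number of 1s."""
    circuit: Dict[str, Gate] = {f"x{k}": ("in", k) for k in range(n)}
    layer = [f"x{k}" for k in range(n)]
    while len(layer) > 1:
        nxt = []
        for j in range(0, len(layer) - 1, 2):
            name = f"g{len(circuit)}"
            circuit[name] = ("xor", [layer[j], layer[j + 1]])
            nxt.append(name)
        if len(layer) % 2 == 1:              # an unpaired gate moves up unchanged
            nxt.append(layer[-1])
        layer = nxt
    circuit["out"] = ("not", [layer[0]])
    return circuit, "out"

def evaluate_in_rounds(circuit: Dict[str, Gate], output: str, bits: List[int]) -> Tuple[int, int]:
    """Fire every gate whose inputs are known in the same round; return (value, rounds)."""
    value = {g: bits[arg] for g, (op, arg) in circuit.items() if op == "in"}
    rounds = 0
    while output not in value:
        ready = [g for g, (op, args) in circuit.items()
                 if op != "in" and g not in value and all(a in value for a in args)]
        for g in ready:                      # independent of each other: parallelizable
            op, args = circuit[g]
            value[g] = OPS[op](*(value[a] for a in args))
        rounds += 1
    return value[output], rounds

for n in (1, 2, 5, 8, 9):
    circuit, out = parity_circuit(n)
    print(n, evaluate_in_rounds(circuit, out, [1] * n))
```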

Structuring Polynomial Time

While we are dealing with log-space reductions: they can also be used to study the fine-structure of P.

Definition (P-Completeness)

A language B is P-hard if for every language A in P: $A \le_{\log} B$.

It is P-complete if it is P-hard and in P.

Theorem

Suppose B is P-complete. Then

$B \in NC$ iff $NC = P$.

$B \in L$ iff $L = P$.

Circuit Value Problem

Problem: Circuit Value Problem (CVP)

Instance: A Boolean circuit C, input values x.

Question: Does C evaluate to true on input x?

Obviously CVP is solvable in polynomial time (even linear time).

There are several versions of CVP; here is a particularly simple one: compute the value of $X_m$ where

$$ X_0 = 0, \qquad X_1 = 1, \qquad X_i = X_{L_i} \mathbin{\diamond_i} X_{R_i}, \quad i = 2, \dots, m $$

where $\diamond_i \in \{\land, \lor\}$ and $L_i, R_i < i$.
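The simple straight-line version of CVP is evaluated by one pass over the definitions, since each $X_i$ only refers to earlier values; this makes the linear-time bound concrete. A minimal sketch; encoding each $X_i$ as a triple (operator, $L_i$, $R_i$) is an assumption made here for illustration.

```python
from typing import List, Tuple

Instr = Tuple[str, int, int]    # (op, L_i, R_i) defining X_i = X_{L_i} op X_{R_i}

def eval_cvp(program: List[Instr]) -> int:
    """Return X_m, where X_0 = 0, X_1 = 1 and program[i - 2] defines X_i for i = 2..m."""
    X = [0, 1]
    for i, (op, left, right) in enumerate(program, start=2):
        if not (0 <= left < i and 0 <= right < i):
            raise ValueError(f"X_{i} may only refer to earlier values")
        a, b = X[left], X[right]
        if op == "and":
            X.append(a & b)
        elif op == "or":
            X.append(a | b)
        else:
            raise ValueError(f"unknown operator {op!r}")
    return X[-1]

# X_2 = X_0 ∨ X_1 = 1, X_3 = X_2 ∧ X_0 = 0, X_4 = X_3 ∨ X_2 = 1
print(eval_cvp([("or", 0, 1), ("and", 2, 0), ("or", 3, 2)]))   # 1
```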

CVP is Hard

Theorem (Ladner 1975)

The Circuit Value Problem is P-complete.

Proof.

For hardness consider any language A accepted by a polynomial time Turing machine M.

We can use ideas similar to Cook-Levin to encode the computation of the Turing machine M on x as a polynomial size circuit (polynomial in n = |x|): use lots of Boolean variables to express tape contents, head position and state.

Constructing this circuit only requires “local” memory; for example we need O(log n) bits to store a position on the tape. Hence the construction is a log-space reduction.

The circuit evaluates to true iff the machine accepts. ✷

Alternating Breadth First Search

A long list of P-complete problems is known, though they tend to be a bit strange.

For example, consider an undirected graph $G = \langle V, E \rangle$ where E is partitioned as $E = E_0 \cup E_1$.

Given a start vertex s and a target t, does t get visited along an edge in $E_0$ if the breadth-first search can only use edges in $E_0$ at even and $E_1$ at odd levels?

Theorem

ABFS is P-complete.

Summary

Boolean functions have lots of small bases.

Surprisingly, one can fully analyze the structure of Boolean functions under composition (Post’s lattice).

This analysis is useful to characterize the complexity of satisfiability for classes of formulae.

Evaluation of a Boolean circuit (CVP) is P-complete.