

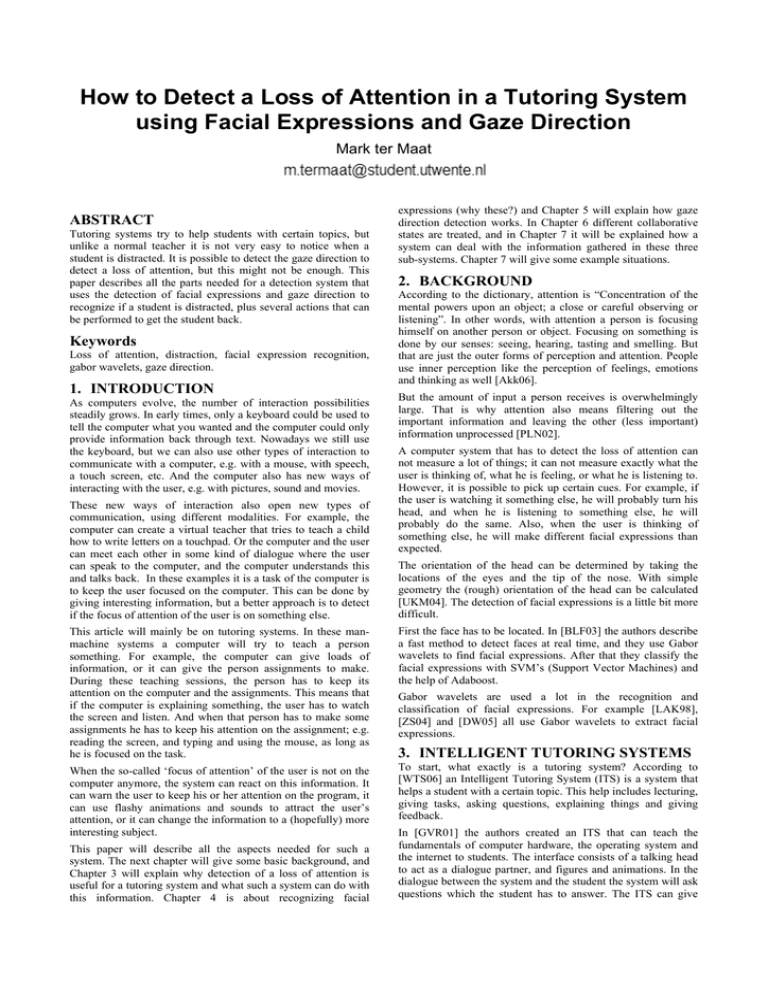

How to Detect a Loss of Attention in a Tutoring System using Facial Expressions and Gaze Direction

Mark ter Maat

ABSTRACT

Tutoring systems try to help students with certain topics, but unlike a human teacher, such a system cannot easily notice when a student is distracted. Gaze direction can be used to detect a loss of attention, but this might not be enough. This paper describes all the parts needed for a detection system that uses facial expressions and gaze direction to recognize whether a student is distracted, plus several actions that can be performed to get the student back.

Keywords

Loss of attention, distraction, facial expression recognition, Gabor wavelets, gaze direction.

1. INTRODUCTION

As computers evolve, the number of interaction possibilities steadily grows. In early times, only a keyboard could be used to tell the computer what you wanted, and the computer could only provide information back through text. Nowadays we still use the keyboard, but we can also use other types of interaction to communicate with a computer, e.g. a mouse, speech or a touch screen. The computer also has new ways of interacting with the user, e.g. with pictures, sound and movies.

These new ways of interaction also open up new types of communication that use different modalities. For example, the computer can create a virtual teacher that tries to teach a child how to write letters on a touchpad. Or the computer and the user can engage in a dialogue in which the user speaks to the computer, and the computer understands this and talks back. In these examples it is a task of the computer to keep the user focused on the computer. This can be done by giving interesting information, but a better approach is to detect whether the focus of attention of the user is on something else.

This article will mainly be about tutoring systems. In these man-machine systems a computer tries to teach a person something. For example, the computer can give a lot of information, or it can give the person assignments to do. During these teaching sessions, the person has to keep his attention on the computer and the assignments. This means that if the computer is explaining something, the user has to watch the screen and listen. And when that person has to do an assignment, he has to keep his attention on that assignment, e.g. by reading the screen, typing and using the mouse.

When the so-called 'focus of attention' of the user is not on the computer anymore, the system can react to this information. It can warn the user to keep his or her attention on the program, it can use flashy animations and sounds to attract the user's attention, or it can change the information to a (hopefully) more interesting subject.

This paper will describe all the aspects needed for such a system. Chapter 2 gives some basic background and Chapter 3 introduces intelligent tutoring systems. Chapter 4 explains why detection of a loss of attention is useful for a tutoring system and what such a system can do with this information, and Chapter 5 outlines the overall system. Chapter 6 is about recognizing facial expressions (and why these are used) and Chapter 7 explains how gaze direction detection works. In Chapter 8 different collaborative states are treated, and Chapter 9 explains how a system can deal with the information gathered in these three sub-systems. Chapter 10 gives some example situations.

2. BACKGROUND

According to the dictionary, attention is "Concentration of the mental powers upon an object; a close or careful observing or listening". In other words, with attention a person is focusing himself on another person or object. Focusing on something is done by our senses: seeing, hearing, tasting and smelling. But those are just the outer forms of perception and attention. People use inner perception, like the perception of feelings, emotions and thinking, as well [Akk06]. The amount of input a person receives is overwhelmingly large. That is why attention also means filtering out the important information and leaving the other (less important) information unprocessed [PLN02].

A computer system that has to detect the loss of attention cannot measure a lot of things; it cannot measure exactly what the user is thinking of, what he is feeling, or what he is listening to. However, it is possible to pick up certain cues. For example, if the user is watching something else, he will probably turn his head, and when he is listening to something else, he will probably do the same. Also, when the user is thinking of something else, he will make different facial expressions than expected.

The orientation of the head can be determined from the locations of the eyes and the tip of the nose. With simple geometry the (rough) orientation of the head can be calculated [UKM04].

The detection of facial expressions is a little bit more difficult. First the face has to be located. In [BLF03] the authors describe a fast method to detect faces in real time, and they use Gabor wavelets to find facial expressions. After that they classify the facial expressions with SVMs (Support Vector Machines) and the help of AdaBoost.

Gabor wavelets are used a lot in the recognition and classification of facial expressions. For example, [LAK98], [ZS04] and [DW05] all use Gabor wavelets to extract facial expressions.

3. INTELLIGENT TUTORING SYSTEMS

To start, what exactly is a tutoring system? According to [WTS06] an Intelligent Tutoring System (ITS) is a system that helps a student with a certain topic. This help includes lecturing, giving tasks, asking questions, explaining things and giving feedback.

In [GVR01] the authors created an ITS that can teach the fundamentals of computer hardware, the operating system and the internet to students.
The interface consists of a talking head that acts as a dialogue partner, plus figures and animations. In the dialogue between the system and the student, the system asks questions which the student has to answer. The ITS can give feedback to the user, and can explain things when the student does not understand a particular piece of information.

In [ZHE99] the authors made an ITS for medical students. In this system a physiological change is presented to the student, after which he or she has to predict the effects on seven important physiological parameters like heart rate, stroke volume, etc. When the student has made these predictions, the system starts a dialogue with him to correct the errors in these predictions.

In these two examples, letting the student do the work is very important. When the student is finished, the ITS can give feedback on the work and explain parts of the topic that are not clear yet.

4. WHY DISTRACTION DETECTION

These tutoring systems can really help, but a problem can arise if the student is not paying attention to the system. For example, the ITS is explaining something or giving feedback on a task, but the student is talking with his neighbour. Or the student is supposed to do a task, but instead he is fiddling with a pencil. Or maybe the system just asked a question and is waiting for an answer while the student is doing something else. In these situations it is useful for an ITS to know that the student is distracted. When it knows that, it can try to do something about it.

4.1 What to do with distracted students

When the student's attention has drifted away from the desired task or object, there are several things the system can do.

• Warn the user. The ITS can warn the user that his attention is not on the desired task or object, and that he or she should return to that task or object. But this method will only work if the student is obligated to work with the system. People do not like to be commanded by a computer, and if they do not like the system anymore they can just walk away.

• Ask the user to return. If the student is working with the system voluntarily, or if the ITS is simply polite, then the system can ask the student to return to the task.

• Ask the user what is wrong. If the student has his attention on something else, then maybe there is something wrong. Maybe another person is asking him a question, or maybe he has some problems with the task. If the system asks what is wrong, then it can do something with that information. It can wait until the student has answered the other person's question, or it can give him extra help with the task.

• 'Silently' asking for attention. Instead of trying to communicate with the student, the ITS can also try to get the attention with auditory and visual signals. It can give a small beep so the user knows the system is waiting, or it can put some flashing object on the screen. This method (if implemented subtly) is not as intrusive as asking or commanding the student to return to the task, but now the ITS is only giving signals. The decision rests entirely with the student.

• Stop. If the student is constantly focussing on something else, the system can decide to simply stop helping the student. But this is a harsh method, with the danger of stopping the program when the student did not do anything wrong (e.g. after several false positive distraction warnings).

• Pause. Instead of stopping the entire program, the program can also pause its current activity until the student focuses on the ITS again.

• Just continue. Instead of trying to get the attention of the student, the tutoring system can just continue with its current activity. This may work if the student is just thinking a bit about a task, or if he is answering a question while the system is waiting for a response, but since a tutoring system has to help a student it is not recommended to ignore information like a loss of attention.
These are a lot of choices, and most of them depend on many variables, both external and internal. There is no single best solution, although good solutions will probably use several methods, depending on the situation.

5. THE SYSTEM

So, the ITS should be able to detect when a student is distracted using facial expressions and gaze direction. This results in a system like the one in Figure 1. This system consists of the ITS, two subsystems (to detect gaze direction and facial expressions) and a processing unit. This unit is provided with data from the two subsystems and the collaborative state from the ITS, and from this data it produces an output that tells whether the current student is distracted or not. In the next three chapters the two subsystems and the collaborative states from the ITS will be treated.

Figure 1: a simple model of the system (gaze direction, facial expression and the collaborative state from the ITS are fed to the Processor, which outputs distraction)

6. RECOGNIZING FACIAL EXPRESSIONS

For a system that has to detect a loss of attention, it can be very useful to detect and recognize the facial expressions of the current user. For example, if the user looks sad or angry, he or she is probably thinking about something else and the computer might ask what is wrong. If the user looks away from the screen for a second, there is nothing wrong. But when that same user looks away for a second and suddenly starts laughing, then he or she is probably distracted [SHF03]. So, recognizing facial expressions is probably something the computer will use. But in order to explain how this recognition works, we have to go back to the basics: wavelets.

6.1 Wavelets

If you have a certain signal or wave, for example a sound wave, it is very costly to store and keep every detail of the signal. A better approach is to find a mathematical formula that describes the signal. An example of this approach is the Fourier transform [WFT06]. This method tries to "re-express the signal into a function in terms of sinusoidal basis functions." That means that it tries to find a function that is the sum or integral of sinusoidal functions multiplied by some coefficients, in such a way that it approximates the original signal as closely as possible. The problem with this representation is that every sinusoid extends over the whole signal, so a change in one coefficient affects the whole sample. This method therefore cannot express local features such as local minima or maxima.

Another, better approach is to use wavelets. These transformations do not only use frequency information, but also time. The sample is cut into several pieces, and the result is a series of wavelets, each for a different time frame [WWA06]. To generate the wavelets a very simple wavelet is used. This is called the 'mother wavelet'. Examples of the most commonly used mother wavelets are shown in Figure 2. These mother wavelets are scaled and translated until they match the original sample as closely as possible.

Figure 2: Three example Mother wavelets [WWA06]

6.2 Gabor Filters

Gabor wavelets are a more complex form of normal wavelets. These wavelets are composed of two parts: a complex sinusoidal carrier and a Gaussian envelope to put that carrier in [CAR06]. In other words, the wavelet takes the form

g(x, y) = s(x, y) w(x, y)

where s(x, y) is the carrier and w(x, y) is the envelope. The formula for the carrier is

s(x, y) = exp(i(2π(u0 x + v0 y) + P))

in which (u0, v0) is the spatial frequency of the sinusoid and P is its phase. The Gaussian envelope is just an ordinary Gaussian function.

These Gabor wavelets can be used as filters for images. This results in a set of filter responses, and this set can be used to extract certain features from the image, for example edges. An example of this feature extraction is shown in Figure 3. The upper row shows the Gabor filters that were used, and the two rows below that show the results of those three filters on two different facial expressions. These results contain less information because only the most important features are kept. Now they can be used for analysis and recognition.
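As an illustration of the form g(x, y) = s(x, y) w(x, y), the following is a minimal sketch in Python with NumPy, not the implementation used in the cited work. The function names `gabor_kernel` and `filter_image`, the parameters `size` and `sigma`, the isotropic (circular) Gaussian envelope and the FFT-based circular convolution are all assumptions made for this example; the sources may use elliptical, rotated envelopes and a bank of filters at several scales and orientations.

```python
import numpy as np

def gabor_kernel(size, u0, v0, phase, sigma):
    """Complex Gabor kernel g(x, y) = s(x, y) * w(x, y) on a size x size grid.

    size should be odd so that the kernel has a centre pixel.
    (u0, v0) is the carrier frequency in cycles per pixel, phase is P.
    sigma is the width of the (assumed isotropic) Gaussian envelope.
    """
    half = size // 2
    y, x = np.mgrid[-half:half + 1, -half:half + 1].astype(float)
    carrier = np.exp(1j * (2 * np.pi * (u0 * x + v0 * y) + phase))  # s(x, y)
    envelope = np.exp(-(x ** 2 + y ** 2) / (2 * sigma ** 2))        # w(x, y)
    return carrier * envelope

def filter_image(image, kernel):
    """Magnitude of the Gabor response, using circular convolution via the FFT.

    The kernel must not be larger than the image.
    """
    kh, kw = kernel.shape
    padded = np.zeros(image.shape, dtype=complex)
    padded[:kh, :kw] = kernel
    # Shift the kernel centre to the origin so the response is not displaced.
    padded = np.roll(padded, (-(kh // 2), -(kw // 2)), axis=(0, 1))
    response = np.fft.ifft2(np.fft.fft2(image) * np.fft.fft2(padded))
    return np.abs(response)
```

A filter whose carrier frequency matches a pattern in the image (for example vertical stripes with a period of four pixels and `u0 = 0.25`, `v0 = 0`) gives a strong response, while a filter with a different orientation gives a weak one. This selectivity is what makes the filtered images useful as features.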
6.3 Using the Data

Now that the facial images have gone through the Gabor filters, a new image of the face is available in which only the most important features are stored. This image now has to be used for facial expression detection. This can be done in various ways, for example using Principal Component Analysis, but this section will describe two approaches that have a better performance.

6.3.1 Support Vector Machines

This approach is used in [BLF03]. The authors trained seven SVMs, one for each expression in the following list: happiness, sadness, surprise, disgust, fear, anger, and neutral. When a Gabor image is fed to an SVM, it gives the likelihood of that image belonging to the expression the SVM was trained for. So, to recognize a facial expression, the associated Gabor-filtered image is put into the seven SVMs, and the expression that belongs to the SVM that returns the highest likelihood value is chosen.

The article also uses AdaBoost (short for Adaptive Boosting). This algorithm uses the same training set over and over and only needs a small dataset. The authors combined SVMs with AdaBoost and got even better results than with SVMs alone.

6.3.2 Convolutional Neural Networks

In this method a Convolutional Neural Network (CNN) is used to learn the differences between the Gabor images [FAS02]. Convolutional Neural Networks are a special kind of multilayered neural network [LEC06]. They are trained with a version of the back-propagation algorithm, just like normal neural networks; the difference is in their architecture. They are designed 'to recognize visual patterns directly from pixel images with minimal preprocessing'. As with the Support Vector Machines, there will be seven CNNs, each one trained for one specific expression.

7. GAZE DIRECTION

The detection and recognition of facial expressions may be useful, but another great cue to detect distraction is the gaze direction.
If the user is not looking at the screen or any other object used in the session, then there is a good chance that the user is doing or thinking about something else. To make things easier, we assume that the people working with the system do not need extra items besides the computer, because otherwise the system would also have to detect whether the user is looking at such an item or at something else.

The problem is that it is very hard to detect what the user is actually looking at, because the direction of the eyes is hard to track [UKM04]. Instead, it is easier to detect the direction of the head. In most cases these directions will be the same.

To detect the head orientation, the program first needs a face. To find one it uses a Six-Segmented-Rectangular filter (SSR filter). The result is a number of rectangles which are each divided into six segments (see Figure 4). Such a rectangle is a Face candidate if it has an eye and an eyebrow in each of the segments S1 and S3. So, it must at least satisfy the following two conditions: 'aS1 < aS2 and aS1 < aS4' and 'aS3 < aS2 and aS3 < aS6'. In these formulas 'aSx' is the average pixel value of segment x.

Figure 3: examples of responses of Gabor filters [LAK98]

Figure 4: The six segments of an SSR filter

When the Face candidates have been computed, a Support Vector Machine (SVM) is used to determine which Face candidates are real faces. A typical face pattern that is used in the SVM is shown in Figure 5.

7.1 Tracking the eyes

Next the eyes have to be found. Finding the eyes is relatively easy (they are local minima in brightness), but tracking (i.e. following) them is another story. Blinking can greatly disrupt the pattern, and templates that are updated over time will lose the eyes. To solve this problem, instead of tracking the eyes a "between-the-eyes" tracking template is used [GOR02]. This template tracks the point between the eyes, which is a bright spot (the bridge of the nose) with two dark spots (the eyes) on both sides.
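The two Face candidate conditions on the SSR segments given earlier in this chapter can be written out as a small check. This is only a sketch in Python with NumPy under stated assumptions: the text does not describe the layout of the segments, so the arrangement used here (two rows of three, S1 S2 S3 on top and S4 S5 S6 below) is an assumption, and the function names `segment_means` and `is_face_candidate` are hypothetical. A real-time implementation would also need a faster way to compute the averages than slicing every rectangle.

```python
import numpy as np

def segment_means(gray, top, left, height, width):
    """Average pixel value aS1..aS6 of the six segments of one rectangle.

    Assumed layout (not stated in the text):
        S1 S2 S3
        S4 S5 S6
    """
    rect = gray[top:top + height, left:left + width].astype(float)
    means = []
    for row in np.array_split(rect, 2, axis=0):          # upper and lower half
        for segment in np.array_split(row, 3, axis=1):   # left, middle, right
            means.append(segment.mean())
    return means

def is_face_candidate(means):
    """Check 'aS1 < aS2 and aS1 < aS4' and 'aS3 < aS2 and aS3 < aS6'."""
    a1, a2, a3, a4, _a5, a6 = means
    return bool(a1 < a2 and a1 < a4 and a3 < a2 and a3 < a6)
```

With this layout the conditions say that the two eye regions (S1 and S3) are darker than both the region between them (S2) and the regions below them (S4 and S6). Rectangles that pass this cheap test would still have to be confirmed by the SVM described above.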
With template matching, the positions of the eyes can now be tracked.

Figure 5: A typical face pattern

7.2 Tracking the nose

After locating the eyes, finding the nose is fairly easy. The tip of the nose is a bright area, and can be found below the eyes. Once the tip of the nose is found it can be tracked. This is done by searching every frame for the brightest spot in the neighbourhood of the previous location of the nose.

7.3 Face orientation

Now that the locations of the eyes and the nose are known, the direction of the head can be detected. Normally, multiple cameras are necessary to obtain a 3D picture in which depth can be measured. But this system uses only one camera. In general, on a picture of a frontal face, the nose is on a vertical line exactly between the eyes. However, when the face rotates, this line will move closer to one eye (distance q) and further away from the other (distance p). The ratio of these distances can be used to calculate the rotation, using the following formula:

r = q / (p + q) - 0.5

For a frontal face p = q, so r = 0. The sign of r gives the direction of the rotation (left or right). The advantages of this method are that it only requires one camera, and that it is fast. The face orientation can be calculated in real time because the computation fits within the time between two frames.

8. COLLABORATIVE STATES

Knowing the facial expressions and the head direction of the user is important for distraction detection, but it is not enough. For example, when the user has to answer a question, he has to focus on the keyboard to type the answer. But when the system is explaining something, the user should not look at the keyboard, because then he cannot see the program. The problem here is that whether something counts as distraction depends on the collaborative state, i.e. the state that the current activities of the system and the user are in. Different states require different forms of attention. These states are:

• Explaining. In this state the system is explaining or teaching the user something. This can be done in several ways. For example, there may be text on the screen, or there may be an animation. In any case, there is usually something on the screen that requires attention. This means that if the system is in this state, the focus of the user should be on the screen.

• Working task. In this state the system has given the user a task to do. In this case this is a 'working task', which means that it is a task that requires more work and less thinking. Most of the time the user will switch his attention between the screen (to read things) and the input devices. Things get more complicated when the user has extra material next to him, but to simplify things we will assume that the system with its input devices is all that the user needs, and that the user should have his attention on the screen or the input devices.

• Thinking task. In this task the thinking part is more important for the user than the working part. Users have to think a lot to complete the task. This means that the users will not have their attention on the screen or the input devices all the time. Maybe they stare out of the window trying to come up with a good solution. In this state facial expressions can really help, because it must be clear that the user is really thinking about the task.

• Pause. In this state the user has notified the system that he wants a break or something similar, and the attention of the user can be on anything.

9. PROCESSING THE DATA

Now that the subsystems have collected the necessary data, it is time to combine all the data and try to find out if the student is distracted. This is done in the Processor unit (see Figure 1). But data from one separate moment is not enough. You cannot conclude anything from knowing that the user looked away at one specific time; maybe it was only for one second. That is why data over time has to be analyzed.
This time will be the last X seconds, where X has to be determined by tests and depends on the environment and the tasks. This results in the following input for the Processor:

• The current collaborative state.

• The gaze directions of the last X seconds.

• The facial expressions of the last X seconds.

From this data the Processor must now say whether the student is distracted or not (or give a certain probability that the student is distracted). The most logical choice would be to include some kind of learning system that can deal with the input. An important aspect of these systems is that the data is spread over time. The following learning methods are probably able to deal with the data [ALP04]:

• Hidden Markov Models (HMMs). These are models in which the states are not observable. But every state emits observations with a certain probability, and these observations can be seen. The structure of the model however is unknown. Every state also has transitions to other states. These transitions also have a certain probability. HMMs are often used in linguistics. In speech recognition HMMs are used to find words in series of phonemes. That is exactly the strong point of HMMs: they handle series of observations well, so the data over time that the Processor receives should not be a problem.

• Time Delay Neural Networks (TDNNs). Normal Neural Networks (NNs) consist of perceptrons, small basic processing units. With these perceptrons the NN gives a weight to each one of the input variables. In the training phase the output of the NN is compared to the desired output, and the weights of each of the input variables are changed accordingly. In the end (hopefully) a model with weight factors exists that can predict the correct output. But a normal Neural Network cannot handle data over time. In Time Delay Neural Networks previous inputs are delayed. After a certain number of delays all the data (so including the data from the past) is fed to the TDNN as one group of data. The only limitation is that the time window has to be determined beforehand.

• Recurrent Neural Networks (RNNs). In Recurrent Neural Networks the network does not only consist of feedforward connections, but also has self-connections and connections to units in the previous layers. This recurrency gives the model a short-term memory, which can be used to handle the data over time that the Processor receives. The limitation here is that training an RNN is very complex and requires large amounts of space.

These are three possible solutions for the learning system. It is very hard to say beforehand which solution is the best, although the HMM is probably the simplest model.

10. EXAMPLE SITUATIONS

This paper is mainly about using facial expressions to recognize a loss of attention. Normally, only the gaze direction is used to detect if a person is distracted and looking at something other than he or she should [UKM04]. So why add facial expression detection? It adds a lot of extra computation time and makes the detection program a lot more complex. To make clear that facial expressions can help, several examples of distraction are given below. There are also examples of detecting distraction in general (so not specifically with facial expressions).

• Looking away. If the system is in the Explaining state or in the Working task state and the user is looking away, then there is a very good chance that the user is distracted. In those collaborative states it is required that the user has his focus of attention on the screen or the input devices. But the problem is that you cannot expect a user to look at those two objects all the time. People look at other objects once in a while, maybe to relax their eyes for several seconds, or because they suddenly see something. This does not always mean that the user is distracted. However, there are other cues that can help in this situation, for example time.
It is no big deal if the user looks away for several seconds, but if it takes too long then the person is probably not paying attention. Another cue is what his current focus of attention is. If that is a fixed object (i.e. his head orientation stays the same) there is a chance he is just relaxing his eyes (more on this point later), but if the user is looking around and focussing on everything except the screen and the input devices, then he is probably bored and distracted.

• Looking at a fixed object. A user looking at a fixed object that is not the screen or any input device can also be distracted (we already assumed that there will not be extra items to work with), but maybe it is just a minor distraction that does not really matter to the system. In this case facial expressions can help. If the user has a neutral face then it is hard to detect anything. But if the user has a very strong facial expression (e.g. a large grin, surprise, anger, etc.) then there is probably something else happening that is distracting him. With a neutral face he could just be thinking about the assignment (this will probably happen a lot in the Thinking task state of the system).

• Talking. When detecting facial expressions, it is very easy to also detect whether the user is talking (a lot of different mouth movements in a short time interval). This is a great cue to detect loss of attention. The user can look at the screen and at the same time talk to a person standing next to him while the computer system is explaining something. Then the user is clearly not paying attention. Of course this can also be detected with a microphone, but in a crowded environment the system does not know who is talking.

These are just some examples of situations in which the system can detect distraction. The system proposed in this paper has to learn these cases by itself, and this can vary per system and environment.

11. DISCUSSION

There are two major concerns about this system. One, it is only theoretical. It has not been built, and there is no absolute evidence that it will work as it should. However, I think that everything is explained well enough, and that enough arguments have been given, to make it plausible that the system will work.

The second major concern is that the two subsystems, one for gaze direction and one for facial expressions, have only been tested inside laboratories (to my knowledge). This means that the quality of the subsystems is not guaranteed in real situations. But the given solutions are not the only available solutions. If another method to detect facial expressions or gaze direction is available that works better than the one given in this paper, it is very easy to substitute that method in this system. The methods given here are just recent methods that are very promising.

These are the two major concerns about this system. However, this does not mean that apart from these concerns the system is perfectly sound. Maybe facial expressions do not contribute that much to the quality of distraction recognition. And maybe there are easier methods to detect a loss of attention. But more can be said when the system is actually built.

12. FUTURE WORK

Of course the first thing to do is to implement this system to see how it performs. Depending on the results, several improvements can be made. The quality of the subsystems should be tested, and if they are not good enough they should be replaced by better methods. After the implementation the best learning method should be determined too. This paper gives several learning methods, but it is very hard to predict which method works best. This should be tested.

CONCLUSIONS

This paper should give a very clear idea of what a distraction detection system in an Intelligent Tutoring System consists of. Facial expressions are detected using one camera and Gabor filters, and the expressions are recognized by Support Vector Machines.
Gaze direction is detected in real time, also with one camera, using simple geometry. This data, plus the collaborative state that the system and the user are in, is fed to a learning system which will tell the ITS whether the student is distracted or not.

REFERENCES

[ALP04] Alpaydin, E., Introduction to Machine Learning, the MIT Press, October 2004, ISBN 0-262-01211-1

[Akk06] Akker, H.J.A. op den, State-of-the-art overview – Recognition of Attentional Cues in Meetings, Augmented Multi-party Interaction with Distance Access, http://www.amidaproject.org/stateoftheart, 2006

[BLF03] Bartlett, M.S., Littlewort, G., Fasel, I.R. and Movellan, J.R., Real time face detection and facial expression recognition: Development and applications to human computer interaction. In IEEE International Conference on Computer Vision and Pattern Recognition, Madison, WI, 2003

[CAR06] Carr, D., Iris Recognition: Gabor Filtering, retrieved March 9, 2006, from http://cnx.org/content/m12493/latest/

[DW05] Du, S. and Ward, R., Statistical Non-Uniform Sampling of Gabor Wavelet Coefficients for Face Recognition, Acoustics, Speech, and Signal Processing, 2005. Proceedings. (ICASSP '05). IEEE International Conference on, Volume 2, p. 73-76, 2005

[FAS02] Fasel, B., Robust Face Analysis using Convolutional Neural Networks, 16th International Conference on Pattern Recognition (ICPR'02) - Volume 2, p. 20040, 2002

[GVR01] Graesser, A.C., VanLehn, K., Rosé, C.P., Jordan, P.W., Harter, D., Intelligent tutoring systems with conversational dialogue. AI Magazine, 2001. 22(4)

[LAK98] Lyons, M.J., Akamatsu, S., Kamachi, M. and Gyoba, J., Coding Facial Expressions with Gabor Wavelets, Proceedings, Third IEEE International Conference on Automatic Face and Gesture Recognition, April 14-16 1998, Nara Japan, IEEE Computer Society, pp. 200-205

[LEC06] LeCun, Y., LeNet-5, convolutional neural networks, retrieved March 24, 2006, from http://yann.lecun.com/exdb/lenet/index.html

[PLN02] Parkhurst, D., Law, K. and Niebur, E., Modeling the role of salience in the allocation of overt visual attention, Vision Research, 42:107-123, 2002

[SHF03] Sarrafzadeh, A., Hosseini, H.G., Fan, C., Overmyer, S.P., Facial Expression Analysis for Estimating Learner's Emotional State in Intelligent Tutoring Systems, Third IEEE International Conference on Advanced Learning Technologies 2003 (ICALT'03), p. 336

[UKM04] Akira Utsumi, Shinjiro Kawato, Kenji Susami, Noriaki Kuwahara and Kazuhiro Kuwabara. Face-orientation Detection and Monitoring for Networked Interaction Therapy. In Proc. of SCIS & ISIS 2004, Sep 2004

[WFT06] Wikipedia, the free online encyclopedia, Fourier Transforms, retrieved March 9, 2006, from http://en.wikipedia.org/wiki/Fourier_transform

[WTS06] Wikipedia, the free online encyclopedia, Intelligent Tutoring Systems, retrieved March 10, 2006, from http://en.wikipedia.org/wiki/Intelligent_tutoring_system

[WWA06] Wikipedia, the free online encyclopedia, Wavelets, retrieved March 9, 2006, from http://en.wikipedia.org/wiki/Wavelet

[ZHE99] Zhou, Y. and Evens, M., A practical student model in an intelligent tutoring system, Proc. 11th Intl. Conf. on Tools with Artificial Intelligence, pages 13-18, Chicago, IL, USA, November 1999

[ZS04] Ye, J., Zhan, Y. and Song, S., Facial expression features extraction based on Gabor wavelet transformation, Systems, Man and Cybernetics, 2004 IEEE International Conference on, Volume 3, 10-13 Oct. 2004, pages 2215-2219