Biological effects from exposure to electromagnetic radiation emitted

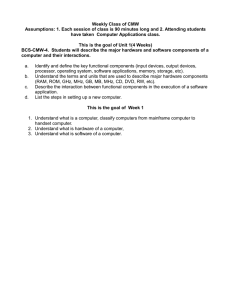
