Techniques for the construction of robust regression designs

advertisement

The Canadian Journal of Statistics

Vol. 41, No. 4, 2013, Pages 679–695

La revue canadienne de statistique

679

Techniques for the construction of robust

regression designs

Maryam DAEMI* and Douglas P. WIENS

Department of Mathematical and Statistical Sciences, University of Alberta, Edmonton, Alberta, Canada

T6G 2G1

Key words and phrases: Alphabetic optimality; approximate straight line regression; bias; invariance;

minimax; approximate quadratic regression.

MSC 2010:: Primary 62K05, 62G35; secondary 62J05

Abstract: The authors review and extend the literature on robust regression designs. Even for straight line regression, there are cases in which the optimally robust designs—in a minimax mean squared error sense, with

the maximum evaluated as the “true” model varies over a neighbourhood of that fitted by the experimenter—

have not yet been constructed. They fill this gap in the literature, and in so doing introduce a method of

construction that is conceptually and mathematically simpler than the sole competing method. The technique

used injects additional insight into the structure of the solutions. In the cases that the optimality criteria employed result in designs that are not invariant under changes in the design space, their methods also allow for

an investigation of the resulting changes in the designs. The Canadian Journal of Statistics 41: 679–695; 2013

© 2013 Statistical Society of Canada

Résumé: Les auteurs examinent la littérature en matière de plans expérimentaux robustes pour la régression

et y contribuent. Même pour la régression linéaire, il existe des cas où les plans robustes optimaux – dans

le sens d’erreur quadratique moyenne minimax dont le maximum évalué au « vrai modèle » varie dans

un voisinage de celui ajusté à l’origine – n’ont pas encore été élaborés. Les auteurs comblent cette lacune

en présentant une méthode d’élaboration plus simple, sur les plans conceptuel et mathématique, que la

seule autre méthode existante. La technique utilisée donne une nouvelle perspective sur la structure des

solutions. Lorsque les critères d’optimalité choisis mènent à des plans qui ne sont pas invariants en présence

de changements dans l’espace d’échantillonnage, les méthodes proposées permettent aussi un examen de

ces changements dans les plans. La revue canadienne de statistique 41: 679–695; 2013 © 2013 Société

statistique du Canada

1. INTRODUCTION AND SUMMARY

Consider an experiment designed to investigate the relationship between the amount (x) of a

certain chemical compound used as the input in an industrial process, and the output (Y ) of

the process. The relationship is thought to be linear in x; if this supposition is exactly true then

the design that minimizes the variances of the regression parameter estimates, or the maximum

variance of the predictions, places half of the observations at each endpoint of the interval of input

values of interest. It has however long been known (Box & Draper 1959, Huber 1975) that even

seemingly small deviations from linearity can introduce biases so large as to destroy the optimality

of this classical design, if mean squared error, rather than variance, becomes the measure of design

quality.

In Section 2 of this article we review existing results from the literature on robustness of

design, in the context of the most common formulation of “approximate linear regression.” In this

formulation one allows the “true” regression response to vary over a neighbourhood of that which

* Author to whom correspondence may be addressed.

E-mail: daemi@ualberta.ca

© 2013 Statistical Society of Canada / Société statistique du Canada

680

DAEMI AND WIENS

Vol. 41, No. 4

is fitted by the experimenter. The optimally robust design is then one that minimizes the maximum

of some scalar-valued function of the mean squared error (mse) matrix of the estimates. Common

functions are the trace, determinant or maximum eigenvalue of the mse matrix of the parameter

estimates, and the integrated mse of the predictions—these correspond respectively to the A-, D-,

E-, and I-optimality criteria in classical design theory, where the same functions are applied to the

covariance matrix. In each of these cases one typically finds that the optimally robust design must

minimize the maximum of several functions of the mse matrix. A simple and sometimes effective

method to proceed is then to find the design minimizing a chosen one of these functions, and to

verify that the value of this function, evaluated at the putatively optimal design, is indeed the maximum. We call this a “pure” strategy. When it fails—as it does in a great many common instances,

including robust A- and E-optimality—the literature to this point is almost silent. The sole exception is Shi, Ye, & Zhou (2003), who note that the maximum of several differentiable loss functions

is itself not necessarily differentiable, and go on to apply methods of nonsmooth optimization to

obtain a description of the minimizing designs, with an application to quadratic regression.

It is our aim here to introduce a technique that is conceptually and mathematically simpler

than that of Shi, Ye, & Zhou (2003), and to illustrate its application in the contexts of robust

A- and E-optimality for straight line regression, and robust I-optimality for quadratic regression.

The method is described in Section 3, with examples and computational aspects in Section 4.

The technique used injects additional insight into the structure of the solutions. In addition, we

discuss the changes in the A- and E-optimal designs as the design space changes—even under the

classical criteria these designs are not invariant under transformations of the design space.

Regression responses which are approximately linear, but with a more involved structure than

is used in our examples may, at the cost of greater but similar computational complexity, be

handled in the same manner as illustrated here.

2. ROBUST REGRESSION DESIGNS

Suppose that the experimenter intends to make n observations on a random response variable

Y , at several values of a p-vector f (x) of regressors. Each element of f (x) is a function of q

functionally independent variables x = (x1 , . . . , xq ) , with x to be chosen from a design space X .

The fitted response is f (x)θ. If however this response is recognized as possibly only approximate:

E[Y (x)] ≈ f (x)θ

for a parameter θ whose interpretation is now in doubt, then one might define this target parameter

by

θ = arg min (E[Y (x)] − f (x)η)2 dx

(1)

η

X

and then define

ψ(x) = E[Y (x)] − f (x)θ.

(2)

This results in the class of responses

E[Y (x)] = f (x)θ + ψ(x)

with—by virtue of (1)—ψ satisfying the orthogonality requirement

f (x)ψ(x) dx = 0.

X

The Canadian Journal of Statistics / La revue canadienne de statistique

(3)

DOI: 10.1002/cjs

2013

CONSTRUCTION OF ROBUST DESIGNS

681

Under the very mild assumption that the matrix

f (x)f (x) dx

A=

X

be invertible, the parameter defined by (2) and (3) is unique.

We identify a design, denoted ξ, with its design measure—a probability measure ξ(dx) on X .

If ni of the n observations are to be made at xi we also write ξi = ξ(xi ) = ni /n. Define

M ξ = X f (x)f (x)ξ(dx),

bψ,ξ = X f (x)ψ(x)ξ(dx)

and assume that M ξ is invertible. The covariance matrix of the least squares estimator (lse) θ̂,

assuming homoscedastic errors with variance σε2 , is (σε2 /n)M −1

ξ , and the bias is E[θ̂ − θ] =

M −1

ξ bψ,ξ ; together these yield the mean squared error matrix

σ2

−1

mse θ̂ = ε M −1

+ M −1

ξ bψ,ξ bψ,ξ M ξ

n ξ

of the parameter estimates, whence the mse of the fitted values Ŷ (x) = f (x)θ̂ is

mse[Ŷ (x)] =

σε2 −1

2

f (x)M −1

ξ f (x) + (f (x)M ξ bψ,ξ ) .

n

Loss functions that are commonly employed, and that correspond to the classical alphabetic

optimality criteria, are the trace, determinant, and maximum characteristic root of mse[θ̂], and

the integrated mse of the predictions:

σε2

−2

tr M −1

ξ + bψ,ξ M ξ bψ,ξ ,

n

⎞1/p

⎛

2

−1

σε

2

1

+

b

M

b

ξ

ψ,ξ

ψ,ξ n

σ ⎜

⎟

LD (ξ|ψ) = (det mse[θ̂])1/p = ε ⎝

⎠ ,

n

det M ξ

LA (ξ|ψ) = tr mse[θ̂] =

(4a)

(4b)

LE (ξ|ψ) = chmax mse[θ̂] = sup mse[c θ̂],

LI (ξ|ψ) =

X

(4c)

c=1

σ2 −1

+ bψ,ξ M −1

mse[Ŷ (x)] dx = ε tr AM −1

ξ

ξ AM ξ bψ,ξ +

n

X

ψ2 (x) dx.

(4d)

The dependence on ψ is eliminated by adopting a minimax approach, according to which one

maximizes (4) over a neighbourhood of the assumed response. This neighbourhood is constrained

by (3) and by a bound

X

ψ2 (x) dx ≤

τ2

n

(5)

for a given constant τ. That the bound in (5) be O(n−1 ) is required for a sensible asymptotic

treatment based on the mse—it forces the bias of the estimates to decrease at the same rate as

their standard error. Of course if n is fixed it can be absorbed into τ.

DOI: 10.1002/cjs

The Canadian Journal of Statistics / La revue canadienne de statistique

682

DAEMI AND WIENS

Vol. 41, No. 4

Although other neighbourhood structures are possible—see for instance Marcus & Sacks

(1976), Pesotchinsky (1982), and Li & Notz (1982)—that outlined above, a simplified version of

which was introduced by Huber (1975) in the context of approximate straight line regression and

which was then extended by Wiens (1992), has become the most common. Huber (1975) also

discusses and compares various approaches to model robustness in design.

A virtue of the neighbourhood given by (3) and (5) is its breadth, allowing for the modelling

of very general types of alternative responses. This breadth however leads to the complicating

factor that the maximum loss, over , of any implementable, hence discrete, design is necessarily

infinite; this leads to the derivation of absolutely continuous design measures and their subsequent approximation—see the discussion in Wiens (1992), where the comment is made that “Our

attitude is that an approximation to a design which is robust against more realistic alternatives

is preferable to an exact solution in a neighbourhood which is unrealistically sparse.”

If X is an interval, as will be assumed in our examples, then the implementation placing one

observation at each of the quantiles

−1 i − 1/2

xi = ξ

, i = 1, . . . , n,

(6)

n

converges weakly to ξ and is the n-point design closest to ξ in Kolmogorov distance (Fang &

Wang 1994). Of course one might elect to replicate the design at a smaller number of quantiles,

for instance to test for heteroscedasticity. Daemi (2012) discusses other possibilities.

To describe the maximized loss functions max L(ξ|ψ), let m(x) be the density of ξ and define

matrices

H ξ = M ξ A−1 M ξ ,

Kξ =

f (x)f (x)m2 (x) dx

X

and

Gξ = Kξ − H ξ =

X

[(m(x)I p − M ξ A−1 )f (x)][(m(x)I p − M ξ A−1 )f (x)] dx.

Wiens (1992), with further details in Heo, Schmuland, & Wiens (2001), shows that the maximum

losses are attained in a certain “least favourable” class {ψβ |β = 1} in which bψ,ξ = bψβ ,ξ =

1/2

√τ G

β. The maximization of the losses in (4) now requires only that they be evaluated at bψβ ,ξ ,

n ξ

and that the resulting quadratic forms in β then be maximized over the unit sphere. Define

ν=

τ2

∈ [0, 1],

σε2 + τ 2

representing the relative importance, to the experimenter, of errors due to bias rather than to

variance. Then the maximum values of LA , LD , LE , and LI , respectively are (σε2 + τ 2 )/n times

−1

−1

lA (ξ) = (1 − ν)tr M −1

ξ + νchmax M ξ Gξ M ξ ,

1/p

ν

1 + 1−ν

chmax M −1

ξ Gξ

lD (ξ) = (1 − ν)

,

det M ξ

−1

−1 lE (ξ) = chmax (1 − ν)M −1

ξ + νM ξ Gξ M ξ ,

−1

lI (ξ) = (1 − ν)tr AM −1

ξ + νchmax K ξ H ξ .

The Canadian Journal of Statistics / La revue canadienne de statistique

(7a)

(7b)

(7c)

(7d)

DOI: 10.1002/cjs

2013

CONSTRUCTION OF ROBUST DESIGNS

683

These maxima are presented in complete generality—they hold for any independent variables

x and any vector f (x) of regressors. In contrast the continuation of the minimax problem—

minimizing l(ξ)—is highly dependent on the form of the model being fitted. Except in some

simple cases it leads to substantial numerical work.

In the following examples we use the definitions

μj =

xj m(x) dx,

X

κj =

X

xj m2 (x) dx.

Example 1. We illustrate some of the issues in the case of straight line regression (f (x) = (1, x) )

over an interval X = [−1/2, 1/2], under the greatly simplifying but realistic requirement that the

design be symmetric. Then A, M ξ , Kξ , and H ξ are diagonal matrices, with diagonals (1, 1/12),

(1, μ2 ), (κ0 , κ2 ), and (1, 12μ22 ), respectively. We find that

1

κ2

+ ν max κ0 − 1, 2 − 12 ,

lA (ξ) = (1 − ν) 1 +

(8a)

μ2

μ2

⎞1/2

⎛

ν

1 + 1−ν

max κ0 − 1, μκ22 − 12μ2

⎠ ,

lD (ξ) = (1 − ν) ⎝

(8b)

μ2

(1 − ν)

κ2

lE (ξ) = max (1 − ν) + ν(κ0 − 1),

+ν

−

12

,

μ2

μ22

1

κ2

lI (ξ) = (1 − ν) 1 +

+ ν max κ0 ,

.

12μ2

12μ22

(8c)

(8d)

Note that in each of these cases, the maximized loss is of the form

l(ξ) = max{l1 (ξ), l2 (ξ)}.

(9)

The “pure” strategy referred to in Section 1 would have the designer choose one of l1 (ξ), l2 (ξ)—for

definiteness, suppose he chooses l1 (ξ)—and find a minimizing design ξ1 . If it can then be verified

that

l1 (ξ1 ) ≥ l2 (ξ1 ),

(10)

then the minimax design has been found: for any other design ξ we have

l(ξ) = max{l1 (ξ), l2 (ξ)} ≥ l1 (ξ) ≥ l1 (ξ1 ) = max{l1 (ξ1 ), l2 (ξ1 )} = l(ξ1 ).

If (10) fails, then the designer instead finds ξ2 minimizing l2 (ξ) and hopes to verify that

l2 (ξ2 ) ≥ l1 (ξ2 ).

(11)

For lI , Huber (1975) carried out this process and verified that ξ1 is the minimax design. This was

extended to lD by Wiens (1992), who also found that for lA and lE the strategy succeeds only

for ν ≤ νA = 0.692 and ν ≤ νE = 0.997, respectively. For these values of ν the designs ξ2 are

minimax; for larger values both inequalities (10) and (11) fail.

DOI: 10.1002/cjs

The Canadian Journal of Statistics / La revue canadienne de statistique

684

DAEMI AND WIENS

Vol. 41, No. 4

Those A- and E-minimax designs that have been obtained from the pure strategy will be

illustrated in Section 4, where they will be exhibited as special instances of the more general

strategy to be introduced in the next section. In the examples of Section 4 we also present the

minimax designs for the remaining values of ν and discuss the designs for more general design

spaces X = [−T, T ].

Example 2. For quadratic regression (f (x) = (1, x, x2 ) ) over X = [−1/2, 1/2], again assuming symmetry, the pure strategy fails drastically. For instance in the case of robust I-optimality,

we have

⎞

⎞

⎛

⎞

⎛

⎛

1

0 1/12

1 0 μ2

κ0 0 κ2

⎟

⎟

⎜

⎟

⎜

⎜

A = ⎝ 0 1/12 0 ⎠ , M ξ = ⎝ 0 μ2 0 ⎠ , Kξ = ⎝ 0 κ2 0 ⎠ ,

1/12 0 1/80

μ2 0 μ4

κ2 0 κ4

whence

1

240μ22 − 40μ2 + 3 def

+

= 1 + θ0 (ξ).

12μ2

240(μ4 − μ22 )

tr AM −1

ξ =1+

We calculate that

⎛

⎜

Kξ H −1

ξ =⎝

θ 1 κ 0 + θ 2 κ 2 0 θ 2 κ0 + θ 3 κ 2

0

κ2 θ4

0

⎞

⎟

⎠

θ1 κ2 + θ2 κ4 0 θ2 κ2 + θ3 κ4

with θj = θj (ξ), j = 1, 2, 3, 4, given by

θ1 =

240μ24 − 40μ2 μ4 − 3μ22

,

240(μ4 − μ22 )2

θ2 =

20μ22 + 20μ4 − 240μ2 μ4 − 3μ2

,

240(μ4 − μ22 )2

θ3 =

240μ22 − 40μ2 + 3

,

240(μ4 − μ22 )2

θ4 =

1

.

12μ22

The characteristic roots of Kξ H −1

ξ are

ρ1 (ξ) = θ4 κ2

and the two characteristic roots of

θ 1 κ 0 + θ 2 κ 2 θ2 κ 0 + θ 3 κ 2

θ 1 κ 2 + θ 2 κ 4 θ 2 κ 2 + θ 3 κ4

.

Of these two roots, one is uniformly larger than the other, and is

θ 1 κ0 + θ 3 κ4

ρ2 (ξ) = θ2 κ2 +

+

2

θ 1 κ0 − θ 3 κ4

2

1/2

2

+ (θ2 κ0 + θ3 κ2 )(θ1 κ2 + θ2 κ4 )

The Canadian Journal of Statistics / La revue canadienne de statistique

.

DOI: 10.1002/cjs

2013

CONSTRUCTION OF ROBUST DESIGNS

685

(The third root has a minus sign in front of the radical.) Thus the loss is again of the form (9),

with

l1 (ξ) = (1 − ν)(1 + θ0 (ξ)) + νρ1 (ξ),

l2 (ξ) = (1 − ν)(1 + θ0 (ξ)) + νρ2 (ξ).

Further investigation reveals that the pure strategy fails for all ν ∈ (0, 1). Some details are in

Heo (1998). We return to this example in Section 3.3.

3. A GENERAL CONSTRUCTION STRATEGY

In Examples 1 and 2 of Section 2, in the cases in which minimizing designs have been obtained,

they were found by introducing Lagrange multipliers to handle the various constraints, and then

using variational methods to solve the ensuing unconstrained problems. We will continue this

approach, but apply it in the context of the following theorem, whose proof is in the Appendix.

Theorem 1. Given loss functions {lj (ξ)}Jj=1 depending on designs ξ, a minimax design ξ∗ ,

minimizing the loss

l(ξ) = max {lj (ξ)},

1≤j≤J

can be obtained as follows. Partition the class of designs as = ∪Jk=1 k , where

.

k = ξ lk ξ = max lj ξ

1≤j≤J

For each j ∈ {1, . . . , J} define ξj to be the minimizer of lj (ξ) in j . Then ξ∗ is the design ξj∗ for

which j ∗ = arg min1≤j≤J {lj (ξj )}.

While it would be possible to write down general expressions for the form of ξ∗ resulting from

Theorem 1, it is simpler to take advantage of the structure of particular cases.

3.1. Straight Line Regression; A-optimality

As in Example 1 of Section 2, we have

1

l1 (ξ) = (1 − ν) 1 +

+ ν(κ0 − 1),

μ2

κ2

1

l2 (ξ) = (1 − ν) 1 +

+ν

− 12 .

μ2

μ22

(12a)

(12b)

−1

The eigenvalues of M −1

ξ Gξ M ξ appearing in (7a), (8a), and (12) are

κ0 − 1 =

κ2

− 12 =

μ22

DOI: 10.1002/cjs

1/2

−1/2

1/2

−1/2

(m(x) − 1)2 dx,

x2

m(x)

− 12

μ2

(13a)

2

dx.

(13b)

The Canadian Journal of Statistics / La revue canadienne de statistique

686

DAEMI AND WIENS

Vol. 41, No. 4

It is simplest to first optimize subject to μ2 being fixed. Thus we first find ξ1 , with density

m1 = arg min

1/2

−1/2

(m(x) − 1)2 dx,

determined subject to

1/2

= 1,

−1/2 m(x) dx

1/2

1/2

−1/2

−1/2 x

(m(x) − 1)2

2 m(x) dx

− x2

m(x)

μ2

= μ2 ,

− 12

2

− δ2

dx = 0,

where δ is a slack variable. By the theory of Lagrange multipliers (Pierre 1986, chapter 2 for

instance), we may instead consider the unconstrained problem of minimizing

⎤

(m(x) − 1)2 − 2λ1 m(x) − 2λ2 x2 m(x)

2 ⎦ dx

⎣

(m; λ1 , λ2 , λ3 ) =

2 − x2 m(x) − 12

(m(x)

−

1)

+λ

−1/2

3

μ2

⎡

1/2

with the multipliers determined by the side conditions (and (μ2 , δ) chosen to minimize the resulting

loss).

It is sufficient to minimize the integrand of pointwise over m(x) ≥ 0 (the requirement of

symmetry turns out to be satisfied unconditionally); this yields the minimizing density

m1 (x) =

a1 x 2 + b 1

c1 x 2 + d 1

+

(14)

(the positive part), where

a1 = λ2 − 12λ3 /μ2 ,

b1 = 1 + λ 1 + λ 3 ,

c1 = −λ3 /μ22 ,

d1 = 1 + λ 3 .

The significance of the current approach is that we now know that m1 (x) is of the form (14).

Note that this density is overparameterized—if a1 = 0 we can divide all constants by it, thus

obtaining the density

m(x; b, c, d) =

x2 + b

cx2 + d

+

;

(15)

if a1 = 0 then (14) is a limiting version of (15). The densities minimizing l1 (ξ) and l2 (ξ)

unconditionally are of the form (15), with c = 0 and d = 0, respectively.

A parallel development, minimizing l2 (ξ), shows that the minimizing density m2 (x) is also of

the form (15). Thus it is sufficient to restrict attention to the class of designs ξ with densities

of this form.

For the numerical work, it is now more convenient to proceed directly, and employ a numerical minimizer to find constants b∗ , c∗ , d∗ for which the design ξ∗ , with density m(x; b∗ , c∗ , d∗ )

The Canadian Journal of Statistics / La revue canadienne de statistique

DOI: 10.1002/cjs

2013

CONSTRUCTION OF ROBUST DESIGNS

687

minimizes l(ξ) in . The computational details, and the end results of this process, are discussed

in Section 4.

3.2. Straight Line Regression; E-optimality

The development for E-optimality is completely analogous to that for A-optimality, the only

difference being that the losses (12) are to be replaced by

l1 (ξ) = 1 − ν + ν(κ0 − 1),

1−ν

κ2

l2 (ξ) =

+ν

−

12

.

μ2

μ22

(16a)

(16b)

A consequence is that in the range ν ∈ [0, νA ], for which the pure strategy succeeds and

yields an A-optimal design ξ∗ minimizing l2 (ξ) and satisfying l2 (ξ∗ ) > l1 (ξ∗ ), this design is also

E-optimal. To see this denote the losses (12) by l1,A , l2,A and the losses (16) by l1,E , l2,E . Since

l2,E (ξ) = l2,A (ξ) − (1 − ν), ξ∗ also minimizes l2,E (ξ). Since l2,A (ξ∗ ) > l1,A (ξ∗ ) we have that

κ2 (ξ∗ )

− 12 > κ0 (ξ∗ ) − 1.

μ22 (ξ∗ )

(17)

But for any design on χ = [−1/2, 1/2],

1−ν

> 1 − ν.

μ2 (ξ)

(18)

Combining (17) and (18) we have that l2,E (ξ∗ ) > l1,E (ξ∗ ) and so the pure strategy also yields the

E-optimality of ξ∗ .

3.3. Quadratic Regression; I-optimality

Recall Example 2 of Section 2. We carry out the minimization of l1 (ξ) subject to l1 (ξ) ≥ l2 (ξ),

and of l2 (ξ) subject to l2 (ξ) ≥ l1 (ξ), initially for fixed values of μ2 (ξ) and μ4 (ξ). This fixes all θj ,

j = 0, . . . , 4, and so converts the inequalities between l1 and l2 into inequalities between ρ1 and

ρ2 . Thus for the first of these minimizations we solve

m1 = arg min

1/2

−1/2

x2 m2 (x) dx,

(19a)

subject to

1/2

m(x) dx = 1,

(19b)

x2 m(x) dx = μ2 ,

(19c)

x4 m(x) dx = μ4 ,

(19d)

ρ1 (ξ) − ρ2 (ξ) − δ2 = 0

(19e)

−1/2

1/2

−1/2

1/2

−1/2

for a slack variable δ.

DOI: 10.1002/cjs

The Canadian Journal of Statistics / La revue canadienne de statistique

688

DAEMI AND WIENS

Vol. 41, No. 4

For any design ξ with density m, define

ξ(t) = (1 − t)ξ1 + tξ,

0≤t≤1

with density m(t) (x) = (1 − t)m1 (x) + tm(x). To solve (19) it is sufficient to find ξ1 for which

(t; λ1 , λ2 , λ3 , λ4 ) =

1/2

−1/2

x2 m2(t) (x) − 2λ1 m(t) (x) − 2λ2 x2 m(t) (x) − 2λ3 x4 m(t) (x) dx

− λ4 {ρ1 (ξ(t) ) − ρ2 (ξ(t) )}

is minimized at t = 0 for each absolutely continuous ξ, and satisfies the side conditions.

The first order condition is

1/2

0 ≤ (0; λ1 , λ2 , λ3 , λ4 ) = 2

[{d1 + f1 x2 + e1 x4 }m1 (x)

−1/2

−{a1 + b1 x2 + c1 x4 }](m1 (x) − m(x)) dx

for all m(·), where in terms of

θ 1 κ0 − θ 3 κ4

,

2

K2 = θ2 κ0 + θ3 κ2 ,

K1 =

K 3 = θ 1 κ 2 + θ 2 κ4 ,

K = K12 + K2 K3 ,

all evaluated at ξ1 , we have defined a1 = λ1 , b1 = λ2 , c1 = λ3 and

θ1

K1

d1 = λ4

1+ √

,

2

K

(K2 θ1 + K3 θ2 )

√

f1 = 1 − λ4 θ4 − θ2 −

,

2 K

e1 =

λ4 ((K3 − K1 )θ3 + K2 θ2 )

√

.

2 K

It follows that m1 (x) is necessarily of the form

m1 (x) =

a1 + b 1 x 2 + c 1 x 4

d1 + f 1 x 2 + e 1 x 4

+

.

A similar calculation shows that m2 (x) is also of this form. Arguing as in Section 3.1 we consider

densities of the form

+

a + bx2 + cx4

m(x; a, b, c, d, e) =

,

(20)

d + x2 + ex4

and will, in Section 4, find constants a∗ , b∗ , c∗ , d∗ , e∗ for which the design ξ∗ , with density

m(x; a∗ , b∗ , c∗ , d∗ , e∗ ), minimizes the loss (9) in the class of designs with densities of the form

(20). That the density should be of this form was also noted by Shi, Ye, & Zhou (2003) using

The Canadian Journal of Statistics / La revue canadienne de statistique

DOI: 10.1002/cjs

CONSTRUCTION OF ROBUST DESIGNS

8

6

λ

2

4

2

0

689

8

λ1

minmax loss

comparative eigenvalues

2013

0

0.5

(a)

6

4

2

0

1

3

0

0.5

(b)

1

3

2

m(x)

m(x)

ν = 0.25

1

0

−0.5

0

(c)

0

−0.5

0.5

m(x)

m(x)

0

(d)

0.5

3

ν = 0.75

1

0

−0.5

ν = 0.5

1

3

2

2

2

1

0

(e)

0.5

0

−0.5

ν = 0.998

0

(f)

0.5

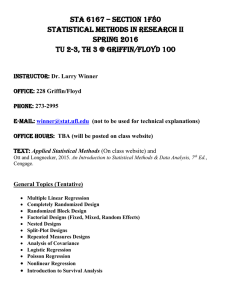

Figure 1: A-minimax designs ξ∗ for approximate straight line regression. (a) Eigenvalues (λ1 (ξ∗ ), λ2 (ξ∗ ))

versus ν; (b) Loss l(ξ∗ ) versus ν; (c)–(f) minimax densities m∗ (x) and 10-point implementations for

selected values of ν. [Colour figure can be seen in the online version of this article, available at

http://wileyonlinelibrary.com/journal/cjs]

methods of nonsmooth analysis, and in particular the Lagrange multiplier rule for nonsmooth

optimization (Clarke, 1983).

4. EXAMPLES, IMPLEMENTATIONS, DISCUSSION

The constants determining the minimax designs of Section 3.1–3.3 have been obtained by employing an unconstrained nonlinear optimizer in matlab. The code is available from the authors.

There is of course a constraint involved—the density must integrate to 1—which is handled as

follows. Within each loop, whenever the constants a, b, . . . in (15) or (20) are returned we integrate the resulting function, and then divide all coefficients in its numerator by the value of this

integral, so that a proper density is then passed on to the next iteration. All integrations were

carried out by Simpson’s rule on a 10,000-point grid. We have generally found Simpson’s rule

to be as accurate as, and much quicker than, more sophisticated routines—at least when applied

to quite smooth and cheaply evaluated functions such as are encountered here. See Daemi (2012)

for more details of the computing algorithm.

Example 1 continued To obtain the minimax designs under the A-criterion we carried out

the process described in Section 3.1, obtaining results as illustrated in Figure 1. Plot (a) of this

figure shows the two eigenvalues (λ1 (ξ∗ ), λ2 (ξ∗ ))—these were defined for general ξ in (13a)

and (13b), respectively—as ν varies. As could perhaps have been anticipated, they are equal for

DOI: 10.1002/cjs

The Canadian Journal of Statistics / La revue canadienne de statistique

DAEMI AND WIENS

8

6

l2

4

2

0

Vol. 41, No. 4

8

l1

minmax loss

comparative losses

690

0

0.5

(a)

6

4

2

0

1

3

0

0.5

(b)

1

3

2

m(x)

m(x)

ν = 0.25

1

0

−0.5

0

(c)

0

−0.5

0.5

0

(d)

0.5

3

2

m(x)

m(x)

ν = 0.5

1

3

ν = 0.75

1

0

−0.5

2

2

1

0

(e)

0.5

0

−0.5

ν = 0.998

0

(f)

0.5

Figure 2: E-minimax designs ξ∗ for approximate straight line regression. (a) Losses (l1 (ξ∗ ), l2 (ξ∗ ))

versus ν; (b) Loss l(ξ∗ ) versus ν; (c)–(f) minimax densities m∗ (x) and 10-point implementations for

selected values of ν. [Colour figure can be seen in the online version of this article, available at

http://wileyonlinelibrary.com/journal/cjs]

ν > νA = 0.692. The loss l(ξ∗ ) is shown in plot (b). At ν = 0 this is that of the classically Aoptimal design, with symmetric point masses at x = ±1/2; at ν = 1 it is determined entirely by

the bias of the continuous uniform measure, which vanishes by virtue of (3). In plots (c)–(f) the

minimax densities m∗ (x) = m(x; b∗ , c∗ , d∗ ) are illustrated for selected values of ν, along with

10-point implementations as at (6).

See Figure 2 for analogous plots of the minimax designs under the E-criterion, in which the

losses (l1 (ξ∗ ), l2 (ξ∗ )) are equal for ν > νE = 0.997. We note that here and in the other optimizations carried out for this article the surface over which one seeks a minimum is quite flat—see

Figure 3 for an illustration—and so it is important to choose starting values carefully and to

then increment ν slowly. The choice of starting values can be facilitated by a knowledge of the

limiting behaviour of the solutions. As ν → 0 the solution tends to the classically optimal design minimizing variance alone; in the limit as ν → 1 the optimal density is uniform. Thus, in

Example 1 we started near ν = 0 with b = −0.24, d = 0 and c the normalizing constant, so that

m(x) = (1 − 0.24/x2 )+ /c, approximating point masses at ±0.5.

Remarks: Both in classical design theory concentrating solely on the variances of the estimates

or predictions, and in our extension of this theory to consideration of bias, the designs constructed

according to the D- and I-criteria are invariant under transformation of the design space, whereas

those under the A- and E-criteria are not. While we have presented our results here for the space

χ = [−1/2, 1/2] only, the methods extend, with minor modifications (resulting entirely from the

The Canadian Journal of Statistics / La revue canadienne de statistique

DOI: 10.1002/cjs

2013

CONSTRUCTION OF ROBUST DESIGNS

691

b* = −0.030099

5.5

5

4.5

2

1.5

−13

1

0.5

x 10

d

0

0

0.4

0.2

0.6

0.8

1

c

c = 0.42644

*

8

6

4

2

−13

1.5

1

0.5

x 10

d

0

−0.08

−0.06

−0.04

−0.02

0

b

d = 8.1218e−014

*

8

6

4

1

0.5

c

0

−0.08

−0.06

−0.04

−0.02

0

b

Figure 3: Loss of E-minimax design ξ∗ in a neighbourhood of two of (b∗ , c∗ , d∗ ) with the third

held fixed; ν = 0.5. [Colour figure can be seen in the online version of this article, available at

http://wileyonlinelibrary.com/journal/cjs]

change in the elements of A), to more general symmetric intervals. Here we discuss the necessary

changes, and the resulting qualitative behaviour of the designs. For the space χ = [−T, T ], (8a)

and (8c) become

1

1 κ2

3

+ ν max κ0 −

lA (ξ) = (1 − ν) 1 +

, 2−

,

μ2

2T μ2

2T 3

1

κ2

3

(1 − ν)

lE (ξ) = max (1 − ν) + ν κ0 −

,

+ν

−

,

2T

μ2

2T 3

μ22

DOI: 10.1002/cjs

The Canadian Journal of Statistics / La revue canadienne de statistique

692

DAEMI AND WIENS

Vol. 41, No. 4

Table 1: Ten-point implementations of robust A- and E-optimal designs for selected values of ν and T .

ν = 0.25

T = 0.05

T = 0.5

ν = 0.5

T =5

T = 0.05

T = 0.5

T =5

A-criterion

±0.0467

±0.483

±4.79

±0.0461

±0.476

±4.70

±0.0401

±0.448

±4.30

±0.0383

±0.426

±4.01

±0.0333

±0.410

±3.71

±0.0304

±0.373

±3.18

±0.0262

±0.367

±2.89

±0.0223

±0.316

±2.13

±0.0180

±0.313

±1.47

±0.0135

±0.246

±0.772

±0.0467

±0.483

±4.5

±0.0461

±0.476

±4.5

±0.0401

±0.448

±3.5

±0.0383

±0.426

±3.5

±0.0333

±0.410

±2.5

±0.0304

±0.373

±2.5

±0.0262

±0.367

±1.5

±0.0223

±0.316

±1.5

±0.0180

±0.313

±0.5

±0.0135

±0.246

±0.5

E-criterion

respectively, and (13a) and (13b) become

T 1 2

1

m(x) −

=

κ0 −

dx,

2T

2T

−T

T

3

3 2

κ2

2 m(x)

−

=

x

−

dx.

2T 3

μ2

2T 3

μ22

−T

For the robust A-optimal designs, as T decreases the interval on which λ2 (ξ∗ ) > λ1 (ξ∗ )

expands to encompass all ν ∈ [0, 1]. As T increases that interval shrinks, and eventually

λ1 (ξ∗ ) > λ2 (ξ∗ ) for all ν. In each such case the pure strategy would succeed; we compute that

the corresponding ranges are T < TL ≈ 0.002 and T > TU ≈ 1.52. The robust E-optimal designs

behave in the same way, with TL ≈ 0.460 and TU ≈ 1.74—note however that the inequality (18)

and its consequences need no longer hold if T > 1.

See Table 1, where we have detailed some 10-point implementations. Those for T = 0.5 are as

shown in Figures 1 and 2, plots (c) and (d). For ν near 1 all of the designs are essentially uniform—

as is the E-optimal design shown in Table 1 for ν = 0.5 and T = 5. From these figures it appears

that the relationship between the designs and the scaling of the design space is rather subtle, and

that an experimenter who implemented the designs by merely applying the same scaling to the

design points as to the design space could be seriously misled.

Example 2 continued In Figure 4, we present representative results for the robust I-minimax

designs for approximate quadratic regression. Recall that for this case the pure strategy fails

for all ν ∈ (0, 1); that this should be so is now clear from plot (a), which reveals that the two

eigenvalues (ρ1 (ξ∗ ), ρ2 (ξ∗ )) are in fact equal. The loss l(ξ∗ ) in plot (b) varies from that of the

classically I-optimal design when ν = 0 to that of the uniform design when ν = 1; in the latter

−1

case A = M ξ∗ = H ξ∗ = Kξ∗ and so both AM −1

ξ∗ and K ξ∗ H ξ∗ in (7d) are 3 × 3 identity matrices

and l(ξ∗ ) = 1.

In Table 2, we give the values of the constants a, b, c, d, e defining the densities m∗ (x), for the

four values of ν in plots (c), (d), (e), and (f) of Figure 4. These values of ν were also used by Shi, Ye,

The Canadian Journal of Statistics / La revue canadienne de statistique

DOI: 10.1002/cjs

CONSTRUCTION OF ROBUST DESIGNS

10

2

0

0.5

(a)

10

m(x)

minmax loss

ρ

5

0

693

5

ρ1

4

3

2

1

0

1

ν = 0.01

m(x)

comparative eigenvalues

2013

5

0

0.5

(b)

4

1

ν = 0.09

2

0

−0.5

0

(c)

0

−0.5

0.5

0

(d)

0.5

2

1.5

ν = 0.5

m(x)

m(x)

2

1

ν = 0.91

1

0.5

0

−0.5

0

(e)

0.5

0

−0.5

0

(f)

0.5

Figure 4: I-minimax designs ξ∗ for approximate quadratic regression. (a) Eigenvalues (ρ1 (ξ∗ ), ρ2 (ξ∗ ))

versus ν; (b) Loss l(ξ∗ ) versus ν; (c)–(f) minimax densities m∗ (x) and 10-point implementations for

selected values of ν. [Colour figure can be seen in the online version of this article, available at

http://wileyonlinelibrary.com/journal/cjs]

& Zhou (2003), and our plots correspond to their Figure 2, plots (d), (c), (b), and (a), respectively.

They used the parameterization m(x) = {(a + bx2 + cx4 )/(1 + dx2 + ex4 )}+ ; we found (20) to be

more stable numerically. We give their constants, translated to the parameterization (20), as well

as our own in Table 2. It is presumably a result of the extreme flatness of the surface being searched

that the densities are so similar—and result in the almost the same values of the loss—despite the

quite startling differences in the values of the constants.

Table 2: Constants defining the robust I-minimax densities (20) for selected values of ν

ν

a

b

c

d

0.01 0.056 (0.721) −72.120 (−180.946) 341.400 (836.120) 0.004 (0.124)

0.09 0.046 (0.278)

−7.545 (−12.627)

0.5

−0.054 (−0.177)

0.012 (0.022)

0.91 0.074 (5.879)

0.872 (6.005)

0.99 0.191 (28.302)

0.962 (5.784)

51.000 (75.438) 0.009 (0.118)

8.792 (9.621)

112.500 (5.971)

0.007 (0.015)

0.082 (−6.472)

44.590 (28.383) 0.194 (28.662)

e

l(ξ∗ )

0.209 (3.447)

2.222 (2.225)

0.601 (0.176)

2.294 (2.306)

0.503 (0.582)

1.880 (1.881)

9.115 (−5.187) 1.174 (1.176)

43.380 (31.072)

1.020 (1.020)

Corresponding values of Shi, Ye, & Zhou (2003) in parentheses.

DOI: 10.1002/cjs

The Canadian Journal of Statistics / La revue canadienne de statistique

694

DAEMI AND WIENS

Vol. 41, No. 4

The implementations shown in plots (c)–(f) illustrate an enduring theme in robustness of

design—that a rough guide in constructing such a design is that one should take those point

masses of the classically optimal design, resulting in many replicates but only a small number of

design points, and spread these replicates out into clusters of distinct points in approximately the

same locations as the classically optimal design points.

APPENDIX

Proof of Theorem 1. Let ξ∗ = ξj∗ satisfy the conditions of the theorem and let ξ be any other

design. Suppose that ξ ∈ j . Then by the definition of j , followed by the definition of ξj as

the minimizer of lj in this class, we have

max {lj (ξ)} = lj (ξ) ≥ lj (ξj ).

(A.1)

lj (ξj ) ≥ min {lj (ξj )} = lj∗ (ξj∗ ),

(A.2)

1≤j≤J

This continues as

1≤j≤J

by the definition of j ∗ , and then, since ξj∗ ∈ j∗ , as

lj∗ (ξj∗ ) = max {lj (ξj∗ )}.

1≤j≤J

(A.3)

Linking (A.1)–(A.3) completes the proof that max1≤j≤J {lj (ξ)} ≥ max1≤j≤J {lj (ξj∗ )}, that is, that

䊏

l(ξ) ≥ l(ξ∗ ) for any ξ ∈ .

ACKNOWLEDGEMENTS

This work has been supported by the Natural Sciences and Research Council of Canada. The

presentation has benefited greatly from the incisive comments of two anonymous referees.

BIBLIOGRAPHY

Box, G. E. P. & Draper, N. R. (1959). A basis for the selection of a response surface design. Journal of the

American Statistical Association, 54, 622–654.

Clarke, F. H. (1983). Optimization and Nonsmooth Analysis, Wiley, New York.

Daemi, M. (2012). Minimax design for approximate straight line regression. M.Sc. thesis, University of

Alberta, Department of Mathematical and Statistical Sciences.

Fang, K. T. & Wang, Y. (1994). Number-Theoretic Methods in Statistics, Chapman and Hall: London and

New York Huber, North Holland: Amsterdam Marcus and Sacks, Academic.

Heo, G. (1998). Optimal designs for approximately polynomial regression models. Ph.D. thesis, University

of Alberta, Department of Mathematical and Statistical Sciences.

Heo, G., Schmuland, B., & Wiens, D. P. (2001). Restricted minimax robust designs for misspecified regression

models. The Canadian Journal of Statistics, 29, 117–128.

Huber, P. J. (1975). Robustness and designs. In A Survey of Statistical Design and Linear Models, Srivastava,

J. N., editors. North Holland, pp. 287–303.

Li, K. C. & Notz, W. (1982). Robust designs for nearly linear regression. Journal of Statistical Planning and

Inference, 6, 135–151.

Marcus, M. B. & Sacks, J. (1976). Robust designs for regression problems. In Statistical Theory and Related

Topics II, Gupta, S. S. & Moore, D. S., editors. Academic Press, pp. 245–268

Pierre, D. A. (1986). Optimization Theory with Applications, Dover, New York.

The Canadian Journal of Statistics / La revue canadienne de statistique

DOI: 10.1002/cjs

2013

CONSTRUCTION OF ROBUST DESIGNS

695

Pesotchinsky, L. (1982). Optimal robust designs: Linear regression in Rk . The Annals of Statistics, 10,

511–525.

Shi, P., Ye, J. & Zhou, J. (2003). Minimax robust designs for misspecified regression models. The Canadian

Journal of Statistics, 31, 397–414.

Wiens, D. P. (1992). Minimax designs for approximately linear regression. Journal of Statistical Planning

and Inference, 31, 353–371.

Received 24 November 2012

Accepted 31 May 2013

DOI: 10.1002/cjs

The Canadian Journal of Statistics / La revue canadienne de statistique