The Economics of Scientific Knowledge

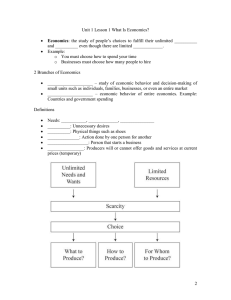
