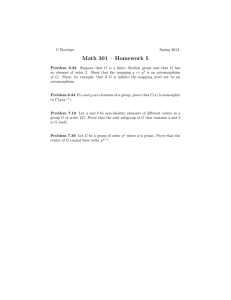

Exercises on chapter 3

1. If M is a left R-module and X ⊆ M , the annihilator of X, AnnR (X), denotes the set of all

elements of R that act as zero on all elements of X, i.e.

AnnR (X) = {a ∈ R | ax = 0 for all x ∈ X}.

(i) Check that AnnR (X) is always a left ideal of R, and that if in fact X is a submodule of

M then AnnR (X) is a two-sided ideal of R.

(ii) If M is a cyclic R-module, say M = Rx for x ∈ M , then M ≅ R/AnnR (x) as R-modules.

(iii) Prove that every non-zero cyclic module M = Rx has a maximal submodule (a proper

submodule that is maximal amongst all proper submodules of M ).

(iv) Prove that every finitely generated left R-module M has a maximal submodule. (Hint:

Let x1 , . . . , xn be a minimal spanning set for M and let K = Rx2 + · · · + Rxn , noting that x1 ∉ K.)

(v) Prove that an R-module M is irreducible if and only if M ≅ R/I for a maximal left ideal I of R.

(i) You can check this.

(ii) Define a map R → M, r ↦ rx. It is an R-module homomorphism with kernel AnnR (x). Hence by the first isomorphism theorem you get an induced isomorphism of left R-modules R/AnnR (x) ≅ M.

(iii) By (ii), this follows from the fact that every proper left ideal of R is contained in a maximal left ideal: a maximal left ideal containing AnnR (x) corresponds to a maximal submodule of M ≅ R/AnnR (x). We proved almost the same thing in class for two-sided ideals using Zorn’s lemma last term... the same argument works for this.

(iv) As in the hint, we just need to show that M/K has a maximal submodule. Then by the lattice

isomorphism theorem, its pre-image in M is a maximal submodule of M . But that follows by (iii)

since M/K is cyclic, generated by the image of x1 .

(v) Note R/I is irreducible if and only if I is a maximal left ideal of R, by the lattice isomorphism

theorem. This proves one implication easily. Conversely, suppose that M is irreducible. Take

0 ≠ x ∈ M . Then Rx is a non-zero R-submodule of M , hence Rx = M by irreducibility. Hence M ≅ R/I for some left ideal I of R, necessarily maximal as M is irreducible.

2. Let I be a two-sided ideal of a ring R and let M be a left R-module. Explain a natural way

to make M/IM into a left R/I-module.

Define an action of r + I ∈ R/I on M/IM by

(r + I)(m + IM ) = rm + IM.

We need to check this is well-defined. Say r + I = r′ + I and m′ + IM = m + IM , i.e. (r − r′ ) ∈ I and (m − m′ ) ∈ IM . Then

(r′ + I)(m′ + IM ) = r′ m′ + IM.

So we need to see that r′ m′ − rm ∈ IM . But r′ m′ − rm = (r′ − r)m′ + r(m′ − m) and the right hand side belongs to IM . It is clear that this makes M/IM into a left R/I-module.

3. The goal of this question is to prove directly that Z60 ≅ Z4 × Z3 × Z5 ≅ Z4 ⊕ Z3 ⊕ Z5 . Of course

we know this already by the Chinese remainder theorem since products and coproducts are

the same thing in the category of abelian groups...

(i) Find submodules M4 , M3 and M5 of Z60 isomorphic to Z4 , Z3 and Z5 respectively with

Z60 = M4 ⊕ M3 ⊕ M5 .

(ii) For each i = 4, 3, 5, write down the maps pi : Z60 → Zi so that Z60 together with these

maps is the direct product of Z4 , Z3 and Z5 .

(i) Let M4 = Z15, M3 = Z20 and M5 = Z12, the subgroups of Z60 generated by 15, 20 and 12. Note M4 ≅ Z4 , M3 ≅ Z3 and M5 ≅ Z5 . Note M3 + M4 = Z5, the subgroup generated by 5 (because 5 is the GCD of 15 and 20), hence since 5 and 12 are coprime M5 ∩ (M3 + M4 ) = 0. Similarly M3 ∩ (M5 + M4 ) = M4 ∩ (M3 + M5 ) = 0. Hence the sum M4 + M3 + M5 is direct. It is all of Z60 because GCD(15, 20, 12) = 1 so you can write 1 = 15a + 20b + 12c for some a, b, c ∈ Z.

(ii) Define pi by n ↦ n + Zi, i.e. reduce modulo i, for each i = 4, 3, 5. Since Z60 = Z/Z60 it follows by the second isomorphism theorem that for i|60, Z60 /iZ60 ≅ Z/Zi. So this really does the job.

To see that it is the direct product you need to check the universal property. Take maps qi from an

abelian group A to Z4 , Z3 and Z5 . We need to construct a map from A to Z60 ... Well, a ∈ A maps

to the unique element of Z60 that is congruent to qi (a) modulo i for each i = 4, 3, 5...
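The two parts of this solution can be checked by brute force. The following is only a sketch: the helper names `subgroup`, `sumset`, `is_direct` and `lift` are made up for this illustration, and the lift is found by exhaustive search rather than by any formula from the notes.

```python
N = 60

def subgroup(g):
    """Cyclic subgroup of Z_60 generated by g, as a frozenset of residues."""
    return frozenset((g * k) % N for k in range(N))

def sumset(A, B):
    """The sum A + B of two subgroups of Z_60."""
    return frozenset((a + b) % N for a in A for b in B)

M4, M3, M5 = subgroup(15), subgroup(20), subgroup(12)

def is_direct(A, B, C):
    """Each summand meets the sum of the other two only in 0."""
    zero = frozenset({0})
    return (A & sumset(B, C) == zero
            and B & sumset(A, C) == zero
            and C & sumset(A, B) == zero)

def lift(r4, r3, r5):
    """The unique n in Z_60 with n = r4 mod 4, n = r3 mod 3, n = r5 mod 5."""
    target = (r4 % 4, r3 % 3, r5 % 5)
    return next(n for n in range(N) if (n % 4, n % 3, n % 5) == target)

print(len(M4), len(M3), len(M5))   # orders of the three summands
print(is_direct(M4, M3, M5))       # the sum is direct
print(len(sumset(sumset(M4, M3), M5)) == N)  # and it is all of Z_60
```

Here `lift` plays the role of the map A → Z60 in the universal property: composing it with reduction modulo 4, 3 and 5 returns the three residues you started with.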

4. Let {Mi }i∈I be a family of left R-modules and M be another left R-module.

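(i) Prove that

\[ \operatorname{Hom}_R\Big(\bigoplus_{i\in I} M_i,\ M\Big) \cong \prod_{i\in I} \operatorname{Hom}_R(M_i, M). \]

(ii) Prove that

\[ \operatorname{Hom}_R\Big(M,\ \prod_{i\in I} M_i\Big) \cong \prod_{i\in I} \operatorname{Hom}_R(M, M_i). \]

(iii) Is it true that

\[ \operatorname{Hom}_R\Big(M,\ \bigoplus_{i\in I} M_i\Big) \cong \bigoplus_{i\in I} \operatorname{Hom}_R(M, M_i)? \]

How about if M is finitely generated?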

(i) Suppose we are given an element of ∏_{i∈I} HomR (Mi , M ). This means we are given maps fi : Mi → M for each i. Define a map f : ⊕_{i∈I} Mi → M by f (Σ_i mi ) := Σ_i fi (mi ). Note this depends on all but finitely many mi ’s being zero to make sense... In this way we get a map from ∏_{i∈I} HomR (Mi , M ) to HomR (⊕_{i∈I} Mi , M ). It is easy to see that it is actually a homomorphism of abelian groups (i.e. better than just a map of sets).

Conversely given f ∈ HomR (⊕_{i∈I} Mi , M ) define (fi )i∈I ∈ ∏_{i∈I} HomR (Mi , M ) by setting fi (mi ) := f (mi ) for each i ∈ I. This is a two-sided inverse to the map in the first paragraph.

Note here it is NOT true that

\[ \operatorname{Hom}_R\Big(\bigoplus_{i\in I} M_i,\ M\Big) \cong \bigoplus_{i\in I} \operatorname{Hom}_R(M_i, M) \]

if I is infinite. Take the case R is a field F , and let M = Mi = F (the one dimensional vector space) and I = N (or any countably infinite set). The left hand side is then the dual vector space of the countable dimensional vector space ⊕_{i∈I} F , which is of uncountable dimension. The right hand side is just the vector space ⊕_{i∈I} F again, of countable dimension. So they cannot be isomorphic...

(ii) Suppose we are given an element f ∈ HomR (M, ∏_{i∈I} Mi ). Define (fi )i∈I ∈ ∏_{i∈I} HomR (M, Mi ) by letting fi (m) = πi (f (m)), the ith coordinate of f (m). Conversely given (fi )i∈I ∈ ∏_{i∈I} HomR (M, Mi ) define f ∈ HomR (M, ∏_{i∈I} Mi ) by letting f (m) = (fi (m))i∈I .

(iii) This is false in general but I didn’t try to find a counterexample, sorry.... But if M is finitely

generated as an R-module then this is true and you can prove it just like in (ii). Indeed the direct

sums here sit inside the direct products from (ii) as the tuples with all but finitely many entries

being zero. The restriction of the isomorphism from (ii) to these subgroups gives an isomorphism

between the direct sums providing M is finitely generated.

5. Recall that a left R-module M is of finite length if it has a composition series. In that case,

the length l(M ) – the number of non-zero factors appearing in a composition series of M –

is a well-defined invariant of M by the Jordan-Hölder theorem.

(i) Suppose that 0 → K → M → Q → 0 is a short exact sequence. Prove that M is of finite

length if and only if both K and Q are of finite length, in which case l(M ) = l(K) + l(Q).

(ii) Suppose that M is a module of finite length and let N and L be submodules. Prove that

l(N + L) + l(N ∩ L) = l(N ) + l(L).

(iii) Interpret (ii) in terms of dimension if M is a finite dimensional vector space over a field

and N and L are subspaces.

(i) If K and Q are of finite length, take composition series for them both. Then glue them together

using the lattice isomorphism theorem to get a composition series for M . In particular, l(M ) = l(K) + l(Q).

Now suppose that M has finite length. Say

0 = M0 < M1 < · · · < Mn = M

is a composition series. Consider

0 = M0 ∩ K ≤ M1 ∩ K ≤ · · · ≤ Mn ∩ K = K.

I claim that the factors are either simple or zero, hence K has a composition series. Well, by the

second isomorphism theorem,

(Mi ∩ K)/(Mi−1 ∩ K) = (Mi ∩ K)/((Mi ∩ K) ∩ Mi−1 ) ≅ ((Mi ∩ K) + Mi−1 )/Mi−1 .

This is a submodule of Mi /Mi−1 which is simple. So it’s either zero or all of Mi /Mi−1 , which is simple...

In a similar way, look at the series

0 = π(M0 ) ≤ · · · ≤ π(Mn ) = Q

where π : M → Q is the epimorphism from the s.e.s. The factors are zero or simple again. So Q has

a composition series.

(ii) Consider the s.e.s.

0 → N ∩ L → N → N/N ∩ L → 0.

Since M has finite length, so do N and L, so by (i) we have that

l(N/N ∩ L) = l(N ) − l(N ∩ L).

But N/N ∩ L ≅ (N + L)/L. Now look at

0 → L → N + L → (N + L)/L → 0

and apply (i) again to see that

l((N + L)/L) = l(N + L) − l(L).

The right hand sides of our two equations are equal, and rearranging gives the answer.

(iii) dim(N + L) + dim(N ∩ L) = dim N + dim L.

6. True or False?

(i) Let V = W ⊕ X and V = W ⊕ Y be two decompositions of a left R-module V as a direct

sum of submodules. Then X = Y .

(ii) Let R be a commutative ring and I, J be two left ideals in R. If the modules R/I and

R/J are isomorphic then I = J.

(iii) Let R be a ring and I, J be two left ideals in R. If the modules R/I and R/J are

isomorphic then I = J.

(i) False. Take V = Z2 ⊕ Z2 and W = {(0, 0), (1, 1)}, X = {(0, 0), (1, 0)} and Y = {(0, 0), (0, 1)}.

(ii) TRUE!!!! Note that AnnR (R/I) = I, AnnR (R/J) = J and if R/I and R/J are isomorphic they

have the same annihilators in R.

(iii) False. For example take the matrix algebra R = M2 (C). It is a direct sum of two left ideals

I1 ⊕ I2 where I1 consists of matrices with zeros in the second column and I2 consists of matrices

with zeros in the first column. Now R/I1 ≅ I2 and R/I2 ≅ I1 . It remains to observe that I1 and I2 are isomorphic as left R-modules (they are both isomorphic to the module of column vectors C²).
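The counterexample in (i) is small enough to verify by enumeration. A minimal sketch, with the helper names `add` and `is_complement` invented for this illustration:

```python
from itertools import product

# V = Z2 + Z2 as a set of pairs, with componentwise addition mod 2.
V = set(product((0, 1), repeat=2))

def add(u, v):
    return ((u[0] + v[0]) % 2, (u[1] + v[1]) % 2)

def is_complement(W, X):
    """True when V = W + X and W meets X only in zero, i.e. V = W (+) X."""
    spans = {add(w, x) for w in W for x in X} == V
    return spans and (W & X == {(0, 0)})

W = {(0, 0), (1, 1)}
X = {(0, 0), (1, 0)}
Y = {(0, 0), (0, 1)}

print(is_complement(W, X), is_complement(W, Y), X == Y)
```

Both X and Y are complements of W, yet they are different subgroups, which is exactly what (i) claims.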

7. True or False? (i) Every finitely generated module over a field has finite length.

(ii) Every finitely generated module over a PID has finite length.

(i) Of course: finitely generated modules over fields are finite dimensional vector spaces, and they

have finite bases...

(ii) False. Take R = Z and consider the left regular module _R R, which is cyclic, so certainly finitely generated. If it has a composition series, you get it by picking a maximal submodule, then a maximal submodule of that, ... and the process stops in finitely many steps. But every maximal submodule of Z is (p) for a prime p, and as a module that is isomorphic to Z again. So you can go on forever...

8. Prove that every short exact sequence of the form

0 −→ K −→ M −→ _R R −→ 0

is split. Is it true that every short exact sequence of the form

0 −→ _R R −→ M −→ Q −→ 0

is split?

Let π : M → _R R be the epimorphism. We just need to show that it is split. Let m ∈ M be any element with π(m) = 1R . Since _R R is a free R-module on basis 1R , there is a unique R-module homomorphism τ : _R R → M with τ (1R ) = m. Then π ◦ τ = id as required...

But the second short exact sequence need not be split! Take for example R = Z and the monomorphism i : Z → Z given by multiplication by 2. For this to split, 2Z would have to have a complement

in Z. But that complement would have to be a submodule of Z isomorphic to Z2 , and there can’t

be such a thing since Z is an integral domain...

9. This exercise is known as the five lemma. Let

0 −→ A1 −→ A2 −→ A3 −→ 0

and

0 −→ B1 −→ B2 −→ B3 −→ 0

be short exact sequences of R-modules. Suppose there are vertical maps γi : Ai → Bi for

each i = 1, 2, 3 so that the resulting diagram commutes. Prove that:

(i) γ1 , γ3 monomorphisms ⇒ γ2 is a monomorphism;

(ii) γ1 , γ3 epimorphisms ⇒ γ2 is an epimorphism;

(iii) γ1 , γ3 isomorphisms ⇒ γ2 is an isomorphism.

This is an example of “diagram chasing” which is usually not hard but often tedious. I’ll just do (i).

Hopefully my notation for the maps is obvious.

Take a2 ∈ A2 such that γ2 (a2 ) = 0. We need to show that a2 = 0. Well, γ3 (πA (a2 )) = πB (γ2 (a2 )) =

0. As γ3 is mono, this implies that πA (a2 ) = 0 already. Hence a2 ∈ ker πA = im iA . Hence

a2 = iA (a1 ) for some a1 ∈ A1 . Now γ2 (iA (a1 )) = iB (γ1 (a1 )) = 0. As iB and γ1 are mono, this

implies that a1 = 0 already. Hence a2 = iA (a1 ) = 0 too.

10. Quite soon in class we will show that if R is a PID then every submodule of a free module

is free. Actually we’ll only prove this in class for finitely generated modules, but it is true in

general. Bearing this in mind, think about the following True or False?

(i) If R is a commutative ring, then every submodule of a free module is free.

(ii) If R is an integral domain, then every submodule of a free module is free.

(iii) If R is commutative and every submodule of a free R-module is free, then R is a PID.

(i) False. For example take R = Z6 and consider the submodule 2Z6 .

(ii) False. For example take R = F [x, y], F a field. Consider the submodule (x, y).

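(iii) True. It’s obvious that R has to be an integral domain (else you get counterexamples like in (i)). Now suppose I is an ideal that is not principal. Any two non-zero elements x, y ∈ I satisfy the relation (y)x + (−x)y = 0, so a basis of I could contain at most one element; since I is not principal it has no such basis, so I is not free (like in (ii)).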

11. In linear algebra, you often take linearly independent sets and extend them to bases, and

you often take spanning sets and cut them down to a basis.

(i) Use Zorn’s lemma to prove that any set X of linearly independent vectors in an R-module

M is contained in a maximal linearly independent set.

(ii) Suppose that D is a division ring. We know already that all D-modules are semisimple,

hence free. Prove that every maximal linearly independent set of vectors in a D-module M

is a basis.

(iii) In general you need to be careful: if M is a free R-module and X is a maximal linearly

independent set of vectors in M , then X need not be a basis. For example, suppose that R is

an integral domain with the property that every maximal linearly independent set of vectors

in a free R-module M is a basis. Prove that R is a field.

(iv) Let M be a module over a division ring D and let X ⊆ M be a subset of vectors that

span M as a D-module. Prove that X contains a basis of M . (Hint: use the fundamental

lemma about semisimple modules.)

(i) Let F be the set of all sets Y of linearly independent vectors in M containing X. Note F is non-empty as X ∈ F . We just need to prove that F has a maximal element. This follows by Zorn’s lemma if we show every chain (Yi )i∈I in F has an upper bound in F . Well just take Y = ∪_{i∈I} Yi . It’s an upper bound, but why is it in F ? Well if not, there is a dependency r1 y1 + · · · + rk yk = 0 for some distinct y1 , . . . , yk ∈ Y and r1 , . . . , rk ≠ 0. But then, since the Yi form a chain, you can find i ∈ I such that y1 , . . . , yk all already lie in Yi . But that contradicts the linear independence of the set Yi .

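(ii) Let X be a maximal linearly independent set of vectors in M . Take m ∈ M not in X. Since X is maximal linearly independent, X ∪ {m} is linearly dependent. In any non-trivial dependency the coefficient of m is non-zero (as X is linearly independent), and since D is a division ring we can divide by it. Hence we can write m as a linear combination of elements of X. Hence X spans M , so it’s a basis.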

(iii) Take M = _R R. Take 0 ≠ x ∈ R. Then {x} is a linearly independent set of vectors in M , since R is an integral domain. I claim it’s maximal. Well, if not you can find another 0 ≠ y ∈ R such that x, y is still linearly independent. But that’s a contradiction: (y)x + (−x)y = 0 is a non-trivial linear relation between x and y. Hence by assumption x is a basis for R. So we can write 1 = yx for some y ∈ R. Hence x is a unit, and R is a field.

(iv) Note M = Σ_{0≠x∈X} Dx. Each Dx is simple. Hence by the fundamental lemma on semisimple modules, there exists Y ⊆ X such that M = ⊕_{y∈Y} Dy. That is, Y is a basis.

12. Let R and S be rings, let M be a left R-module and let B be an R, S-bimodule.

(i) Prove that HomR (B, M ), the abelian group of all left R-module homomorphisms from B

to M , can be made into a left S-module by defining the action of s ∈ S on f ∈ HomR (B, M )

by letting sf be the unique element of HomR (B, M ) with (sf )(b) := f (bs) for all b ∈ B.

(ii) Prove that HomR (M, B), the abelian group of all left R-module homomorphisms from M

to B, can be made into a right S-module by defining the action of s ∈ S on f ∈ HomR (M, B)

by letting f s be the unique element of HomR (M, B) with (f s)(m) = f (m)s for all m ∈ M .

Just check some axioms until you are happy. The important thing to remember: right S-module

structure on the FIRST ARGUMENT of hom leads to a left S-module structure on the hom space.

Right S-module structure on the SECOND ARGUMENT of hom leads to a right S-module structure

on the hom space.

13. Let R be a ring and M be a left R-module. By 12(i), the abelian group HomR (R, M ) has the

structure of a left R-module. By 12(ii), the abelian group HomR (M, R) has the structure of

a right R-module.

(i) Taking M = R, these constructions make the space HomR (R, R) of all left R-module

homomorphisms from R to R both into a left R-module and a right R-module. Verify that

the left and right actions commute, so that it is actually an (R, R)-bimodule.

(ii) Prove using the map f ↦ f (1R ) that HomR (R, R) is isomorphic to R as an (R, R)-bimodule.

(iii) Of course EndR (R) = HomR (R, R) is actually a ring with multiplication f g := f ◦ g

(composition). Is the map f ↦ f (1R ) a ring isomorphism between EndR (R) and R? Be

careful...

(i) Well we have to check that

(rf )s = r(f s).

Evaluate on some t ∈ R. Then ((rf )s)(t) = (rf )(t)s = f (tr)s and (r(f s))(t) = (f s)(tr) = f (tr)s.

Done.

(ii) Certainly f ↦ f (1R ) is an isomorphism of abelian groups between HomR (R, R) and R. So

consider the image of rf s. We want it to be rf (1R )s for our map to be a bimodule homomorphism.

Well like in (i), (rf s)(1R ) = f (1R r)s = f (r)s = f (r1R )s = rf (1R )s. Phew.

(iii) NO. This is a special case of the result in class when we computed the endomorphism algebra of

a free left R-module of rank n. In case n = 1, we showed that EndR (R)^op ≅ R, and the isomorphism

is exactly evaluation at 1. But if R is not commutative, then evaluation at 1 is NOT an isomorphism

between EndR (R) and R without the op...

14. Let D be a division ring and let R be the ring of all 2 × 2 upper triangular matrices over D.

Let e1 and e2 be the diagonal matrix units diag(1, 0) and diag(0, 1).

(i) Show that e1 and e2 are orthogonal idempotents with e1 + e2 = 1, so that _R R = Re1 ⊕ Re2 .

(ii) Show that Re1 is simple but Re2 is not.

(iii) Show that EndR (Re1 )^op ≅ EndR (Re2 )^op ≅ D as rings. Deduce in particular that Re1 and Re2 are indecomposable R-modules, and the idempotents e1 and e2 are primitive.

(ii) Re1 is just the module of column vectors of height 2 with top entry anything from D and bottom entry zero. You can get from any one non-zero such vector to any other by multiplying by D, i.e. by multiplying by the element of R with a scalar in its 11-entry and zeros in all other entries. So Re1 is irreducible.

Re2 is just the module of column vectors of height 2 with arbitrary entries from D. There is a submodule consisting of all the column vectors with 0 as their bottom coordinate. Hence it is reducible.

(iii) Recall that EndR (_R R)^op = R. Hence by lemma from class, EndR (Re1 )^op ≅ e1 Re1 . This is

just the matrices with anything from D as their 11-entry, and all other entries zero. Clearly this is

isomorphic to D.

Similarly, EndR (Re2 )op is e2 Re2 , which is matrices with anything from D as their 22-entry, all other

entries zero.

Since D is for sure a local ring, it follows that Re1 and Re2 are indecomposable, hence e1 and e2 are

primitive idempotents.

15. A ring R is SBN (single basis number) if all finitely generated free left R-modules are isomorphic to _R R. (This is equivalent to all finitely generated free right R-modules being isomorphic to R_R – but the fact that these are equivalent definitions is far from obvious if R is not a commutative ring!)

(i) Show that if R is SBN then so is every quotient ring R/I.

(ii) Let A = ZN , the free abelian group on the set N. Let R = EndZ (A), the ring of all

Z-module endomorphisms of A. Prove that R is SBN.

(i) Let M be a finitely generated free left (R/I)-module. Pick a basis x1 , . . . , xn for M , so M = ⊕_{i=1}^{n} (R/I)xi and each (R/I)xi ≅ R/I. View M now as an R-module. Let P = ⊕_{i=1}^{n} Rxi be the free R-module on basis x1 , . . . , xn . Define an R-module homomorphism P ↠ M mapping xi to xi for each i = 1, . . . , n. Note the kernel of this is IP . So, M ≅ P/IP . Now R is SBN, so P ≅ _R R. Hence M ≅ P/IP ≅ R/I. Hence R/I is SBN.

(ii) Note A is a left R-module by definition. For i ∈ N, let ei be the projection of A onto its ith component. Note ei ∈ R is an idempotent and Rei ≅ A as a left R-module. (The isomorphism here maps r ∈ Rei to r1i where 1i is the identity in the ith slot in the direct sum A.) So

\[ R = \prod_{i\in\mathbb{N}} Re_i \cong \prod_{i\in\mathbb{N}} A \]

as a left R-module. But this is a direct product of countably many copies of A. And a finite direct sum (aka direct product) of copies of R is still a direct product of countably many copies of A. Hence they’re isomorphic.

16. Let A be the abelian group

\[ \mathbb{Z}_{p_1^{r_1}} \oplus \mathbb{Z}_{p_2^{r_2}} \oplus \cdots \oplus \mathbb{Z}_{p_n^{r_n}} \]

where p1 , . . . , pn are distinct primes and r1 , . . . , rn ≥ 1. Compute the abelian groups HomZ (Z, A) and HomZ (A, Z).

Since Z is a free Z-module of rank 1, evaluation at 1 defines an isomorphism between HomZ (Z, A)

and A. Hence the first abelian group is just A again.

For the second one, p1^r1 kills the first summand of A, so it kills the image of that summand in Z, and the only element of Z killed by p1^r1 is zero; likewise for the other summands. So the only map here is the zero map, and this is the zero module.

17. In class, we proved (using Fitting’s lemma) that if M is an indecomposable module of finite

length, then EndR (M ) is a local ring, i.e. the sum of two non-units is a non-unit. Instead

prove Schur’s lemma: if M is an irreducible module then EndR (M ) is a division ring, i.e. 0

is the only non-unit.

Let f : M → M be a non-zero R-module endomorphism with M simple. The kernel of f is not all of

M , but it is an R-submodule of M , hence it is zero as M is irreducible. Therefore f is injective. The

image of f is not zero, but it is an R-submodule of M , hence it is M as M is irreducible. Therefore

f is surjective. Therefore f is invertible.

18. Let e, f ∈ R be idempotents, and consider the summands Re and Rf of R R.

(i) Prove that Re = Rf if and only if f = e + (1 − e)ae for some a ∈ R. (Hint: (⇒) If f = ae

and e = bf then ef e = e and 1f 1 = f where 1 = e + (1 − e).)

(ii) Prove that Re ≅ Rf if and only if there exist a, b ∈ R with ab = e and ba = f .

(iii) Deduce that Re ≅ Rf as left R-modules if and only if eR ≅ f R as right R-modules.

(i) (⇒). If Re = Rf then f = ae and e = bf for some a, b ∈ R. Hence ef = bf f = bf = e and

f (1 − e) = ae(1 − e) = 0. So f = ((1 − e) + e)f ((1 − e) + e) = ef e + (1 − e)f e = e + (1 − e)f e.

(⇐). If f = e + (1 − e)ae then f ∈ Re so Rf ⊆ Re. And ef = e² = e so e ∈ Rf so Re ⊆ Rf .

(ii) Note that every element of HomR (Re, Rf ) is gotten just by right multiplication by some element

of eRf . Indeed, right multiplication by such elements certainly gives R-module maps from Re to Rf .

Conversely, given an R-module map from Re to Rf , compose with the projection e from R to Re at

the beginning and the inclusion of Rf into R at the end and you get an R-module homomorphism

from R to R, i.e. an element of EndR (R)op = R which actually lies in eRf .

Hence an isomorphism Re → Rf is given by right multiplication by some a ∈ eRf and the inverse

is given by right multiplication by some b ∈ f Re. Then right multiplication by ab gives the identity

map from Re to Re, i.e. ab = e, and similarly ba = f .

(iii) Clear as the statement in (ii) is left-right symmetric.

19. Suppose that R = Mn (F ) for F a field and n > 1.

(i) Find a decomposition 1R = e1 +· · ·+em of 1 as a sum of primitive orthogonal idempotents,

and describe the left R-modules Rei and the right R-modules ei R.

(ii) Find another such decomposition.

(iii) Prove that R is a simple ring, i.e. it has no non-trivial two-sided ideals.

(i) Let ei be the matrix with 1F in its ii entry and all other entries zero. These are orthogonal

idempotents summing to 1. To see that they are primitive, just note that Rei is the left R-module

of column vectors, which is irreducible as you can get any non-zero column vector to any other by

multiplying by some matrix. Hence Rei is indecomposable. Hence ei is a primitive idempotent by

result from class.

(ii) Take any unit u ∈ R× , i.e. an invertible matrix. Then the conjugates u ei u⁻¹ also give such a decomposition.

(iii) Let I be a non-zero two-sided ideal. Take a non-zero matrix m ∈ I. Say the ij-entry of m is

not zero. Then ei mej is the matrix equal to this entry in the ij-place and all other entries are zero.

Hence I contains the ij-matrix unit ei,j . Now left multiplying you get all other matrix units in the

jth column, and right multiplying you get all other matrix units in the ith row. Hence we’ve got all

matrix units. Adding we get any matrix. So I = R.

20. Let A = (ai,j ) be an n × n matrix with integer entries and determinant d ≠ 0.

(i) Let X be the free abelian group on basis x1 , . . . , xn and let Y be the subgroup generated by y1 , . . . , yn where

\[ y_j = \sum_{i=1}^{n} a_{i,j}\, x_i . \]

Prove that X/Y is a finite abelian group of order |d|. How would you compute the isomorphism type of this group explicitly?

(ii) Compute the isomorphism type of the group X/Y explicitly for the following matrices:

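\[
\begin{pmatrix} 2 & -1 \\ -1 & 2 \end{pmatrix}, \qquad
\begin{pmatrix} 2 & -1 & 0 \\ -1 & 2 & -1 \\ 0 & -1 & 2 \end{pmatrix}, \qquad
\begin{pmatrix} 2 & -1 & 0 & 0 \\ -1 & 2 & -1 & 0 \\ 0 & -1 & 2 & -1 \\ 0 & 0 & -1 & 2 \end{pmatrix}.
\]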

What about for the n × n matrix following the same pattern?

(iii) Do the same for the matrix

\[
\begin{pmatrix} 2 & -1 & 0 & 0 \\ -1 & 2 & -1 & -1 \\ 0 & -1 & 2 & 0 \\ 0 & -1 & 0 & 2 \end{pmatrix}.
\]

(i) Say A is equivalent to diag(d1 , . . . , dn ), where d1 , . . . , dn are the invariant factors of A over Z, and assume all di ≥ 0 by rescaling by −1 if necessary. Note d1 · · · dn = ±d ≠ 0, since the determinant of an invertible matrix over Z is ±1 (the only units in Z). So all di are non-zero. Then we can pick a basis v1 , . . . , vn for X so that d1 v1 , . . . , dn vn is a basis for Y . But then it’s obvious that X/Y ≅ Zd1 ⊕ · · · ⊕ Zdn . In particular its order is d1 · · · dn = |d|.

(ii) The determinant of the n × n matrix following this pattern is (n + 1), and its invariant factors are (1, · · · , 1, n + 1). This is an exercise in determinants... Hence the group X/Y is Z_{n+1} .

(iii) Using the Ji trick, you see that it has invariant factors (1, 1, 2, 2) and X/Y ≅ Z2 ⊕ Z2 .
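One way to check these invariant factors by machine is through determinantal divisors: if g_k is the GCD of all k × k minors of A, then the kth invariant factor is g_k / g_{k−1}. This is a standard fact but not the method used in these notes, so treat the following as an independent sanity check. The names `det`, `invariant_factors`, `cartan_a` and `D4` are invented for this sketch, and it assumes the determinant is non-zero.

```python
from itertools import combinations
from math import gcd

def det(m):
    """Integer determinant by cofactor expansion (fine for small matrices)."""
    if len(m) == 1:
        return m[0][0]
    total = 0
    for j, entry in enumerate(m[0]):
        if entry:
            minor = [row[:j] + row[j + 1:] for row in m[1:]]
            total += (-1) ** j * entry * det(minor)
    return total

def invariant_factors(a):
    """Invariant factors of a square integer matrix with non-zero determinant."""
    n = len(a)
    factors, prev = [], 1
    for k in range(1, n + 1):
        g = 0
        for rows in combinations(range(n), k):
            for cols in combinations(range(n), k):
                g = gcd(g, det([[a[r][c] for c in cols] for r in rows]))
        factors.append(g // prev)  # kth invariant factor = g_k / g_(k-1)
        prev = g
    return factors

def cartan_a(n):
    """The n x n matrix of part (ii): 2 on the diagonal, -1 next to it."""
    return [[2 if i == j else -1 if abs(i - j) == 1 else 0 for j in range(n)]
            for i in range(n)]

D4 = [[2, -1, 0, 0], [-1, 2, -1, -1], [0, -1, 2, 0], [0, -1, 0, 2]]

for n in (2, 3, 4):
    print(n, invariant_factors(cartan_a(n)))
print(invariant_factors(D4))
```

The number of minors grows quickly with n, so this is only practical for the small matrices in the exercise.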

21. Let R = C[[x]], the ring of formal power series over C (it is a PID). Consider the submodule

W of the free module V = Rv1 ⊕ Rv2 generated by

(1 − x)⁻¹ v1 + (1 − x²)⁻¹ v2

and

(1 + x)⁻¹ v1 + (1 + x²)⁻¹ v2 .

Find a basis v1′ , v2′ of V and elements d1 |d2 ∈ R such that d1 v1′ and d2 v2′ is a basis for W . Describe V /W .

By the way here is why C[[x]], formal power series in an indeterminate x with coefficients in C, is a PID. Elements of this ring consist of infinite series a0 + a1 x + a2 x² + · · · for a0 , a1 , · · · ∈ C (possibly infinitely many being non-zero). Multiplication is the obvious thing. The polynomial ring C[x] is a subring of C[[x]]. Observe first that any power series whose constant term is not zero is actually a unit. For instance 1 − x is a unit: its inverse is 1 + x + x² + x³ + · · · . If you think for a moment you will see that you can algorithmically work out how to invert any power series with constant term non-zero inductively ... and end up with a power series going on forever. Given this, it is easy to see that any non-zero element of C[[x]] is just a power of x, x^i , times a unit. Hence the ideals in C[[x]] are just (x^i ) for i ≥ 0 together with the zero ideal. So it’s a PID.

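Now for the problem. Subtract (1 + x)⁻¹ times row 1 from row 2 of the matrix

\[ \begin{pmatrix} (1-x)^{-1} & (1+x)^{-1} \\ (1-x^2)^{-1} & (1+x^2)^{-1} \end{pmatrix} \]

to get the matrix

\[ \begin{pmatrix} (1-x)^{-1} & (1+x)^{-1} \\ 0 & (1+x^2)^{-1} - (1+x)^{-2} \end{pmatrix}. \]

Now column 2 minus (1 − x)(1 + x)⁻¹ times column 1 gives

\[ \begin{pmatrix} (1-x)^{-1} & 0 \\ 0 & (1+x^2)^{-1} - (1+x)^{-2} \end{pmatrix}. \]

Thus the invariant factors are d1 = (1 − x)⁻¹ and d2 = (1 + x²)⁻¹ − (1 + x)⁻² (or up to units 1 and x would do). Implementing the row and column operations above as matrix multiplication, you see that

\[
\begin{pmatrix} (1-x)^{-1} & 0 \\ 0 & (1+x^2)^{-1} - (1+x)^{-2} \end{pmatrix}
=
\begin{pmatrix} 1 & 0 \\ -(1+x)^{-1} & 1 \end{pmatrix}
\begin{pmatrix} (1-x)^{-1} & (1+x)^{-1} \\ (1-x^2)^{-1} & (1+x^2)^{-1} \end{pmatrix}
\begin{pmatrix} 1 & -(1-x)(1+x)^{-1} \\ 0 & 1 \end{pmatrix}.
\]

Hence, inverting the row operation,

\[
(v_1', v_2') = (v_1, v_2) \begin{pmatrix} 1 & 0 \\ (1+x)^{-1} & 1 \end{pmatrix} = \big(v_1 + (1+x)^{-1} v_2,\ v_2\big).
\]

Indeed the first generator of W is (1 − x)⁻¹ (v1 + (1 + x)⁻¹ v2 ) = d1 v1′ . Finally,

\[
V/W \cong R/\big((1-x)^{-1}\big) \oplus R/\big((1+x^2)^{-1} - (1+x)^{-2}\big) \cong (0) \oplus R/(x) \cong R/(x) \cong \mathbb{C}.
\]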
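The claim that d2 is x times a unit can be checked on truncated power series. A sketch under stated assumptions: series are stored as lists of the first `N` coefficients (the cutoff `N = 8` is arbitrary), rational coefficients stand in for C, and the helpers `series`, `mul` and `inv` are invented for this illustration. The function `inv` is the inductive inversion procedure described above.

```python
from fractions import Fraction

N = 8  # truncation order: we work modulo x^N

def series(*coeffs):
    """Power series with the given leading coefficients, padded with zeros."""
    return [Fraction(c) for c in coeffs] + [Fraction(0)] * (N - len(coeffs))

def mul(a, b):
    """Product of two truncated power series."""
    c = [Fraction(0)] * N
    for i, ai in enumerate(a):
        for j, bj in enumerate(b):
            if i + j < N:
                c[i + j] += ai * bj
    return c

def inv(a):
    """Inverse of a series with non-zero constant term, built term by term."""
    b = [Fraction(0)] * N
    b[0] = 1 / a[0]
    for k in range(1, N):
        # The coefficient of x^k in a*b must vanish; solve for b[k].
        b[k] = -b[0] * sum(a[i] * b[k - i] for i in range(1, k + 1))
    return b

inv_1px = inv(series(1, 1))        # (1 + x)^-1
inv_1px2 = inv(series(1, 0, 1))    # (1 + x^2)^-1
d2 = [u - v for u, v in zip(inv_1px2, mul(inv_1px, inv_1px))]

print(d2[:4])
```

The constant term of `d2` vanishes and the coefficient of x does not, so d2 generates the ideal (x), in line with V /W ≅ R/(x).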

22. Prove that Q is not a free Z-module (even though it is torsion free).

Suppose it was a free Z-module on basis vi (i ∈ I). Note I cannot be of size 1, because Q is not isomorphic to Z as an abelian group (obviously it’s not cyclic – rational numbers are not all integer multiples of any one fraction!). Let v and v′ be two different elements from the basis. Then v = a/b and v′ = c/d are non-zero rational numbers. But then cbv − adv′ = 0, contradicting linear independence.

23. Let R be a PID and V be an R-module. A submodule W of V is called pure if W ∩ xV = xW

for all x ∈ R. If V is finitely generated, prove that W is a pure submodule of V if and only

if it is a direct summand of V .

(Thanks to J.B. for this solution.)

Suppose that W is a summand of V , say V = W ⊕ U . Take x ∈ R. We need to show that

W ∩ xV ⊆ xW . Well, say we have some v ∈ V so that xv ∈ W . Write v = w + u for w ∈ W, u ∈ U .

Then xv = xw + xu ∈ W , hence xu = xv − xw ∈ W ∩ U = {0}. This shows that xv = xw so it lies

in xW .

Conversely, suppose that W is a pure submodule of V . The quotient V /W is finitely generated so

by structure theorem it is a direct sum of cyclic modules. Say V /W = R(v1 + W ) ⊕ · · · ⊕ R(vn + W )

for v1 , . . . , vn ∈ V .

Suppose that R(vi + W ) is cyclic of order di . If di = 0 set ui = vi . If di ≠ 0, then di vi ∈ W hence

by purity di vi = di wi for some wi ∈ W . Set ui = vi − wi in this case. Note in both cases that

vi + W = ui + W , hence V /W = R(u1 + W ) ⊕ · · · ⊕ R(un + W ) and each R(ui + W ) is also cyclic of

order di . The advantage now is that we actually have di ui = 0 (whereas we only had di vi ∈ W ...).

Finally set U = Ru1 + · · · + Run . We claim that V = W ⊕ U , hence W is a summand of V

as required. Clearly V = W + U because (U + W )/W = V /W . So we just need to check that

W ∩ U = {0}. Take r1 u1 + · · · + rn un ∈ W . Then r1 (u1 + W ) + · · · + rn (un + W ) = 0 in V /W . Hence

as V /W = R(u1 + W ) ⊕ · · · ⊕ R(un + W ), we have that ri (ui + W ) = 0 in V /W for each i. But this

means that di |ri , i.e. ri = di si for some si , hence ri ui = si di ui = 0. Hence r1 u1 + · · · + rn un = 0

which is what we wanted...

24. Let R be a commutative ring. Is it true that the left regular R-module is indecomposable?

What if R is a PID?

No. Take for example the ring Z6 . By CRT, it is isomorphic to Z2 ⊕ Z3 . So it is decomposable.

But if R is an integral domain (in particular if R is a PID), then the left regular R-module is indecomposable. Proof: Suppose R = A ⊕ B is decomposable as a left R-module. Take 0 ≠ a ∈ A and 0 ≠ b ∈ B. Consider ab = ba. It lies in A and in B. Hence it’s zero. This contradicts R being an integral domain.
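For the rings Z_n the decomposition question comes down to idempotents: a splitting of the regular module corresponds to an idempotent e other than 0 and 1, with summands generated by e and 1 − e. A small sketch listing them (the function name `idempotents` is invented here):

```python
def idempotents(n):
    """All e in Z_n with e*e = e."""
    return [e for e in range(n) if (e * e) % n == e]

print(idempotents(6))  # Z6 has idempotents besides 0 and 1
print(idempotents(7))  # Z7 is a field, hence an integral domain
```

In Z6 the idempotents 3 and 4 satisfy 3 + 4 = 1 and 3 · 4 = 0, which is the CRT decomposition Z6 ≅ Z2 ⊕ Z3 from the solution.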

25. (i) How many abelian groups are there of order 5⁶ · 7⁵ up to isomorphism?

(ii) How many isomorphism classes of abelian groups are there of order 108 having exactly 4

subgroups of order 6?

(i) It is the number of partitions of 6 times the number of partitions of 5. For 6 there are 11:

(6), (5, 1), (4, 2), (4, 1, 1), (3, 3), (3, 2, 1), (3, 1, 1, 1), (2, 2, 2), (2, 2, 1, 1), (2, 1, 1, 1, 1), (1, 1, 1, 1, 1, 1). For

5 there are 7: (5), (4, 1), (3, 2), (3, 1, 1), (2, 2, 1), (2, 1, 1, 1), (1, 1, 1, 1, 1). So there are 77. I hope.

(ii) The abelian groups of order 108 are as follows: Z27 ⊕ Z4 , Z27 ⊕ Z2 ⊕ Z2 , Z9 ⊕ Z3 ⊕ Z4 , Z9 ⊕

Z3 ⊕ Z2 ⊕ Z2 , Z3 ⊕ Z3 ⊕ Z3 ⊕ Z4 , Z3 ⊕ Z3 ⊕ Z3 ⊕ Z2 ⊕ Z2 . A subgroup of order 6 is Z3 ⊕ Z2 , and

Z3 , Z2 must lie in the unique Sylow subgroups of order 27 and 4 respectively. Now Z27 has one subgroup of order

3, while Z9 ⊕ Z3 has four and Z3 ⊕ Z3 ⊕ Z3 has 13. Similarly, Z4 has one subgroup of order 2 and

Z2 ⊕ Z2 has three. So the only possibility is Z9 ⊕ Z3 ⊕ Z4 .
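Both counts can be confirmed by enumeration. This sketch assumes Python 3.9 or later for `math.lcm`, and the function names `partitions` and `subgroups_of_order_6` are invented for the illustration. It uses the fact that every subgroup of order 6 of an abelian group is cyclic, so counting elements of order 6 and dividing by φ(6) = 2 counts the subgroups.

```python
from itertools import product
from math import gcd, lcm

def partitions(n, largest=None):
    """Yield the partitions of n as non-increasing tuples."""
    if largest is None:
        largest = n
    if n == 0:
        yield ()
        return
    for first in range(min(n, largest), 0, -1):
        for rest in partitions(n - first, first):
            yield (first,) + rest

def subgroups_of_order_6(moduli):
    """Number of subgroups of order 6 in Z_m1 + ... + Z_mk."""
    generators = 0
    for g in product(*(range(m) for m in moduli)):
        # The order of x in Z_m is m / gcd(m, x); take the lcm over coordinates.
        order = lcm(*(m // gcd(m, x) for m, x in zip(moduli, g)))
        if order == 6:
            generators += 1
    return generators // 2  # each cyclic group of order 6 has 2 generators

groups_of_order_108 = [(27, 4), (27, 2, 2), (9, 3, 4), (9, 3, 2, 2),
                       (3, 3, 3, 4), (3, 3, 3, 2, 2)]

print(len(list(partitions(6))) * len(list(partitions(5))))
print([g for g in groups_of_order_108 if subgroups_of_order_6(g) == 4])
```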

26. True or False?

(i) An n × n nilpotent matrix with entries in a field has characteristic polynomial x^n .

(ii) There are exactly 3 similarity classes of 4 × 4 matrices A over the field F2 satisfying A² = 1.

(iii) The number of similarity classes of n × n nilpotent matrices over a field F is independent of the particular field F chosen.

(iv) The matrices

\[
\begin{pmatrix} 0 & 1 & 0 \\ 1 & 3 & 1 \\ 2 & 2 & -1 \\ 1 & 1 & 1 \end{pmatrix}
\quad\text{and}\quad
\begin{pmatrix} 0 & 1 & 0 \\ 0 & 0 & -6 \\ 1 & 0 & 1 \\ 0 & 1 & 4 \end{pmatrix}
\]

are similar over Q.

(i),(iii) Both true. The minimal polynomial of a nilpotent matrix divides x^n so all roots of it are zero, so all its eigenvalues are zero, so up to similarity it is just a bunch of Jordan blocks J_{d_1}(0), . . . , J_{d_k}(0) down the diagonal with d_1 ≥ · · · ≥ d_k > 0 and d_1 + · · · + d_k = n. Obviously the characteristic polynomial is x^n. And the number of similarity classes is equal to the number of partitions of n, independent of the field F.

(ii) True. The minimal polynomial divides x^2 − 1 = (x − 1)^2 (we are in characteristic 2). So it is just J1(1) and J2(1)'s down the diagonal in JNF. Now 4 = 1 + 1 + 1 + 1 = 2 + 1 + 1 = 2 + 2 (how to put the Jordan blocks down the diagonal). So there are 3 similarity classes.

(iv) Well, this was a typo, I meant to have 4 by 4 matrices here! But as it is, it's a very easy question. The answer is false I guess: it doesn't even make sense to say that non-square matrices are similar at all.

27. How many similarity classes are there of 2 × 2 matrices over F2 ?

Possible characteristic polynomials: x^2, x^2 + 1 = (x + 1)^2, x^2 + x = x(x + 1), x^2 + x + 1. The first three give classes diag(0, 0), J2(0), diag(1, 1), J2(1), diag(0, 1). The final one is an irreducible polynomial, with rational normal form

$$\begin{pmatrix} 0 & 1 \\ 1 & 1 \end{pmatrix}.$$

So I make it 6.
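There are only 16 matrices here, so the count can be confirmed by listing conjugacy orbits directly. A sketch, with a 2 × 2 matrix [[p, q], [r, s]] over F2 encoded as the tuple (p, q, r, s) (an encoding chosen here for brevity):

```python
from itertools import product

def mul(a, b):
    """Multiply two 2x2 matrices over F_2, each given as (p, q, r, s)."""
    p, q, r, s = a
    t, u, v, w = b
    return ((p*t + q*v) % 2, (p*u + q*w) % 2, (r*t + s*v) % 2, (r*u + s*w) % 2)

matrices = list(product((0, 1), repeat=4))
identity = (1, 0, 0, 1)
# Over F_2 a matrix is invertible exactly when its determinant is 1.
invertible = [g for g in matrices if (g[0]*g[3] - g[1]*g[2]) % 2 == 1]
inverse = {g: h for g in invertible for h in invertible if mul(g, h) == identity}

# The similarity class of a is the set of all g a g^{-1}.
classes = {frozenset(mul(mul(g, a), inverse[g]) for g in invertible) for a in matrices}
print(len(invertible), len(classes))        # 6 6
print(sorted(len(c) for c in classes))      # [1, 1, 2, 3, 3, 6]
```

The class sizes add up to 16, as they should.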

Note I often use Jordan normal form even if the field is not algebraically closed. PROVIDING all the roots of the characteristic polynomial lie in the field F, it makes sense to work with JNF. It's just that there are some characteristic polynomials which don't have roots lying in F, and for such ones you have to resort to the rational normal form.

28. Let V be a 7 dimensional vector space over Q.

(i) How many similarity classes of linear transformations on V are there with characteristic polynomial (x − 1)^4 (x − 2)^3?

(ii) Of the similarity classes you listed in (i), how many have minimal polynomial (x − 1)^2 (x − 2)^2?

(iii) Let φ be a linear transformation from V to V having characteristic polynomial (x − 1)^4 (x − 2)^3 and minimal polynomial (x − 1)^2 (x − 2)^2. Find dim ker (φ − 2 id).

(i) Partitions of 4: 4, 3 + 1, 2 + 2, 2 + 1 + 1, 1 + 1 + 1 + 1, 5 in total. Partitions of 3: 3, 2 + 1, 1 + 1 + 1,

3 in total. So there are 15 similarity classes of such linear transformations.

(ii) Need biggest Jordan blocks of size 2. So just partitions 2 + 2 or 2 + 1 + 1 for eigenvalue 1 and

2 + 1 for eigenvalue 2, giving just 2 classes in total.

(iii) We need to compute the dimension of the 2-eigenspace. There are exactly two Jordan blocks of

eigenvalue 2. So this dimension is 2.
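The bookkeeping in (i)–(iii) is just enumeration of partitions, which the following sketch repeats mechanically. The convention assumed here is that a partition records the Jordan block sizes for one eigenvalue, so its largest part is the exponent in the minimal polynomial and its number of parts is the dimension of the eigenspace.

```python
def partitions(n, largest=None):
    """Yield the partitions of n as non-increasing tuples with parts <= largest."""
    if largest is None:
        largest = n
    if n == 0:
        yield ()
        return
    for first in range(min(n, largest), 0, -1):
        for rest in partitions(n - first, first):
            yield (first,) + rest

p4 = list(partitions(4))   # block sizes for eigenvalue 1
p3 = list(partitions(3))   # block sizes for eigenvalue 2
print(len(p4) * len(p3))   # 15

# Minimal polynomial (x-1)^2 (x-2)^2: the largest block is 2 for both eigenvalues.
matching = [(a, b) for a in p4 for b in p3 if a[0] == 2 and b[0] == 2]
print(matching)                       # [((2, 2), (2, 1)), ((2, 1, 1), (2, 1))]
print({len(b) for _, b in matching})  # {2}, the dimension of ker(phi - 2 id)
```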

29. Let V be a finite dimensional vector space over Q and θ : V → V be a linear transformation having characteristic polynomial (x − 2)^4.

(i) Describe the possible Jordan normal forms for θ and for each one give the minimal

polynomial of θ.

(ii) For each of the possibilities in (i) compute the dimension of the 2-eigenspace of θ.

(iii) Assume that θ leaves stable only finitely many subspaces of V . What can be said about

the Jordan normal form of θ?

(i) Note we're looking for a 4 by 4 matrix with all eigenvalues equal to 2: diag(2, 2, 2, 2), diag(J2(2), 2, 2), diag(J2(2), J2(2)), diag(J3(2), 2) and J4(2). Their minimal polynomials are (x − 2), (x − 2)^2, (x − 2)^2, (x − 2)^3 and (x − 2)^4 respectively.

(ii) This is the number of Jordan blocks, so 4, 3, 2, 2, 1 respectively.

(iii) If the 2-eigenspace is more than 1 dimensional, there are infinitely many 1-subspaces of the

2-eigenspace, all of which are θ-stable. So we must have just one Jordan block of eigenvalue 2, i.e.

θ has JNF J4 (2).
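Parts (i) and (ii) can be double-checked by building each Jordan form and computing directly. A sketch: for each list of block sizes it forms N = J − 2I, finds the smallest k with N^k = 0 (the exponent in the minimal polynomial) and the nullity of N (the dimension of the 2-eigenspace). The helper names are invented here, and exact arithmetic over Q is done with `fractions.Fraction`.

```python
from fractions import Fraction

def jordan_nilpotent(blocks):
    """Matrix of J - 2I for J = diag(J_{d1}(2), ..., J_{dk}(2))."""
    n = sum(blocks)
    mat = [[Fraction(0)] * n for _ in range(n)]
    start = 0
    for d in blocks:
        for i in range(start, start + d - 1):
            mat[i][i + 1] = Fraction(1)   # ones on the superdiagonal inside a block
        start += d
    return mat

def matmul(a, b):
    n = len(a)
    return [[sum(a[i][k] * b[k][j] for k in range(n)) for j in range(n)] for i in range(n)]

def rank(mat):
    """Rank by Gaussian elimination over Q."""
    rows = [row[:] for row in mat]
    r = 0
    for col in range(len(rows[0])):
        pivot = next((i for i in range(r, len(rows)) if rows[i][col] != 0), None)
        if pivot is None:
            continue
        rows[r], rows[pivot] = rows[pivot], rows[r]
        for i in range(len(rows)):
            if i != r and rows[i][col] != 0:
                factor = rows[i][col] / rows[r][col]
                rows[i] = [x - factor * y for x, y in zip(rows[i], rows[r])]
        r += 1
    return r

def nilpotency_index(mat):
    """Smallest k >= 1 with mat^k = 0 (assumes mat is nilpotent)."""
    power, k = mat, 1
    while any(x != 0 for row in power for x in row):
        power, k = matmul(power, mat), k + 1
    return k

for blocks in [(1, 1, 1, 1), (2, 1, 1), (2, 2), (3, 1), (4,)]:
    n_mat = jordan_nilpotent(blocks)
    print(blocks, nilpotency_index(n_mat), 4 - rank(n_mat))
```

The printed pairs (exponent, eigenspace dimension) are (1, 4), (2, 3), (2, 2), (3, 2), (4, 1), in agreement with the lists in (i) and (ii).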


30. Let θ : V → V be an endomorphism of a finite dimensional vector space V . Recall for λ ∈ F

that the λ-eigenspace of V is Vλ := ker (θ − λ id), and elements of it are called λ-eigenvectors.

Also θ is called diagonalizable if and only if V has a basis in which the matrix of θ is a

diagonal matrix, in other words, a basis consisting of eigenvectors.

(i) Prove that the sum ∑_{λ∈F} V_λ is direct, i.e. eigenvectors with different eigenvalues are linearly independent.

(ii) Prove that θ is diagonalizable if and only if V = ⊕_{λ∈F} V_λ.

(i) Suppose not. Then there exists a linear relation 0 = v_1 + · · · + v_n with 0 ≠ v_i ∈ V_{λ_i}, distinct λ_1, . . . , λ_n and n > 0. Choose one with n as small as possible. Act with θ to get that 0 = λ_1 v_1 + · · · + λ_n v_n. Subtracting λ_1 times the first equation we get that 0 = (λ_2 − λ_1)v_2 + · · · + (λ_n − λ_1)v_n. Since n ≥ 2 (as v_1 ≠ 0) and each (λ_i − λ_1)v_i is a non-zero element of V_{λ_i}, this is a linear relation of strictly smaller length, contradicting the minimality of n.

(ii) If θ is diagonalizable, then there exists a basis of eigenvectors, hence V is spanned by eigenvectors, hence V = ∑_{λ∈F} V_λ. We already showed in (i) that this sum is direct.

Conversely suppose V = ⊕_{λ∈F} V_λ. Pick a basis for each V_λ and glue together to get a basis for V consisting of eigenvectors. The matrix of θ in this basis is diagonal.

31. Recall from class that θ : V → V is diagonalizable if and only if its minimal polynomial is a

product of distinct linear factors. Actually we only observed this in the case that the ground

field was algebraically closed (since we deduced it from the Jordan normal form). Prove it

now for any ground field.

If θ is diagonalizable, let λ1 , . . . , λn be the distinct eigenvalues. Then the minimal polynomial is

(x − λ1 ) · · · (x − λn ), i.e. a product of distinct linear factors.

Conversely, suppose that the minimal polynomial is a product of distinct linear factors, (x − λ_1) · · · (x − λ_n). View V as an F[x]-module so that x acts as θ. By the primary decomposition, V = V_1 ⊕ · · · ⊕ V_m where V_i is cyclic of prime power order. Say V_i is of order p_i(x)^{m_i} for p_i(x) ∈ F[x] prime. The minimal polynomial acts as zero on V. Hence p_i(x)^{m_i} | (x − λ_1) · · · (x − λ_n) for each i. But this means each m_i = 1 and each p_i(x) is of degree 1, say p_i(x) = x − λ_j. So each V_i ≅ F[x]/(x − λ_j) is one dimensional, spanned by a λ_j-eigenvector of θ. Hence θ is diagonalizable.

32. Let θi (i ∈ I) be a family of endomorphisms of a finite dimensional vector space V . Prove

that they are simultaneously diagonalizable, i.e. there exists a basis for V with respect to

which the matrices of all of the θi ’s are diagonal, if and only if each θi is diagonalizable and

θi ◦ θj = θj ◦ θi for all i, j ∈ I.

If the θi ’s are simultaneously diagonalizable, they are obviously diagonalizable and commute. Now

consider the converse. Assume the θi ’s are all diagonalizable and that they pairwise commute. We

show that they are simultaneously diagonalizable by induction on dim V . If dim V = 1 it is clear

(everything is diagonal!). Now in general, if each θ_i is just multiplication by a scalar c_i, then in any basis each θ_i looks like c_i I and they're simultaneously diagonalizable for free.

Otherwise we can find some θ_i, wlog θ_1, that is not a scalar, i.e. having distinct eigenvalues λ_1, . . . , λ_n with n > 1. Let V_i = ker (θ_1 − λ_i), so V = V_1 ⊕ · · · ⊕ V_n as θ_1 is diagonalizable, and dim V_i < dim V. Note all the other θ_j leave V_i invariant: if v ∈ V_i then θ_1(θ_j(v)) = θ_j(θ_1(v)) = λ_i θ_j(v), hence θ_j(v) ∈ V_i still.

I claim that the restrictions θ_j|V_i of each θ_j to V_i are still diagonalizable. To prove this, note that the minimal polynomial of θ_j is a product of distinct linear factors. The minimal polynomial of θ_j|V_i divides this, so it too is a product of distinct linear factors. Hence θ_j|V_i is diagonalizable.

Now we can apply induction to see for each i that the {θ_j|V_i | j ∈ I} are simultaneously diagonalizable. Pick a basis for each V_i consisting of simultaneous eigenvectors, then glue these bases together to get a basis of simultaneous eigenvectors for all of V.

33. Let V and W be vector spaces over a field F. Construct a natural injective homomorphism from V* ⊗ W* to (V ⊗ W)*, and prove that it is an isomorphism if V and W are both finite dimensional. Is it an isomorphism if they are infinite dimensional?


Define a map from V* × W* to (V ⊗ W)* mapping (f, g) to the linear map f ⊗ g : V ⊗ W → F that sends v ⊗ w to f(v)g(w) (constructed in class). This is bilinear so we get induced a map V* ⊗ W* → (V ⊗ W)*.

I claim that this map is injective. Take something x in the kernel. We can write it as x = f1 ⊗

g1 + · · · + fn ⊗ gn for linearly independent functions f1 , . . . , fn ∈ V ∗ and some other (not necessarily

independent) functions g1 , . . . , gn ∈ W ∗ . It is in the kernel of our map, which means for every v ∈ V

and w ∈ W that (f1 ⊗ g1 + · · · + fn ⊗ gn )(v ⊗ w) = f1 (v)g1 (w) + · · · + fn (v)gn (w) = 0. Since f1 , . . . , fn

are linearly independent, we can find v1 , . . . , vn so that fi (vj ) = δi,j . Then for all i and w ∈ W

f1 (vi )g1 (w) + · · · + fn (vi )gn (w) = gi (w) = 0. Hence gi = 0 for all i. Hence x = 0.

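If both are finite dimensional then dim V* ⊗ W* = (dim V)(dim W) = dim (V ⊗ W)*. So the injective map we've constructed must actually be an isomorphism.

If they're both infinite dimensional, it definitely won't be an isomorphism: every element in the image is a finite sum f1 ⊗ g1 + · · · + fn ⊗ gn, so the associated map V → W*, v ↦ f1(v)g1 + · · · + fn(v)gn, has finite rank, whereas (V ⊗ W)* contains functionals for which this map has infinite rank.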

34. Recall a bilinear form on a vector space V is a bilinear map from V × V to the ground field

F. Given any two such bilinear forms b, b′, you can add them together to get a new form b + b′ with (b + b′)(v, w) = b(v, w) + b′(v, w) and you can multiply by scalars too. This makes

the space of bilinear forms on V into a vector space in its own right.

(i) Prove that the vector space of bilinear forms on V is naturally isomorphic to the vector

space (V ⊗ V )∗ .

(ii) Assuming that V is finite dimensional, prove that (V ⊗ V )∗ is naturally isomorphic to

HomF (V, V ∗ ). (Hence giving a bilinear form on V is equivalent to giving a linear map from

V to V ∗ .)

(i) Take a bilinear form b. This means a bilinear map from V × V to F . By the universal property

of tensor product, this induces a unique linear map from V ⊗ V to F , i.e. an element of (V ⊗ V )∗ .

Conversely given an element f ∈ (V ⊗ V)* you get a bilinear form b(v, w) = f(v ⊗ w), and these two constructions are mutually inverse linear maps.

(ii) If V is finite dimensional then by (i) and the previous question the space of bilinear forms is isomorphic to V* ⊗ V*. But from class, V* ⊗ W ≅ Hom_F(V, W) for any finite dimensional V. Hence V* ⊗ V* ≅ Hom_F(V, V*).

35. Let A and B be abelian groups.

(i) Prove that A ⊗_Z Z_m ≅ A/mA.

(ii) Prove that Z_m ⊗_Z Z_n ≅ Z_k where k = GCD(m, n).

(iii) Assuming that A and B are finitely generated, describe A ⊗_Z B in general.

(i) Define a map A × Z_m → A/mA by (a, z) ↦ az + mA. Note this is well-defined: if z ≡ z′ (mod m) then az ≡ az′ (mod mA). Also it is balanced. Hence it induces a map A ⊗_Z Z_m → A/mA such that a ⊗ 1 ↦ a + mA. It is clearly onto.

Conversely, define a map A → A ⊗_Z Z_m mapping a to a ⊗ 1. Under this map, ma ↦ ma ⊗ 1 = a ⊗ m = 0, so mA lies in the kernel. Hence our map factors through the quotient A/mA to induce a well-defined map A/mA → A ⊗_Z Z_m under which a + mA ↦ a ⊗ 1. This is a two-sided inverse to the map in the preceding paragraph.

(ii) Define a map Z_m × Z_n → Z_k mapping ([a]_m, [b]_n) to [a]_k [b]_k. This is well-defined since k divides both m and n, and it is balanced, so induces a map Z_m ⊗_Z Z_n → Z_k such that [a]_m ⊗ [b]_n ↦ [a]_k [b]_k. Note it is onto since 1 ⊗ 1 maps to 1 which generates Z_k.

On the other hand, Z_m ⊗_Z Z_n is cyclic, generated by the element [1] ⊗ [1] (any pure tensor looks like [a] ⊗ [b] = a[1] ⊗ b[1] = ab[1] ⊗ [1]; any tensor is a sum of pure tensors hence a multiple of [1] ⊗ [1] too). And now if you write k = sm + tn with s, t ∈ Z, you have that k[1] ⊗ [1] = sm[1] ⊗ [1] + tn[1] ⊗ [1] = s[m] ⊗ [1] + t[1] ⊗ [n] = 0. Hence Z_m ⊗_Z Z_n is of order at most k. So the epimorphism from the previous paragraph must be injective too, i.e. it is an isomorphism.

OR: you can use (i), which shows that Z_m ⊗_Z Z_n ≅ Z_m/(nZ_m), which is (Z/mZ)/((nZ + mZ)/mZ) ≅ Z/(m, n) = Z/(k).
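The second argument reduces everything to the order of Z_m/(nZ_m), which is easy to compute by listing the subgroup nZ_m. A quick numerical sketch (the function name is made up here):

```python
from math import gcd

def order_of_quotient(m, n):
    """Order of Z_m / n Z_m, computed by listing the subgroup n Z_m directly."""
    image = {(n * x) % m for x in range(m)}
    return m // len(image)

for m, n in [(4, 6), (12, 18), (5, 7), (9, 3)]:
    print(m, n, order_of_quotient(m, n), gcd(m, n))
```

In each row the last two numbers agree, as (ii) predicts.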

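(iii) Just write A and B as direct sums of cyclic groups (copies of Z and of Z_m's, e.g. via the primary decomposition) and use the fact that ⊗ commutes with ⊕; the individual summands are then computed by (i) and (ii)...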


36. True or False?

(i) If V is a torsion Z-module then V ⊗Z Q = 0.

(ii) Q ⊗_Z Q ≅ Q.

(iii) If R is a commutative ring and M, N are R-modules, then m ⊗ n = m′ ⊗ n′ in M ⊗_R N implies that m = m′ and n = n′.

(iv) If R is a commutative ring and M, N are free R-modules, then M ⊗_R N ≅ M ⊗_Z N as abelian groups.

(i) TRUE. It suffices to show every pure tensor is zero. Take v ⊗ a/b. Since v is torsion, mv = 0 for some m ≠ 0. But then v ⊗ a/b = v ⊗ m(a/(mb)) = mv ⊗ a/(mb) = 0.

(ii) TRUE. Define a map Q × Q → Q, (a/b, c/d) ↦ ac/(bd). It is balanced, hence it induces a map f : Q ⊗_Z Q → Q. It is an isomorphism, because I can write down a two-sided inverse map: g : a/b ↦ 1 ⊗ a/b. Clearly f ◦ g is the identity, but it's not quite clear that g ◦ f is....

Consider a pure tensor a/b ⊗ c/d = b(a/b) ⊗ c/(bd) = a ⊗ c/(bd) = 1 ⊗ ac/(bd). It follows that any element of Q ⊗_Z Q (not just pure tensors) can be written as 1 ⊗ a/b. Now on such an element it is clear that g ◦ f is the identity.

(iii) FALSE. I mean rm ⊗ n = m ⊗ rn and you needn't have rm = m...

(iv) FALSE. For instance take R = Z × Z. Then R ⊗_R R ≅ R ≅ Z × Z = Z^{⊕2} as abelian group. But (Z ⊕ Z) ⊗_Z (Z ⊕ Z) ≅ Z^{⊕4} as abelian group.

37. Let R and S be rings. We have the tensor functor ? ⊗_R ? from mod-R × R-mod to abelian groups and the tensor functor ? ⊗_S ? from mod-S × S-mod to abelian groups. Composing them in two different ways gives two different functors F = (? ⊗_R ?) ⊗_S ? and G = ? ⊗_R (? ⊗_S ?) from mod-R × R-mod-S × S-mod to abelian groups.

(i) Explain what it means to say that the functors F and G are isomorphic functors.

(ii) Now use the universal property of tensor product to prove that F and G are indeed

isomorphic. (This is “associativity of tensor product”).

(i) It means for any triple M, N, P of modules there is an isomorphism η_{M,N,P} : (M ⊗ N) ⊗ P → M ⊗ (N ⊗ P) such that for any morphisms f : M → M′, g : N → N′ and h : P → P′, we have that η_{M′,N′,P′} ◦ ((f ⊗ g) ⊗ h) = (f ⊗ (g ⊗ h)) ◦ η_{M,N,P}.

(ii) Of course we just need a homomorphism (M ⊗ N) ⊗ P → M ⊗ (N ⊗ P) such that the pure tensor (m ⊗ n) ⊗ p maps to m ⊗ (n ⊗ p). The diagram in (i) will then certainly commute. But we need to prove that there exists such a map using the universal property.

For each p ∈ P, define a map f_p : M × N → M ⊗ (N ⊗ P) sending (m, n) to m ⊗ (n ⊗ p). It's balanced so induces a unique map f̄_p : M ⊗ N → M ⊗ (N ⊗ P) sending m ⊗ n to m ⊗ (n ⊗ p).

Now given this define a map (M ⊗ N) × P → M ⊗ (N ⊗ P) mapping (x, p) to f̄_p(x). It's balanced so induces a unique map (M ⊗ N) ⊗ P → M ⊗ (N ⊗ P) such that (m ⊗ n) ⊗ p ↦ f̄_p(m ⊗ n) = m ⊗ (n ⊗ p). The same argument with the roles of the two sides swapped gives a map in the other direction sending m ⊗ (n ⊗ p) to (m ⊗ n) ⊗ p, and the two maps are mutually inverse since they are inverse on pure tensors. Phew.

38. Let n > 1. Prove that Zn is both a projective and an injective Zn -module.

Clearly it's projective as it's free.

For injectivity, let’s apply the criterion for injectivity. We just need to show that if I is an ideal

of Zn and α : I → Zn is a module homomorphism then α extends to a map Zn → Zn . We know

I = dZn for some d|n. Let k be such that α([d]) = [k]. Since (n/d) times [d] equals zero in Zn , we

must have that (n/d) times [k] equals zero in Zn , i.e. n divides nk/d, i.e. d divides k. Let e = k/d.

Now define β : Zn → Zn by β([x]) = [xe]. Since β([d]) = [k] = α([d]) we get that β extends α. Done.
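The divisibility argument can be tested by brute force for small n. In the sketch below (names invented here), a homomorphism α : dZn → Zn is recorded by the residue k = α([d]), which is allowed exactly when (n/d)k = 0 in Zn; we then search for a multiplier e with β([x]) = [xe] extending α.

```python
def extension_multiplier(n, d, k):
    """Return e with [d*e] = [k] in Z_n, so that x -> x*e extends alpha, or None if none exists."""
    for e in range(n):
        if (d * e) % n == k % n:
            return e
    return None

def baer_holds(n):
    """Check that every homomorphism from an ideal dZ_n to Z_n extends to Z_n."""
    for d in (d for d in range(1, n + 1) if n % d == 0):
        for k in range(n):
            # alpha([d]) = [k] defines a homomorphism on dZ_n exactly when (n/d)*k = 0 in Z_n.
            if ((n // d) * k) % n == 0 and extension_multiplier(n, d, k) is None:
                return False
    return True

print(all(baer_holds(n) for n in range(2, 61)))  # True
```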

39. Let R be commutative. True or false?

(a) Every submodule of a projective R-module is projective.

(b) Every submodule of an injective R-module is injective.


(c) Every quotient of a projective R-module is projective.

(d) Every quotient of an injective R-module is injective.

They are all false in general. For counterexamples:

(a),(d) Take R = Z4 and M = Z2 . Note R is both projective and injective (by the preceding

question). And M is both a submodule and a quotient module of R, indeed there is a short exact

sequence 0 → Z2 → Z4 → Z2 → 0 (first map being multiplication by 2). It doesn’t split since Z4

is indecomposable. So Z2 cannot be injective (since anything starting with an injective does split)

and Z2 cannot be projective (since anything ending with a projective does split).
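That the sequence 0 → Z2 → Z4 → Z2 → 0 does not split can also be seen by listing all candidate splittings, since a homomorphism out of a cyclic group is determined by the image of 1. A small sketch, with `homs` a helper name chosen here:

```python
def homs(a, b):
    """All group homomorphisms Z_a -> Z_b, each given by the image t of 1 (need a*t = 0 in Z_b)."""
    return [t for t in range(b) if (a * t) % b == 0]

# Sections of the reduction map Z_4 -> Z_2: homomorphisms s : Z_2 -> Z_4 with s(1) mod 2 == 1.
sections = [t for t in homs(2, 4) if t % 2 == 1]
# Retractions of the inclusion Z_2 -> Z_4, x -> 2x: homomorphisms r : Z_4 -> Z_2 with r(2) == 1.
retractions = [t for t in homs(4, 2) if (2 * t) % 2 == 1]
print(homs(2, 4), sections, retractions)  # [0, 2] [] []
```

Both lists are empty, so there is no splitting on either side.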

(b) Take R = Z, M = Q which is injective as it's divisible, and consider the submodule Z of Q. It is not injective since it's not divisible.

(c) Take R = Z and M = Z/2Z, a quotient of the projective module Z. It's not projective.

40. Prove that the following conditions on a ring R are equivalent:

(a) every left R-module is projective;

(b) every left R-module is semisimple;

(c) every left R-module is injective.

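We have shown that a module K is injective if and only if every short exact sequence 0 → K → M → Q → 0 starting with K splits, that Q is projective if and only if every such sequence ending with Q splits, and that M is semisimple if and only if every such sequence with M in the middle splits. So each of (a), (b), (c) is equivalent to the statement that every short exact sequence of left R-modules splits.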

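41. Suppose that $0 \to K \xrightarrow{i} M \xrightarrow{\pi} Q \to 0$ is a split short exact sequence of left R-modules. Let B be a right R-module. Prove that

$$0 \longrightarrow B \otimes_R K \xrightarrow{\ \mathrm{id}_B \otimes i\ } B \otimes_R M \xrightarrow{\ \mathrm{id}_B \otimes \pi\ } B \otimes_R Q \longrightarrow 0$$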

is exact. Give a counterexample to show that this need not be true if you remove the word

“split” above.

Since tensor is right exact, everything is fine except that the first map idB ⊗ i might not be injective.

But if the ses is split, then there exists a splitting σ : M → K such that σ ◦ i = idK . Then

idB ⊗ σ : B ⊗ M → B ⊗ K satisfies (idB ⊗ σ) ◦ (idB ⊗ i) = idB ⊗ (σ ◦ i) = idB ⊗ idK . Hence idB ⊗ i

is injective as required.

For a counterexample, take 0 → Z → Z → Z3 → 0 where the first map is multiplication by 3. Apply Z3 ⊗_Z ?. The first map becomes the map Z3 → Z3 given by multiplication by 3 = 0. That is not injective!

42. If f : V → V′ and g : W → W′ are surjective maps of right and left R-modules, respectively, prove that f ⊗ g : V ⊗_R W → V′ ⊗_R W′ is surjective. Give a counterexample to show that this need not be true if you replace the word “surjective” with the word “injective” everywhere.

It suffices to show any pure tensor of the form v′ ⊗ w′ lies in the image, since pure tensors span V′ ⊗_R W′. But f is onto so there exists v ∈ V such that f(v) = v′. Similarly there exists w ∈ W such that g(w) = w′. Then (f ⊗ g)(v ⊗ w) = f(v) ⊗ g(w) = v′ ⊗ w′.

Now replace the word surjective with injective. Take f : Z → Z being multiplication by 3, and g : Z3 → Z3 being the identity map. Then f ⊗ g : Z ⊗_Z Z3 → Z ⊗_Z Z3 is the map Z3 → Z3 given by multiplication by 3 = 0. Hence it is the zero map. It's not injective.

43. Describe the abelian group C ⊗Z Zn .

By a general result from last week’s homework, for any commutative ring R, M ⊗_R (R/I) ≅ M/IM. So this is C/nC. But nC = C again. So this is just the trivial abelian group 0.

44. True or false?

(a) Every submodule of a projective Z-module is projective. (Hint: every submodule of a

free Z-module is free.)


(b) Every submodule of an injective Z-module is injective.

(c) Every quotient of a projective Z-module is projective.

(d) Every quotient of an injective Z-module is injective.

(a) True. This follows from the hint because every projective Z-module is free. To prove the latter,

let P be projective, take a short exact sequence 0 → K → F → P → 0 with F free. Then it splits as P is projective, hence F ≅ P ⊕ K. But this shows P is isomorphic to a submodule of a free module, hence it is free by the hint.

(b) False. Take Z inside Q like last week.

(c) False. Take Z mapping onto Z2 , the latter is not projective.

(d) True. Quotients of divisible abelian groups are divisible.

45. A right R-module M is called flat if the functor M ⊗R ? is exact.

(i) Prove that a direct sum of modules is flat if and only if each of the modules is flat.

(ii) Prove that the regular module RR is flat.

(iii) Prove that any projective module is flat.

(i) Let f : A → B be a monomorphism. Applying the functor (M ⊕ N) ⊗_R ? and using the fact that tensor commutes with direct sum (which remember is actually some natural isomorphism of functors...), we get the following commutative diagram:

$$\begin{array}{ccc}
(M \oplus N) \otimes_R A & \xrightarrow{\ \mathrm{id}_{M \oplus N} \otimes f\ } & (M \oplus N) \otimes_R B \\
\downarrow & & \downarrow \\
(M \otimes_R A) \oplus (N \otimes_R A) & \xrightarrow{\ (\mathrm{id}_M \otimes f,\ \mathrm{id}_N \otimes f)\ } & (M \otimes_R B) \oplus (N \otimes_R B)
\end{array}$$

where the vertical maps are isomorphisms. Now the top map is injective if and only if the bottom map is injective, which is if and only if both id_M ⊗ f and id_N ⊗ f are injective. This is exactly what we needed... M ⊕ N is flat if and only if both M and N are flat.

(ii) The functor R ⊗_R ? is isomorphic to the identity functor. The latter is exact, hence so is R ⊗_R ?.

(iii) Let P be projective. It's a summand of a free module. Hence by (i) we are done if we can prove that any free module is flat. But free modules are direct sums of the regular module. So by (i) again (which holds even for infinite direct sums ... though I only wrote down the proof for two) we are done if we can prove that the regular module is flat. But that is true by (ii).

46. Let M be a left R-module. I explained in class by general nonsense (properties of adjoint

functors) that the functor HomR (M, ?) is left exact. Give a direct proof of this statement,

then give an example to show that it need not be exact.

Take an exact sequence $0 \to A \xrightarrow{f} B \xrightarrow{g} C$. Apply the functor HomR (M, ?) to get

$$0 \to \mathrm{Hom}_R(M, A) \xrightarrow{\ \bar f\ } \mathrm{Hom}_R(M, B) \xrightarrow{\ \bar g\ } \mathrm{Hom}_R(M, C).$$

The map f¯ sends θ to f ◦ θ, the map ḡ sends φ to g ◦ φ. Note that g ◦ f = 0, hence applying the

functor HomR (M, ?) we get at once that ḡ ◦ f¯ = 0. This shows for free that im f¯ ⊆ ker ḡ.

Now we show that f¯ is injective. Say θ : M → A has f¯(θ) = 0. This means that f (θ(m)) = 0 for all

m ∈ M . But f is injective, hence in fact θ(m) = 0 for all m ∈ M , i.e. θ = 0.

Finally we show that imf¯ ⊇ ker ḡ. Take φ : M → B such that ḡ(φ) = 0. This means that

g(φ(m)) = 0 for all m ∈ M . We need to show that there exists some θ : M → A such that

(f¯)(θ) = φ, i.e. f (θ(m)) = φ(m) for all m ∈ M . Take m ∈ M . Since g(φ(m)) = 0 we have that

φ(m) ∈ ker g = im f , hence there exists a unique element θ(m) ∈ A such that f (θ(m)) = φ(m).

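This defines a map θ : M → A. It is an R-module homomorphism because f(θ(rm)) = φ(rm) = rφ(m) = rf(θ(m)) = f(rθ(m)), hence θ(rm) = rθ(m) as f is injective (additivity is checked in the same way).

For an example where exactness fails, take R = Z, M = Z2 and the exact sequence Z → Z2 → 0. Applying HomZ (Z2, ?) gives HomZ (Z2, Z) = 0 → HomZ (Z2, Z2) ≅ Z2, which is not surjective.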


47. Let GROUPS be the category of all groups, and AB be the category of all abelian groups. There is an obvious “forgetful functor” F : AB → GROUPS. We also have the functor α : GROUPS → AB (“abelianization”) defined on objects by G ↦ G/[G, G] (and on morphisms?). The functor α is either left adjoint or right adjoint to F, I always forget which until I work it out. Well, work it out, then prove that it's the case.

Take an abelian group A and any old group K. Then,

Hom_GROUPS(K, F A) ≅ Hom_AB(K/[K, K], A) ≅ Hom_AB(αK, A).

The first isomorphism is because any map from K to an abelian group sends the commutator

subgroup [K, K] to zero, so by the universal property of quotients must factor through to give a

unique map from K/[K, K] to A. Hence, (α, F ) is an adjoint pair of functors, and α is left adjoint

to F .

48. Let S be a unital subring of a ring R. There is a functor Res^R_S (“restriction”) from left R-modules to left S-modules defined by sending an R-module M to the same thing but viewed just as an S-module. Prove that R ⊗_S ? is left adjoint to Res^R_S.

I claim that the functor Res^R_S is isomorphic to the functor Hom_R(_R R_S, ?). Yes that’s pretty obvious. Once you’ve noticed this it is exactly adjointness of tensor and hom: _R R_S ⊗_S ? is left adjoint to Hom_R(_R R_S, ?).

Incidentally, Res^R_S also has a right adjoint. Indeed, you can also view it as the functor _S R_R ⊗_R ?. Then by adjointness of tensor and hom, the functor Hom_S(_S R_R, ?) is right adjoint to it.

49. Prove that every module over the matrix ring Mn (F ) (F a field) is semisimple.

Well one proof is to note that Mn (F ) is Morita equivalent to F , and every F -module is semisimple.

But you can prove this directly quite easily, as follows. The left regular module is semisimple (it is

a direct sum of n copies of the “natural” irreducible module of column vectors – each column gives

a copy of this module). Hence any free module is semisimple. Hence every module is semisimple (as

a quotient of a free module).

Sorry this was a bit of a lame question.

50. (Pontrjagin duality) If G is an abelian group, its Pontrjagin dual is the group G∗ = HomZ (G, Q/Z).

In fact HomZ (?, Q/Z) is a contravariant functor from abelian groups to abelian groups.

(i) Prove that Q/Z is an injective abelian group.

(ii) Prove that if 0 → A → B → C → 0 is a short exact sequence of abelian groups, then so

is 0 → C ∗ → B ∗ → A∗ → 0.

(iii) Prove that (Z_n)* ≅ Z_n.

(iv) If G is a finite abelian group prove that G* ≅ G.

(i) It is divisible.

(ii) That the functor HomZ (?, Q/Z) is left exact is easy enough to prove (e.g. by direct argument like

question 46 above). This means that we certainly have an exact sequence 0 → C ∗ → B ∗ → A∗ . The

tricky thing is to show that the injective map f : A → B induces a surjective map f ∗ : B ∗ → A∗ , i.e.

that HomZ (?, Q/Z) sends injections to surjections. This follows because Q/Z is an injective module

by (i).

Indeed, we need to prove that for every map α : A → Q/Z there is a map β : B → Q/Z such that

α = β ◦ f . We get that by the definition of injective module...

(iii) Take the ses 0 → Z → Z → Z_n → 0 where the first map f : Z → Z is multiplication by n. Apply the functor Hom_Z(?, Q/Z) using (ii) to get a ses 0 → (Z_n)* → Hom_Z(Z, Q/Z) → Hom_Z(Z, Q/Z) → 0. The map Hom_Z(Z, Q/Z) → Hom_Z(Z, Q/Z) here sends θ to θ ◦ f. But Hom_Z(Z, Q/Z) ≅ Q/Z, via the isomorphism given by evaluation at 1. Making this identification, noting that (θ ◦ f)(1) = θ(n) = nθ(1), you get a short exact sequence

0 → (Z_n)* → Q/Z → Q/Z → 0.

The second map is just multiplication by n, so has kernel {m/n (mod 1) | m ∈ Z}. Clearly this is isomorphic to Z_n, via the map sending m/n (mod 1) to m (mod n). Since the sequence is exact, the first term (Z_n)* is precisely this kernel, hence it is isomorphic to Z_n.
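To see the kernel of multiplication by n on Q/Z concretely, one can list the elements killed by n among fractions of bounded denominator. This is only an illustrative sketch: elements of Q/Z are represented by fractions in [0, 1), and the bound `max_denominator` is an artificial cut-off introduced here to make the search finite.

```python
from fractions import Fraction

def n_torsion(n, max_denominator):
    """Fractions x in [0, 1) with denominator <= max_denominator and n*x = 0 in Q/Z."""
    candidates = {Fraction(p, q) for q in range(1, max_denominator + 1) for p in range(q)}
    return sorted(x for x in candidates if (n * x).denominator == 1)

print(n_torsion(6, 20))
```

For n = 6 this lists exactly 0, 1/6, 1/3, 1/2, 2/3, 5/6, i.e. the six classes m/6 (mod 1).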

(iv) The functor HomZ (?, Q/Z) commutes with finite direct sums (see question 4(i)). So this follows

by (iii) and the structure theorem for finite abelian groups.