Homework 4 Solutions: Algorithms and Data Structures

Homework 4

Solutions

Problem 1

(a) Suppose we are given a sequence S of n elements, each of which is an integer in the range [0, n^2 − 1]. Describe a simple method for sorting S in O(n) time. (Hint: think of alternative ways of viewing the elements.)

Solution Let k be an integer in the range [0, n^2 − 1]. If we represent it in n-ary form, it consists of two digits (l, f) such that k = l ∗ n + f, where l and f are each in the range [0, n − 1]. Conveniently, the lexicographical ordering of the n-ary representations corresponds to the ordering of the numbers. Radix-sort as defined on page 284 of the course packet sorts pairs of numbers like this lexicographically. Radix-sort on pairs with components in [0, n − 1] runs in O(n) time, and converting between the two representations also takes O(n) time, so the whole algorithm runs in linear time.

Algorithm LimitedSort(S, n)
  Input: a sequence S of n numbers in the range [0, n^2 − 1]
  Output: sorted S
  { change the representation }
  for i ← 0 to n − 1 do
    S[i] ← ((S[i] − S[i] mod n)/n, S[i] mod n)
  radix-sort(S)
  { restore the representation }
  for i ← 0 to n − 1 do
    S[i] ← S[i][0] ∗ n + S[i][1]
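The idea above can be sketched in Python. This is only an illustration, not the course packet's radix-sort: the function name limited_sort and the helper bucket_pass are made up for this example, and the two base-n digits are computed on the fly instead of being stored as pairs.

```python
def limited_sort(seq):
    """Sort integers in [0, n*n - 1], where n = len(seq), in O(n) time."""
    n = len(seq)
    if n <= 1:
        return list(seq)

    def bucket_pass(items, digit):
        # Stable bucket sort on one base-n digit (a value in [0, n - 1]).
        buckets = [[] for _ in range(n)]
        for item in items:
            buckets[digit(item)].append(item)
        return [item for bucket in buckets for item in bucket]

    # Least significant digit first; the second pass is stable, so ties on
    # the high digit keep the low-digit order from the first pass.
    by_low = bucket_pass(seq, lambda k: k % n)
    return bucket_pass(by_low, lambda k: k // n)


print(limited_sort([8, 3, 0, 5]))
```

Each pass touches n items and n buckets, so the two passes together do O(n) work.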

(b) Does the running time of straight radix-sort depend on the order of keys in the input?

Solution The running time of the radix-sort algorithm that uses bucket-sort as an internal

stable sort (as described on page 284 of the course packet) does not depend on the order of keys,

because bucket-sort starts by removing every element from the array and inserting it in a bucket

(single insertion takes constant time), and then reads each element from the buckets back into the

array all at a constant time cost per element.

(c) Does the running time of radix-exchange-sort depend on the order of keys in the input?

Solution The asymptotic running time will always be O(bn) for an array of n numbers with b bits each, because for every bit we scan through every element of the array exactly once. On the other hand, the constant factors may differ depending on the number of swap operations we have to perform. For example, given a sorted sequence, we perform nb bit comparisons and no swaps. But given a sequence where, at each iteration of radix-exchange-sort, all elements with a 1 in the bit being examined sit at the beginning of their subarray, we can end up with as many as nb/2 swaps.

Input   After bit 1   After bit 2   After bit 3
101     010           001           000
100     011           000           001
111     000           011           010
110     001           010           011
001     110           101           100
000     111           100           101
011     100           111           110
010     101           110           111
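To check the swap counts on the eight 3-bit keys listed above, here is a small Python sketch of radix-exchange-sort that counts swaps. The partitioning scheme (two indices moving toward each other) is an assumption about how the course's version works, and the name radix_exchange_sort is made up for this example.

```python
def radix_exchange_sort(keys, bits):
    """Sort non-negative integers of `bits` bits in place; return the swap count."""
    swaps = 0

    def sort_range(lo, hi, bit):
        nonlocal swaps
        if lo >= hi or bit < 0:
            return
        mask = 1 << bit
        i, j = lo, hi
        while i <= j:
            if (keys[i] & mask) == 0:
                i += 1                      # already on the 0 side
            elif (keys[j] & mask) != 0:
                j -= 1                      # already on the 1 side
            else:
                keys[i], keys[j] = keys[j], keys[i]
                swaps += 1
                i += 1
                j -= 1
        sort_range(lo, j, bit - 1)          # keys with a 0 in this bit
        sort_range(i, hi, bit - 1)          # keys with a 1 in this bit

    sort_range(0, len(keys) - 1, bits - 1)
    return swaps


worst = [0b101, 0b100, 0b111, 0b110, 0b001, 0b000, 0b011, 0b010]
print(radix_exchange_sort(worst, 3))            # 12 swaps, which is nb/2
print(radix_exchange_sort(list(range(8)), 3))   # 0 swaps on sorted input
```

Both runs scan every key once per bit, so only the number of swaps differs between them.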

CS 16 — Introduction to Algorithms and Data Structures

Semester II, 99–00

Problem 2 A forensic lab receives a delivery of n samples. They look identical, but in fact, some of them have different chemical composition. There is a device that can be applied to two samples and tells whether they are different or not. It is known in advance that most of the samples (more than 50%) are identical. Find one of those identical samples making no more than n comparisons. (Beware: it is possible that two samples are identical but do not belong to the majority of identical samples.)

Solution The basic idea is that if two samples are different, both may be discarded: at least one of them does not belong to the majority, so the majority remains a majority among the samples that are left.

The algorithm processes samples one by one, and keeps track of the current “candidate” for the majority sample, c, and its “multiplicity”, k. The invariant is that if we add k copies of the sample c to the yet unprocessed samples, the majority sample of the initial array and the majority sample of this new array are the same.

We will use ≠ and = to compare the samples (as there is no total or partial order on the samples).

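Algorithm FindMajority(S)
  Input: array S of n samples
  Output: the majority element of S
  k ← 0
  for i ← 0 to n − 1 do
    if k = 0 then
      k ← 1
      c ← S[i]
    else
      if S[i] = c then
        k ← k + 1
      else
        k ← k − 1
  return c

Each iteration makes at most one comparison, and the first iteration makes none, so the algorithm uses at most n − 1 comparisons.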
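A minimal Python sketch of the candidate-and-multiplicity idea follows. It visits every sample, uses only equality tests, and also reports how many comparisons were made. The name find_majority and the returned pair are choices made for this example, and the result is only meaningful when a strict majority really exists.

```python
def find_majority(samples):
    """Return (candidate, comparisons) for a list that has a strict majority."""
    candidate = None
    count = 0
    comparisons = 0
    for sample in samples:
        if count == 0:
            # No live candidate: adopt this sample without comparing.
            candidate, count = sample, 1
        else:
            comparisons += 1
            if sample == candidate:
                count += 1
            else:
                count -= 1      # discard one candidate copy and this sample
    return candidate, comparisons


print(find_majority([1, 2, 1, 3, 1, 1, 2]))
```

On the seven samples above, the sketch returns the majority value 1 after 4 comparisons, within the allowed n.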

An alternative solution is to go through the array, comparing each consecutive pair of samples. If a pair contains distinct samples, throw both out. If a pair consists of two “equal” samples, keep one of them. Then perform the same procedure on the remaining samples. Each such iteration throws out at least half of the samples. Let us assume, without loss of generality, that n = 2^k. Then the number of comparisons we have to perform is at most n/2 + n/4 + n/8 + . . . + 1 = 2^(k−1) + . . . + 1 = 2^k − 1 = n − 1.

Problem 3 Entries in a phone book have multiple keys: last name, first name, address, and

telephone number. They are sorted first by last name, then by first name, and finally by address.

Design a variation of radix sort for sorting the entries in a phone book, assuming that you have

available a procedure that sorts records on a single key. The running time of your algorithm should

be of the same order as the running time of the procedure that sorts on a single key. (Beware: this

procedure may not be stable.)

Solution Let single-sort(S,k) be the sorting procedure that sorts S according to the key k. If

single-sort is stable, the following algorithm can be used:

Algorithm sort(S):
  Input: a sequence S of length n
  Output: S, sorted
  let S′ be a copy of S    { to avoid changing S }
  single-sort(S′, address)
  single-sort(S′, firstname)
  single-sort(S′, lastname)

If single-sort isn’t stable, however, sorting by first name may scramble the results of the address-sorting step, and sorting by last name may scramble the results of the first-name-sorting step. In this case, it is necessary to first sort by last name, then sort each resulting group by first name, and then finally sort each resulting group by address. Let single-sort(S,k,i,j) be the sorting procedure that sorts S[i . . . j] according to the key k.

Algorithm sort(S):
  Input: a sequence S of length n
  Output: S, sorted
  single-sort(S, lastname, 0, S.size() − 1)
  left ← 0
  right ← 0
  while (right < S.size()) do
    current ← S[left].lastname()
    while (right ≠ S.size() and S[right].lastname() = current) do
      right ← right + 1
    single-sort(S, firstname, left, right − 1)
    bleft ← left
    bright ← left
    while (bright < right) do
      current ← S[bleft].firstname()
      while (bright ≠ right and S[bright].firstname() = current) do
        bright ← bright + 1
      single-sort(S, address, bleft, bright − 1)
      bleft ← bright
    left ← right
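The group-by-group approach can be sketched in Python as follows. Everything here is illustrative: records are assumed to be dictionaries with the keys lastname, firstname, and address, and single_sort is a made-up stand-in for the given procedure that deliberately reverses its input first so that it is not stable. The recursion over the key list replaces the two nested loops of the pseudocode.

```python
def single_sort(records, key, lo, hi):
    """Stand-in for the given procedure: sort records[lo..hi] on one key.
    Reversing the slice first deliberately breaks stability."""
    records[lo:hi + 1] = sorted(reversed(records[lo:hi + 1]),
                                key=lambda r: r[key])


def sort_groups(records, keys, lo, hi):
    """Sort records[lo..hi] on keys[0], then each run of equal keys[0]
    on the remaining keys."""
    if not keys or lo >= hi:
        return
    single_sort(records, keys[0], lo, hi)
    start = lo
    while start <= hi:
        end = start
        # Extend the run while the next record has the same key value.
        while end + 1 <= hi and records[end + 1][keys[0]] == records[start][keys[0]]:
            end += 1
        sort_groups(records, keys[1:], start, end)
        start = end + 1


def phone_book_sort(records):
    sort_groups(records, ["lastname", "firstname", "address"],
                0, len(records) - 1)


book = [
    {"lastname": "Lee", "firstname": "Ann", "address": "9 Elm"},
    {"lastname": "Kim", "firstname": "Bo", "address": "2 Oak"},
    {"lastname": "Lee", "firstname": "Ann", "address": "1 Ash"},
    {"lastname": "Kim", "firstname": "Al", "address": "7 Fir"},
]
phone_book_sort(book)
print([(r["lastname"], r["firstname"], r["address"]) for r in book])
```

At each level the groups are disjoint, so every record takes part in one single_sort call per key.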


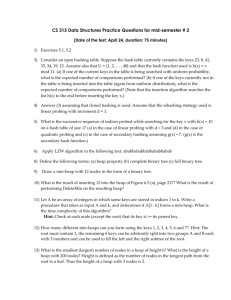

Problem 4

(a) Insert into an initially empty binary search tree items with the following keys (in this

order): 30, 40, 23, 58, 48, 26, 11, 13. Draw the tree after each insertion.

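Solution

[Figure: trees A through H, one after each insertion. In the final tree H, the root is 30; its children are 23 and 40; the children of 23 are 11 and 26; the right child of 11 is 13; the right child of 40 is 58; the left child of 58 is 48.]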
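The sequence of trees can be regenerated with a short Python sketch of plain binary search tree insertion. This is an illustration only: it uses None for empty subtrees instead of the course's external placeholder nodes, and the compact key(left, right) notation printed by show is invented for this example.

```python
class Node:
    def __init__(self, key):
        self.key = key
        self.left = None
        self.right = None


def insert(root, key):
    """Insert key into the BST rooted at root; return the (possibly new) root."""
    if root is None:
        return Node(key)
    node = root
    while True:
        if key < node.key:
            if node.left is None:
                node.left = Node(key)
                return root
            node = node.left
        else:
            if node.right is None:
                node.right = Node(key)
                return root
            node = node.right


def show(node):
    """Render a tree as key(left, right), with '-' for an empty subtree."""
    if node is None:
        return "-"
    if node.left is None and node.right is None:
        return str(node.key)
    return f"{node.key}({show(node.left)}, {show(node.right)})"


root = None
for key in [30, 40, 23, 58, 48, 26, 11, 13]:
    root = insert(root, key)
    print(show(root))   # one line per tree, A through H
```

The last line printed is 30(23(11(-, 13), 26), 40(-, 58(48, -))).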

(b) Remove from the binary search tree in figure 8.2 the following keys (in this order): 32,

65, 76, 88, 97. Draw the tree after each removal.

Solution

[Figure: trees A through E, showing the tree after each of the five removals (32, 65, 76, 88, 97).]

(c) A different binary search tree results when we try to insert the same sequence into an

empty binary tree in a different order. Give an example of this with at least 5 elements.

Solution Consider the elements 10, 20, 30, 40, 50. Inserting them in this order yields a right-heavy tree because each new element is inserted as the right child of the previously-inserted element. Inserting them in reverse order yields a left-heavy tree because each new element is inserted as the left child of the previously-inserted element.

[Figure: (a) the tree produced by inserting in order 10, 20, 30, 40, 50; (b) the tree produced by inserting in order 50, 40, 30, 20, 10.]
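The two shapes can be confirmed with a small Python sketch that builds each tree as nested tuples of the form (key, left, right). The tuple representation and the name build are assumptions made for this example only.

```python
def build(keys):
    """Insert keys in order into an empty BST; return nested (key, left, right) tuples."""
    def insert(tree, key):
        if tree is None:
            return (key, None, None)
        k, left, right = tree
        if key < k:
            return (k, insert(left, key), right)
        return (k, left, insert(right, key))

    tree = None
    for key in keys:
        tree = insert(tree, key)
    return tree


ascending = build([10, 20, 30, 40, 50])    # every node has only a right child
descending = build([50, 40, 30, 20, 10])   # every node has only a left child
print(ascending)
print(descending)
print(ascending == descending)
```

The same five keys give two different trees, and a third order such as 30, 20, 40, 10, 50 gives a balanced one.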

Problem 5

(a) Let T be a binary search tree, and let x be a key. Give an efficient algorithm for finding

the smallest key y in T such that y > x. Note that x may or may not be in T . Explain why your

algorithm has the running time it does.

Solution For each node v of a binary search tree, the keys in v’s left subtree are less than v

and the keys in v’s right subtree are greater than v. Thus, at each node a choice can be made:

• if v ≤ x then y is the smallest thing larger than x in v’s right subtree (if v = x then y is

the smallest thing in the right subtree, but this is just a special case of y being in the right

subtree and does not need to be treated separately)

• if v > x then y is the smaller of v and the smallest thing greater than x in v’s left subtree (it

is not necessary to consider v’s right subtree because while everything there is larger than x,

it is also larger than v)

This leads to the following algorithm:

Algorithm findFloor(T, x):
  Input: a binary search tree T and a key x
  Output: the smallest y in T such that y > x if such a y exists, and ∞ otherwise
  current ← T.root()
  best ← ∞
  while (T.isInternal(current)) do
    if (current.key() ≤ x) then
      current ← T.rightChild(current)    { y is in right subtree }
    else
      best ← current.key()               { current node is a candidate for y }
      current ← T.leftChild(current)     { y is in left subtree }
  return best

Note that when best is updated we can use best ← current.key() instead of best ← min(best, current.key())

because as soon as best is updated with the current node’s value, we go to the left subtree and all

of the keys in that subtree are less than the current node (and the new value of best).

The running time is O(h), where h is the height of the tree, because in each iteration of the while loop, current advances one level down the tree. Note that in the worst case (a degenerate, path-like tree) this is O(n), and in the best case (a balanced tree) it is O(log n).
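The search can be sketched in Python as below. The sketch departs from the pseudocode in two labeled ways: empty subtrees are represented by None instead of external nodes, and the function returns None instead of ∞ when no key is larger than x. The names Node and smallest_greater are made up for this example.

```python
class Node:
    def __init__(self, key, left=None, right=None):
        self.key = key
        self.left = left
        self.right = right


def smallest_greater(root, x):
    """Return the smallest key y > x in the BST, or None if there is none."""
    best = None
    node = root
    while node is not None:
        if node.key <= x:
            node = node.right       # y, if it exists, is in the right subtree
        else:
            best = node.key         # candidate; anything better is to the left
            node = node.left
    return best


# The tree built in Problem 4(a).
tree = Node(30,
            Node(23, Node(11, None, Node(13)), Node(26)),
            Node(40, None, Node(58, Node(48))))
print(smallest_greater(tree, 26))
print(smallest_greater(tree, 41))
print(smallest_greater(tree, 58))
```

The three calls print 30, 48, and None. Each loop iteration moves one level down, which is where the O(h) bound comes from.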

(b) Write a nonrecursive program to print out the keys from a binary search tree in order.

Solution Since the key of a node v is always greater than or equal to the keys in its left subtree, and less than or equal to the keys in its right subtree, our algorithm is just a glorified inorder traversal.

The problem is making this traversal non-recursive. Note that if we are moving up and down the tree (as in Figure 4.16), it is enough to know where we came from to know where we are going and whether we should print the key or not (we need to know where we came from since a node is visited several times).

Two algorithms that implement this non-recursive inorder traversal follow. The second one might be easier to read since it uses the algorithm inorderNext that appeared in the second homework, but it has a more complicated running time analysis.

Consider the following picture of a finite state automaton that describes the algorithm. Node

v is initialized to the root of T .

[Figure: finite state automaton with states DownLeft, UpFromLeft, DownRight, and UpFromRight. Transitions are labeled with conditions on v (internal, external, root, left child, right child) and with the actions "print v" and "GO" to the next state.]


The following algorithm is basically the diagram above in pseudo-code:

Algorithm PrintBST(T)
  Input: binary search tree T
  Output: keys of T printed in order
  v ← T.root()
  if (T.isExternal(v)) then
    return
  cmd ← DownLeft
  while (true) do
    if (cmd = DownLeft) then
      v ← T.leftChild(v)
      if (T.isExternal(v)) then
        print v
        cmd ← UpFromLeft
      else
        cmd ← DownLeft
    else if (cmd = UpFromLeft) then
      v ← T.parent(v)
      print v
      cmd ← DownRight
    else if (cmd = DownRight) then
      v ← T.rightChild(v)
      if (T.isExternal(v)) then
        print v
        cmd ← UpFromRight
      else
        cmd ← DownLeft
    else    { case when command is UpFromRight }
      v ← T.parent(v)
      if (T.isRoot(v)) then
        return    { the end of the traversal }
      if (T.leftChild(T.parent(v)) = v) then    { v is a left child }
        cmd ← UpFromLeft
      else
        cmd ← UpFromRight

Note that we print only when we are at a leaf or when we have just come up from a left child. Since any tree with n nodes has n − 1 edges, and all we do here is traverse each edge once down and once up, this is an O(n) time algorithm.
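The "know where we came from" idea can be sketched in Python with parent pointers and no stack. This is a simplified variant, not the automaton above: empty subtrees are None, keys live in every node, and the direction of travel is inferred by comparing the previously visited node with the current node's parent and left child. All names are made up for this example, and the node passed in is assumed to be the root of the whole tree.

```python
class Node:
    def __init__(self, key, parent=None):
        self.key = key
        self.parent = parent
        self.left = None
        self.right = None


def insert(root, key):
    """Plain BST insertion that also maintains parent pointers."""
    if root is None:
        return Node(key)
    node = root
    while True:
        side = "left" if key < node.key else "right"
        child = getattr(node, side)
        if child is None:
            setattr(node, side, Node(key, parent=node))
            return root
        node = child


def inorder_keys(root):
    """Yield keys in order using parent pointers; no recursion, no stack."""
    node, came_from = root, None
    while node is not None:
        if came_from is node.parent:
            # Arrived from above: go left if possible, else visit now.
            if node.left is not None:
                node, came_from = node.left, node
                continue
            yield node.key
            nxt = node.right if node.right is not None else node.parent
        elif came_from is node.left:
            # Returned from the left subtree: visit, then go right or up.
            yield node.key
            nxt = node.right if node.right is not None else node.parent
        else:
            # Returned from the right subtree: this node is finished.
            nxt = node.parent
        node, came_from = nxt, node


root = None
for key in [30, 40, 23, 58, 48, 26, 11, 13]:
    root = insert(root, key)
print(list(inorder_keys(root)))
```

As in the pseudocode, each edge is followed once down and once up, so the traversal takes O(n) time.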

The second version of this algorithm uses the inorderNext algorithm that appeared in homework 2.

Algorithm PrintBST(T)
  Input: binary search tree T
  Output: keys of T printed in order
  { find the left-most node in the tree }
  v ← T.root()
  while (T.isInternal(v)) do
    v ← T.leftChild(v)
  while (v ≠ null) do
    print v
    v ← inorderNext(v)

This routine goes down and up the tree in basically the same way as the algorithm above. We either go from a leaf to its parent (if one exists), or go to the leftmost node in the right subtree of v. A single call to inorderNext may follow a long path, but if we combine all the paths followed by inorderNext for every v, we just follow the contour of T, the same as in the first version of the algorithm. So the total cost of applying inorderNext to every v is also O(n) because, in the end, it just traverses every edge once up and once down.
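A Python sketch of this second version follows. The homework 2 definition of inorderNext is not reproduced in this document, so inorder_next below is a hypothetical stand-in that finds the inorder successor with parent pointers; empty subtrees are None, and the keys are collected in a list instead of being printed one by one.

```python
class Node:
    def __init__(self, key, parent=None):
        self.key = key
        self.parent = parent
        self.left = None
        self.right = None


def insert(root, key):
    """Plain BST insertion that also maintains parent pointers."""
    if root is None:
        return Node(key)
    node = root
    while True:
        side = "left" if key < node.key else "right"
        child = getattr(node, side)
        if child is None:
            setattr(node, side, Node(key, parent=node))
            return root
        node = child


def leftmost(node):
    while node.left is not None:
        node = node.left
    return node


def inorder_next(node):
    """Hypothetical stand-in for inorderNext: the inorder successor, or None."""
    if node.right is not None:
        return leftmost(node.right)
    # Climb until we leave a left subtree; that parent is the successor.
    while node.parent is not None and node is node.parent.right:
        node = node.parent
    return node.parent


def sorted_keys(root):
    keys = []
    node = leftmost(root) if root is not None else None
    while node is not None:
        keys.append(node.key)
        node = inorder_next(node)
    return keys


root = None
for key in [30, 40, 23, 58, 48, 26, 11, 13]:
    root = insert(root, key)
print(sorted_keys(root))
```

An individual call to inorder_next may climb or descend several levels, but over the whole traversal each edge is crossed at most twice.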
