Testing and Built-in Self-Test – A Survey

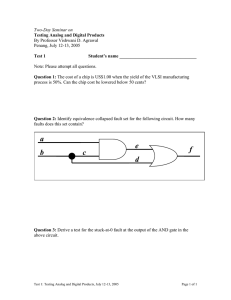

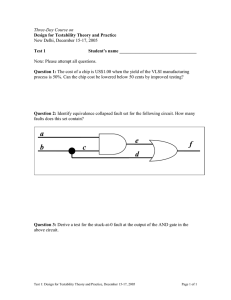

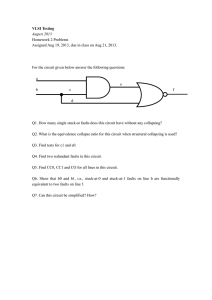

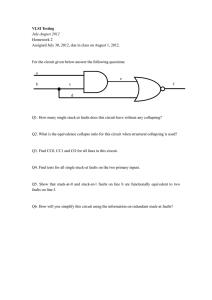
