Exercise II: Self-Organizing and Feedback Networks


FYTN06

HT 2015


Supervisor: Mattias Ohlsson

(mattias@thep.lu.se, 046-222 77 82)

Deadline: Jan 5, 2015

Abstract

In this exercise we will study self-organizing maps and feedback networks, under the following headings:

• Competitive networks for clustering

• Kohonen’s self-organizing feature map

• Learning Vector Quantization (LVQ)

• Elman networks for time series prediction

• Hopfield networks as an error-correcting associative memory.

The problems we will look at are both artificial and real-world problems. The environment you will work in is Matlab, specifically the Neural Network Toolbox (as in the previous exercise).

Sections 1 and 2 contain a summary of the network types we are going to look at. Section 3 gives a short description of the data sets that we will use, and Section 4 contains the actual exercises. The last section (5) contains a demo of the graph bisection problem and the traveling salesman problem.

1 Self-organizing networks

1.1 Competitive learning

A competitive network can be used to cluster data, i.e. to replace a set of input vectors by a fewer

set of reference vectors. Figure 1 shows a competitive network with N inputs and K outputs. A

y1

y

. . . . . .

. . . . . .

yK

Wij

11

00

x1

0110 11

00

00

11

11

00

. . . . . .

x

0110 00

11

00

11

2

xN

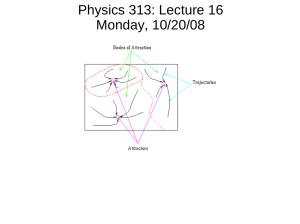

Figure 1: A competitive network.

competitive network is defining a winning node j ∗ . Matlab’s implementation is

j ∗ = arg max(−||x − ω j ||)

(1)

j

The “activation” function for the output nodes is

$$ y_j = \begin{cases} 1 & \text{if } j \text{ is the winning node} \\ 0 & \text{otherwise} \end{cases} $$

The winning weight vector is updated according to the following equation:

$$ w_{j^*}(t+1) = w_{j^*}(t) + \eta \left( x(n) - w_{j^*}(t) \right) $$

In order to avoid “dead” neurons, i.e. neurons that never win and hence never get updated, Matlab implements something called the bias learning rule. A positive bias is added to the negative distance in Eqn. 1, making a distant neuron more likely to win. The biases are updated so that the biases of frequently active neurons become smaller and the biases of infrequently active neurons become larger. Eventually a dead neuron will win and move towards the input vectors. The biases have their own learning rate, which should be much smaller than the ordinary learning rate.
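The winner selection of Eqn. 1, the winner-take-all update and the bias idea can be sketched in plain Python. This is not Matlab’s implementation. Several choices are assumptions made only for this sketch: the bias rule (a running win frequency $f_j$ per neuron and a bias $b_j = \gamma(1/K - f_j)$), the parameter names `beta` and `gamma`, and starting all weight vectors at the data mean.

```python
import math
import random


def dist(a, b):
    """Euclidean distance ||a - b|| between two equal-length vectors."""
    return math.sqrt(sum((ai - bi) ** 2 for ai, bi in zip(a, b)))


def winner(x, weights, biases):
    """Return j* = arg max_j (-||x - w_j|| + b_j); ties go to the lowest index."""
    scores = [-dist(x, w) + b for w, b in zip(weights, biases)]
    return max(range(len(weights)), key=lambda j: scores[j])


def train_competitive(data, k, eta=0.1, beta=0.01, gamma=1.0, epochs=20, seed=0):
    """Winner-take-all training with a simple, hypothetical bias rule.

    freq[j] is a running estimate of how often neuron j wins; the bias
    gamma * (1/k - freq[j]) is positive for rarely winning neurons and
    negative for frequent winners, so "dead" neurons eventually win.
    """
    rng = random.Random(seed)
    n = len(data)
    mean = [sum(col) / n for col in zip(*data)]
    weights = [list(mean) for _ in range(k)]  # assumption: start at the data mean
    freq = [1.0 / k] * k
    biases = [0.0] * k
    for _ in range(epochs):
        order = list(data)
        rng.shuffle(order)
        for x in order:
            j = winner(x, weights, biases)
            # Only the winner moves: w_j* <- w_j* + eta * (x - w_j*)
            weights[j] = [w + eta * (xi - w) for w, xi in zip(weights[j], x)]
            # Bias learning rate beta is kept much smaller than eta
            freq = [(1 - beta) * f + beta * (1.0 if i == j else 0.0)
                    for i, f in enumerate(freq)]
            biases = [gamma * (1.0 / k - f) for f in freq]
    return weights
```

With `beta=0` the biases stay at zero, so only the distance in Eqn. 1 decides the winner.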

1.2

Kohonen’s self-organizing feature map (SOFM)

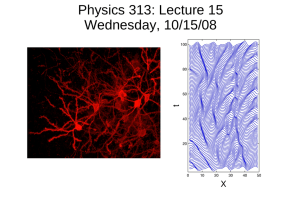

Figure 2 shows a SOFM where a 2-dimensional input is mapped onto a 2-dimensional discrete

output.

The weights are updated according to the following equation:

$$ w_j(t+1) = w_j(t) + \eta \, \Lambda_{jj^*} \left( x(n) - w_j(t) \right) $$

where $j^*$ is the winning node for input $x(n)$. The neighborhood function $\Lambda_{jj^*}$ that is implemented in Matlab’s toolbox is defined as

$$ \Lambda_{jj^*} = \begin{cases} 1 & \text{if } d_{jj^*} \le d_0 \\ 0 & \text{if } d_{jj^*} > d_0 \end{cases} \qquad (2) $$


Figure 2: A self-organizing feature map with 2 inputs and a 6x10 square grid output.

where $d_{jj^*}$ is the distance between neurons $j$ and $j^*$ on the grid. All neurons within distance $d_0$ of the winner are therefore updated. Matlab has a few different distance functions (dist, linkdist, boxdist and mandist). We will use the default, linkdist, which counts the number of links between two nodes.
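The online update with the step neighborhood of Eqn. 2 can be sketched in plain Python. This is an illustrative sketch, not the toolbox code. It assumes a square grid in which each node is linked to its 4 nearest neighbours, so that the number of links between two nodes equals their Manhattan distance on the grid; other topologies give other link counts. The function names are hypothetical.

```python
def link_distance(p, q):
    """Number of links between grid nodes p and q on a square grid where each
    node is linked to its 4 nearest neighbours (= Manhattan distance)."""
    return abs(p[0] - q[0]) + abs(p[1] - q[1])


def sofm_step(x, weights, positions, eta, d0):
    """One online SOFM update; modifies weights in place and returns j*.

    positions[j] is the (row, col) grid position of output node j.
    """
    sq = [sum((xi - wi) ** 2 for xi, wi in zip(x, w)) for w in weights]
    win = min(range(len(weights)), key=lambda j: sq[j])
    for j, w in enumerate(weights):
        # Lambda_{jj*} = 1 inside the neighbourhood (Eqn. 2), 0 outside
        if link_distance(positions[j], positions[win]) <= d0:
            weights[j] = [wi + eta * (xi - wi) for wi, xi in zip(w, x)]
    return win
```

For the 6x10 grid of Figure 2, the positions could be built as `[(r, c) for r in range(6) for c in range(10)]`.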

The default learning in Matlab for SOFM is batch mode (learnsomb). It is divided into two phases, an ordering phase and a tuning phase. During ordering, which lasts for a number of epochs and starts with an initial neighborhood size, the neurons are expected to order themselves in input space with the same topology in which they are ordered physically on the grid. In the tuning phase smaller weight corrections are expected, and the neighborhood size is also decreased.
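Batch training replaces the sample-by-sample update with one update per epoch. A common form of the batch SOFM rule sets each weight vector to the mean of the inputs whose winner lies within distance $d_0$ of that node. The sketch below uses the step neighborhood of Eqn. 2 and assumes a square grid with 4-neighbour links. It shows the general technique only: the exact details of learnsomb (for example, its neighborhood schedule) are not reproduced, and the function name is hypothetical.

```python
def batch_sofm_epoch(data, weights, positions, d0):
    """One batch epoch with the step neighbourhood of Eqn. 2.

    Each w_j becomes the mean of all inputs whose winner j* satisfies
    d_{jj*} <= d0 (Manhattan link distance on a square grid, an assumption);
    a node with no such inputs keeps its old weights.
    """
    k, dim = len(weights), len(weights[0])
    sums = [[0.0] * dim for _ in range(k)]
    counts = [0] * k
    for x in data:
        sq = [sum((xi - wi) ** 2 for xi, wi in zip(x, w)) for w in weights]
        win = min(range(k), key=lambda j: sq[j])
        for j in range(k):
            pj, pw = positions[j], positions[win]
            if abs(pj[0] - pw[0]) + abs(pj[1] - pw[1]) <= d0:
                counts[j] += 1
                sums[j] = [s + xi for s, xi in zip(sums[j], x)]
    return [[s / counts[j] for s in sums[j]] if counts[j] else list(weights[j])
            for j in range(k)]
```

A full training run would call this repeatedly while reducing `d0`. A large `d0` pulls all nodes towards the overall data mean, which is what lets the grid order itself; a small `d0` lets each node settle on its own part of the data.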

1.3 Learning vector quantization

The previous methods are unsupervised, meaning that the user has no preconceived idea of which data points belong to which cluster. If the user supplies a target class for each data point and the network uses this information during training, we have supervised learning. Kohonen (1989) suggested a supervised version of the clustering algorithm for competitive networks called learning vector quantization (LVQ)¹. There are usually more output neurons than classes, which means that several output neurons can belong to the same class. The update rule for the LVQ weights is

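$$ w_{j^*} \rightarrow \begin{cases} w_{j^*} + \eta \left( x(n) - w_{j^*} \right) & \text{if } j^* \text{ and } x(n) \text{ belong to the same class} \\ w_{j^*} - \eta \left( x(n) - w_{j^*} \right) & \text{if } j^* \text{ and } x(n) \text{ belong to different classes} \end{cases} \qquad (3) $$

where $j^*$ is the winning node for input $x(n)$.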

Note! In Matlab’s toolbox an LVQ network is implemented using 2 layers of neurons: first a competitive layer that does the clustering, and then a linear layer for the supervised task (e.g. classification). From a practical point of view this does not change the functionality of the LVQ network, and we can assume it consists of only one layer of neurons.
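Treating the LVQ network as a single layer, as the note above allows, the update rule in Eqn. 3 can be sketched in plain Python. The names `lvq1_step` and `lvq_classify` are hypothetical. The winner is taken as the nearest weight vector, which is equivalent to Eqn. 1 without biases.

```python
def nearest(x, weights):
    """Index of the weight vector closest to x (squared Euclidean distance)."""
    sq = [sum((xi - wi) ** 2 for xi, wi in zip(x, w)) for w in weights]
    return min(range(len(weights)), key=lambda j: sq[j])


def lvq1_step(x, label, weights, classes, eta):
    """One LVQ update (Eqn. 3); modifies weights in place and returns j*.

    classes[j] is the class assigned to output neuron j; several neurons
    may share the same class.
    """
    j = nearest(x, weights)
    sign = 1.0 if classes[j] == label else -1.0  # attract if same class, else repel
    weights[j] = [w + sign * eta * (xi - w) for w, xi in zip(weights[j], x)]
    return j


def lvq_classify(x, weights, classes):
    """Predicted class = class of the winning neuron."""
    return classes[nearest(x, weights)]
```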

¹ Clustering is also called vector quantization.

2 Feedback networks

Matlab implements a few different architectures suitable for time series analysis. One can, for example, choose between time delay networks², FIR networks³ and Elman-type networks⁴. We will also use Hopfield models as associative memories.

2.1 Elman networks (Matlab version)

Elman networks have feedback from the hidden layer to a new set of inputs called context units. The feedback connection is fixed but can have several time delays associated with it. The outputs of the context units are therefore copies of the hidden-node outputs from previous time steps. Figure 3 shows an Elman network with one hidden layer and two time lags on the feedback.

Figure 3: An Elman network with one hidden layer and two time-lags.

Note 1: Training an Elman network on the sunspot time series requires only one input node and one output node. The network itself can find suitable dependencies (time lags) using the context units.
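A forward pass through the Elman network described above can be sketched in plain Python. The sketch assumes a single scalar input (as in Note 1), tanh hidden units, a linear output and context units initialised to zero. Each delay d has its own context-to-hidden weight matrix, while the copy from the hidden layer to the context units is fixed. Training, which the toolbox handles, is not shown, and all names are hypothetical.

```python
import math


def elman_forward(xs, w_in, w_ctx, b_h, w_out, b_out):
    """Run an Elman network over the scalar sequence xs and return the outputs.

    w_in  : list of H input-to-hidden weights
    w_ctx : one H x H matrix per delay d = 1..D; w_ctx[d-1][i][k]
            weights the copied hidden output h_k(t - d) into hidden node i
    b_h   : list of H hidden biases
    w_out : list of H hidden-to-output weights
    b_out : output bias
    """
    n_hidden, n_delays = len(w_in), len(w_ctx)
    # context[d-1] holds the copy h(t - d); zero before the sequence starts
    context = [[0.0] * n_hidden for _ in range(n_delays)]
    ys = []
    for x in xs:
        h = []
        for i in range(n_hidden):
            a = w_in[i] * x + b_h[i]
            for d in range(n_delays):
                a += sum(w_ctx[d][i][k] * context[d][k] for k in range(n_hidden))
            h.append(math.tanh(a))
        # Fixed feedback: shift the stored copies one time step back
        context = ([h] + context[:-1]) if n_delays else []
        ys.append(sum(wo * hk for wo, hk in zip(w_out, h)) + b_out)
    return ys
```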

2.2 The Hopfield network

The Hopfield network consists of a fully recurrent network of N neurons $s = (s_1, \dots, s_N)$ with $s_i \in \{-1, 1\}$ (see Figure 4). Every neuron is connected to all other neurons with symmetric connections $w_{ij} = w_{ji}$, and $w_{ii} = 0$. The neurons are updated asynchronously, using the sign of the incoming signal

$$ v_i = \sum_{j=1}^{N} w_{ij} s_j $$

according to

$$ s_i = \begin{cases} \operatorname{sgn}(v_i) & \text{if } v_i \neq 0 \\ s_i & \text{if } v_i = 0 \end{cases} \qquad (4) $$

The training (i.e. determination of the weights) is very fast for the Hopfield network. The weights are given by

$$ w_{ij} = \frac{1}{N} \sum_{\mu=1}^{P} \xi_i^{\mu} \xi_j^{\mu} \quad (i \neq j), \qquad w_{ii} = 0 $$

for P patterns stored in the vectors $\xi^{\mu}$.

Figure 4: A Hopfield network with 4 neurons.

² called focused time delay networks in Matlab
³ called distributed time delay networks in Matlab
⁴ called layer-recurrent networks in Matlab

Note! Matlab has its own Hopfield model⁵, which is created using newhop. This model is better at avoiding spurious states than the original model.
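The original Hopfield model (the weights above and asynchronous updates by Eqn. 4), not Matlab’s newhop variant, can be sketched in plain Python. Two choices are made only for this sketch: neurons are visited in a random order in each sweep, and recall stops after a sweep with no changes. The names are hypothetical.

```python
import random


def hebb_weights(patterns):
    """w_ij = (1/N) sum_mu xi_i^mu xi_j^mu for i != j, and w_ii = 0."""
    n = len(patterns[0])
    w = [[0.0] * n for _ in range(n)]
    for i in range(n):
        for j in range(n):
            if i != j:
                w[i][j] = sum(p[i] * p[j] for p in patterns) / n
    return w


def recall(w, s, max_sweeps=100, seed=0):
    """Asynchronous updates by Eqn. 4, in random order, until no neuron changes."""
    rng = random.Random(seed)
    s = list(s)
    n = len(s)
    for _ in range(max_sweeps):
        changed = False
        order = list(range(n))
        rng.shuffle(order)
        for i in order:
            v = sum(w[i][j] * s[j] for j in range(n))
            if v != 0:  # if v_i == 0 the neuron keeps its state
                new = 1 if v > 0 else -1
                if new != s[i]:
                    s[i] = new
                    changed = True
        if not changed:  # a full sweep without changes: a fixed point
            break
    return s
```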

3 Data sets used in this exercise

The data sets used in this exercise are (as in Exercise I) both artificial and real-world data. Some of the data from Exercise I will be reused, namely sunspot, wood and some of the synthetic classification data. As in Exercise I, we will use Matlab’s own naming for the matrix P that stores the inputs to the neural network. If you have 3 input variables and 200 data points, P will be a 3x200 matrix (the variables are stored row-wise). The targets (if there are any) are stored row-wise in T (1x200 for one output and 200 data points). The files are available from http://www.thep.lu.se/~mattias/teaching/fytn06/.

3.1 Synthetic cluster data

To demonstrate the competitive network’s ability to cluster data, a 2-dimensional data set consisting of 6 Gaussian distributions with small widths will be used. You can use the function loadclust1 to get the data and Matlab’s plot function to visualize it. For example,

>> [P,T] = loadclust1(200);
>> plot(P(1,:),P(2,:),'r*');

⁵ This model is an implementation of the algorithm described in Li, J., A. N. Michel, and W. Porod, “Analysis and synthesis of a class of neural networks: linear systems operating on a closed hypercube”, IEEE Transactions on Circuits and Systems, vol. 36, no. 11, pp. 1405-1422, 1989.

3.2 The face ORL data

With the function loadfacesORL you will get 80 images of 40 people (2 images of each person). The images are taken from AT&T Laboratories⁶ and are stored in the FaceORL directory. Each face is a gray-scale image with an original size of 112 x 92. loadfacesORL has an option that makes the images smaller and perhaps more manageable for the networks that will use these data. For example,

[P,ims] = loadfacesORL(0.5);

will load the images rescaled to a size of 56 x 46. You can then view one of the images with viewfaceORL, e.g.

viewfaceORL(P(:,45),ims);

3.3 The letter data

This data set will be used in connection with the Hopfield model. It consists of the first 26 uppercase letters, coded as 7 x 5 (black and white) images. Use loadletters or loadlett to load all of them or a single one, respectively. You can look at them using viewlett. Example,

P = loadletters(26); viewlett(P(:,11),'Letter K');

4 Exercises

4.1 Competitive networks for clustering

In this exercise you will use a competitive network to do clustering. We will start with a synthetic data set and later on use the faces from ORL.

2-dimensional data. You can use the function syn_comp in the next 3 exercises.

• Exercise 1: Use 100-200 data points from the synthetic data set, 6 output neurons and default values for the learning parameters. Does it work?

• Exercise 2: Use 100-200 data points from the synthetic data set and 6 output neurons. Try different settings of the learning parameters and see what happens. In particular, set the bias learning rate to zero and see if “dead neurons” appear.

• Exercise 3: Use 100-200 data points from the synthetic data set. Use more than 6 output neurons (e.g. 10) and run the network. What happens with the superfluous neurons (weight vectors)?

ORL face data. In the next exercises we will try to cluster the faces from ORL. In the previous exercises the network had 2 inputs, corresponding to the 2 coordinates of the data. How are we going to present the faces to the competitive network? We will do the simplest thing and let each pixel value in the image represent one input. This means that a 112 x 92 image will be represented by an input vector of length 10304 (112 · 92 = 10304).

For the next 2 exercises you should modify syn_comp to deal with the ORL face data. In principle you should only have to replace the loadclust1 function with the loadfacesORL function, comment out the plotting part and possibly increase the def_ntrain parameter. It can also be a good idea to normalize the input data. To view the result you can use the showfacecluster function (for details, see >> help showfacecluster).
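Presenting an image to the network as one input per pixel can be sketched in plain Python. The text does not specify two details, so the sketch assumes them. First, the pixels are read column by column, as Matlab’s `img(:)` does. Second, “normalize” means scaling each input vector to unit Euclidean length; subtracting the mean or scaling to [0, 1] are other options. The helper names are hypothetical.

```python
import math


def image_to_vector(img):
    """Flatten a rows x cols image (list of rows) column by column,
    matching the column-major order of Matlab's img(:)."""
    rows, cols = len(img), len(img[0])
    return [img[r][c] for c in range(cols) for r in range(rows)]


def normalize(v):
    """Scale v to unit Euclidean length (one possible normalization)."""
    norm = math.sqrt(sum(x * x for x in v))
    return [x / norm for x in v] if norm > 0 else list(v)
```

A 112 x 92 face then becomes a vector of length 10304, as stated above.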

⁶ http://www.cl.cam.ac.uk/research/dtg/attarchive/facedatabase.html

• Exercise 4: Train a competitive network with 5-10 outputs on the ORL faces. You may have to increase the number of epochs and/or lower the learning rate in order to reach convergence. (Hint: look at the mean change of the weight locations.) View the different clusters using showfacecluster (e.g. showfacecluster(net, 'comp', P, ims, 1) to view all faces that have output node 1 as the winner). Does the network cluster the faces into “natural” clusters?

• Exercise 5: Repeatability! Does the result change a lot if you repeat Exercise 4 a couple of times?

• Not required, only for the fun of it: The weight vectors in the competitive network represent the cluster centers and can therefore be interpreted as the mean face of each cluster. Modify showfacecluster so that it can show these “cluster faces”.
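Two small helpers related to the exercises above are sketched here in plain Python, with hypothetical names. The first is one possible reading of the hint in Exercise 4: it gives the average distance the weight vectors move between two snapshots, which should approach zero as training converges. The second reverses column-major flattening, so that a weight vector can be displayed as a “cluster face”. It assumes the pixels were flattened column by column.

```python
import math


def mean_weight_change(old, new):
    """Average Euclidean distance moved by the weight vectors between two
    snapshots (e.g. before and after an epoch)."""
    moves = [math.sqrt(sum((a - b) ** 2 for a, b in zip(wo, wn)))
             for wo, wn in zip(old, new)]
    return sum(moves) / len(moves)


def vector_to_image(v, rows, cols):
    """Rebuild a rows x cols image (list of rows) from a column-major vector."""
    if len(v) != rows * cols:
        raise ValueError("vector length must equal rows * cols")
    return [[v[c * rows + r] for c in range(cols)] for r in range(rows)]
```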

4.2 Self-organizing feature map

Now we are going to use Kohonen’s self-organizing feature map (SOFM) to cluster both the synthetic data and the ORL face data. The function syn_sofm will be used in Exercise 6. For Exercises 7-8 you should modify it so that it can work with the ORL face data.

• Exercise 6: Run syn_sofm to approximate the synthetic (6 Gaussians) cluster data. List at least 2 properties of the SOFM algorithm and relate these properties to the figure showing the result for the synthetic data.

• Exercise 7: Modify the syn_sofm function to deal with the ORL faces and train a [3 x 3] SOFM on these face data. Use showfacecluster to view the result. Note! The SOFM can take some time to train, so it can be a good idea to work with smaller faces (e.g. scale factor 0.5). Looking at the clusters that the SOFM creates, are they better or worse than the clusters created by the (simple) competitive networks above?

• Exercise 8: Can you confirm the property of the SOFM which states that output nodes far apart from each other correspond to different features of the input space?

4.3 LVQ

In this section we will use the LVQ classifier on the same problems as in Exercise I. You can use syn_lvq for the synthetic problems and modify it to handle the liver data. You may have to tune the learning rate in order to get a good result. You can use the boundaryLVQ function to plot the decision boundary implemented by the LVQ network.

• Exercise 9: Use 100 data points from synthetic data set 3 and 5-10 outputs. Optimize your network with respect to the validation set. What number of outputs gave the best result, and why do you need this many neurons to do a good job? How does the result compare to the MLP networks of Exercise I?

4.4 Time-delay networks

Now you are going to test the Elman network, called a layer-recurrent network in Matlab, for time series prediction. To compare with previous models we are going to use the sunspot data as our prediction task. You can use the function sunspot_lrn for these exercises.

• Exercise 10: Use an Elman network with 1-5 hidden neurons and a feedback delay of 1. Train a couple of times with different numbers of hidden neurons. How does the performance compare to the MLPs you trained in Exercise I?

• Exercise 11: Experiment with the “delay feedback” parameter. Can you find an optimal value?

• Not required: Use time-delay networks (timedelaynet) or FIR networks (distdelaynet) for the sunspot data. Comments?

4.5 Hopfield networks

In this exercise we are going to use the Hopfield model as an error-correcting associative memory. The images we are going to store in the memory are the first 26 uppercase letters, coded as 7 x 5 black and white images. You can use the lett_hopf function for these exercises. It lets the user store the first k letters of the alphabet; e.g. for k = 5 the letters 'A' through 'E' are stored. This function calls either Matlab’s own Hopfield model or the functions newhop2 and simhop, which implement and simulate the original Hopfield model (as you know it). For the first 2 exercises you should use the original Hopfield model.

• Exercise 12: Store the first 5 letters in a Hopfield network. What is the capacity of this network (if we assume random patterns)? Initialize the network with a letter without any distortion. Are the stored letters stable? Why / why not? Now initialize the network with a noisy letter. How good is the ability to retrieve distorted letters?

• Exercise 13: Store 10 letters in the Hopfield model. How many stable letters are there this time? Why the difference in performance compared with the last exercise?

• Exercise 14: Store 10 letters in Matlab’s Hopfield model. Are the 10 letters stable? How good is the retrieval ability?

5 Combinatorial optimization (DEMO)

Note! This section is optional; there are no questions to answer. It is provided in order to demonstrate the Hopfield model as a “solver” for the graph bisection problem and to look at the traveling salesman problem (TSP).

To test the graph bisection problem change to the GB directory and run GBRun.

To test the TSP problem change to the TSP directory and run TspRun.
