Contents

Introduction

1 Background and objectives to the study

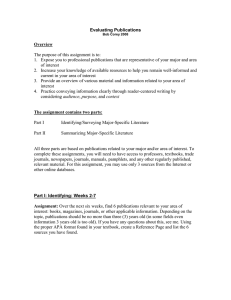
