DESMET: A method for evaluating Software Engineering methods

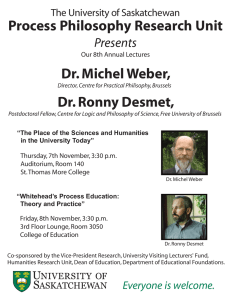
