Three-Dimensional Object Recognition from Single Two

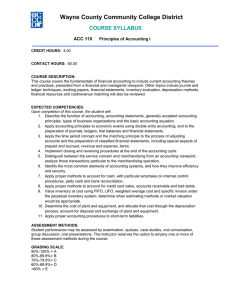
