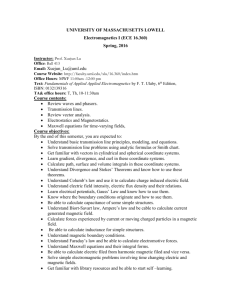

Electromagnetism - damtp - University of Cambridge
