Learning in a partially hard-wired recurrent network

BEBR Faculty Working Paper No. 91-0114

College of Commerce and Business Administration

Bureau of Economic and Business Research

University of Illinois at Urbana-Champaign

February 1991

C.-M. Kuan

Department of Economics

University of Illinois at Urbana-Champaign

and

K. Hornik

Institut für Statistik und Wahrscheinlichkeitstheorie

Technische Universität Wien, Vienna, Austria

Digitized by the Internet Archive in 2011 with funding from University of Illinois Urbana-Champaign

http://www.archive.org/details/learninginpartia114kuan

Abstract

In this paper we propose a partially hard-wired Elman network. A distinct feature of our approach is that only minor modifications of existing on-line and off-line learning algorithms are necessary in order to implement the proposed network. This allows researchers to adapt easily to trainable recurrent networks. Given this network architecture, we show that in a general dynamic environment the standard back-propagation estimates for the learnable connection weights can converge to a mean squared error minimizer with probability one and are asymptotically normally distributed.

1 Introduction

Neural network models have been successfully applied in a wide variety of disciplines. Typically, applications of networks with at least partially modifiable interconnection strengths are based on the so-called multilayer feedforward architecture, in which all signals are transmitted in one direction without feedbacks. In a dynamic context, however, a feedforward network may have difficulties in representing certain sequential behavior when its inputs are not sufficient to characterize temporal features of target sequences (Jordan, 1985). From the cognitive point of view, a feedforward network can perform only passive cognition, in that its outputs cannot be adjusted by an internal mechanism when static inputs are present (Norrod, O'Neill, & Gat, 1987). These deficiencies thus restrict the applicability of feedforward neural network models in dynamic environments.

In view of these problems, researchers have recently been studying recurrent networks, i.e., networks with feedback connections, see e.g., Jordan (1986), Elman (1988), Williams & Zipser (1988), and Kuan (1989). In a recurrent network, recurrent variables compactly summarize the past information and, together with other input variables, jointly determine the network outputs. Because recurrent variables are generated by the network, they are functions of the network connection weights. Owing to this parameter dependence, the standard back-propagation (BP) algorithm for feedforward networks cannot be applied because it fails to take the correct gradient search direction (cf. Rumelhart, Hinton, & Williams, 1986). Kuan, Hornik, & White (1990) propose a recurrent BP algorithm generalizing the standard BP algorithm to various recurrent networks. However, this algorithm has quite complex updating equations and restrictions, and therefore cannot be used straightforwardly by recurrent network practitioners.

In this paper we suggest an easier way to implement recurrent networks. We focus on a variant of the Elman (1988) network, in which only a subset of hidden unit activations serve as recurrent variables. We propose to hard-wire the connections between the recurrent units and their inputs. This approach has the following advantages. First, the resulting network avoids the aforementioned problem of parameter dependence. Second, the necessary constraints on recurrent connections suggested by Kuan, Hornik, & White (1990) can easily be imposed by hard-wiring. Third, off-line learning is made possible for the proposed network. Consequently, only minor modifications of existing on-line and off-line learning algorithms are needed. This is very convenient for neural network practitioners. Given this hard-wired network, we show that in general dynamic environments the resulting BP estimates converge to a mean squared error minimizer with probability one and are asymptotically normally distributed. Our convergence results extend the results of Kuan, Hornik, & White (1990) for general recurrent networks and are analogous to the results of Kuan & White (1990) for feedforward networks.

This paper proceeds as follows. In section 2 we briefly review recurrent networks. In section 3 we discuss a variant of the Elman network and its learning algorithms. We establish strong consistency and asymptotic normality of the learning estimates in section 4. Section 5 concludes the paper. Proofs are deferred to the appendix.

2 Recurrent Networks

A three layer recurrent network with $k$ input units, $l$ hidden units with common activation function $\psi$, and $m$ output units with common activation function $\phi$ can be written in the following generic form:

$$o_t = \Phi(W a_t + v),$$

$$a_t = \Psi(C x_t + D r_t + b),$$

$$r_t = G(x_{t-1}, r_{t-1}, \theta),$$

where the subscript $t$ indexes time, $x_t$ is the $k \times 1$ vector of network inputs, $a_t$ is the $l \times 1$ vector of hidden unit activations, $o_t$ is the $m \times 1$ vector of network outputs, $\Phi$ and $\Psi$ compactly denote the unitwise activation rules in the output respectively hidden layer, and $r_t$ is the $n \times 1$ vector of recurrent variables which is computed through some generic function $G$ from the previous input $x_{t-1}$, the previous recurrent variable $r_{t-1}$, and $\theta = [\mathrm{vec}(C)', \mathrm{vec}(D)', \mathrm{vec}(W)', b', v']'$, the vector of all network connection weights. (In what follows, $'$ denotes transpose, the vec operator stacks the columns of a matrix one underneath the other, and $|v|$ is the euclidean length of a vector $v$.) More compactly, the above network can be written as

$$o_t = \Phi(W \Psi(C x_t + D r_t + b) + v) =: F(x_t, r_t, \theta), \tag{1}$$

$$r_t = G(x_{t-1}, r_{t-1}, \theta). \tag{2}$$

That is, the network output $o_t$ is jointly determined by the external inputs $x_t$ and the recurrent variables $r_t$. Clearly, different choices of $G$ yield different recurrent networks. When $r_t = o_{t-1}$ (output feedback),

$$r_t = G(x_{t-1}, r_{t-1}, \theta) = \Phi(W \Psi(C x_{t-1} + D r_{t-1} + b) + v),$$

and we obtain the Jordan (1986) network. When $r_t = a_{t-1}$ (hidden unit activation feedbacks),

$$r_t = G(x_{t-1}, r_{t-1}, \theta) = \Psi(C x_{t-1} + D r_{t-1} + b),$$

and we have the Elman (1988) network.

By recursive substitution, (2) becomes

$$r_t = G(x_{t-1}, r_{t-1}, \theta) = G(x_{t-1}, G(x_{t-2}, r_{t-2}, \theta), \theta) = \cdots =: \mathcal{G}(x^{t-1}, \theta),$$

where $x^{t-1} = (x_{t-1}, x_{t-2}, \ldots, x_0)$ is the collection of past inputs. Hence, $r_t$ is a complex nonlinear function of $\theta$ and the entire past of $x_t$. In contrast with external input $x_t$, we may interpret $r_t$ as "internal" input, in the sense that it is generated by the network. Given a recurrent network, the standard BP algorithm for feedforward networks does not perform correct gradient search over the parameter space because it fails to take the dependence of $r_t$ on the learnable network weights into account. Consequently, meaningful convergence cannot be guaranteed (Kuan, 1989).

Kuan, Hornik, & White (1990) propose a recurrent BP algorithm which, by carefully calculating the correct gradients and including additional derivative updating equations, maintains the desired gradient search property. To ensure proper convergence behavior, their results also suggest some restrictions on the network connection weights. That is, parameter estimates are projected into some "stability" region whenever they violate the imposed constraints. Thus, much more effort is needed in programming appropriate learning algorithms for recurrent networks. Moreover, some of their conditions to ensure convergence of the recurrent BP algorithm are rather stringent.
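The parameter dependence described in this section can be made concrete with a minimal sketch. It assumes scalar inputs, a single hidden unit with a tanh squasher, and illustrative weight values; the function name `elman_states` and all numbers are hypothetical and not taken from the paper.

```python
import math

def elman_states(xs, c, d, b, r0=0.0):
    """Iterate the Elman recursion r_t = tanh(c * x_{t-1} + d * r_{t-1} + b).

    Returns [r_0, r_1, ..., r_T] for the scalar input sequence xs.
    """
    r = r0
    states = [r0]
    for x in xs:
        r = math.tanh(c * x + d * r + b)
        states.append(r)
    return states

# Same inputs, two different values of the input weight c (illustrative).
xs = [1.0, -0.5, 0.25]
states_a = elman_states(xs, c=1.0, d=0.5, b=0.0)
states_b = elman_states(xs, c=0.5, d=0.5, b=0.0)

# The internal inputs differ although the external inputs are identical:
# r_t is a function of the weights as well as of the whole input history.
print(states_a[-1], states_b[-1])
```

If `c`, `d` and `b` were learnable, a gradient step that treated `states_a` as fixed data would ignore this dependence, which is the difficulty the section points out.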

J

A

3

Partially Hard- Wired

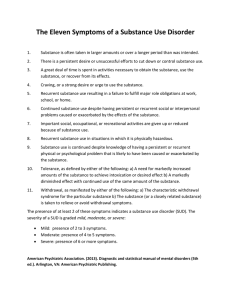

In this section

we suggest an

(1988) network. As

learning algorithms

easier

way

we have discussed

is

to

Elman Network

implement a variant of the Elman

in section 2,

improper convergence of the

mainly due to the dependence of the internal inputs

the modifiable network parameters.

modify the Elman network as

is

To circumvent

depicted in figure

this

rt

on

problem, we propose to

1.

outputs

fr

feedforward hidden units

feedback hidden units

inputs

previous feedback

unit activations

I

Figure

1.

The proposed

partially hard-wired recurrent network.

hard- wired connections are represented by

The hidden

I

—

1/

units,

J)

—

respectively =>.

units are partitioned into two groups containing

and only the units

Intuitively, the units in the first

in

Modifiable and

If

respectively

lr

=

the second group serve as recurrent units.

group play the standard

role in artificial neural

networks, whereas the task of the recurrent units

to "index" information

is

on

Furthermore, the connections between the recurrent units and

previous inputs.

their inputs are hard-wired.

Hence, a

is

partitioned as a

=

[a.,a'r ]',

where a/

the

is

vations of the (purely feedforward) hidden units in the

x

lr

1

first

If

x

1

vector of acti-

group, and a r

is

the

vector of activations of the feedback (recurrent) hidden units in the second

group such that

the connection matrices

If

C

and

rt

=

D

and the bias vector

a r>t -i.

6 are partitioned

com-

formably as

'D/

Cf'

C=

D=

c rj

'h

=

b

Jr

.A-.

then

C

where now

D

r

and

6r

r

,

D

r

a J,t

=

*(CfXt

a r ,t

-

W(Cr Xt +

and

b~ r

+ Dfa r ,t-i +

DrUr.t-l

b

f

)

+ br),

are fixed due to hard-wiring.

Different choices of

Cr

,

determine how the past information should be represented, hence they

are problem-dependent

and should be

left to

Hence, writing the proposed network

ot

in

= $(WV(Cx + Da rit - +

t

l

researchers.

a nonlinear functional form,

b)

+

v)

we have

=: F(x t ,a r t -i,0.)

(3)

= G{x

(4)

,

and

rt

=

<Jr,t-i

= ^(C-Zt-i +

L> r ar,t-2

+

br

)

t

-i,a rit -2,0),

where now

6

=

[vec(Wy, vec(C/)', vec(L> / )

/

,

b'

f

,

v']'

»

is

the p x

m(l

+

l)

1

+

vector which contains

+

lj(k

+

lr

1),

the learnable network weights, where p :=

all

and

/

^=[vec(C r ) ,vec(D r )

contains

the hard-wired weights.

= G{x

rt

cf.

all

equation

t

-

rt _

U

hard-wired weights

Because

r

is

t

t

rt

—

a r( _i

not a function of the learnable weights

respect to the learnable weigths

t,

BP

the

algorithm

t

where

rj t

is

is

thus avoided.

practice

it is

F

1

,*),

t

and the

+i

=9\ +

the aforementioned

9,

follows that the standard

It

applicable to the proposed network with

is

Letting y denote the target pattern presented

9.

t

is

Tlt

V

9

F(x t]

M -F(x

r ,9 )(i

t

t

learning rate employed at time

derivatives of

=: ^(z*"

=

-2,6) ,6)

a function of the entire past of x

algorithm for feedforward networks

at time

t

becomes

9.

problem of parameter dependence

BP

is

,6 r ]

recursive substitution, (4)

u ~9) = G(xt-i,G(x - 2 ,r

Thus,

(2).

By

/

/

/

t

necessary to keep the

BP

estimates

9.

in

,r t ,$t )),

VqF

and

with respect to the components of

t

is

(5)

the matrix of partial

However,

in

both theory and

some compact subset

of IRP

,

thus preventing the entries from becoming extremely large. This, being a typical

requirement

in the

Kuan & White

convergence analysis of the

(1990) and Kuan, Hornik,

BP

& White

type of algorithms, see

(1990), can,

if

e.g.,

not automatically

guaranteed by the algorithm, be accomplished by applying a projection operator

t which maps

M

p

onto

to the

BP

estimates.

Usually, a truncation device

convenient for this purpose. This requirement entails

inactive

when very

large trunaction

little loss

bounds are imposed.

because

it is

is

usually

we only have

In light of (5),

to

BP

modify the existing

Furthermore,

incorporate the internal inputs a r into the algorithm.

training data set

off-line learning

given, the internal inputs a rt can be calculated

is

methods such

0.

recurrent networks quite easily.

It is

then interesting to

know

Let

{V

t

the properties of the

and

(3)

(4).

This

is

BP

Algorithm

be some sequence of random variables defined on a probability space

}

(fi,^7 P),

,

T\ be the

cr-algebra generated by

Vr VT +i,

,

.

.

.

,

Vj,

and

let

{Z

t

}

sequence of square integrable random variables on that probability space.

write

in

Z, 2

E

l

t

the

turn.

Asymptotic Properties of the

4

and

first,

These advantages allow researchers to adapt to

algorithm (5) applied to the proposed network given by

we now

a fixed

if

as nonlinear least squares can then be applied to

estimate the learnable weights

topic to which

algorithm slightly to

tm(Zt)

(P),

f° r

i.e., ||Z||

^le

=

conditional expectation

(^IZI

2

1

E{Zt\T\-m) and

•

||

||

for the

be a

We

norm

/2

.

)

Definition 4.1. Let

$$\nu_m := \sup_t \|Z_t - E_{t-m}^{t+m}(Z_t)\|.$$

Then $\{Z_t\}$ is near epoch dependent (NED) on $\{V_t\}$ of size $-a$ if for some $\lambda < -a$, $\nu_m = O(m^\lambda)$ as $m \to \infty$.

This definition conveys the idea that a random variable depends essentially on the information generated by "more or less current" $V_t$ and does not depend too much on the information contained in the distant future or past. The larger the magnitude of the size of $\nu_m$, the faster the dependence of the remote information dies out. More details on near epoch dependence can be found in Billingsley (1968), McLeish (1975), and Gallant & White (1988).
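As a worked illustration of the definition (an example added here under simplifying assumptions, not taken from the paper), suppose $\{V_t\}$ is i.i.d. with mean zero and unit variance, and let $Z_t$ be the linear filter below with a hypothetical coefficient $|\rho| < 1$. Conditioning on $V_{t-m}, \ldots, V_{t+m}$ keeps the first $m + 1$ terms and averages out the rest, so the NED coefficients can be computed in closed form:

```latex
\begin{align*}
Z_t &= \sum_{j=0}^{\infty} \rho^{j} V_{t-j}, \qquad
E_{t-m}^{t+m}(Z_t) = \sum_{j=0}^{m} \rho^{j} V_{t-j}, \\
\nu_m &= \sup_t \Big\| \sum_{j=m+1}^{\infty} \rho^{j} V_{t-j} \Big\|
       = \Big( \sum_{j=m+1}^{\infty} \rho^{2j} \Big)^{1/2}
       = \frac{|\rho|^{m+1}}{(1-\rho^{2})^{1/2}} .
\end{align*}
```

Because $\nu_m$ decays geometrically, $\nu_m = O(m^\lambda)$ for every $\lambda < 0$, so in this example $\{Z_t\}$ is NED on $\{V_t\}$ of size $-a$ for every $a > 0$.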

The lemma below ensures that recurrent variables are well behaved and do not have too long memory.

Lemma 4.2. Let $\{r_t\}$ be generated by (4), where $\{x_t\}$ is a bounded sequence NED on $\{V_t\}$ of size $-a$ and the common hidden unit activation function $\psi$ is bounded and continuously differentiable with bounded first derivative. If $|\mathrm{vec}(D_r)| < M_\psi^{-1}$, where $M_\psi := \sup_{\sigma \in \mathbb{R}} |\psi'(\sigma)|$, then $\{r_t\}$ is NED on $\{V_t\}$ of size $-a$.

Remark 1. Notice that if the input data $\{x_t\}$ form a sequence of independent random variables (which is a special case of an NED sequence), then $\{r_t\}$ need not necessarily be mixing but is NED on $\{x_t\}$ of arbitrarily large size, see Gallant & White (1988, pp. 27-31). Hence, introducing the concept of near epoch dependence is not a technical triviality, but a necessity when dealing with feedback networks in stochastic input environments.

In what follows we compactly write the algorithm (5) as

$$\theta_{t+1} = \theta_t + \eta_t h_t(\theta_t),$$

where $z_t = (y_t', x_t')'$ and $h_t(\theta) = \nabla_\theta F(x_t, r_t, \theta)\,(y_t - F(x_t, r_t, \theta))$. Our consistency result is based on the ordinary differential equation (ODE) method of Kushner & Clark (1978), cf. Ljung (1977). This approach is now well-known in analyzing neural network learning algorithms, cf. e.g., Oja (1982), Oja & Karhunen (1985), Sanger (1989), Kuan & White (1990), Kuan, Hornik, & White (1990), and Hornik & Kuan (1990).

We need the following notation. Let $\tau_0 = 0$ and, for $t \ge 1$, let $\tau_t := \sum_{i=0}^{t-1} \eta_i$. The piecewise linear interpolation of $\{\theta_t\}$ with interpolation intervals $\{\eta_t\}$ is

$$\theta^0(\tau) = \Big(\frac{\tau_{t+1} - \tau}{\eta_t}\Big)\theta_t + \Big(\frac{\tau - \tau_t}{\eta_t}\Big)\theta_{t+1}, \qquad \tau \in [\tau_t, \tau_{t+1}),$$

and for each $t$, its "left shift" is

$$\theta^t(\tau) := \theta^0(\tau_t + \tau), \qquad \tau \ge 0.$$

Observe in particular that $\theta^t(0) = \theta^0(\tau_t) = \theta_t$.

We impose the following conditions.

A.1. $\{V_t\}$ and $\{z_t\}$ are defined on a complete probability space $(\Omega, \mathcal{F}, P)$ such that for some $r > 4$,

(i) $\{V_t\}$ is a mixing sequence with mixing coefficients $\phi_m$ of size $-r/2(r-1)$ or $\alpha_m$ of size $-r/(r-2)$;

(ii) the sequence $\{z_t\}$ is NED on $\{V_t\}$ of size $-1$ with $\sup_t |x_t| \le M_x < \infty$ and $\sup_t E(|y_t|^r) < \infty$.

A.2. For the network architecture as specified in (3) and (4),

(i) $\phi$ and $\psi$ are continuously differentiable of order 3, $\psi$ is bounded and has bounded first order derivative.

(ii) $|\mathrm{vec}(D_r)| < M_\psi^{-1}$, where $M_\psi = \sup_{\sigma} |\psi'(\sigma)|$.

A.3. $\{\eta_t\}$ is a sequence of positive real numbers such that $\sum_t \eta_t = \infty$ and $\sum_t \eta_t^2 < \infty$.

A.4. For each $\theta \in \Theta$, $\bar h(\theta) = \lim_t E(h_t(\theta))$ exists.
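Condition A.2(ii) is easy to check numerically before a hard-wired matrix $D_r$ is fixed. The sketch below is illustrative only: the helper names are hypothetical, and the derivative bounds used are the standard ones for the two squashers mentioned in the text (1/4 for the logistic function and 1 for the hyperbolic tangent).

```python
import math

def frobenius_norm(matrix):
    """Euclidean length of vec(matrix), i.e. the Frobenius norm."""
    return math.sqrt(sum(v * v for row in matrix for v in row))

# sup over sigma of |psi'(sigma)| for two common squashers.
M_PSI = {"logistic": 0.25, "tanh": 1.0}

def satisfies_a2ii(d_r, squasher):
    """Check |vec(D_r)| < 1 / M_psi for the chosen squasher."""
    return frobenius_norm(d_r) < 1.0 / M_PSI[squasher]

# Candidate hard-wired recurrent matrices (illustrative values).
small = [[0.6, 0.0], [0.0, 0.6]]
medium = [[2.0, 0.0], [0.0, 2.0]]

print(satisfies_a2ii(small, "tanh"), satisfies_a2ii(medium, "tanh"))
print(satisfies_a2ii(medium, "logistic"))
```

The bound is on the Frobenius norm, which is at least as large as the spectral norm, so the check is conservative: a matrix that passes makes the recursion (4) a contraction in $r$.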

A.1 allows the data to exhibit a considerable amount of dependence in the sense that they are functions of the (possibly infinite) history of an underlying mixing sequence. For more details on $\alpha$- and $\phi$-mixing sequences we refer to White (1984). Assuming that the external inputs $x_t$ are uniformly bounded simplifies some technicalities needed to establish convergence and causes no loss of generality, as pointed out by Kuan & White (1990). Desired generality is assured by allowing the $y_t$ sequence to be unbounded. Note that typical choices for $\psi$ such as the logistic squasher and hyperbolic tangent squasher satisfy A.2(i). Condition A.2(ii) is needed in lemma 4.2 and is the constraint suggested by Kuan, Hornik & White (1990) for general recurrent networks. A.3 is a typical restriction on the learning rates for BP types of algorithms. For example, learning rates of order $1/t$ satisfy this condition. A.4 is needed to define the associated ODE whose solution trajectory is the limiting path of the interpolated processes $\{\theta^t(\cdot)\}$.

The result below follows from corollary 3.5 of Kuan & White (1990).

Theorem 4.3. For the network given by (3) and (4) and the algorithm (5), suppose that assumptions A.1-A.4 hold. Then

(a) $\{\theta^t(\cdot)\}$ is bounded and equicontinuous on bounded intervals with probability one, and all limits of convergent subsequences satisfy the ODE $\dot\theta = \bar h(\theta)$.

(b) Let $\Theta^*$ be the set of all (locally) asymptotically stable equilibria of this ODE contained in $\Theta$, and let $\mathcal{D}(\Theta^*) \subset \mathbb{R}^p$ be the domain of attraction of $\Theta^*$. Then, if $\theta_t$ enters a compact subset of $\mathcal{D}(\Theta^*)$ infinitely often with probability one, and thus in particular, if $\Theta \subset \mathcal{D}(\Theta^*)$, then with probability one, $\theta_t \to \Theta^*$ as $t \to \infty$.

Remark 2. Because the elements $\theta^*$ of $\Theta^*$ solve the equation $\lim_t E(h(z_t, r_t, \theta)) = 0$, they (locally) minimize

$$\lim_t E|y_t - F(x_t, r_t, \theta)|^2. \tag{6}$$

Theorem 4.3 thus shows that the BP estimates can converge to a mean squared error minimizer with probability one. Note however that this convergence occurs conditional on $\bar\theta$.

Remark 3. By the Toeplitz lemma, $\lim_T T^{-1} \sum_{t=1}^{T} E|y_t - F(x_t, r_t, \theta)|^2$ is the same as (6). Therefore, the (on-line) BP estimates converge to the same limit as the (off-line) nonlinear least squares estimator.
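The on-line update (5) that this remark compares with off-line least squares can be sketched for the smallest possible partially hard-wired network. Everything specific here is an assumption made for illustration: one input, one learnable hidden unit, one hard-wired recurrent unit, tanh hidden units, an identity output unit, the hard-wired values `C_R`, `D_R`, `B_R`, the truncation bound, and the synthetic targets.

```python
import math
import random

# Hard-wired weights of the recurrent unit (illustrative; |D_R| < 1 = 1/sup|tanh'|).
C_R, D_R, B_R = 0.8, 0.5, 0.0

def internal_inputs(xs):
    """r_t = a_{r,t-1}, computed once from the inputs; it never depends on theta."""
    r, out = 0.0, []
    for x in xs:
        out.append(r)
        r = math.tanh(C_R * x + D_R * r + B_R)
    return out

def forward(theta, x, r):
    """Network output F(x_t, r_t, theta) with an identity output unit."""
    w_f, w_r, v, c_f, d_f, b_f = theta
    a_f = math.tanh(c_f * x + d_f * r + b_f)      # learnable hidden unit
    a_r = math.tanh(C_R * x + D_R * r + B_R)      # hard-wired recurrent unit
    return w_f * a_f + w_r * a_r + v, a_f, a_r

def gradient(theta, x, r):
    """Partial derivatives of F with respect to the six learnable weights."""
    _, a_f, a_r = forward(theta, x, r)
    s = theta[0] * (1.0 - a_f * a_f)              # chain rule through tanh
    return [a_f, a_r, 1.0, s * x, s * r, s]

def bp_step(theta, x, r, y, eta, bound=100.0):
    """One step of (5), followed by truncation to [-bound, bound]."""
    out, _, _ = forward(theta, x, r)
    g = gradient(theta, x, r)
    new = [p + eta * gi * (y - out) for p, gi in zip(theta, g)]
    return [max(-bound, min(bound, p)) for p in new]

random.seed(0)
xs = [random.uniform(-1.0, 1.0) for _ in range(500)]
rs = internal_inputs(xs)                          # available before any learning
ys = [0.5 * x + 0.3 * r for x, r in zip(xs, rs)]  # synthetic targets
theta = [0.1, 0.1, 0.0, 0.1, 0.1, 0.0]
for t, (x, r, y) in enumerate(zip(xs, rs, ys)):
    theta = bp_step(theta, x, r, y, eta=1.0 / (t + 1))
```

Because `internal_inputs` uses only the inputs and the hard-wired weights, the same `rs` could equally be handed to an off-line nonlinear least squares routine, which is the point made in section 3.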

Remark 4. As $y_t$ is not required to be bounded, our strong consistency result holds under less stringent conditions than those of Kuan, Hornik & White (1990) for the fully recurrent BP algorithm.

To establish asymptotic normality we consider the algorithm (5) with the specific choice $\eta_t = (t + 1)^{-1}$. (Note that no limiting distribution results for BP estimators in recurrent networks have been published thus far; in particular, Kuan, Hornik, & White (1990) give only a consistency result for their recurrent BP algorithm.) Let $U_t := (t + 1)^{1/2}(\theta_t - \theta^*)$ be the sequence of normalized estimates. The piecewise constant interpolation of $U_t$ on $[0, \infty)$ with interpolation intervals $\{(t + 1)^{-1}\}$ is defined as

$$U^0(\tau) = U_t, \qquad \tau \in [\tau_t, \tau_{t+1}),$$

and again, for each $t$ its "left shift" is defined as

$$U^t(\tau) = U^0(\tau_t + \tau), \qquad \tau \ge 0.$$

Finally, let

$$\bar H(\theta) := \lim_t E[\nabla_\theta h_t(\theta)] + I_p/2,$$

where $I_p$ is the $p$-dimensional identity matrix. Our result follows from the stochastic differential equation (SDE) approach of Kushner & Huang (1979). In contrast with the ODE approach, the interpolated processes can now be shown to converge weakly to the solution paths of a corresponding SDE with respect to the Skorohod topology. For more details on weak convergence we refer to Billingsley (1968). The following conditions suffice for the asymptotic normality result.

B.1. A.1(i) holds, and $\{z_t\}$ is a stationary sequence NED on $\{V_t\}$ of size $-8$ with $\sup_t |x_t| \le M_x < \infty$ and $\sup_t E(|y_t|^8) < \infty$.

B.2. A.2 holds with $\phi$ and $\psi$ continuously differentiable of order 4.

B.3. $\theta^* \in \mathrm{int}(\Theta)$ is such that $\bar h(\theta^*) = 0$ and all eigenvalues of $\bar H(\theta^*)$ have negative real parts.

The result below follows from corollary 3.6 of Kuan & White (1990).

Theorem 4.4. Consider the network given by (3) and (4) and the algorithm (5) with $\eta_t = (t + 1)^{-1}$, suppose that assumptions B.1-B.3 hold and that with probability one, $\theta_t \to \theta^*$ as $t \to \infty$. Then $\{U^t(\cdot)\}$ converges weakly to the stationary solution of the stochastic differential equation

$$dU(\tau) = \bar H(\theta^*) U(\tau)\, d\tau + \Sigma(\theta^*)^{1/2}\, dW(\tau),$$

where $W$ denotes the standard $p$-variate Wiener process and

$$\Sigma(\theta^*) := \lim_t \sum_{j=-\infty}^{\infty} E[h_t(\theta^*) h_{t+j}(\theta^*)'].$$

In particular,

$$(t + 1)^{1/2}(\theta_t - \theta^*) \xrightarrow{D} N(0, S(\theta^*)),$$

where $\xrightarrow{D}$ signifies convergence in distribution and

$$S(\theta^*) := \int_0^\infty \exp(\bar H(\theta^*) s)\, \Sigma(\theta^*)\, \exp(\bar H(\theta^*)' s)\, ds$$

is the unique solution to the matrix equation $\bar H(\theta^*) S + S \bar H(\theta^*)' = -\Sigma(\theta^*)$.
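To see what the covariance formula in theorem 4.4 amounts to, it may help to write out the scalar case $p = 1$; this is a worked illustration added here, with $\bar H$, $\Sigma$ and $S$ standing for $\bar H(\theta^*)$, $\Sigma(\theta^*)$ and $S(\theta^*)$. Assumption B.3 then reads $\bar H < 0$, and both characterizations of $S$ give the same value:

```latex
\begin{align*}
S &= \int_0^\infty e^{\bar H s}\, \Sigma\, e^{\bar H s}\, ds
   = \Sigma \int_0^\infty e^{2 \bar H s}\, ds
   = -\frac{\Sigma}{2 \bar H}, \\
\bar H S + S \bar H &= 2 \bar H S = -\Sigma .
\end{align*}
```

The asymptotic variance is therefore $\Sigma / (2|\bar H|)$ in this case: it grows with the long-run variance of the update direction and shrinks as the equilibrium becomes more strongly stable.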

Remark 5. If $\eta_t = (t + 1)^{-1} R$, where $R$ is a nonsingular $p \times p$ matrix, the SDE in theorem 4.4 becomes $dU(\tau) = \bar H(\theta^*) U(\tau)\, d\tau + R\, \Sigma(\theta^*)^{1/2}\, dW(\tau)$, and the covariance matrix of the asymptotic distribution of $\theta_t$ becomes $R S(\theta^*) R'$.

Remark 6. If the probability that $\theta_t$ converges to $\theta^*$ is positive, but less than one, the above theorem provides the limiting distribution conditional on convergence to $\theta^*$. Hence, if $\Theta^*$ contains only finitely many points, assumption B.3 is satisfied for each $\theta^* \in \Theta^*$, and $\theta_t$ converges with probability one to one of the elements of $\Theta^*$, then the asymptotic distribution of $\theta_t$ is a mixture of $N(\theta^*, S(\theta^*))$ distributions, weighted relative to the convergence probabilities.

5 Conclusions

In this paper we propose a partially hard-wired Elman network, in which only a subset of hidden-unit activations is allowed to feed back into the network and connections between these hidden units and input layer are hard-wired. A distinct feature of our approach is that existing on-line and off-line learning algorithms can be slightly modified to implement the proposed network. (Note that off-line learning is not possible for a fully learnable recurrent network.) This is particularly convenient for researchers. Our results also show that the estimates from the standard BP algorithm adapted to this network can converge to a mean squared error minimizer with probability one and are asymptotically normally distributed. These asymptotic properties are analogous to those of the standard and recurrent BP algorithms.

As the convergence results in this paper are conditional on the hard-wired connection weights $\bar\theta$, the resulting weight estimates are not fully optimal, in contrast with fully learnable recurrent networks. To improve the performance of the proposed network, one can train the network with various hard-wired connection weights and search for the best performing architecture.

Appendix

Lemma A. Let $\{x_t\}$ be NED on $\{V_t\}$ of size $-a$ and let the square integrable sequence $\{r_t\}$ be generated by the recursion

$$r_t = G(x_{t-1}, r_{t-1}, \theta).$$

Suppose that $G(\cdot, r, \theta)$ satisfies a Lipschitz condition uniformly in $r$, i.e., there exists a finite constant $L$ such that for all $x_1$, $x_2$, $r$,

$$|G(x_1, r, \theta) - G(x_2, r, \theta)| \le L |x_1 - x_2|,$$

and that $G(x, \cdot, \theta)$ is a contraction mapping uniformly in $x$, i.e., there exists some $\rho < 1$ such that for all $x$, $r_1$, $r_2$,

$$|G(x, r_1, \theta) - G(x, r_2, \theta)| \le \rho |r_1 - r_2|.$$

Then $\{r_t\}$ is NED on $\{V_t\}$ of size $-a$.

Proof. We first observe that

$$\begin{aligned}
\|r_t - E_{t-m}^{t+m}(r_t)\|
&= \|G(x_{t-1}, r_{t-1}, \theta) - E_{t-m}^{t+m}(G(x_{t-1}, r_{t-1}, \theta))\| \\
&\le \|G(x_{t-1}, r_{t-1}, \theta) - G(E_{t-m}^{t+m-2}(x_{t-1}), E_{t-m}^{t+m-2}(r_{t-1}), \theta)\| \\
&\le \|G(x_{t-1}, r_{t-1}, \theta) - G(E_{t-m}^{t+m-2}(x_{t-1}), r_{t-1}, \theta)\| \\
&\qquad + \|G(E_{t-m}^{t+m-2}(x_{t-1}), r_{t-1}, \theta) - G(E_{t-m}^{t+m-2}(x_{t-1}), E_{t-m}^{t+m-2}(r_{t-1}), \theta)\| \\
&\le L \|x_{t-1} - E_{t-m}^{t+m-2}(x_{t-1})\| + \rho \|r_{t-1} - E_{t-m}^{t+m-2}(r_{t-1})\|,
\end{aligned}$$

where the first inequality follows from the fact that $E_{t-m}^{t+m}(G(x_{t-1}, r_{t-1}, \theta))$ is the best mean square predictor of $G(x_{t-1}, r_{t-1}, \theta)$ among all $\mathcal{F}_{t-m}^{t+m}$-measurable functions and the second inequality follows from the triangle inequality. Hence, we obtain

$$\nu_{r,m} \le L \nu_{x,m-1} + \rho \nu_{r,m-1}, \tag{a1}$$

where $\nu_{x,m}$ and $\nu_{r,m}$ are the NED coefficients for $\{x_t\}$ and $\{r_t\}$, respectively. We must show that for some $\lambda < -a$, $\nu_{r,m} = O(m^\lambda)$ as $m \to \infty$. Because $\{x_t\}$ is NED on $\{V_t\}$ of size $-a$, we can find a finite constant $C_0$ and some $\lambda_0 < -a$ such that $\nu_{x,m} \le C_0 m^{\lambda_0}$. By the fact that $\rho < 1$, we can find $m_0$ and some $\sigma > 1$ such that $\rho\sigma < 1$ and for all $m \ge m_0$,

$$(m/(m+1))^{\lambda_0} \le \sigma.$$

Let

$$D_0 := \max\Big\{ \nu_{r,m_0}\, m_0^{-\lambda_0},\ \frac{C_0 L \sigma}{1 - \rho\sigma} \Big\}.$$

We now prove by induction that for all $m \ge m_0$, $\nu_{r,m} \le D_0 m^{\lambda_0}$. For $m = m_0$, this is trivially true by the definition of $D_0$. Suppose we have already shown that $\nu_{r,m} \le D_0 m^{\lambda_0}$ for some $m \ge m_0$. Then, using (a1),

$$\begin{aligned}
\nu_{r,m+1} &\le L C_0 m^{\lambda_0} + \rho D_0 m^{\lambda_0} \\
&= (L C_0 + \rho D_0)(m+1)^{\lambda_0} (m/(m+1))^{\lambda_0} \\
&\le (L C_0 + \rho D_0)\,\sigma\,(m+1)^{\lambda_0} \\
&\le D_0 (m+1)^{\lambda_0},
\end{aligned}$$

completing the induction step and thus the proof of the lemma.
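The induction in this proof can be checked numerically. The sketch below iterates (a1) with equality, which is the worst case allowed by the inequality, and verifies the bound $\nu_{r,m} \le D_0 m^{\lambda_0}$ for all $m \ge m_0$. The function name and every numerical value are hypothetical choices for illustration, and the small relative tolerance only absorbs floating-point rounding.

```python
def check_ned_bound(L, rho, c0, lam0, nu_r1, sigma, m_max=2000):
    """Iterate (a1) with equality and test nu_{r,m} <= D0 * m**lam0 for m >= m0."""
    assert 0.0 <= rho < 1.0 and sigma > 1.0 and rho * sigma < 1.0 and lam0 < 0.0
    # Worst case of (a1): nu_{r,m+1} = L * nu_{x,m} + rho * nu_{r,m},
    # with the input coefficients at their bound nu_{x,m} = c0 * m**lam0.
    nu_r = {1: nu_r1}
    for m in range(1, m_max):
        nu_r[m + 1] = L * c0 * m ** lam0 + rho * nu_r[m]
    # Smallest m0 with (m / (m + 1))**lam0 <= sigma; this ratio decreases in m.
    m0 = 1
    while (m0 / (m0 + 1)) ** lam0 > sigma:
        m0 += 1
    # The constant D0 from the proof.
    d0 = max(nu_r[m0] * m0 ** (-lam0), c0 * L * sigma / (1.0 - rho * sigma))
    ok = all(
        nu_r[m] <= d0 * m ** lam0 * (1.0 + 1e-12)
        for m in range(m0, m_max + 1)
    )
    return m0, d0, ok

m0, d0, ok = check_ned_bound(L=2.0, rho=0.5, c0=1.0, lam0=-1.5, nu_r1=1.0, sigma=1.2)
print(m0, d0, ok)
```

The check mirrors the proof step by step: the contraction factor `rho` is what prevents the recursively accumulated error from decaying more slowly than the input coefficients.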

Proof of Lemma 4.2. By boundedness of $\psi$, the sequence $\{r_t\}$ generated by (4) is bounded and thus trivially square integrable. Hence, in view of the above lemma A, it suffices to show that $G$ is Lipschitz continuous in $x$ and a contraction mapping in $r$. As by assumption the first derivative of $\psi$ is uniformly bounded, $G$ is clearly Lipschitz continuous in $x$ with Lipschitz constant $L = M_\psi |C_r|$. (If $A$ is a matrix, then $|A| := \max\{|Ax| : |x| = 1\}$.) Similarly, let $\nabla_r G$ denote the matrix of partial derivatives of $G$ with respect to $r$. Note that $|\nabla_r G(x, r, \theta)|$ is the square root of the maximal singular value of $\nabla_r G\, \nabla_r G'$, and thus by a well-known result from linear algebra,

$$\begin{aligned}
|\nabla_r G(x, r, \theta)| &\le (\mathrm{trace}(\nabla_r G(x, r, \theta)\, \nabla_r G(x, r, \theta)'))^{1/2} \\
&\le M_\psi (\mathrm{trace}(D_r D_r'))^{1/2} \\
&= M_\psi |\mathrm{vec}(D_r)| =: \rho.
\end{aligned}$$

By assumption, $\rho < 1$. As clearly,

$$|G(x, r_1, \theta) - G(x, r_2, \theta)| \le \sup_r |\nabla_r G(x, r, \theta)|\, |r_1 - r_2| \le \rho |r_1 - r_2|,$$

$G$ is a contraction mapping in $r$, thereby completing the proof of lemma 4.2.

Proof of theorem 4.3. We verify the conditions of corollary 3.5 of Kuan & White (1990), which we shall briefly refer to as [KW]. Their conditions A.4 and C.3 are explicitly assumed (our assumptions A.3 and A.4). It follows from lemma 4.2 that $\{r_t\}$ and thus also $\{\xi_t\}$, where $\xi_t = [x_t', r_t']'$, are bounded sequences NED on $\{V_t\}$ of size $-1$, which establishes condition C.1 of [KW]. Let $M_\xi$ be an upper bound for the sequence $\{\xi_t\}$, and let $K_\xi := \{\xi : |\xi| \le M_\xi\}$. Condition C.2 requires that in $K_\xi \times \Theta$, both $F(\xi, \cdot)$ and $\nabla_\theta F(\xi, \cdot)$ satisfy a Lipschitz condition with Lipschitz constants $L_1(\xi)$ and $L_2(\xi)$, respectively, where $L_1$ and $L_2$ are Lipschitz continuous in $\xi$, and that both $F(\cdot, \theta)$ and $\nabla_\theta F(\cdot, \theta)$ satisfy a Lipschitz condition. It is straightforward to show that continuous differentiability of A.2(i) ensures these Lipschitz conditions. See also corollary 4.1 of Kuan & White (1990).

Proof of theorem 4.4. We verify the conditions of corollary 3.6 of [KW]. Lemma 4.2 ensures that $\{r_t\}$ is NED on $\{V_t\}$ of size $-8$. Stationarity of $\{x_t\}$ implies that $\{r_t\}$ is also stationary. Hence, $\{\xi_t\}$ is a stationary sequence NED on $\{V_t\}$ of size $-8$, which establishes condition D.1 of [KW]. Condition D.2 of [KW] follows from B.3 and the moment condition of B.1. Finally, as in the preceding proof, four times continuous differentiability of B.2 ensures the Lipschitz conditions imposed in condition D.3 of [KW]. See also corollary 4.2 of Kuan & White (1990).

References

Billingsley, P. (1968). Convergence of probability measures. New York: Wiley.

Elman, J. L. (1988). Finding structure in time. CRL Report 8801, Center for Research in Language, University of California, San Diego.

Gallant, A. R., & White, H. (1988). A unified theory of estimation and inference for nonlinear dynamic models. Oxford: Basil Blackwell.

Hornik, K., & Kuan, C.-M. (1990). Convergence analysis of local feature extraction algorithms. BEBR Discussion Paper 90-1717, College of Commerce, University of Illinois, Urbana-Champaign.

Jordan, M. I. (1985). The learning of representations for sequential performance. Ph.D. Dissertation, University of California, San Diego.

Jordan, M. I. (1986). Serial order: a parallel distributed processing approach. ICS Report 8604, Institute for Cognitive Science, University of California, San Diego.

Kuan, C.-M. (1989). Estimation of neural network models. Ph.D. thesis, Department of Economics, University of California, San Diego.

Kuan, C.-M., Hornik, K., & White, H. (1990). Some convergence results for learning in recurrent neural networks. Proceedings of the Sixth Yale Workshop on Adaptive and Learning Systems, Ed. K. S. Narendra, New Haven: Yale University, 103-109.

Kuan, C.-M., & White, H. (1990). Recursive M-estimation, nonlinear regression and neural network learning with dependent observations. BEBR Working Paper 90-1703, College of Commerce, University of Illinois, Urbana-Champaign.

Kushner, H. J., & Clark, D. S. (1978). Stochastic approximation methods for constrained and unconstrained systems. New York: Springer Verlag.

Kushner, H. J., & Huang, H. (1979). Rates of convergence for stochastic approximation type algorithms. SIAM Journal on Control and Optimization, 17, 607-617.

Ljung, L. (1977). Analysis of recursive stochastic algorithms. IEEE Transactions on Automatic Control, AC-22, 551-575.

McLeish, D. (1975). A maximal inequality and dependent strong laws. Annals of Probability, 3, 829-839.

Norrod, F. E., O'Neill, M. D., & Gat, E. (1987). Feedback-induced sequentiality in neural networks. In Proceedings of the IEEE First International Conference on Neural Networks (pp. II: 251-258). San Diego: SOS Printing.

Oja, E. (1982). A simplified neuron model as a principal component analyzer. Journal of Mathematical Biology, 15, 267-273.

Oja, E., & Karhunen, J. (1985). On stochastic approximation of the eigenvectors and the eigenvalues of the expectation of a random matrix. Journal of Mathematical Analysis and Applications, 106, 69-84.

Rumelhart, D. E., Hinton, G. E., & Williams, R. J. (1986). Learning internal representations by error propagation. In D. E. Rumelhart, J. L. McClelland, & The PDP Research Group, Parallel distributed processing: Explorations in the microstructures of cognition, (pp. I: 318-362). Cambridge, MA: MIT Press.

Sanger, T. D. (1989). Optimal unsupervised learning in a single-layer linear feedforward neural network. Neural Networks, 2, 459-473.

Williams, R. J., & Zipser, D. (1988). A learning algorithm for continually running fully recurrent neural networks. ICS Report 8805, Institute of Cognitive Science, University of California, San Diego.