The Assessment Practices of Teacher Candidates - QSpace

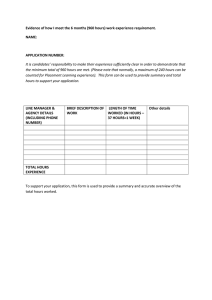
