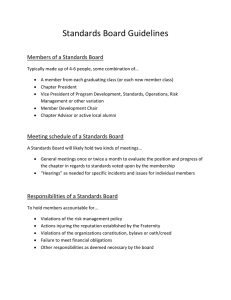

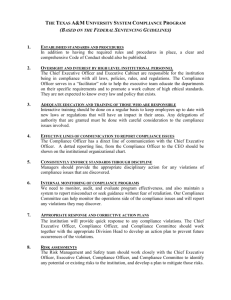

Management of safety rules and procedures
