document [] - Vysoké učení technické v Brně

advertisement

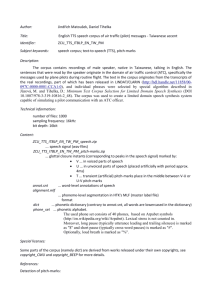

![document [] - Vysoké učení technické v Brně](http://s2.studylib.net/store/data/018115591_1-08bda7a3e570bdf4cf4addd480643452-768x994.png)