

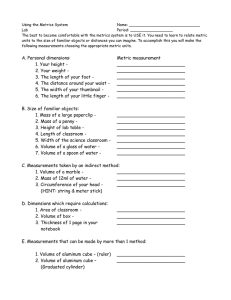

Project #5: Fast Customer Feedback in Large-Scale SE
Helena H. Olsson and Jan Bosch
Software Center Reporting Workshop, May 22, 2014

Project 5: Objectives and Scope
• Explores ways in which large-scale software development companies can shorten feedback loops to customers, both during and after product development and deployment.
• Develops data management and feature experiment strategies, with the intention of improving data-driven development practices and thereby increasing the accuracy of PM decisions and R&D investments.

The ‘Open Feedback Loop’
• Weak impact on PM decisions and R&D management?

Project 5: Companies
• Ericsson, Lindholmen (Liselotte Wanhov)
• AB Volvo (Wilhelm Wiberg, Mattias Runsten)
• Axis Communications (Rickard Kronfält, Pontus Bergendahl)
• Jeppesen (Anders Forsman)
• Grundfos (Jesper Meulengracht)
• Volvo Cars (Copenhagen)
• Ericsson (Linköping, Kista, Lund)

Project 5: Researchers
• Prof. Jan Bosch (Chalmers)
• Dr. Helena Holmström Olsson (Malmö University)
• PhD student (Malmö University); application deadline June 2, 2014

Project 5: Activities
Three joint project workshops
• February 20th: Kick-Off Workshop
• March 18th: Data Management & Feature Experiments
• May 12th: Preliminary Findings Workshop
Group interview sessions
• Grundfos
• Jeppesen
• AB Volvo
• Axis Communications (TBD)
• Ericsson (declined)
Software Center Reporting Workshop
• Lindholmen, May 22nd

Sprint 6: Results

Project 5: Results
Data Management: Recommendations
• Closing the ‘open loop’ problem
• Data collection practices (structured vs. unstructured)
The HYPEX model
• Details and illustrative examples for each of the six practices
• Case examples
On-going feature experiments (HYPEX model) in two companies
• Definition of expected behavior(s)
• Definition of metrics and instrumentation of code
• Data collection and gap analysis
Two conference papers are based on results from this sprint (XP, SEAA), and one journal paper (in press, IEEE Software) is based on results from the previous sprint.
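The three experiment steps listed in the results above (define expected behavior, define metrics and instrument the code, collect data and run a gap analysis) can be illustrated with a small Python sketch. Everything in it is hypothetical: the `FeatureExperiment` class, the choice of average daily usage as the expected behavior (Bexp), and the 20% tolerance used to decide whether a gap between actual behavior (Bact) and Bexp is relevant are assumptions for illustration, not the authors' implementation of the HYPEX model.

```python
from dataclasses import dataclass, field
from datetime import date
from typing import List


@dataclass
class FeatureExperiment:
    """Hypothetical record of one feature experiment (all names are illustrative)."""

    feature: str
    # Expected behavior (Bexp), assumed here to be an average number of uses per day.
    expected_uses_per_day: float
    # Assumed relative deviation that still counts as "no gap"; not from the source.
    tolerance: float = 0.2
    # Days on which the instrumented feature was used (one entry per use).
    events: List[date] = field(default_factory=list)

    def __post_init__(self) -> None:
        # A positive Bexp is required because the gap is measured relative to it.
        if self.expected_uses_per_day <= 0:
            raise ValueError("expected_uses_per_day must be positive")

    def record_use(self, day: date) -> None:
        """Instrumentation hook: the product code calls this each time the feature is used."""
        self.events.append(day)

    def actual_uses_per_day(self) -> float:
        """Actual behavior (Bact): uses per day over the observed period."""
        if not self.events:
            return 0.0
        observed_days = (max(self.events) - min(self.events)).days + 1
        return len(self.events) / observed_days

    def gap_analysis(self) -> str:
        """Compare Bact with Bexp; a relevant gap would trigger hypothesis generation."""
        actual = self.actual_uses_per_day()
        deviation = abs(actual - self.expected_uses_per_day) / self.expected_uses_per_day
        return "no gap" if deviation <= self.tolerance else "relevant gap"


if __name__ == "__main__":
    # Hypothetical feature expected to be used five times a day.
    experiment = FeatureExperiment("export-report", expected_uses_per_day=5.0)
    experiment.record_use(date(2014, 5, 1))
    experiment.record_use(date(2014, 5, 2))
    print(experiment.actual_uses_per_day(), experiment.gap_analysis())  # prints: 1.0 relevant gap
```

Here the feature was used once a day instead of the expected five times, so the gap is treated as relevant; in a real experiment, the choice of metric and tolerance would follow from how the expected behavior was defined for that particular feature.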
Data Management: Quotes
• ”You notice that there are a lot of assumptions when questions are often answered with ’we believe, or we think this is what the market wants…’.”
• ”Sadly, a lot of gut feeling is used…”
• ”In theory I would like to gather as much data as possible, but in practice this is easier said than done...”
• ”We have a structured data collection in the sense that we define what should be collected for each function. But we have such a vast amount of functions that we collect data from and not a very structured way of harvesting this data, so in the end, the system has more of an unstructured look…”

Data Management: Recommendations
• Collect data and develop metrics that correspond to your customers’ KPIs.
• Use structured data collection practices (1) for forecasting and trend purposes, (2) when there is a clear goal, and (3) for mature systems whose environment is known.
• Use unstructured data collection practices (1) for getting a broad understanding of the system, (2) when the goal is unclear, and (3) for immature products whose environment is unknown.
• Define and document each data collection algorithm to increase the correctness of the interpretation.
• Define and collect data to (1) help improve functionality, (2) help remove functionality, and (3) help innovate new functionality.

The HYPEX Model
[Figure: business strategy and goals → strategic product goal → generate feature backlog → select feature and define its expected behavior (Bexp) → implement MVF → observe actual behavior (Bact) in the product → gap analysis: no gap (Bact = Bexp) or relevant gap (Bact ≠ Bexp) → develop hypotheses → experimentation: implement alternative MVF or extend MVF]

The HYPEX Practices (centered on business strategy and product goals)
1. Feature backlog generation
2. Feature selection and specification
3. Implementation and instrumentation
4. Gap analysis
5. Hypothesis generation and selection
6. Alternative feature implementation

HOW to select a feature?
Feature selection:
• Area of strategic importance to customers.
• Area of functionality where a big gap between assumptions and actual behavior is expected.
• Developing new functionality in an area where there is little previous experience.
• Developing new functionality in an area where there are multiple alternatives for implementing the feature.
• Area where you want to change user behavior.

HOW to define expected behavior?
Frequency of use
• How often is the feature assumed to be used by customers?
Relative frequency of use
• When there is both an old and a new version of a feature, how often do people use the old version instead of the new one?
Improved system characteristics
• Assumptions about non-functional requirements, e.g., response time, throughput, time between failures, etc.
Improved conversion rate
• Assumed percentage of website visitors who complete a transaction
Reduced time for task execution
• Time for original task execution vs. expected time for improved task execution
Automation of a task
• Measure how often a user changes the default setting

WHAT metrics to collect?
Frequency of use
• Metric: Frequency, such as every day/week/month/year
Relative frequency of use
• Metric: Two metrics reflecting frequency of use (one for the old and one for the new version)
Improved system characteristics
• Metric: Quality attributes such as usability, performance, reliability, security, maintainability, etc.
Improved conversion rate
• Metric: Number of website visitors who have completed a transaction divided by the total number of website visitors
Reduced time for task execution
• Metric: Time for original task execution vs. time for improved task execution
• Metric: Timer that is started when the task begins and stopped when it finishes
Automation of a task
• Metric: Frequency of changes to the default setting(s)

HYPEX: Lessons Learned
• Knowledge exchange in the company ecosystem
• Understanding of the need for early user involvement
• Rewarding definition of metrics – quality issues
• Understanding of how/what data to collect
• Establishing an ”experiment culture”
• Selection of appropriate feature(s)
• Definition of metrics – what to measure?
• Identifying customers to work with – deployment of builds

Project 5: Publications
Sprint 6
• Olsson Holmström H., Sandberg A., Bosch J., and Alahyari H. (2014). Scale and Responsiveness in Large-Scale Software Development. Accepted for publication in IEEE Software.
• Olsson Holmström H., and Bosch J. (2014). Towards Agile and Beyond: An empirical account on the challenges involved when advancing software development practices. In Proceedings of the 15th International Conference on Agile Software Development, May 26–30, Rome, Italy.
• Olsson Holmström H., and Bosch J. (2014). The HYPEX Model: From Opinions to Data-Driven Software R&D. In Proceedings of the 40th Euromicro Conference on Software Engineering and Advanced Applications, August 27–29, Verona, Italy.

Sprint 7: July – December, 2014

Data Management
Data Management: How to advance data-driven development practices and utilize post-deployment data to close the open feedback loop to customers.
Sprint 7:
• Increase focus on the strategy and management perspectives
• Develop best practices and guidance for R&D management
• Develop best practices and guidance for organizational coordination of innovation
Objective:
• Develop strategies that help companies strengthen the link between customer data and PM decisions, R&D management and business KPIs

Feature Experiments
Feature Experiments: How to initiate, run and evaluate feature experiments to increase the accuracy of R&D investments and allow for continuous validation of functionality with customers.
Sprint 7:
• Further improve the HYPEX model and its practices
• Increase the number of feature experiments in companies
• Develop best practices based on lessons learned in on-going feature experiments
Objective:
• Improve companies’ ability to continuously validate software functionality with customers to increase customer value and R&D accuracy.

Thank you!
helena.holmstrom.olsson@mah.se
jan.bosch@chalmers.se