An Integrated Theory of the Mind - ACT-R

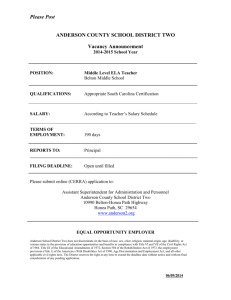
