7.1 Laplace Transforms


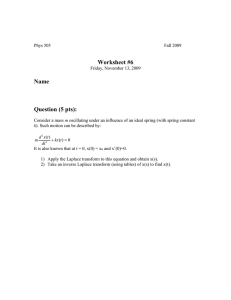

Laplace transforms are used to solve linear ordinary differential equations (ODEs). You may not be familiar with ODEs yet, but you will meet them again in the future. They look like

$$y' + 2y = 0, \tag{1}$$

or

$$y'' - 3y' + 4y = 0, \tag{2}$$

where the primes denote derivatives. We have actually seen these earlier in the course when solving for the potential function $\varphi$ of a vector field $\mathbf{F}$. When we integrated formulae such as

$$\frac{dC(y)}{dy} = 2y \tag{3}$$

to find $C(y)$, we were actually solving a type of ODE. Unfortunately, not all ODEs are this easy to solve, which is why we introduce Laplace transforms.

7.1.1 Defining the Laplace transform

A Laplace transform is a type of integral transform: we integrate the original function $f(t)$ against a kernel $k(s,t)$ to produce a new function, which we will call $F(s)$. In general,

$$F(s) = \int_0^\infty k(s,t)\, f(t)\, dt \tag{4}$$

is an integral transform. If $f(t)$ is defined for all $t \ge 0$, then the Laplace transform of $f$ is

$$F(s) = \mathcal{L}(f) = \int_0^\infty e^{-st} f(t)\, dt. \tag{5}$$

For a Laplace transform the kernel is $e^{-st}$. We also have the inverse transform

$$f(t) = \mathcal{L}^{-1}(F). \tag{6}$$

We will always use $f$ and $t$ for the original functions, and $F$ and $s$ for their Laplace transforms.

| Function $f(t)$ | Transform $F(s)$ |
|---|---|
| $1$ | $1/s$ |
| $e^{at}$ | $\dfrac{1}{s-a}$ |
| $t^n$ | $\dfrac{n!}{s^{n+1}}$ |
| $e^{at} f(t)$ | $F(s-a)$ |
| $\delta(t-a)$ | $e^{-as}$ |
| $u(t-a)$ | $\dfrac{e^{-as}}{s}$ |
| $\cos \omega t$ | $\dfrac{s}{s^2+\omega^2}$ |
| $\sin \omega t$ | $\dfrac{\omega}{s^2+\omega^2}$ |
| $t f(t)$ | $-\dfrac{d}{ds}F(s)$ |
| $f(at)$ | $\dfrac{1}{a} F(s/a)$ |
| $u(t-a) f(t-a)$ | $e^{-as} F(s)$ |

Table 1: Some useful Laplace transforms

Example: Find the Laplace transform of $f(t) = 1$.

Solution:
$$\mathcal{L}(1) = \int_0^\infty e^{-st}(1)\, dt = \int_0^\infty e^{-st}\, dt = \left[-\frac{1}{s} e^{-st}\right]_0^\infty = 0 - \left(-\frac{1}{s}(1)\right) = \frac{1}{s},$$
since $e^{-st} \to 0$ as $t \to \infty$ (for $s > 0$).

Example: Find the Laplace transform of $f(t) = e^{at}$.

Solution:
$$\mathcal{L}(e^{at}) = \int_0^\infty e^{-st} e^{at}\, dt = \int_0^\infty e^{-(s-a)t}\, dt = \left[-\frac{1}{s-a} e^{-(s-a)t}\right]_0^\infty = 0 - \left(-\frac{1}{s-a}(1)\right) = \frac{1}{s-a},$$
again because the exponential vanishes as $t \to \infty$ (now for $s > a$).

Laplace transforms satisfy the linearity property
$$\mathcal{L}\big(af(t) + bg(t)\big) = a\mathcal{L}(f) + b\mathcal{L}(g) = aF(s) + bG(s), \tag{7}$$
where $G(s) = \mathcal{L}(g)$ is the Laplace transform of $g(t)$.
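The two transforms just computed, and the linearity property (7), can be checked numerically. The sketch below is only an illustration and is not part of the course method. It assumes that truncating the integral (5) at a finite upper limit `T` is accurate, which holds here because $e^{-st}f(t)$ decays quickly for the chosen $s$. The helper name `laplace_numeric` is hypothetical, and the quadrature is composite Simpson's rule using only the Python standard library.

```python
import math


def laplace_numeric(f, s, T=40.0, n=20000):
    """Approximate F(s) = integral_0^inf e^{-st} f(t) dt (hypothetical helper).

    Assumption: e^{-st} f(t) is negligible for t > T, so the infinite
    integral can be truncated at T. Uses composite Simpson's rule with
    n (even) subintervals.
    """
    if n % 2:
        n += 1  # Simpson's rule needs an even number of subintervals
    h = T / n
    total = f(0.0) + math.exp(-s * T) * f(T)
    for k in range(1, n):
        t = k * h
        weight = 4 if k % 2 else 2  # Simpson weights 1, 4, 2, 4, ..., 4, 1
        total += weight * math.exp(-s * t) * f(t)
    return total * h / 3


s, a = 3.0, 1.0
# L(1) = 1/s (valid for s > 0)
print(laplace_numeric(lambda t: 1.0, s), 1 / s)
# L(e^{at}) = 1/(s - a) (valid for s > a)
print(laplace_numeric(lambda t: math.exp(a * t), s), 1 / (s - a))
# Linearity (7): L(2*1 + 3*e^{at}) = 2/s + 3/(s - a)
print(laplace_numeric(lambda t: 2 + 3 * math.exp(a * t), s), 2 / s + 3 / (s - a))
```

Each printed line should show two nearly equal numbers. If `s` were chosen below `a`, the integrand would grow, and the truncated integral would no longer approximate anything meaningful, because the transform does not exist there.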
Linearity follows from the linearity of the integral.

Table 1 lists the functions and Laplace transforms that we will use in this course. We will not derive all of them, but we will use the table for reference. The entries involving $\delta(t-a)$ and $u(t-a)$ are explained later; the rest can be used immediately. One of them is a result known as the First Shifting Theorem, which gives $\mathcal{L}[e^{at} f(t)]$ directly from $\mathcal{L}(f(t))$:
$$\mathcal{L}\left[e^{at} f(t)\right] = F(s-a). \tag{8}$$
Conversely,
$$e^{at} f(t) = \mathcal{L}^{-1}\left[F(s-a)\right]. \tag{9}$$
To see this, consider
$$F(s-a) = \int_0^\infty e^{-(s-a)t} f(t)\, dt = \int_0^\infty e^{-st} e^{at} f(t)\, dt = \mathcal{L}\left[e^{at} f(t)\right]. \tag{10}$$
In other words, if $F(s) = \mathcal{L}(f(t))$ is known, we can immediately find $\mathcal{L}[e^{at} f(t)]$, which is $F(s-a)$.

Example: Find the Laplace transform of $y(t) = 2e^t + 3e^{2t}$.

Solution: Using property (7) and Table 1,
$$\mathcal{L}(y) = \mathcal{L}(2e^t + 3e^{2t}) = 2\mathcal{L}(e^t) + 3\mathcal{L}(e^{2t}) = \frac{2}{s-1} + \frac{3}{s-2} = \frac{5s-7}{(s-1)(s-2)}.$$

Example: Find the Laplace transform of $y(t) = e^{at} t^n$.

Solution: Using property (8) and Table 1,
$$\mathcal{L}(y) = \mathcal{L}\left[e^{at} t^n\right] = F(s-a), \quad \text{where } F(s) = \mathcal{L}(t^n) = \frac{n!}{s^{n+1}}.$$
Hence
$$\mathcal{L}(y) = F(s-a) = \frac{n!}{(s-a)^{n+1}}.$$

Example: Find $\mathcal{L}^{-1}\left[\dfrac{1}{s^2-4s+5}\right]$.

Solution: Completing the square in the denominator,
$$\frac{1}{s^2-4s+5} = \frac{1}{(s-2)^2 - 4 + 5} = \frac{1}{(s-2)^2 + 1}.$$
Table 1 gives
$$\mathcal{L}^{-1}\left[\frac{1}{s^2+1}\right] = \sin t,$$
so if we call $F(s) = \dfrac{1}{s^2+1}$ and $f(t) = \sin t$, then $F(s-2) = \dfrac{1}{s^2-4s+5}$, and shifting by $a = 2$ gives
$$\mathcal{L}^{-1}\left[\frac{1}{s^2-4s+5}\right] = \mathcal{L}^{-1}\left[\frac{1}{(s-2)^2+1}\right] = e^{2t}\sin t,$$
i.e. $\mathcal{L}^{-1}[F(s-2)] = e^{2t} f(t)$.

7.1.2 Ordinary differential equations

7.1.2.1 Transforms of derivatives

The Laplace transforms of the first and second derivatives of $y$ (assuming $y$ and $y'$ are continuous) are
$$\mathcal{L}(y') = s\mathcal{L}(y) - y(0), \qquad \mathcal{L}(y'') = s^2\mathcal{L}(y) - sy(0) - y'(0). \tag{11}$$
To prove these, we substitute into the definition (5) and integrate by parts:
$$\mathcal{L}(y') = \int_0^\infty e^{-st} y'(t)\, dt = \Big[e^{-st} y(t)\Big]_0^\infty - (-s)\int_0^\infty e^{-st} y(t)\, dt = (0) - (1)y(0) + s\mathcal{L}(y) = s\mathcal{L}(y) - y(0), \tag{12}$$
where we assume that $e^{-st} y(t) \to 0$ as $t \to \infty$. We can now use the first result to prove the second, since
$$\mathcal{L}(y'') = \mathcal{L}\big((y')'\big) = s\mathcal{L}(y') - y'(0), \tag{13}$$
which gives
$$\mathcal{L}(y'') = s\mathcal{L}(y') - y'(0) = s\big[s\mathcal{L}(y) - y(0)\big] - y'(0) = s^2\mathcal{L}(y) - sy(0) - y'(0). \tag{14}$$

7.1.2.2 First and second order differential equations

If you have not met ODEs before, let us define what we mean. The most basic type are first order differential equations, in which only first derivatives appear. We assume that $y$ is a function of $t$, i.e. $y(t)$. The equation
$$y' + ay = 0, \tag{15}$$
where $a$ is a constant, is a homogeneous first order differential equation. It contains the first derivative $y'$ and the original function $y$, and it is called homogeneous because the right-hand side is zero. An inhomogeneous first order differential equation is something like
$$y' + ay = r(t), \tag{16}$$
where the function $r(t)$ is the inhomogeneous part of the equation. Similarly, we can define second order differential equations, which also include $y''$. They can be homogeneous,
$$y'' + ay' + by = 0, \tag{17}$$
or inhomogeneous,
$$y'' + ay' + by = r(t). \tag{18}$$
Usually, when solving ODEs, we solve the homogeneous equation to obtain the general solution, find a particular solution of the inhomogeneous equation, and add the two to get the complete solution. We will not do this here. Also bear in mind that we can define $n$th order differential equations involving the $n$th derivative, but these are beyond the scope of this course.

To pin down a unique solution of an ODE, we also need initial values. For a first order differential equation we need one condition, $y(0) = K_0$; for a second order differential equation we need two conditions, $y(0) = K_0$ and $y'(0) = K_1$.
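The worked transforms above, and the derivative rules (11), can be spot-checked numerically. The following is a sketch only. It assumes that the integral (5) can be truncated at a finite upper limit `T` (valid because every integrand below decays for the chosen $s$) and approximates it with composite Simpson's rule. The helper name `laplace_numeric` and the sample function $y(t) = e^{-t} + t^2$ (with $y(0) = 1$, $y'(0) = -1$) are hypothetical choices made here for illustration.

```python
import math


def laplace_numeric(f, s, T=40.0, n=20000):
    """Simpson's-rule approximation of integral_0^T e^{-st} f(t) dt, n even.

    Hypothetical helper; assumes e^{-st} f(t) is negligible beyond T.
    """
    h = T / n
    total = f(0.0) + math.exp(-s * T) * f(T)
    for k in range(1, n):
        t = k * h
        total += (4 if k % 2 else 2) * math.exp(-s * t) * f(t)
    return total * h / 3


s = 5.0  # large enough that every integrand below decays

# Linearity example: L(2e^t + 3e^{2t}) = (5s - 7) / ((s - 1)(s - 2))
lin_num = laplace_numeric(lambda t: 2 * math.exp(t) + 3 * math.exp(2 * t), s)
lin_exact = (5 * s - 7) / ((s - 1) * (s - 2))

# First Shifting Theorem example: L(e^{2t} sin t) = 1 / (s^2 - 4s + 5)
shift_num = laplace_numeric(lambda t: math.exp(2 * t) * math.sin(t), s)
shift_exact = 1 / (s**2 - 4 * s + 5)

# Derivative rules (11) for the sample function y(t) = e^{-t} + t^2
y = lambda t: math.exp(-t) + t**2
dy = lambda t: -math.exp(-t) + 2 * t
d2y = lambda t: math.exp(-t) + 2
Y = laplace_numeric(y, s)
d1_num, d1_rule = laplace_numeric(dy, s), s * Y - y(0)
d2_num, d2_rule = laplace_numeric(d2y, s), s**2 * Y - s * y(0) - dy(0)

print(lin_num, lin_exact)
print(shift_num, shift_exact)
print(d1_num, d1_rule)
print(d2_num, d2_rule)
```

Each printed pair should agree to several decimal places. Any $s$ larger than the growth rate of the functions involved would do; $s = 5$ is simply a convenient choice.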
An ODE together with the necessary initial conditions is called an initial value problem. We will use Laplace transforms to solve these.

7.1.3 Solving ordinary differential equations

How does the Laplace transform help us solve ODEs? Recall the equations in (11) for the transforms of the first and second derivatives: they are written in terms of $\mathcal{L}(y)$ and the initial conditions. Therefore we can reduce a differential equation to an algebraic equation for $\mathcal{L}(y)$, which is easier to solve. For a first order ODE, $\mathcal{L}(y)$ ends up multiplied by a linear polynomial in $s$; for a second order ODE, by a quadratic polynomial in $s$. In addition, an inhomogeneous equation can be solved directly, without needing to find both a general and a particular solution. Finally, the initial conditions are built in from the start, rather than being used afterwards to eliminate constants as in the standard methods for solving ODEs.

7.1.3.1 First order ODEs

To solve a first order initial value problem with Laplace transforms, we need to apply everything we know about Laplace transforms so far. We will use examples to see how it works.

Example: Solve the first order equation $y'(t) = y(t)$ with initial condition $y(0) = y_0$.

Solution: We take the Laplace transform of both sides of the equation to get $\mathcal{L}(y') = \mathcal{L}(y)$, which, using the first equation in (11), gives
$$s\mathcal{L}(y) - y(0) = \mathcal{L}(y) \;\Rightarrow\; s\mathcal{L}(y) - y_0 = \mathcal{L}(y).$$
We now solve for $\mathcal{L}(y)$:
$$(s-1)\mathcal{L}(y) = y_0 \;\Rightarrow\; \mathcal{L}(y) = \frac{y_0}{s-1}.$$
To finish, we use the inverse transform to return to $y$. Consulting Table 1, we get
$$y(t) = \mathcal{L}^{-1}\left[\frac{y_0}{s-1}\right] = y_0\,\mathcal{L}^{-1}\left[\frac{1}{s-1}\right] = y_0 e^t.$$
This is the solution to $y'(t) = y(t)$ with initial condition $y(0) = y_0$. Notice that $y'(t) = y_0 e^t$, and hence $y'(t) = y(t)$; also $y(0) = y_0 e^0 = y_0$. You should always check that the solution satisfies the original equation.

Sometimes we need to use partial fraction decomposition, as the next example demonstrates.

Example: Solve the equation $y'(t) - 2y(t) = 4$ subject to the condition $y(0) = 1$ using Laplace transforms.
Solution: Taking the Laplace transform, and using the first equation in (11), gives
$$\mathcal{L}(y') - 2\mathcal{L}(y) = \mathcal{L}(4) \;\Rightarrow\; s\mathcal{L}(y) - y(0) - 2\mathcal{L}(y) = \frac{4}{s}.$$
We simplify this to get
$$(s-2)\mathcal{L}(y) = y(0) + \frac{4}{s} = 1 + \frac{4}{s}.$$
We now isolate $\mathcal{L}(y)$:
$$\mathcal{L}(y) = \frac{1}{s-2} + \frac{4}{s(s-2)}, \tag{19}$$
and use partial fractions to write
$$\frac{4}{s(s-2)} = \frac{A}{s} + \frac{B}{s-2}.$$
Combining the right-hand side over a common denominator, we find
$$\frac{A}{s} + \frac{B}{s-2} = \frac{A(s-2) + Bs}{s(s-2)} = \frac{(A+B)s - 2A}{s(s-2)},$$
which gives $4 = (A+B)s - 2A$. Since this must hold for every value of the transform variable $s$, not just one particular number, the coefficients must match: $A + B = 0$ and $-2A = 4$. Therefore $A = -2$ and $B = -A = 2$, and the decomposition is
$$\frac{4}{s(s-2)} = -\frac{2}{s} + \frac{2}{s-2}.$$
Returning to our equation for $\mathcal{L}(y)$,
$$\mathcal{L}(y) = \frac{1}{s-2} + \frac{4}{s(s-2)} = \frac{1}{s-2} - \frac{2}{s} + \frac{2}{s-2} = \frac{3}{s-2} - \frac{2}{s}. \tag{20}$$
Taking the inverse transform and consulting Table 1, we get
$$y(t) = \mathcal{L}^{-1}\left[\frac{3}{s-2}\right] - \mathcal{L}^{-1}\left[\frac{2}{s}\right] = 3e^{2t} - 2.$$
Checking this in the original equation $y'(t) - 2y(t) = 4$:
$$6e^{2t} - 2(3e^{2t} - 2) = 4 \;\Rightarrow\; 6e^{2t} - 6e^{2t} + 4 = 4 \;\Rightarrow\; 4 = 4,$$
and the initial condition also holds, since $y(0) = 3 - 2 = 1$.

7.1.3.2 Second order ODEs

For second order ODEs the method is similar but a bit more involved. Consider a general second order equation
$$y'' + ay' + by = r(t), \tag{21}$$
and take its Laplace transform. We can view $r(t)$ as the input to the system, i.e. the driving force, and $y(t)$ as the output, i.e. the response to the input. This terminology comes from the forced, damped harmonic oscillator, which is very common in physical systems (think of a spring with a driving force and friction). Using the notation $Y = \mathcal{L}(y)$ and $R = \mathcal{L}(r)$, together with both equations in (11), the Laplace transform gives
$$\mathcal{L}(y'') + a\mathcal{L}(y') + b\mathcal{L}(y) = \mathcal{L}(r) \;\Rightarrow\; \big[s^2 Y - sy(0) - y'(0)\big] + a\big[sY - y(0)\big] + bY = R(s).$$
This is called the subsidiary equation. We want to solve for $Y$, so collecting terms gives
$$(s^2 + as + b)Y = (s+a)y(0) + y'(0) + R(s).$$
We now introduce the transfer function
$$Q(s) = \frac{1}{s^2 + as + b} = \frac{1}{\left(s + \frac{a}{2}\right)^2 + b - \frac{1}{4}a^2},$$
with which we can write
$$Y = \big[(s+a)y(0) + y'(0)\big]Q(s) + R(s)Q(s).$$
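Because the subsidiary equation is purely algebraic, $Y(s)$ can be evaluated directly from $a$, $b$, $y(0)$, $y'(0)$ and $R(s)$. The sketch below does this and compares the result, at a few sample values of $s$, with a numerical Laplace transform of $y(t) = \frac{3}{5}\cos 2t + \frac{2}{5}e^t - \frac{1}{5}\sin 2t$, the solution of the example $y'' + 4y = 2e^t$, $y(0) = 1$, $y'(0) = 0$ worked later in this section. The function names `laplace_numeric` and `subsidiary_Y` are hypothetical. The numerical transform assumes the integral (5) can be truncated at a finite `T`, which requires $s > 1$ here.

```python
import math


def laplace_numeric(f, s, T=40.0, n=20000):
    """Simpson's-rule approximation of integral_0^T e^{-st} f(t) dt, n even.

    Hypothetical helper; assumes e^{-st} f(t) is negligible beyond T.
    """
    h = T / n
    total = f(0.0) + math.exp(-s * T) * f(T)
    for k in range(1, n):
        t = k * h
        total += (4 if k % 2 else 2) * math.exp(-s * t) * f(t)
    return total * h / 3


def subsidiary_Y(s, a, b, y0, y1, R):
    """Y(s) = [(s + a) y(0) + y'(0)] Q(s) + R(s) Q(s), Q(s) = 1/(s^2 + a s + b)."""
    Q = 1.0 / (s**2 + a * s + b)  # transfer function
    return ((s + a) * y0 + y1) * Q + R(s) * Q


# Example data: y'' + 4y = 2e^t, y(0) = 1, y'(0) = 0, so a = 0 and b = 4
a, b, y0, y1 = 0.0, 4.0, 1.0, 0.0
R = lambda s: 2.0 / (s - 1.0)  # R(s) = L(2e^t), valid for s > 1
y_sol = lambda t: 0.6 * math.cos(2 * t) + 0.4 * math.exp(t) - 0.2 * math.sin(2 * t)

for s in (2.0, 3.0, 5.0):
    print(s, subsidiary_Y(s, a, b, y0, y1, R), laplace_numeric(y_sol, s))
```

The two values on each line should agree. This only checks consistency at sample points; recovering $y(t)$ itself still requires the inverse transform.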
The final step is to take the inverse transform, $y = \mathcal{L}^{-1}(Y)$, which gives the solution. Of course, it is not necessary to calculate $Q(s)$ explicitly: as long as you isolate $Y$, you can solve the ODE. This general method is just a convenient way to understand how solving equations of this type works. Note that $Q$ depends only on the coefficients $a$ and $b$ of the homogeneous equation; the initial conditions and the driving force enter only through the factors that multiply $Q$.

When solving these equations it is often necessary to use partial fraction decomposition to take the inverse transform. If this is unfamiliar, it is advisable to refresh your memory. The next example demonstrates how it works.

Example: Solve the initial value problem
$$y''(t) + 4y(t) = 2e^t, \qquad y(0) = 1, \qquad y'(0) = 0.$$

Solution: The Laplace transform of the equation is
$$\mathcal{L}(y'') + 4\mathcal{L}(y) = 2\mathcal{L}(e^t) \;\Rightarrow\; s^2\mathcal{L}(y) - sy(0) - y'(0) + 4\mathcal{L}(y) = \frac{2}{s-1}.$$
Substituting the initial conditions, we solve for $\mathcal{L}(y)$:
$$(s^2+4)\mathcal{L}(y) = s + \frac{2}{s-1} \;\Rightarrow\; \mathcal{L}(y) = \frac{s}{s^2+4} + \frac{2}{(s-1)(s^2+4)}.$$
We now use partial fraction decomposition on the last term,
$$\frac{2}{(s-1)(s^2+4)} = \frac{A}{s-1} + \frac{Bs+C}{s^2+4},$$
and combining the right-hand side over a common denominator gives
$$\frac{A}{s-1} + \frac{Bs+C}{s^2+4} = \frac{A(s^2+4) + (Bs+C)(s-1)}{(s-1)(s^2+4)}.$$
We then require the numerator to equal 2, which gives the equation
$$2 = A(s^2+4) + (Bs+C)(s-1).$$
This must hold for all $s$, so we can substitute convenient values of $s$ to find the constants. If $s = 1$, then
$$2 = A(1^2+4) + (B(1)+C)(1-1) = 5A \;\Rightarrow\; A = \frac{2}{5}.$$
When $s = 0$, using the value of $A$,
$$2 = A(0^2+4) + (B(0)+C)(0-1) \;\Rightarrow\; 2 = \frac{8}{5} - C \;\Rightarrow\; C = \frac{8}{5} - 2 = -\frac{2}{5}.$$
Finally, using $s = -1$ and the values of $A$ and $C$,
$$2 = A\big((-1)^2+4\big) + \big(B(-1)+C\big)(-1-1) \;\Rightarrow\; 2 = 5\cdot\frac{2}{5} + 2B - 2\left(-\frac{2}{5}\right) \;\Rightarrow\; 2B = 2 - 2 - \frac{4}{5} \;\Rightarrow\; B = -\frac{2}{5}.$$
Substituting into the equation for $\mathcal{L}(y)$, we get
$$\mathcal{L}(y) = \frac{s}{s^2+4} + \frac{2/5}{s-1} + \frac{(-2/5)s + (-2/5)}{s^2+4} = \frac{s}{s^2+4} + \frac{2}{5}\,\frac{1}{s-1} - \frac{2}{5}\,\frac{s}{s^2+4} - \frac{1}{5}\,\frac{2}{s^2+4}.$$
Finally, taking the inverse transform and using Table 1 (with $\omega = 2$), we get
$$y(t) = \cos(2t) + \frac{2}{5}e^t - \frac{2}{5}\cos(2t) - \frac{1}{5}\sin(2t) = \frac{3}{5}\cos(2t) + \frac{2}{5}e^t - \frac{1}{5}\sin(2t).$$
Checking in the original equation $y''(t) + 4y(t) = 2e^t$:
$$-\frac{12}{5}\cos(2t) + \frac{2}{5}e^t + \frac{4}{5}\sin(2t) + 4\left[\frac{3}{5}\cos(2t) + \frac{2}{5}e^t - \frac{1}{5}\sin(2t)\right] = 2e^t \;\Rightarrow\; \frac{2}{5}e^t + \frac{8}{5}e^t = 2e^t \;\Rightarrow\; 2e^t = 2e^t.$$

7.1.4 Heaviside function/Unit step function

We now introduce a more general version of the step function we met previously. The Heaviside function, or unit step function, which we will now denote $u(t-a)$, is
$$u(t-a) = \begin{cases} 0 & \text{if } t < a, \\ 1 & \text{if } t > a. \end{cases} \tag{22}$$
This is shown for $a = 1$ in Figure 1.

[Figure 1: Heaviside function/Unit step function, showing $u(t-1)$ plotted against $t$]

Let's derive the Laplace transform of the step function:
$$\mathcal{L}[u(t-a)] = \int_0^\infty e^{-st} u(t-a)\, dt = \int_0^a e^{-st}(0)\, dt + \int_a^\infty e^{-st}(1)\, dt = \int_a^\infty e^{-st}\, dt = \left[-\frac{1}{s}e^{-st}\right]_a^\infty = -\frac{1}{s}(0) + \frac{1}{s}e^{-as} = \frac{e^{-as}}{s}. \tag{23}$$
We are interested in the step function because it often appears multiplying another function. The Second Shifting Theorem, which we will not prove, says that if $\mathcal{L}[f(t)] = F(s)$, then
$$\mathcal{L}[f(t-a)u(t-a)] = e^{-as}F(s), \tag{24}$$
and, going the other way,
$$f(t-a)u(t-a) = \mathcal{L}^{-1}\left[e^{-as}F(s)\right]. \tag{25}$$
Note that
$$f(t-a)u(t-a) = \begin{cases} 0 & \text{if } t < a, \\ f(t-a) & \text{if } t > a. \end{cases} \tag{26}$$
You should recognise that any function given piecewise in this form can be written using an appropriate step function. The main use of the step function is when we have something like $\dfrac{2e^{-s}}{s^2}$ in a solution to an ODE and need to take its inverse transform. How would we do this?
If we consider it to be of the form $e^{-s}F(s)$ with $F(s) = \dfrac{2}{s^2}$, then we can use equation (25) to give
$$\mathcal{L}^{-1}\left[e^{-s}F(s)\right] = f(t-1)u(t-1), \tag{27}$$
and since $F(s) = \dfrac{2}{s^2}$ corresponds to $f(t) = 2t$ (Table 1 with $n = 1$),
$$\mathcal{L}^{-1}\left[\frac{2e^{-s}}{s^2}\right] = 2(t-1)u(t-1). \tag{28}$$

7.1.5 Dirac delta function

The Dirac delta function is defined by the equations
$$\delta(t-a) = \begin{cases} \infty & \text{if } t = a, \\ 0 & \text{otherwise}, \end{cases} \tag{29}$$
and
$$\int_0^\infty \delta(t-a)\, dt = 1 \tag{30}$$
(here $a > 0$). Even more importantly, because of properties (29) and (30), when inserted in an integral it has the effect of picking out the value of the function at $t = a$, i.e.
$$\int_0^\infty f(t)\delta(t-a)\, dt = f(a). \tag{31}$$
Its Laplace transform is therefore
$$\mathcal{L}[\delta(t-a)] = \int_0^\infty e^{-st}\delta(t-a)\, dt = e^{-sa}, \tag{32}$$
and hence
$$\mathcal{L}^{-1}\left(e^{-sa}\right) = \delta(t-a). \tag{33}$$

Example: Solve the initial value problem
$$y'' - 5y' + 6y = \delta(t-2), \qquad y'(0) = 0, \qquad y(0) = 0.$$

Solution: The Laplace transform gives us
$$\big[s^2\mathcal{L}(y) - sy(0) - y'(0)\big] - 5\big[s\mathcal{L}(y) - y(0)\big] + 6\mathcal{L}(y) = e^{-2s}$$
$$\Rightarrow\; \big[s^2\mathcal{L}(y) - s(0) - (0)\big] - 5\big[s\mathcal{L}(y) - (0)\big] + 6\mathcal{L}(y) = e^{-2s},$$
having inserted the initial conditions. Using the notation $Y = \mathcal{L}(y)$ and simplifying, we get
$$(s^2 - 5s + 6)Y = e^{-2s} \;\Rightarrow\; Y = \frac{e^{-2s}}{s^2 - 5s + 6}.$$
For the fraction, partial fractions give
$$\frac{1}{s^2 - 5s + 6} = \frac{1}{(s-3)(s-2)} = \frac{A}{s-3} + \frac{B}{s-2},$$
which implies the equation $1 = A(s-2) + B(s-3)$. Setting $s = 2$ gives $1 = A(0) + B(2-3) \Rightarrow B = -1$, and setting $s = 3$ gives $1 = A(3-2) + B(0) \Rightarrow A = 1$. Hence
$$\mathcal{L}^{-1}\left[\frac{1}{s^2-5s+6}\right] = \mathcal{L}^{-1}\left[\frac{1}{s-3} - \frac{1}{s-2}\right] = e^{3t} - e^{2t}.$$
What is left is of the form $e^{-2s}F(s)$ with $F(s) = \dfrac{1}{s^2-5s+6}$, so by (25) the exponential produces a step function when we take the inverse transform:
$$y(t) = \mathcal{L}^{-1}(Y) = \mathcal{L}^{-1}\left[\frac{e^{-2s}}{s^2-5s+6}\right] = u(t-2)\left[e^{3(t-2)} - e^{2(t-2)}\right] = u(t-2)\left[e^{3t-6} - e^{2t-4}\right],$$
where we used $f(t) = e^{3t} - e^{2t}$.

Notice that in this example the delta function in the original equation resulted in a step function appearing in the solution. This is a common occurrence. Also notice that we did not check that the solution satisfies the equation.
To do so, you would need to know that $\dfrac{d}{dt}u(t-a) = \delta(t-a)$. You are not expected to understand this, and therefore you are not expected to prove that a solution containing these functions satisfies the original equation.

Example: Solve the equation
$$y''(t) + y(t) = \delta(t-1) + u(t-2),$$
subject to the conditions $y'(0) = 1$, $y(0) = 0$.

Solution: Transforming the equation, we get
$$\big[s^2 Y - sy(0) - y'(0)\big] + Y = e^{-s} + \frac{e^{-2s}}{s}.$$
Substituting the initial conditions and simplifying, we get
$$(s^2+1)Y = 1 + e^{-s} + \frac{e^{-2s}}{s} \;\Rightarrow\; Y = \frac{1}{s^2+1} + \frac{e^{-s}}{s^2+1} + \frac{e^{-2s}}{s(s^2+1)}.$$
Using partial fractions on the final term, we have
$$\frac{1}{s(s^2+1)} = \frac{A}{s} + \frac{Bs+C}{s^2+1},$$
which leads to
$$1 = A(s^2+1) + (Bs+C)s = (A+B)s^2 + Cs + A.$$
On this occasion comparing coefficients is easiest, and requires $A = 1$, $B = -A = -1$ and $C = 0$. Hence
$$Y = \frac{1}{s^2+1} + \frac{e^{-s}}{s^2+1} + \frac{e^{-2s}}{s} - \frac{se^{-2s}}{s^2+1}.$$
Taking the inverse transform (using Table 1 and (25)), this implies
$$y(t) = \sin t + u(t-1)\sin(t-1) + u(t-2) - u(t-2)\cos(t-2).$$

7.1.6 Convolution

If we have the product of two Laplace transforms, $F(s)G(s)$, which function has this product as its transform? From the facts
$$\mathcal{L}[u(t-a)f(t-a)] = e^{-as}F(s), \qquad \mathcal{L}[u(t-a)] = \frac{e^{-as}}{s}, \qquad \mathcal{L}[f(t)] = F(s), \tag{34}$$
we have already seen that the transform of a product is not, in general, the product of the transforms, i.e. $\mathcal{L}(fg) \neq \mathcal{L}(f)\mathcal{L}(g)$. Instead, it turns out that $\mathcal{L}(f)\mathcal{L}(g)$ is the transform of the convolution of $f$ and $g$, defined as
$$h(t) = (f * g)(t) = \int_0^t f(\tau)g(t-\tau)\, d\tau. \tag{35}$$
The notation $f * g$ always means that we convolve the functions in this fashion.

Convolution Theorem: If $F(s)$ and $G(s)$ (the transforms of $f$ and $g$) exist, then $H(s)$ (the transform of $h$) exists and $H = FG$.

We will not prove this, but move straight to applications. What is the point of introducing it? Recall that when we discussed second order ODEs we introduced the transfer function $Q(s)$ and wrote the solution in general as
$$Y = \big[(s+a)y(0) + y'(0)\big]Q(s) + R(s)Q(s).$$
In the earlier examples, after finding the product $R(s)Q(s)$ we had to do a partial fraction decomposition or some other work to find its inverse transform. Instead, we can recognise that $R(s)Q(s)$ is the product of two Laplace transforms, so by the Convolution Theorem its inverse transform is the convolution of $r(t)$ and $q(t)$. We know $r(t)$ from the original equation, and $q(t) = \mathcal{L}^{-1}[Q(s)]$. Calculating $q(t)$ and then the convolution often makes the calculation easier. Let's redo a previous example to illustrate the difference.

Example: Use the Convolution Theorem to solve the initial value problem
$$y'' - 5y' + 6y = \delta(t-2), \qquad y'(0) = 0, \qquad y(0) = 0.$$

Solution: The Laplace transform gives us
$$\big[s^2\mathcal{L}(y) - sy(0) - y'(0)\big] - 5\big[s\mathcal{L}(y) - y(0)\big] + 6\mathcal{L}(y) = e^{-2s}$$
$$\Rightarrow\; \big[s^2\mathcal{L}(y) - s(0) - (0)\big] - 5\big[s\mathcal{L}(y) - (0)\big] + 6\mathcal{L}(y) = e^{-2s},$$
having inserted the initial conditions. Using the notation $Y = \mathcal{L}(y)$ and simplifying, we get
$$(s^2 - 5s + 6)Y = e^{-2s} \;\Rightarrow\; Y = Q(s)R(s),$$
where
$$Q(s) = \frac{1}{s^2 - 5s + 6}, \qquad R(s) = e^{-2s}.$$
For the fraction, partial fractions give
$$\frac{1}{s^2 - 5s + 6} = \frac{1}{(s-3)(s-2)} = \frac{A}{s-3} + \frac{B}{s-2},$$
which implies the equation $1 = A(s-2) + B(s-3)$. Setting $s = 2$ gives $1 = A(0) + B(2-3) \Rightarrow B = -1$, and setting $s = 3$ gives $1 = A(3-2) + B(0) \Rightarrow A = 1$. Hence
$$\mathcal{L}^{-1}\left[\frac{1}{s^2-5s+6}\right] = \mathcal{L}^{-1}\left[\frac{1}{s-3} - \frac{1}{s-2}\right] = e^{3t} - e^{2t}.$$
This is $q(t) = e^{3t} - e^{2t}$. We already know that $r(t) = \delta(t-2)$. Since $Y(s) = Q(s)R(s)$, the Convolution Theorem gives
$$y(t) = \int_0^t r(\tau)q(t-\tau)\, d\tau = \int_0^t \delta(\tau-2)\left[e^{3(t-\tau)} - e^{2(t-\tau)}\right] d\tau.$$
Notice that to evaluate this integral using (31), we need the upper limit to be $\infty$. Now, $\tau \le t$ since $t$ is the upper limit of integration, so there are two possibilities. If $t < 2$, the argument of the delta function is never zero on the interval of integration, so $\delta(\tau-2) = 0$ for all values of $\tau$ in the integral and $y(t) = 0$. On the other hand, if $t > 2$, then $\tau - 2 = 0$ at some point in the interval of integration.
Moreover, every $t > 2$ gives the same result, since the only non-zero contribution comes from $\tau = 2$. Therefore taking the upper limit to be $\infty$ does not change the result, provided $t > 2$. In that case
$$y(t) = \int_0^\infty \delta(\tau-2)\left[e^{3(t-\tau)} - e^{2(t-\tau)}\right] d\tau = e^{3(t-2)} - e^{2(t-2)} = e^{3t-6} - e^{2t-4}.$$
Therefore, depending on the value of $t$, we get
$$y(t) = \begin{cases} e^{3t-6} - e^{2t-4} & \text{if } t > 2, \\ 0 & \text{if } t < 2. \end{cases}$$
This is of the form $u(t-a)f(t-a)$ with $a = 2$ and $f(t) = e^{3t} - e^{2t}$, so we can write it with a step function,
$$y(t) = u(t-2)\left[e^{3t-6} - e^{2t-4}\right],$$
as we found before. If the example had non-zero initial conditions, we would treat them in the usual way; only the method for handling $Q(s)R(s)$ changes.

We can also use the properties of convolution to solve integral equations. This is best illustrated by an example.

Example: Solve
$$y(t) = -\sin t + \int_0^t y(\tau)\sin(t-\tau)\, d\tau$$
using Laplace transforms.

Solution: Denoting $Y(s) = \mathcal{L}(y)$ and writing the equation as $y(t) = -\sin t + (y * \sin)(t)$, we get
$$Y(s) = -\frac{1}{s^2+1} + Y(s)\,\frac{1}{s^2+1},$$
where we used the Convolution Theorem on the second term. Solving for $Y(s)$, we get
$$Y(s)\left[1 - \frac{1}{s^2+1}\right] = -\frac{1}{s^2+1} \;\Rightarrow\; Y(s)\,\frac{s^2}{s^2+1} = -\frac{1}{s^2+1} \;\Rightarrow\; Y(s) = -\frac{1}{s^2}.$$
Hence, using Table 1 with $n = 1$, $y(t) = -t$.
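The convolution (35) can also be evaluated numerically, which gives a quick independent check of the integral-equation solution. The sketch below is illustrative only: the helpers `simpson` and `convolve` are hypothetical names, and the quadrature is ordinary composite Simpson's rule. It checks that $y(t) = -t$ satisfies $y(t) = -\sin t + (y * \sin)(t)$ at a few values of $t$. It also compares $(\sin * \sin)(t)$ with the closed form $\frac{1}{2}(\sin t - t\cos t)$, whose transform is $\frac{1}{(s^2+1)^2} = \mathcal{L}(\sin t)\,\mathcal{L}(\sin t)$, as the Convolution Theorem predicts.

```python
import math


def simpson(g, lo, hi, n=2000):
    """Composite Simpson's rule for integral_lo^hi g(x) dx with n (even) subintervals."""
    h = (hi - lo) / n
    total = g(lo) + g(hi)
    for k in range(1, n):
        total += (4 if k % 2 else 2) * g(lo + k * h)
    return total * h / 3


def convolve(f, g, t, n=2000):
    """(f * g)(t) = integral_0^t f(tau) g(t - tau) dtau, as in definition (35)."""
    return simpson(lambda tau: f(tau) * g(t - tau), 0.0, t, n)


y = lambda t: -t  # candidate solution of the integral equation

for t in (0.5, 1.0, 2.0, 4.0):
    rhs = -math.sin(t) + convolve(y, math.sin, t)        # right-hand side of the equation
    sin_sin = convolve(math.sin, math.sin, t)            # numerical (sin * sin)(t)
    closed = 0.5 * (math.sin(t) - t * math.cos(t))       # closed form of (sin * sin)(t)
    print(t, y(t), rhs, sin_sin, closed)
```

On each line, the second and third numbers should agree, as should the fourth and fifth. A numerical check like this cannot replace the transform argument, but it catches sign or algebra slips quickly.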