Parallel Multiphysics Simulations of Charged Particles in Microfluidic Flows

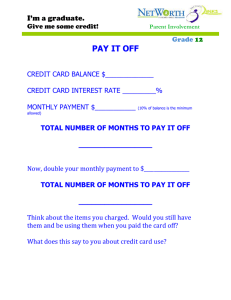


D. Bartuschat, U. Rüde
Chair for System Simulation, FAU Erlangen-Nürnberg
ESCO 2014, 4th European Seminar on Computing, Pilsen, Czech Republic, June 16, 2014
Pilsen, 16.06.2014 - Dominik Bartuschat - System Simulation Group - Parallel Multiphysics Simulations of Charged Particles (Contact: dominik.bartuschat@fau.de)

Outline
● Motivation
● The waLBerla Simulation Framework
● Algorithm for Charged Particles in Fluid Flow
● Simulation Results
● Parallel Performance

Motivation
Simulating the separation (or agglomeration) of charged particles in microfluidic flow under the influence of external electric fields.
● Medical applications:
  ● Optimization of Lab-on-a-Chip systems:
    ● Separation of different cells.
    ● Trapping of cells and viruses.
  ● Deposition of charged aerosol particles in the respiratory tract (e.g., drug delivery).
● Industrial applications (agglomeration):
  ● Filtering particulates from exhaust gases.
  ● Charged particle deposition in the cooling systems of fuel cells.
(Image: © Kang and Li, "Electrokinetic motion of particles and cells in microchannels", Microfluidics and Nanofluidics)

Multi-Physics Simulation
[Figure: coupling between electro(quasi)statics, rigid body dynamics, and fluid dynamics; the coupling labels include charge density, electrostatic force, object movement, and hydrodynamic force.]

The waLBerla Simulation Framework
● Widely applicable lattice Boltzmann framework.
● Suited for various flow applications.
● Large-scale, MPI-based parallelization:
  ● Domain partitioned into a Cartesian grid of blocks assigned to different processes.
  ● MPI communication between the blocks, based on ghost layers.

Charged Particles in Fluid Flow

Lattice Boltzmann Method
$$f_i(\mathbf{x} + \mathbf{c}_i \Delta t,\, t + \Delta t) - f_i(\mathbf{x}, t) = -\frac{1}{\tau}\left(f_i - f_i^{eq}\right)$$
● Discrete lattice Boltzmann equation (single relaxation time, SRT).
  Note: the separation simulation used the two-relaxation-time (TRT) model.
● Domain discretized into cubes (cells).
● Discrete velocities $\mathbf{c}_i$ and associated distribution functions $f_i$ per cell (D3Q19 model).

Stream-Collide
The equation is solved in two steps:
● Stream step: $f_i(\mathbf{x} + \mathbf{c}_i \Delta t,\, t + \Delta t) = \tilde{f}_i(\mathbf{x}, t + \Delta t)$
● Collide step: $\tilde{f}_i(\mathbf{x}, t + \Delta t) = f_i(\mathbf{x}, t) - \frac{1}{\tau}\left(f_i - f_i^{eq}\right)$

Fluid-Particle Interaction - waLBerla and pe (rigid body physics engine)
● Particles are mapped onto the lattice Boltzmann grid.
● Each lattice cell whose center lies inside an object is treated as a moving boundary.
● Hydrodynamic forces of the fluid on a particle are computed by the momentum exchange method*.
* D. Yu, R. Mei, L.-S. Luo, W. Shyy, "Viscous flow computations with the method of lattice Boltzmann equation" (2003)

Poisson Equation and Force on Particles
● The electric potential is described by the Poisson equation, with the particles' charge density on the right-hand side (RHS):
  $$-\Delta\Phi(\mathbf{x}) = \frac{\rho_{particles}(\mathbf{x})}{\varepsilon_r \varepsilon_0}$$
● Discretized by finite volumes.
● Solved with the cell-centered multigrid solver implemented in waLBerla.
● Subsampling is used to compute each cell's degree of overlap with a particle, and the RHS is set accordingly.
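The subsampling step for the Poisson RHS can be sketched as follows. This is a minimal NumPy illustration, not waLBerla code: it assumes a single spherical particle on a uniform cubic grid and regularly spaced sub-sample points per cell, and, as a hypothetical design choice, spreads the particle charge in proportion to each cell's overlap degree so that the discrete total charge equals the particle charge exactly. The names `overlap_field` and `poisson_rhs` are made up for this example, and the relative permittivity used in the demo (78.5, roughly water) is an assumption not stated in the slides; the cell size and particle radius follow the separation run (Δx = 10 µm, radius 80 µm).

```python
import numpy as np

EPS0 = 8.8541878128e-12        # vacuum permittivity [F/m]
E_CHARGE = 1.602176634e-19     # elementary charge [C]


def overlap_field(n, dx, center, radius, samples=4):
    """Overlap degree in [0, 1] of a sphere with each cell of an n^3 grid.

    Every cell is split into samples^3 sub-cells; the overlap degree is the
    fraction of sub-cell midpoints that lie inside the sphere.
    """
    # Sub-sample midpoints along one axis; index k = cell * samples + sub
    sub = (np.arange(n * samples) + 0.5) * dx / samples
    x, y, z = np.meshgrid(sub, sub, sub, indexing="ij")
    inside = ((x - center[0]) ** 2 + (y - center[1]) ** 2
              + (z - center[2]) ** 2) <= radius ** 2
    # Group the samples^3 points of each cell and average the inside flags
    return inside.reshape(n, samples, n, samples, n, samples).mean(axis=(1, 3, 5))


def poisson_rhs(overlap, dx, charge, eps_r):
    """RHS rho / (eps_r * eps_0) of -Laplace(Phi) = rho / (eps_r * eps_0).

    Hypothetical rule: the charge is spread over the cells in proportion to
    their overlap degree, so sum(rho) * dx^3 equals `charge` exactly.
    """
    rho = charge * overlap / (overlap.sum() * dx ** 3)
    return rho / (eps_r * EPS0)


if __name__ == "__main__":
    dx, radius = 10e-6, 80e-6            # 10 um cells, 80 um particle
    center = (100e-6, 100e-6, 100e-6)    # particle centered in a 20^3 block
    ov = overlap_field(20, dx, center, radius)
    # eps_r = 78.5 is an assumed value (water), not taken from the slides
    rhs = poisson_rhs(ov, dx, 1e5 * E_CHARGE, eps_r=78.5)
    print("cells touched:", np.count_nonzero(ov),
          "partially covered:", np.count_nonzero((ov > 0) & (ov < 1)))
```

Cells cut by the particle surface receive a fractional overlap; a larger `samples` value reduces the volume error at the cost of more point tests per cell.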
Electrostatic force on a particle:
$$\vec{F}_q = -q_{particle}\,\nabla\Phi(\mathbf{x})$$

Charged Particles Algorithm
```
foreach time step do
    // solve Poisson equation with particle charge density
    set RHS of Poisson equation
    while residual too high do
        perform multigrid V-cycle to solve Poisson equation
    end
    // solve lattice Boltzmann equation considering particle velocities
    begin
        perform stream step
        compute macroscopic variables
        perform collide step
    end
    // couple potential solver and LBM with pe
    begin
        apply hydrodynamic force to particles
        apply electrostatic force to particles
        pe moves particles depending on forces
    end
end
```

Results

Charged Particles in Fluid Flow
● Computed on 144 cores (12 nodes) of RRZE's LiMa cluster.
● 132,600 time steps.
● TRT LBM with optimal Poiseuille parameter.
● 64³ unknowns per core.
● 6 MG levels.
Setup:
● Channel: 2.56 × 5.76 × 2.56 mm; Δx = 10 µm, Δt = 4⋅10⁻⁵ s, τ = 1.7.
● Particle radius: 80 µm; particle charge: ±10⁵ e.
● Inflow: 1 mm/s; outflow: 0 Pa; other walls: no-slip BCs.
● Potential: ±51.2 V; elsewhere: homogeneous Neumann BCs.

Scaling Setup and Environment
Weak scaling:
● Constant size per core:
  ● 128³ cells.
  ● 9.4% moving obstacle cells.
● Size doubled (y-dimension).
● MG (residual L2-norm ≤ 2⋅10⁻⁹):
  ● V(3,3) with 7 levels.
  ● 10 to 45 CG coarse-grid iterations.
  ● Convergence rate: 0.07.
● 2×4×2 cores per node.
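The V(3,3) cycle with 7 levels listed above can be illustrated by a minimal one-dimensional sketch of a cell-centered multigrid solver for $-u'' = f$ on $(0, 1)$ with homogeneous Dirichlet boundaries. It is not the waLBerla solver: it is 1D, the lexicographic Gauss-Seidel smoother, averaging restriction, and linear interpolation are assumptions (the slides do not name these components), and a dense direct solve stands in for the CG coarse-grid solver. With 128 cells and 7 levels the coarsest grid has 2 cells, mirroring the 128 cells per dimension and core in the scaling setup. The convergence rate is taken as the average residual reduction per cycle.

```python
import numpy as np


def apply_A(u, h):
    """Cell-centered -u'' with homogeneous Dirichlet BCs.

    Ghost cells mirror the boundary values with opposite sign, so the
    linear interpolant vanishes on the domain boundary.
    """
    up = np.concatenate(([-u[0]], u, [-u[-1]]))
    return (-up[:-2] + 2.0 * up[1:-1] - up[2:]) / h**2


def gauss_seidel(u, f, h, sweeps):
    """Lexicographic Gauss-Seidel sweeps (in place) for apply_A(u, h) = f."""
    n = len(u)
    for _ in range(sweeps):
        for i in range(n):
            diag, nb = 2.0, 0.0
            if i > 0:
                nb += u[i - 1]
            else:
                diag += 1.0          # left ghost -u[0] moves to the diagonal
            if i < n - 1:
                nb += u[i + 1]
            else:
                diag += 1.0          # right ghost -u[-1] moves to the diagonal
            u[i] = (h * h * f[i] + nb) / diag


def prolong(ec):
    """Linear interpolation from coarse to fine cell centers."""
    g = np.concatenate(([-ec[0]], ec, [-ec[-1]]))
    e = np.empty(2 * len(ec))
    e[0::2] = 0.75 * g[1:-1] + 0.25 * g[:-2]   # left child of each coarse cell
    e[1::2] = 0.75 * g[1:-1] + 0.25 * g[2:]    # right child
    return e


def v_cycle(u, f, h, levels, nu1=3, nu2=3):
    """One V(nu1, nu2) cycle; returns the updated approximation."""
    if levels == 1:
        # Coarsest grid: dense direct solve (stands in for CG)
        A = np.array([apply_A(col, h) for col in np.eye(len(u))]).T
        return np.linalg.solve(A, f)
    gauss_seidel(u, f, h, nu1)                       # pre-smoothing
    r = f - apply_A(u, h)
    rc = 0.5 * (r[0::2] + r[1::2])                   # restrict: average pairs
    ec = v_cycle(np.zeros(len(rc)), rc, 2.0 * h, levels - 1, nu1, nu2)
    u += prolong(ec)                                 # coarse-grid correction
    gauss_seidel(u, f, h, nu2)                       # post-smoothing
    return u


def solve(n=128, levels=7, rel_tol=1e-8, max_cycles=30):
    """Repeat V-cycles until the residual L2-norm drops by rel_tol."""
    h = 1.0 / n
    x = (np.arange(n) + 0.5) * h
    f = np.pi**2 * np.sin(np.pi * x)                 # exact solution sin(pi x)
    u = np.zeros(n)
    res = [np.linalg.norm(f - apply_A(u, h))]
    while res[-1] > rel_tol * res[0] and len(res) <= max_cycles:
        u = v_cycle(u, f, h, levels)
        res.append(np.linalg.norm(f - apply_A(u, h)))
    return x, u, res


if __name__ == "__main__":
    x, u, res = solve()
    rate = (res[-1] / res[0]) ** (1.0 / (len(res) - 1))
    print(f"{len(res) - 1} V(3,3) cycles, average convergence rate {rate:.3f}")
```

Unlike the slides, the sketch stops on a relative residual reduction rather than an absolute L2-norm threshold, and the rate it measures for this toy problem is not comparable to the 0.07 reported for the 3D solver; it only shows how such a rate is obtained.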
Executed on LRZ's SuperMUC:
● 9216 compute nodes (thin islands), each with:
  ● 2 Xeon "Sandy Bridge-EP" chips @ 2.7 GHz,
  ● 32 GB DDR3 RAM,
  ● Infiniband interconnect.
● Ranked #10 in the TOP500 at the time of the talk.

Weak Scaling for 240 Time Steps
[Figure: LBM performance in MFLUPs (mega fluid lattice site updates per second) and MG performance in MLUPs (mega lattice site updates per second), both axes 0 to 24000, versus number of nodes (axis 0 to 500); the largest run uses 8192 cores and 1.77M particles.]
● Parallel efficiency @ 512 nodes: LBM 94 %, MG (1 V(3,3)) 59 %.
➡ LBM scales nearly ideally.
➡ MG scales reasonably well; its performance is restricted by the coarsest-grid solve and the L2-norm computation.

Summary
● Parallel multi-physics algorithm for charged particles in fluid flow.
● First results for the separation of charged particles.
● Weak scaling performance results of the main components, the multigrid solver and the LBM.
● Extension (work in progress):
  ● Considering the ions surrounding the charged objects (electrophoretic retardation).
  ● Solving the Poisson-Boltzmann equation instead of the Poisson equation.

Thank you for your attention!