Running Microsoft Windows Server on HPE Integrity Superdome X

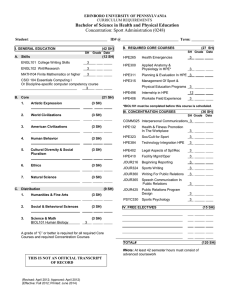

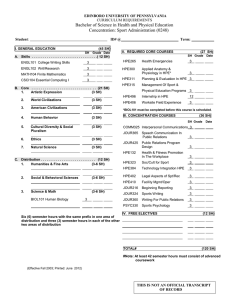

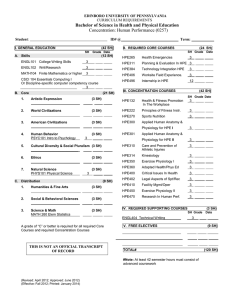

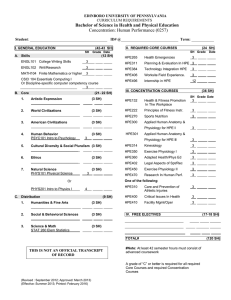

Scale-up your Windows SQL environment to new heights

Technical white paper

Contents

• HPE Integrity Superdome X redefines mission-critical x86 compute
• HPE Integrity Superdome X
• Hardware overview
• Server blade building block
• Hardware differentiators
• Maximum configurations for Microsoft Windows Server 2012 R2
• Recommended hardware configuration
• HPE Superdome X Server
• Software overview
• Operating systems
• HPE Software components for Windows support on HPE Superdome X
• Microsoft Windows Server 2012 R2 Installation
• Recommendations for Windows Setup
• Installation steps
• Recommended software configuration and tuning
• Operating system
• Software advisory notes
• Finding customer advisories
• General known issues
• Issues related to the Windows environment

HPE Integrity Superdome X redefines mission-critical x86 compute

HPE Integrity Superdome X redefines mission-critical compute by blending the best of x86 efficiencies with proven HPE mission-critical innovations to deliver groundbreaking performance and a superior uptime experience. Based on HPE BL920s Gen8 and Gen9 Server Blades, the Integrity Superdome X offers breakthrough scalability and uncompromising reliability. Combined with the Microsoft® Windows® operating system, this builds a powerful environment to support the most demanding data-intensive workloads, with the reliability, availability, and efficiency needed for your most critical applications. This paper explains the best ways to run Microsoft Windows Server® 2012 R2 on the HPE Integrity Superdome X.

HPE Integrity Superdome X

HPE Integrity Superdome X is an x86-based server, purposefully and exceptionally well-designed to support the largest scale-up database workloads with up to 288 cores, 24 TB of RAM, and close to 1 TB of I/O bandwidth.
Whether you have a large number of concurrent, short-lived queries or large, complex ones, the powerful architecture of Superdome X will deliver high performance and low latency for your decision support and business processing workloads.

Featuring a modular and well-balanced architecture, Superdome X provides the ultimate flexibility for your mission-critical environment. An efficient bladed form factor allows you to start small and grow as your business demands increase. If your databases are growing, if you need to support the rollout of new applications, or if your client usage increases, you can efficiently scale up your environment by adding blades. You can start with a configuration as small as two sockets and scale up all the way to 16 sockets, to support applications expanding beyond the limits of standard x86 server offerings. Additionally, the HPE hard partitioning technology, HPE nPars, enables you to deploy different workloads in different partitions that are secure and electrically isolated from one another, which makes it an ideal environment to consolidate multiple applications.

Featuring a comprehensive set of RAS capabilities traditionally offered only on proprietary servers, Superdome X provides the ideal foundation to run your mission-critical applications on standard x86 operating systems.

HPE Superdome X supports Microsoft Windows Server 2012 R2 Standard and Datacenter Edition. This combination provides the ideal platform to deploy your most-demanding Microsoft SQL Server databases and applications. This solution is the result of the long-standing relationship and technical collaboration between Hewlett Packard Enterprise and Microsoft, delivering the scalability and reliability necessary to address the most-demanding mission-critical requirements of your enterprise applications.

Microsoft SQL Server 2014 includes key in-memory capabilities across all workloads including online transaction processing (OLTP), data warehouse, and business intelligence.
The new in-memory OLTP engine component allows memory-optimized tables to reside in memory, improving transactional throughput and lowering latency. With these capabilities, you can run large database workloads in a scale-up architecture and gain the benefits of this type of Windows/SQL Server deployment, such as less complexity and lower OPEX. You can also consolidate many database engines and workloads on a single OS instance server and assign the right resources to match your requirements and SLAs. You can even create smaller servers to run your various applications on the same enclosure in a more isolated environment.

In short, the combination of SQL Server and HPE Superdome X provides a platform delivering new levels of performance, flexibility, and scalability for your Windows mission-critical applications and SQL Server databases.

Hardware overview

HPE Superdome X products consist of two main components: the HPE BladeSystem Superdome Enclosure and the HPE BL920s Gen8 and Gen9 Server Blades. The enclosure has the capacity to accommodate eight BL920s server blades, each with two sockets containing an Intel® Xeon® processor E7 v2 (Gen8) or an Intel Xeon processor E7 v3 (Gen9). Together, these components are referred to as the complex.

The eight server blades can be part of a single hardware partition (HPE nPartition, or HPE nPar for short), or optionally carved into smaller HPE nPars of one, two, three (Gen9 only), or four server blades each. The servers (HPE nPars) are logically and electrically isolated from each other, so they can function independently. This flexibility allows each server to be appropriately sized for any workload.

Note: See the HPE Integrity Superdome X QuickSpecs for more detailed information about hardware configurations and specifications.

Server blade building block

The conceptual block diagram of the computing resources in the HPE BL920s Server Blade is shown in figure 1.
This simplified diagram shows the server blade I/O (two flexible LAN on motherboard [LOM] slots and three mezzanine slots), two processor sockets, and the memory associated with each processor.

Figure 1. Conceptual block diagram of the HPE BL920s Server Blade

Each server blade supports two different types of removable I/O cards: mezzanine cards and LOM modules. The three mezzanine slots can accept PCIe Gen3 host bus adapters. One or two LOM modules can be installed on each server blade.

Processors

HPE BL920s Gen8 Server Blades support the Intel Xeon Processor E7 v2, while the Gen9 Server Blades support the Intel Xeon Processor E7 v3, as specified in table 1.

Table 1. HPE BL920s Gen8 and Gen9 Server Blade supported processor matrix (Intel Xeon Processor E7)

| Processor (Gen8) | Cores per processor | Frequency | Cache | Power |
|---|---|---|---|---|
| Intel Xeon Processor E7-2890 v2 | 15c | 2.8 GHz (3.4 GHz Turbo) | 37.5 MB | 155 W |
| Intel Xeon Processor E7-2880 v2 | 15c | 2.5 GHz (3.1 GHz Turbo) | 37.5 MB | 130 W |
| Intel Xeon Processor E7-8891 v2 | 10c | 3.2 GHz (3.7 GHz Turbo) | 37.5 MB | 155 W |
| Intel Xeon Processor E7-4830 v2 | 10c | 2.2 GHz (2.7 GHz Turbo) | 20 MB | 105 W |
| Intel Xeon Processor E7-8893 v2 | 6c | 3.4 GHz (3.7 GHz Turbo) | 37.5 MB | 155 W |

| Processor (Gen9) | Cores per processor | Frequency | Cache | Power |
|---|---|---|---|---|
| Intel Xeon Processor E7-8890 v3 | 18c | 2.5 GHz (3.3 GHz Turbo) | 45 MB | 165 W |
| Intel Xeon Processor E7-8880 v3 | 18c | 2.3 GHz (3.1 GHz Turbo) | 45 MB | 150 W |
| Intel Xeon Processor E7-4850 v3 | 14c | 2.2 GHz (2.8 GHz Turbo) | 35 MB | 115 W |
| Intel Xeon Processor E7-8891 v3 | 10c | 2.8 GHz (3.5 GHz Turbo) | 45 MB | 165 W |
| Intel Xeon Processor E7-8893 v3 | 4c | 3.2 GHz (3.5 GHz Turbo) | 45 MB | 140 W |

Memory

There are 24 DIMM slots associated with each socket, for 48 slots per server blade.
• The 48 slots are arranged as 16 channels of three DIMM positions each, using DDR4 memory (Gen9) or DDR3 memory (Gen8).
• The DIMMs must be loaded in groups of 16, which gives 16, 32, or 48 DIMMs per server blade.
• Gen8: The DIMM slots accept DDR3 DIMMs with a capacity of 16 GB or 32 GB. The maximum memory configuration is therefore 1.5 TB per server blade using 32 GB DIMMs.
• Gen9: The DIMM slots accept DDR4 DIMMs with a capacity of 16 GB, 32 GB, or 64 GB. The maximum memory configuration is therefore 3 TB per server blade using 64 GB DIMMs.
• General memory configuration rules:
– For best performance, the amount of memory on each blade within the partition should be the same.
– Use the same amount of memory on each processor module within a partition.
– Where possible, use fewer, larger DIMMs to get the best performance (for example, use 16x 32 GB DIMMs instead of 32x 16 GB DIMMs if you need 512 GB of memory per blade).

Note: The 16 GB DIMMs are only supported in a 16-DIMM configuration (both minimum and maximum) per BL920s Gen8 Server Blade, which gives 256 GB of RAM per blade.

Table 2. Supported memory configurations for Superdome X

| | BL920s Gen8 | BL920s Gen9 |
|---|---|---|
| Double data rate type | DDR3 | DDR4 |
| Max. memory per complex | 12 TB | 24 TB |
| DIMM slots per server | 48 | 48 |
| DIMM sizes | 16 GB, 32 GB | 16 GB, 32 GB, 64 GB |

I/O adapters

Table 3 lists the PCIe Gen3 adapters supported with Windows Server 2012 R2 in the BL920s Gen8 and Gen9 Server Blades. These I/O adapters have been qualified against the rigorous quality standards of Hewlett Packard Enterprise. More adapters will be available in the future.

Table 3. I/O adapters supported in Superdome X

| Type | Product | Part number |
|---|---|---|
| Mezzanine Fibre Channel adapter | HPE QMH2672 16Gb FC HBA | 710608-B21 |
| Gen8 Mezzanine Ethernet adapter | HPE Ethernet 10Gb 2-port 560M Adapter | 665246-B21 |
| Gen8 LOM Ethernet adapter | HPE Ethernet 10Gb 2-port 560FLB Adapter | 655639-B21 |
| Gen9 Mezzanine Ethernet adapter | HPE FlexFabric 20Gb 2-port 630M Adapter | 700076-B21 |
| Gen9 LOM Ethernet adapter | HPE FlexFabric 20Gb 2-port 630FLB Adapter | 702213-B21 |

• Flexible LOM slots
– At least one HPE Ethernet 10Gb 2-port 560FLB Adapter (655639-B21), installed in LOM slot 1, is required in each partition based on Gen8 blade servers, or one HPE FlexFabric 20Gb 2-port 630FLB Adapter (700065-B21) in each partition based on Gen9 blade servers. A second, optional LOM can be installed in LOM slot 2. There can be between eight and 16 network LOM adapters per enclosure.
• Mezzanine slots
– HPE QMH2672 16Gb Fibre Channel Host Bus Adapter: One 16 Gb FC adapter in mezzanine slot 2 per partition is required for SAN boot. Each HPE nPar needs a minimum of one FC adapter and supports a maximum of eight for an eight-blade partition.
– HPE Ethernet 10Gb 2-port 560M Adapter (665246-B21) for Gen8 or HPE FlexFabric 20Gb 2-port 630M Adapter (700076-B21) for Gen9: Depending on the server requirements (nPar size), it is possible to have zero to eight adapters (located in blade mezzanine slot 1, for an eight-blade partition).

Table 4. Minimum and maximum I/O adapters per BL920s Gen8, Gen9 and hardware partition (nPar)

| I/O adapter | Location | Single blade | nPar | Gen8 | Gen9 |
|---|---|---|---|---|---|
| 710608-B21: HPE QMH2672 16Gb FC HBA | Mezzanine 2 | Min 0, Max 1 | Min 1, Max 8 | x | x |
| 665246-B21: HPE Ethernet 10Gb 2-port 560M Adapter | Mezzanine 1 | Min 0, Max 1 | Min 0, Max 8 | x | |
| 700076-B21: HPE FlexFabric 20Gb 2-port 630M Adapter | Mezzanine 1 | Min 0, Max 1 | Min 0, Max 8 | | x |
| 700076-B21: HPE FlexFabric 20Gb 2-port 630M Adapter | Mezzanine 3 | Min 0, Max 1 | Min 0, Max 8 | | x |
| 655639-B21: HPE Ethernet 10Gb 2-port 560FLB Adapter | LOM 1 | Min 1, Max 1 | Min 1, Max 8 | x | |
| 700065-B21: HPE FlexFabric 20Gb 2-port 630FLB Adapter | LOM 1 | Min 1, Max 1 | Min 1, Max 8 | | x |
| 655639-B21: HPE Ethernet 10Gb 2-port 560FLB Adapter | LOM 2 | Min 0, Max 1 | Min 0, Max 8 | x | |
| 700065-B21: HPE FlexFabric 20Gb 2-port 630FLB Adapter | LOM 2 | Min 0, Max 1 | Min 0, Max 8 | | x |

Note: For a configuration to be valid, both the blade and the nPar minimum and maximum limits must be met. It is best to have at least one LOM and one Fibre Channel adapter per blade for maximum flexibility. In this scenario, an nPar can be made with just a single blade.

A 16-socket (eight-blade) Superdome X system may be configured with 1 to 24 dual-port Fibre Channel mezzanine controllers. There may also be between 8 and 16 dual-port network LOM adapters; one is required in LOM slot 1, while an optional one may be used in LOM slot 2. It is also possible to add up to 24 additional network mezzanine controllers, supported in any available mezzanine slot. In all cases, each HPE BL920s server blade supports a maximum of two LOMs and three mezzanine cards.

Table 5. Interconnect modules supported in Superdome X

| Type | Product | Part number |
|---|---|---|
| Fibre Channel interconnect | Brocade 16Gb/16 SAN Switch for BladeSystem c-Class | C8S45A |
| Fibre Channel interconnect | Brocade 16Gb/28 SAN Switch Power Pack+ for BladeSystem c-Class | C8S47A |
| Ethernet interconnect (pass-thru) | HPE 10GbE Ethernet Pass-Thru Module for c-Class BladeSystem | 538113-B21 |
| Ethernet interconnect | HPE 6125XLG Ethernet Blade Switch | 711307-B21 |
| Ethernet interconnect | HPE 6125G Blade Switch Kit | 658247-B21 |
| Ethernet interconnect | HPE 6127XLG 20Gb Ethernet blade Switch | 787635-B21 |

One Fibre Channel interconnect module must be installed in I/O bay 5, and another module may be installed in I/O bay 6, in the back of the enclosure to provide SAN storage connectivity and SAN boot capabilities. One of the Ethernet interconnects must be installed in I/O bay 1; a second Ethernet interconnect of the same type may be installed in I/O bay 2, if desired. If optional mezzanine adapters are used, then the corresponding interconnect modules of the same type can be installed in I/O bays 3 and 4. See the configuration options table in the HPE Integrity Superdome X Server QuickSpecs document for more information: hp.com/h20195/v2/GetDocument.aspx?docname=c04383189.

16-socket configuration: Eight server blades installed into an HPE Integrity Superdome X enclosure result in the server structure shown in figure 2.

Figure 2. Conceptual block diagram of eight HPE BL920s Server Blades

The Superdome X server can be partitioned into a pair of eight-socket servers (8S, four blades each). In this case, for best performance, configure server blades 1/1, 1/3, 1/5, and 1/7 as one server, and server blades 1/2, 1/4, 1/6, and 1/8 as another independent server.

You can also create 2S (one blade) and 4S (two blades) partitions. The nPar sizing rules require that all BL920s blades in the same partition be loaded either in odd slots or in even slots. Additionally, with Gen9 blade servers only, it is possible to create 6S (three blades) partitions using the same slot loading rules.
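The partition sizing rules above can be expressed as a small check. The following Python sketch is illustrative only: the function name `is_valid_npar` and its arguments are hypothetical, and it encodes just the rules stated in this section (partitions of one, two, four, or eight blades, three blades on Gen9 only, and all-odd or all-even slot loading). The nPar tooling on the OA may enforce additional constraints that are not described here.

```python
def is_valid_npar(slots, generation):
    """Check a proposed nPar against the sizing rules stated in this paper.

    slots: blade slot numbers (1-8) proposed for one partition.
    generation: "Gen8" or "Gen9" (hypothetical labels for this sketch).
    """
    blades = sorted(set(slots))
    if not blades or blades[0] < 1 or blades[-1] > 8:
        return False

    # Allowed partition sizes; three-blade (6S) partitions are Gen9 only.
    allowed_sizes = {1, 2, 4, 8}
    if generation == "Gen9":
        allowed_sizes.add(3)
    if len(blades) not in allowed_sizes:
        return False

    # A full eight-blade partition uses every slot; smaller partitions
    # must be loaded entirely in odd slots or entirely in even slots.
    if len(blades) == 8:
        return True
    return len({slot % 2 for slot in blades}) == 1


print(is_valid_npar([1, 3, 5, 7], "Gen8"))  # four blades, all odd slots
print(is_valid_npar([1, 2], "Gen8"))        # mixes odd and even slots
print(is_valid_npar([2, 4, 6], "Gen9"))     # three blades, Gen9 only
```

A check like this could be run against a planned layout before creating partitions, but it is no substitute for the validation performed by the platform itself.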
You can see that the Superdome X has a modular structure. The most basic building block is the processor and its associated socket local memory. Each processor has an embedded memory controller, through which it has extremely fast access to its local memory. The processors are combined in pairs, connected by Intel QuickPath Interconnect (QPI) links. Communication among server blades is facilitated through the HPE crossbar fabric. The fabric is built upon the HPE sx3000 chipset, a new version of the chipset that formed the backbone of HPE Integrity Superdome 2 servers.

This structure gives the Superdome X a non-uniform memory access (NUMA) architecture: the latency for any given processor to access memory depends on the relative positioning of the processor socket and the memory DIMM. A memory transaction that traverses the interconnect fabric takes somewhat longer than an access to socket local memory. This fact is important when tuning for optimal memory placement, as described in the NUMA considerations section at the end of this document (in “Recommended software configuration and tuning”).

Hardware differentiators

The Superdome X platform offers a variety of features that make it superior to other server platforms in terms of reliability, availability, and serviceability (RAS) and scalability.

Flexible scalability

The Superdome X can be ordered from the factory as a system from two sockets (one server blade) to 16 sockets (eight server blades).

Unified extensible firmware interface

The Superdome X is a pure unified extensible firmware interface (UEFI) implementation. As such, it overcomes the limitations of the older BIOS approach: it can boot from extremely large disks, and the boot process is faster because of the larger block transfers.

HPE Onboard Administrator

Onboard Administrator (OA) is a standard component of HPE BladeSystem enclosures that provides complex health and remote complex manageability.
OA includes an intelligent microprocessor, secure memory, and a dedicated network interface, and it interfaces with the HPE Integrated Lights-Out (iLO) subsystem on each server blade. This design makes OA independent of the host server blade and operating system. OA provides remote access to any authorized network client, sends alerts, and provides other server blade management functions.

Using OA, you can:
• Power up, power down, or reboot partitions remotely
• Send alerts from the OA regardless of the state of the complex
• Access advanced troubleshooting features through the OA interface
• Diagnose the complex through a Web browser

For more information about OA features, see the Superdome X Onboard Administrator user guide available at h20564.www2.hpe.com/hpsc/doc/public/display?docId=c04389052.

Firmware-First error containment and recovery

Superdome X platforms facilitate the containment of errors through Firmware-First error handling, which enhances the Intel Machine Check Architecture. Firmware-First covers correctable and uncorrectable errors, and gives firmware the ability to collect error data and diagnose faults even when the system processors have limited functionality. Firmware-First enables many platform-specific actions for faults, including predictive fault analysis for system memory, CPUs, I/O, and interconnect.

Core analysis engine (CAE): CAE runs on the OA, which moves platform diagnostic capabilities to the firmware level, so it can drive self-healing actions and report failures even when the OS is unable to boot. It also provides possible reasons for failures as well as possible actions for repair and detailed replacement part numbers. This speeds recovery and reduces downtime.

Windows system event logs: The HPE Mission Critical WBEM Providers for Windows Server x64 Edition ensure relevant events are passed from the hardware and firmware to the Windows system event log.
Likewise, some of the OS events or alerts are passed to the hardware so that information is tracked and can be analyzed, contained, or worked around.

OA live logs: If the system goes down and the OS is not available, the user can use the OA system event log (SEL) and forward progress log (FPL) to see if an error occurred. These errors will also be sent to remote management systems like HPE Insight Remote Support (IRS). For more information about IRS, see hp.com/us/en/business-services/it-services.html?compURI=1078312.

Double Device Data Correction: Double Device Data Correction + 1 “bit” (DDDC + 1), also known as double-chip sparing, is a robust and efficient method of memory sparing. It can detect and correct single- and double-DRAM device errors for every x4 DIMM in the server. By reserving one DRAM device in each rank as a spare, it enables data availability after hardware failures with any x4 DRAM devices on any DIMM. In the unlikely occurrence of a second DRAM failure within a DIMM, the platform firmware alerts you to replace that DIMM gracefully without a system crash. The additional 1-bit protection further helps correct single-bit errors (e.g., due to cosmic rays) while a DIMM has entered dual-device correction mode and is tagged for replacement. DDDC + 1 provides the highest level of memory RAS protection with no performance impact and no reserved memory in Intel Xeon E7-based servers; the full system memory is available for use.

Memory error recovery with Microsoft Windows Server and SQL Server: Furthermore, Hewlett Packard Enterprise has worked with Microsoft to give the operating system awareness of uncorrectable memory errors that would cause the system to crash on most industry-standard servers. Microsoft Windows Server 2012 R2 as well as Microsoft SQL Server (2012 and 2014) support memory error recovery.
Memory errors are detected and corrected automatically using hardware and firmware mechanisms, but there are occasions where they cannot be corrected and become “uncorrectable”. In that case, a machine check exception is generated, and the firmware logs it and reports it to the operating system. If the affected memory page is not in use, or can be freed by the application or by Windows, then the page is unmapped, marked as bad, and taken out of service. Information is reported in the system event log, but the system does not crash, and an operation to replace the faulty component can be scheduled later when convenient. For SQL Server, see support.microsoft.com/kb/2967651, which describes how SQL Server handles notification of memory errors within its buffer pool.

For more details about the HPE Integrity Superdome X system architecture and RAS, refer to hp.com/h20195/v2/GetDocument.aspx?docname=4AA5-6824ENW.

Maximum configurations for Microsoft Windows Server 2012 R2

Microsoft Windows Server 2012 R2 supports a maximum of 64 sockets and 4 TB of RAM. nPars should therefore not exceed 4 TB of RAM, but they can comprise multiple blades, as shown in table 6.

Table 6. Maximum configurations for Windows Server 2012 R2

| | 16-socket | 8-socket | 4-socket | 2-socket |
|---|---|---|---|---|
| Processor cores (18 cores per socket) | 288 | 144 | 72 | 36 |
| Logical processors (Hyper-Threading on) | 576 | 288 | 144 | 72 |
| Memory capacity (16 GB DIMMs) | 2 TB | 1 TB | 512 GB | 256 GB |
| Memory capacity (32 GB DIMMs) | 4 TB* | 4 TB* | 3 TB | 1.5 TB |
| Memory capacity (64 GB DIMMs) | 4 TB* | 4 TB* | 4 TB | 3 TB |
| Mezzanine slots | 24 | 12 | 6 | 3 |
| LOM | 16 | 8 | 4 | 2 |

* This is the current Windows limit (not a hardware limit).

Note: You can use other configurations. Table 6 shows only the maximum ones.
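The figures in table 6 follow from simple arithmetic on the per-blade numbers given earlier. The Python sketch below reproduces that arithmetic as an illustration; the function name `npar_maximums` and the constant names are hypothetical, and the sketch assumes 18-core processors, Hyper-Threading enabled, and 48 populated DIMM slots per blade unless stated otherwise (16 GB DIMMs are limited to 16 per Gen8 blade according to the memory note in this paper).

```python
WINDOWS_MAX_RAM_GB = 4 * 1024  # 4 TB Windows Server 2012 R2 limit cited above
SOCKETS_PER_BLADE = 2
CORES_PER_SOCKET = 18          # 18-core E7 v3 parts, as assumed by table 6
THREADS_PER_CORE = 2           # Hyper-Threading on


def npar_maximums(blades, dimm_gb, dimms_per_blade=48):
    """Return socket, core, and memory figures for an nPar of `blades` blades."""
    sockets = blades * SOCKETS_PER_BLADE
    cores = sockets * CORES_PER_SOCKET
    installed_gb = blades * dimms_per_blade * dimm_gb
    return {
        "sockets": sockets,
        "cores": cores,
        "logical_processors": cores * THREADS_PER_CORE,
        "installed_gb": installed_gb,
        # Capped at the Windows limit, which is lower than the hardware limit.
        "usable_gb": min(installed_gb, WINDOWS_MAX_RAM_GB),
    }


for blades in (8, 4, 2, 1):
    print(npar_maximums(blades, dimm_gb=64))
```

For example, `npar_maximums(1, 16, dimms_per_blade=16)` models a single blade populated with sixteen 16 GB DIMMs.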
Recommended hardware configuration

HPE Superdome X Server

Minimum firmware release for Windows Server 2012 R2 support

The minimum Superdome X Server firmware version that supports Windows Server 2012 R2 with BL920s Gen8 blade servers is 5.73.0, corresponding to HPE Superdome X Server firmware bundle 5.73.0(b) or 2014.10(a). For BL920s Gen9 blade servers, HPE recommends running at least Superdome X firmware bundle version 7.6.0.

For supported I/O firmware information, refer to the Superdome X firmware matrix at the following link: h20566.www2.hpe.com/hpsc/doc/public/display?sp4ts.oid=7161269&docId=emr_na-c04739260

The Superdome X IO firmware and Windows software components should be updated using the “Superdome X IO Firmware and Windows Drivers image”, version 2016.01 (or later). Note that HPE recommends updating the I/O firmware offline, while Windows drivers and software may be updated online. For more information, see the release notes in the “Superdome X IO Firmware and Windows Drivers image”, located on the Superdome X download page on HPE Support Center, under “Software - CD ROM”.

Hyper-Threading

The Intel Xeon Processor E7 family implements the Intel® Hyper-Threading Technology feature. When enabled, Hyper-Threading allows a single physical processor core to run two independent threads of program execution. The operating system can use both logical processors, which often results in an increase in system performance. However, the two logical processors incur some overhead while sharing the common physical resources, so the pair neither has the same processing power nor consumes the same electrical power as two physical cores. Beyond that, the increased parallelism can cause increased contention for shared software resources, so some workloads can experience degradation when Hyper-Threading is enabled.

On the Superdome X, the Hyper-Threading state is an attribute of the partition; it is therefore controlled through interaction with the OA.
By default, Hyper-Threading is enabled. The current state can be ascertained through the Onboard Administrator parstatus command:

    parstatus -p <partition identifier> -V
    Hyper Threading at activation: Enabled
    Hyper Threading at next boot: Enabled

Note: Both the current state and the state after the next boot are shown, because any change to the Hyper-Threading state does not take effect until the partition is rebooted.

The OA parmodify command can be used to change the Hyper-Threading state:

    parmodify -p <partition identifier> -T [y|n]

As a general rule of thumb, it may be desirable to change Hyper-Threading from the disabled to the enabled state if the running workload is fully utilizing all of the physical cores, as evidenced by a high value for CPU utilization. Another case where Hyper-Threading would be beneficial is if the physical cores are spending a relatively large amount of time waiting for cache misses to be satisfied.

If Hyper-Threading is enabled, it may help to disable it if the running applications tend to consume the entire capacity of the processor cache. The two logical processors share a common physical cache, so they may interfere with each other if the application cache footprint is large.

Windows standard performance analysis tools can be used to gain insight into the benefit or detriment of using Hyper-Threading. You may simply want to run your workload in both states to see which one works better for your particular application.

Memory

For performance reasons, it is highly recommended to have the same amount of memory on each server blade in a partition.

Power management

The Superdome X implements a variety of mechanisms to govern the trade-off between power savings and peak performance.
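Returning to the Hyper-Threading state reported by parstatus: because a change only takes effect at the next reboot, it can be useful to compare the two reported lines. The Python sketch below is an illustration that parses only the two “Hyper Threading at ...” lines shown in the Hyper-Threading discussion above. The function names are hypothetical, the rest of the parstatus output is not shown in this paper and is simply ignored, and the sample text with a pending change is made up for the example.

```python
PREFIX = "Hyper Threading at "


def parse_hyper_threading(parstatus_text):
    """Extract the Hyper-Threading states from captured parstatus output.

    Returns a dict such as {"activation": True, "next boot": False},
    where True means the line reported "Enabled".
    """
    states = {}
    for line in parstatus_text.splitlines():
        label, sep, value = line.strip().partition(":")
        if sep and label.startswith(PREFIX):
            states[label[len(PREFIX):]] = value.strip().lower() == "enabled"
    return states


def change_pending(states):
    """True when the state at next boot differs from the active state."""
    return states.get("activation") != states.get("next boot")


# Hypothetical capture: Hyper-Threading was turned off with parmodify,
# but the partition has not been rebooted yet.
sample = """\
Hyper Threading at activation: Enabled
Hyper Threading at next boot: Disabled
"""

states = parse_hyper_threading(sample)
print(states)
print(change_pending(states))
```

How the parstatus output is captured from the OA (for example, over a remote session) is left out of this sketch.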
I/O

Hewlett Packard Enterprise recommends that all disks connected to the Superdome X over the two-port QLogic 16 Gb FC adapters be multipathed for high reliability and hardware availability, with paths spread across multiple blades within the same nPar for higher redundancy. If one blade fails, the system will still function, provided it can still access the storage through a surviving blade. Also, because the configuration may change (for example, if the hardware partition needs to grow and include more BL920s blades), Hewlett Packard Enterprise recommends setting up the boot controller in the lowest-numbered blade of the nPar, so that Windows does not change crucial boot parameters.

Software overview

Operating systems

HPE Superdome X is certified for Windows Server 2012 R2.
• The minimum version level required is Windows Server 2012 R2 with KB2919355 slipstreamed (included), also known as the “Spring 2014 update” or April 2014 updates.
• For more information about KB2919355, see support.microsoft.com/kb/2919355/en-au.
• In addition, the Microsoft November 2014 cumulative updates are required. They consist of the following QFEs (hotfixes):
– KB3000850
– KB3003057
– KB3014442
– KB3016437 (only applicable for Domain Controllers)
Files are available for download here: microsoft.com/en-us/download/details.aspx?id=44975. The recommended version to use is Windows Server 2012 R2 with November 2014 updates slipstreamed, because it already includes both the April 2014 and November 2014 cumulative hotfixes.
• Lastly, Microsoft KB3032331, released in March 2015, is also required. This Microsoft hotfix can be downloaded from the following URL: support.microsoft.com/kb/3032331/en-us.

It is a good practice to keep the system up to date with the latest published Microsoft Windows Updates for your environment.
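The update requirements listed above lend themselves to a simple checklist. The Python sketch below is a minimal illustration that compares a list of installed update identifiers against the KB numbers named in this section. The function and constant names are hypothetical, and how the installed list is gathered from a Windows system is outside the scope of the sketch. Note also that an installation from slipstreamed media may not report every included update individually, so a reported gap should be verified rather than taken at face value.

```python
# KB numbers named in this paper for Windows Server 2012 R2 on Superdome X.
REQUIRED_KBS = (
    "KB2919355",  # April 2014 update
    "KB3000850",  # November 2014 cumulative updates
    "KB3003057",
    "KB3014442",
    "KB3032331",  # March 2015 hotfix
)
DOMAIN_CONTROLLER_ONLY_KBS = ("KB3016437",)


def missing_updates(installed, is_domain_controller=False):
    """Return the required KB identifiers absent from `installed`."""
    required = list(REQUIRED_KBS)
    if is_domain_controller:
        required.extend(DOMAIN_CONTROLLER_ONLY_KBS)
    present = {kb.strip().upper() for kb in installed}
    return [kb for kb in required if kb not in present]


# Hypothetical inventory of a system that only has the April 2014 update.
print(missing_updates(["kb2919355"]))
print(missing_updates(["kb2919355"], is_domain_controller=True))
```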
HPE Software components for Windows support on HPE Superdome X

HPE Integrity Superdome X IO firmware and Windows Drivers Image
In 2016 Hewlett Packard Enterprise released the “HPE Integrity Superdome X IO Firmware and Windows Drivers image”. This ISO image is specifically for HPE Integrity Superdome X Servers with Gen8 and Gen9 blades. It includes:
• Utilities to assist with installation and system management
• Windows drivers
• WBEM providers
• I/O firmware updates

These components are deployed through the HP SUM 7.4.0 application, which is also part of the ISO image.

Note: The HPE Service Pack for ProLiant (SPP) is designed for HPE ProLiant Servers; it does NOT support updating Microsoft Windows Server 2012 R2 drivers or firmware on HPE Integrity Superdome X Servers.

The “HPE Integrity Superdome X IO Firmware and Windows Drivers image” supersedes the “HPE BL920s Gen8 Windows Drivers Bundle”, which was previously used to deploy Windows drivers and software on HPE Integrity Superdome X running Windows Server 2012 R2 on BL920s Gen8 (at the time, HP SUM was not supported in this environment).

The list of components and versions can be found in the release notes on the support page. Look for the “HPE Integrity Superdome X IO firmware and Windows drivers image” at the following address (under Software-CD-ROM): h20566.www2.hpe.com/hpsc/swd/public/readIndex?sp4ts.oid=7595544&swLangOid=8&swEnvOid=4168

• Combine driver
This component installs driverless INFs to identify various chipset devices.
• iLO 3/4 interface driver
HPE ProLiant iLO 3/4 Channel Interface Driver allows software to communicate with the iLO 3 or iLO 4 management controller. HPE ProLiant Health Monitor, HPE Insight Management Agents, WBEM Providers, Agentless Management Service, and other utilities use this driver.
• iLO 3/4 management driver
HPE ProLiant iLO 3/4 Management Controller Driver package provides system management support, including monitoring of server components, event logging, and support for the HPE Insight Management Agents and WBEM Providers.
• Intel ixn/ixt driver (BL920s Gen8)
This component package contains the drivers for the HPE Ethernet 10Gb 2-port 560FLB Adapter and HPE Ethernet 10Gb 2-port 560M Adapter running under Microsoft Windows Server 2012 R2.
• HPE QLogic NX2 10/20GbE Multifunction Drivers for Windows Server x64 Editions version (BL920s Gen9)
This component package contains the drivers for the HPE FlexFabric 20Gb 2-port 630FLB Adapter and the HPE FlexFabric 20Gb 2-port 630M Adapter running under Microsoft Windows Server 2012 R2.
• QLogic FC Storport driver
This component contains the QLogic Storport driver, which provides HPE QMH2672 16Gb Fibre Channel Host Bus Adapter driver support for HPE storage arrays.
• HPE System Management Homepage for Windows x64 version
The System Management Homepage provides a consolidated view for single server management, highlighting tightly integrated management functionalities including performance, fault, security, diagnostic, configuration, and software change management.
• HPE Mission Critical WBEM Provider for Windows Server x64 Edition version
The HPE Mission Critical WBEM Providers supply system management data through the Windows Management Instrumentation framework for HPE Superdome X systems and options. They deliver mission-critical features to the system running Microsoft Windows Server 2012 R2:
– They include the IPMI BT driver to communicate with the hardware.
– They provide an “optimize” button in “HPE System Management Homepage” under “Advanced server health information” to apply some of the recommended settings discussed throughout this document.
– They enable support for HPE Insight Remote Support (IRS) 7.4 or above.
HPE recommends using the latest version of the HPE Integrity Superdome X IO firmware and Windows Drivers Image.

Microsoft Windows Server 2012 R2 Installation

Recommendations for Windows Setup
To ease Windows deployment, we recommend that you have the following items ready before you install Microsoft Windows Server on an HPE Superdome X.
• Use a computer connected to the Superdome X OA (technician computer).
• Have an SUV dongle cable available (HPE part number 409496). This dongle cable brings out USB (2 ports), serial (DB9), and VGA (DB15), and can be used to connect a USB device to perform the installation.
• Have Microsoft Windows Server 2012 R2 Standard or Datacenter Edition media (with at least the KB2919355 update embedded).
– It must be UEFI bootable.
– It can be stored on USB flash storage or a DVD, or provided as an ISO or IMG file image.
– USB flash storage or USB DVD readers can be connected to the SUV port on the front of each blade. Hewlett Packard Enterprise recommends using USB devices because they generally offer an acceptable transfer rate.
– An .ISO or .IMG file image can also be used, in conjunction with the media redirection capability of the nPar’s Integrated Lights-Out (iLO) remote console. Be aware that in this case the technician’s computer must be located on a low-latency network (close to the Superdome X OA); otherwise, the data transfer can take a long time and the Windows installation can time out.
• Ensure you have the required Microsoft Windows Server 2012 R2 Standard Edition media with appropriate Windows licensing and the product key.
• Make sure you have downloaded the “HPE Integrity Superdome X IO firmware and Windows Drivers Image”.
– The image is located on the HPE support site, when the product selected is HPE Integrity Superdome X and the operating system is Windows Server 2012 R2: h20566.www2.hpe.com/hpsc/swd/public/readIndex?sp4ts.oid=7595544&swLangOid=8&swEnvOid=4168
– You can create a DVD, use iLO virtual media, or copy the image to the target nPar and mount it.
• Have an nPar made of only one blade for the initial Windows Setup. Some of the steps require reboots, and the larger the nPar, the longer it takes to reboot. nPars can be expanded later on. Windows should be installed on the lowest numbered blade of a partition and connected to the lower numbered processor socket on that blade (HBA in mezzanine slot). This will eliminate the possibility of removing a lower numbered blade from that partition.
• Have a disk or LUN provisioned on the nPar.
– Since blades do not have internal storage, a disk should have been created on your SAN storage.
– Hewlett Packard Enterprise recommends using zoning and LUN masking techniques to prevent confusion and mistakes during Windows initial setup. Whenever possible, the disk or LUN should be presented to the host through only one path.
– If multiple paths are used, Windows Setup will see only one disk online, and all the other paths will show other disks in an offline state. Do not try to “online” them.
– The recommended size for the Windows boot disk is at least 512 GB, to accommodate a potential kernel dump and a pagefile of 128 GB (given the 4 TB RAM maximum supported by Windows Server 2012 R2).
– The disk or LUN should be clean (no partition) to avoid any potential access violation error.

Installation steps
This part describes the main steps for installing Windows on a Superdome X nPar. It assumes that the hardware is running the latest available firmware, or at least the minimum recommended version. The main steps are:
1. Connect to the HPE Integrity Superdome X OA
2. Create the nPar (only one BL920s blade with at least one flexible LOM and a Fibre Channel adapter)
3. Present the disk/LUN to be used as the Windows system disk (Boot from SAN)
4. Load Microsoft Windows OS
5. Install HPE BL920s Windows Drivers and software
6. Install (if applicable) additional Windows roles or features (for example the multipath I/O feature)
7. Enable Remote Desktop, set pagefile size, configure memory dump (kernel type), configure power options
8. Update Windows with the required Microsoft hotfixes or QFEs, then run Windows Update
9. Enable all data LUNs and SAN paths, then verify multiple paths
10. Grow the nPar as required and verify the configuration (this may include checking the paths to disks again if the other blades have additional connected HBAs)
11. Customize the Windows environment

Windows can be installed manually using the Microsoft Windows media, or automatically through a deployment server.

Note
The following chapters are provided to guide you through the manual setup, but it is beyond the scope of this paper to cover all the different ways, methods, or tools that can be used to perform a task.

For information on using the OA GUI, see the HPE Integrity Superdome X and Superdome 2 Onboard Administrator User Guide: h20628.www2.hp.com/km-ext/kmcsdirect/emr_na-c04389052-6.pdf

For information on using the OA CLI, see the HPE Integrity Superdome X and Superdome 2 Onboard Administrator Command Line Interface User Guide: h20565.www2.hpe.com/hpsc/doc/public/display?docId=emr_na-c04389088

Preparing the installation
• From the technician’s computer, connect to the HPE Integrity Superdome X OA.
– To access an active OA GUI: Use the active OA service IP address from the Insight Display on that enclosure as the Web address in your laptop or PC browser.
– To access an active OA CLI: Use a telnet or Secure Shell program based on the configured network access settings and connect to the active OA service IP address.
• Log in to the OA as administrator. Use the “Administrator” user account and its password; the default password can be found on the OA toe tag.

Figure 3. HPE Integrity Superdome X Onboard Administrator interface

• Create an nPar with the first BL920s for your Windows Server environment.
• Note that if the nPar is based on a BL920s Gen8, you can only add Gen8 blade servers to grow the nPar; likewise, BL920s Gen9 blades can only be included in nPars based on Gen9 blade servers.
– Start with the minimal size of a hardware partition (1 BL920s).
– The nPar should contain just one blade with at least one flexible LOM and a Fibre Channel adapter. The reason is that several reboots occur during the Windows installation, and the more hardware in the nPar, the longer it takes to initialize each time the system is restarted.
– Every hardware partition (nPar) has two identifiers: a partition number (the primary identifier from an internal perspective) and a partition name (a more meaningful handle for administrators).

Figure 4. HPE Integrity Superdome X Onboard Administrator - Complex - nPartitions summary

Figure 5. HPE Integrity Superdome X Onboard Administrator - nPartition - Virtual Devices

• Boot the nPar
– You can monitor the nPar boot through its iLO Integrated Remote Console
– The system initializes

Figure 6. nPartition - iLO Integrated Remote Console - Boot entries at initialization

• Configure the Fibre Channel adapters for boot
To enable boot from a QLogic Fibre Channel device, use the UEFI System Utilities and select Device Manager to enable the boot controller. Hewlett Packard Enterprise recommends enabling both boot controllers from the first blade (monarch) before installing Windows.
– When boot reaches the EFI Boot Manager menu, press U for System Utilities
– Select Device Manager
– Select the first HPE QMH2672 16Gb 2P FC HBA - FC

Figure 7. nPartition - System Utilities - Device Manager

– Select Boot Settings
– Toggle Selective Login to <Disabled>
– Toggle Selective LUN Login to <Disabled>
– Toggle Adapter Driver to <Enabled>

Figure 8. nPartition - System Utilities - Device Manager - FC adapter - Boot Settings

– Press Ctrl-W to save
– Press Ctrl-X to exit
– Repeat for the second adapter
– At the end, press Ctrl-X to exit Device Manager and go back to the System Utilities main menu

Start Windows initial installation phase
• Connect the Windows media to the nPar
You may connect your SUV dongle cable to the blade with USB flash storage or a DVD, or use the nPar’s virtual device under the iLO Integrated Remote Console to redirect your ISO image.
• Ensure that the USB device and the Fibre Channel device are seen from the UEFI Shell
– From the System Utilities, select Boot Manager, then UEFI Shell. Standard UEFI commands can be used to detect newly connected hardware.
– At the UEFI Shell, issue the Reconnect -r command, followed by a Map -r command:
Shell> Reconnect -r (Reconnects one or more UEFI drivers to a device)
Shell> Map -r (Shows the device mapping table; only FAT32 partitions can be seen by UEFI, and they show as FS#)

Figure 9. nPartition - UEFI Shell - Map -r command

• In this example, the USB or CD-ROM device contains a FAT32 partition recognized by UEFI as a known file system (FS0); this is the Windows installation media. Some of the BLK devices map to a Fibre Channel connection, indicating that SAN devices have been presented to the Host Bus Adapters. The file system will need to be accessed in order to start Windows installation.
• Start Windows installation
– At the UEFI Shell (following the example in figure 9):
Shell> Reconnect -r (Reconnects one or more UEFI drivers to a device)
Shell> Map -r (Shows device mappings)
– At the UEFI Shell, type FS#: where # is the file system number shown for your Windows media:
Shell> FS0: (Enter)
– Go to the Windows install boot program:
FS0:\> CD \EFI\BOOT (Enter)
– Execute BootX64.EFI from the command prompt to start the Windows installation:
FS0:\EFI\BOOT> BOOTX64 (Enter)
– When prompted, “press any key” to start the Windows installation (standard Windows Setup initialization process)

Note
Windows installation can be monitored through the nPar iLO Integrated Remote Console, or through a terminal connected to the nPar console, because Windows Setup supports headless installations through its Emergency Management Services (EMS). Windows EMS provides remote management and system recovery options when other server administrative options are not available. EMS uses the Special Administration Console (SAC) feature of Windows Server. The SAC can be used to manage the server during Windows deployment and runtime operations through its setup channels. The SAC also provides administrative capabilities such as viewing or setting the OS IP address, shutting down and restarting the OS, and viewing a list of currently running processes. In addition, it allows creating and navigating command channels with the Windows command shell (cmd.exe) to interact with and manage the operating environment.
• Follow the Windows installation process
– Enter the desired language for the installation; the Windows Setup phase starts
– Enter the product key
– Select the disk for the Windows system
– Files will be copied and reboots will occur
– Wait for the last phase, where you are prompted to enter the Administrator's password
– Log in
– The Windows initial setup phase ends now
• Install Hewlett Packard Enterprise recommended Windows drivers and software
The HPE Integrity Superdome X hardware partition running Microsoft Windows Server 2012 R2 now requires the HPE recommended drivers and software to be installed (Windows Administrator privileges are required for this task). The components are provided through the “HPE Integrity Superdome X IO Firmware and Windows Drivers image”, which can be downloaded from the HPE Integrity Superdome X support page. This ISO image provides HP SUM (Smart Update Manager) to discover hardware and software on the system and propose appropriate updates. HP SUM installs updates in the correct order and ensures all dependencies are met before deploying an update.

Note
Hewlett Packard Enterprise recommends updating the I/O firmware offline using the Superdome X IO Firmware and Windows Drivers image. That is, although HP SUM may propose I/O firmware updates, it is preferable to deselect them, because HP SUM may present a reboot button before the firmware update completes. If the reboot occurs, the affected I/O adapters may become unusable. This is particularly true on an nPar with more than one blade. For more information, see the “HPE Integrity Superdome X IO Firmware and Windows Drivers image” release notes.

• Supply media that contains the “HPE Integrity Superdome X IO Firmware and Windows Drivers image” to the HPE Integrity Superdome X.
• Mount the ISO image using File Manager on the desktop.
– You can mount the ISO image using the iLO virtual media if you are connected to the nPar using the iLO remote console
– Or you can copy the *.ISO image to your nPar
– Alternatively, you can have it on media (such as a DVD) and present the media to the nPar
In Windows File Manager, double-click the ISO to mount it.
• On the target nPar (target server platform), double-click “launch_hpsum.bat” in the root directory of the CD (ISO image), or run HP SUM.bat from \hp\swpackages.
• Once started, select “Localhost guided update”, then select the deployment mode.

Figure 10. Launch_hpsum from the mounted “HPE Integrity Superdome X IO Firmware and Windows Drivers” image

Hewlett Packard Enterprise highly recommends picking the “interactive” mode if the nPar is made of more than one BL920s blade server (that is, 2, 3, 4, or 8 blades), because other modes may apply firmware upgrades that are not necessarily wanted.
• Select “interactive” (radio button) and click “OK” at the bottom of the “localhost guided update” window. It will take several minutes for HP SUM to perform a baseline inventory of installed software and firmware and to list the content of the ISO image.

Figure 11. HP SUM - Localhost Guided Update - Inventory

• Once the inventories of baseline and node are done, click “Next”. HP SUM lists the individual smart components that are candidates for installation.

The button in the leftmost column of each component indicates how HP SUM determined that the component needs to be installed, as depicted in figure 12.

Figure 12. List of components and their deployment status

HP SUM shows smart components compatible with the platform hardware and operating system. The entire list of Windows components (drivers, software) and additional firmware is displayed with the current update requirement of each.
• You can click the button next to a component to change its update requirement (it is a toggle button).
In the case of figure 12, the update requirements shown are “Selected” and “Force”: “Selected” marks a component that is selected to be installed, while “Force” is the status shown for components that are already up to date.

For information about HP SUM refer to: h17007.www1.hp.com/us/en/enterprise/servers/solutions/info-library/index.aspx?cat=smartupdate&subcat=hp_sum (the current version is 7.4.0)

Note: As stated earlier, there is a known issue with online I/O firmware updates when an nPar contains several blades, for example the ones entitled “HPE Firmware online flash for QLogic…” or “HPE NX2 QLogic online firmware upgrade utility”. It is recommended NOT to select them. I/O firmware updates should be done offline; for more information refer to h20564.www2.hpe.com/hpsc/doc/public/display?docId=emr_na-c04796854

• Once you have selected the components, click “deploy”.
• Watch the deployment status or wait for the final screen, which indicates the completion state (as depicted in the next figure).

Figure 13. Deployment completed with status of deployed components

• At the end of the deployment, you can reboot the system if everything completed as expected.
• When Windows is back online, you may check Device Manager in the Windows computer settings: there should be no yellow exclamation mark beside any device listed.
The following components should be installed:
– Combine driver
– iLO 3/4 interface driver
– iLO 3/4 management driver
– Intel ixn/ixt driver (BL920s Gen8)
– HPE QLogic NX2 10/20GbE Multifunction Drivers for Windows Server x64 Editions version (BL920s Gen9)
– QLogic FC Storport driver
– HPE System Management Homepage for Windows x64
– HPE Mission Critical WBEM Providers for Windows Server x64 Edition version

Recommended environment customization
After the system installation, you can use Windows Server Manager to do some basic customization, such as enabling Remote Desktop and the associated rules in the Windows firewall. A system administrator with administrative privileges on Windows can perform those tasks. For debugging purposes, Hewlett Packard Enterprise recommends defining a custom pagefile and setting the debugging options to create a kernel memory dump.

• Pagefile size
The pagefile can be adjusted to a custom size with a minimum and maximum size of 131072 MB, as depicted in figure 14. This size of 128 GB is optimal for a server (nPar) whose RAM size is 4 TB. With HPE Mission Critical WBEM Providers for Windows Server x64 Edition version 1.2 or above, the pagefile is set to an optimal value (1/32 of the total RAM size of the nPar), so it is not necessary to set it manually. The following commands are provided only as a reference.
• Use Control Panel, “System” properties to alter the default by disabling the automatic management of the pagefile for all drives, and setting the pagefile size to 131072 MB (= 128 GB).
• Or, issue these two commands at a Windows Command Prompt:
wmic computersystem where name="%computername%" set AutomaticManagedPagefile=False
wmic pagefileset where name="%SystemDrive%\\pagefile.sys" set InitialSize=131072,MaximumSize=131072
– A reboot of the system is required for the new pagefile size to take effect (the reboot can be done later).
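The 1/32 sizing rule mentioned above is easy to work through for other memory sizes. The following Python sketch computes the pagefile size in MB from the nPar RAM size and fills it into the two wmic command lines shown above. The function names are hypothetical and the sketch only builds command strings for review; it does not run them, and it is not how the WBEM providers themselves apply the setting.

```python
def pagefile_size_mb(ram_gb):
    """Pagefile size in MB using the 1/32-of-RAM rule (RAM given in GB)."""
    return ram_gb * 1024 // 32


def pagefile_commands(ram_gb):
    """Return the two wmic command lines from the text, sized for `ram_gb`."""
    size = pagefile_size_mb(ram_gb)
    return [
        # Disable automatic pagefile management.
        'wmic computersystem where name="%computername%" '
        'set AutomaticManagedPagefile=False',
        # Set identical initial and maximum sizes (doubled backslash as in the text).
        rf'wmic pagefileset where name="%SystemDrive%\\pagefile.sys" '
        f'set InitialSize={size},MaximumSize={size}',
    ]


if __name__ == "__main__":
    # 4 TB of RAM (4096 GB) gives 131072 MB, the value used in the text.
    for line in pagefile_commands(4096):
        print(line)
```

For a 2 TB nPar the same rule gives 65536 MB (64 GB).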
Also note that this size changes to 1/32 of the server RAM size if the server RAM size is different from 4 TB.

Figure 14. Windows System Properties - Advanced - Performance - Virtual Memory - Custom size

• Kernel memory dump
In case of a critical error, the system will attempt to generate a memory dump. Due to the large amount of physical memory, the size of the file can be significant, so the suggestion is to write a kernel memory dump instead of a full memory dump. You may have to alter the default settings.
– Use Control Panel, “System” properties, “Startup and Recovery”; in the system failure section, set Write debugging information to “Kernel memory dump” as depicted in figure 15.

Figure 15. Windows System Properties - Advanced - Startup and Recovery settings

• Power settings: display timeout
To be able to gracefully shut down a Windows partition from the OA with the “poweroff partition x” command, it is necessary to disable the display timeout in all Windows power plans. Also, if the system is running highly demanding workloads, it is advisable to run in a high-performance power plan. To change these settings:
– Use Control Panel, “Power Options”, “High Performance”, then “Choose when to turn off display”, select “Never” and “Save Changes”

Figure 16. Control Panel - Power options

– Alternatively, use a Windows command prompt and enter the following commands to turn off the display timeout in all power plans and apply the changes immediately:
powercfg -setacvalueindex scheme_min sub_video videoidle 0
powercfg -setacvalueindex scheme_max sub_video videoidle 0
powercfg -setacvalueindex scheme_balanced sub_video videoidle 0
powercfg -setactive scheme_current

Note
Changing power settings requires a reboot to take effect. This can be scheduled later.

• Additional Windows features
Install any additional role or feature for your operating environment.
Microsoft provides a multipath I/O (MPIO) feature to support storage (disk) access through multiple redundant paths. This feature is not installed by default and must be present on the system in order to enable all paths to disks for redundancy purposes.
– To install MPIO, use Server Manager, Local Server, select “Manage”, “Add Roles and Features”, and follow the screens until it is installed.
– After installing the feature, the system will need to be restarted.
• Install the Microsoft Windows Server 2012 R2 November QFEs (Microsoft hotfixes) and run Windows Update if applicable.

Note
The Windows Server 2012 R2 November update is required only if the Windows installation media was from April 2014 with KB2919355 included.

Administrative privileges are required. The November update should have been downloaded (refer to the Operating systems section) and is around 800 MB in size. It is recommended either to copy the files to a temporary directory on your server, or to access them through a device with fast access (for example, media connected to the USB SUV dongle cable). Each package can be started by double-clicking it. A reboot is required at the end of each package. These operations will take time.

Assuming the Microsoft OS updates (see the Operating systems section) have been downloaded and copied to a folder accessible from the installed nPar, proceed as follows from the folder containing the November updates:
1. Double-click Windows8.1-KB3000850-x64.msu and run the update; at the end, the system will have to reboot.
2. Do the same for Windows8.1-KB3003057-x64.msu.
3. Then Windows8.1-KB3014442-x64.msu.
4. The last one from the November update is KB3016437 (Windows8.1-KB3016437-x64.msu). This hotfix only applies to a Domain Controller; if the role has not been installed, it is not necessary to install the hotfix, and in fact it will fail to install.
5. Next, install KB3032331 by running its installer.
6. At the end of the process, you can clean up the temporary folder that contained the source files, if any.

Note
If possible, run Windows Update to make sure your Windows environment has the latest hotfixes.

Enable all paths on the SAN
At this stage, you can present all the disks/LUNs to the host and enable all SAN paths that have to be enabled for improved redundancy, reliability, and access.

It is also necessary to make sure that the Microsoft Windows Device Specific Module (DSM) is enabled for the paths. When multiple paths are enabled, it is necessary to let MPIO add the device using the MPIO Control Panel applet.
• Example with HPE 3PAR StoreServ Storage
– From “Control Panel”, “MPIO” (or “Server Manager”, “Tools”, “MPIO”), open the “MPIO Devices” tab and click “Add”; the “Add MPIO Support” window appears.
– In the Device Hardware ID: text box, enter 3PARdataVV, and then click OK.

Figure 17. Control Panel - MPIO properties - MPIO Devices - Add MPIO 3PARdataVV device support

– A reboot is then required
– The command-line equivalent at a Windows Command Prompt is:
C:\Users\Administrator> mpclaim -r -i -d "3PARdataVV"
C:\Users\Administrator> Shutdown -r

Verify multiple paths on the server (nPar)
After the system reboots, verify that multipathing has been taken into account. There are multiple ways of doing this: for example, you can capture a snapshot of the configuration from the MPIO Control Panel applet, use Device Manager in Computer Management to check the disk properties, or use the MPCLAIM command in a Command Prompt window.
• Verify MPIO devices (as administrator)
– Log in as administrator
– Click “Start”, “Control Panel”, “MPIO”
– Go to the last tab, “Configuration Snapshot”
– Check the box “Open File upon Capture” and click “Capture”
Or
– Click “Server Manager”, “Tools”, “Computer Management”
– Go to the “Device Manager” item on the left
– On the right side, click the “+” next to “Disk drives”
– Select the 3PARdataVV…Disk devices, and right-click to see their properties as depicted in figure 18.

Figure 18. Computer Management - Device Manager - Disk Drives

Add blades to the nPar as required
Once the OS has been updated with the latest Microsoft hotfixes and the HPE software packages required to support the OS environment, the next steps are customization steps. For instance, you might want to grow the hardware partition (nPar) by adding blades (and their adapters) to your existing nPartition for Windows. You can use the HPE Integrity Superdome X OA (GUI or CLI) to add the blades. The system will need a restart to take the new blades into account, and Windows will automatically detect the new hardware. There might be a couple of reboots inherent to the discovery and installation of the new hardware. Once the system is back online and stable, you can customize it and install applications as needed.

Customize the Windows environment and install applications
At this stage, you can customize your operating environment and install your applications.

Recommended software configuration and tuning

Operating system

Power settings
Hewlett Packard Enterprise recommends the high-performance power plan for highly demanding workloads.

Trim EFI boot entries
Customers are advised to remove stale or unwanted boot options to save boot time. Use “efibootmgr” to remove the preboot execution environment (PXE) boot options when the OS is installed and remove the boot options to the old OS, after installing a new one.
NUMA and processor group considerations

Windows processor groups
The concept of a processor group (also known as a k-group) was introduced in Windows Server 2008 R2, when the operating system increased its scalability to support more than 64 logical processors. A processor group is a static set of up to 64 logical processors. Processor groups are numbered starting with zero. If you have fewer than 64 logical processors, there will be a single processor group: Group 0.

Microsoft chose processor groups to ensure that existing applications continue to operate on Windows without modification. Legacy applications (that is, applications that are not processor group-aware) are assigned (or affinitized) to a single processor group. This gives them access to a maximum of 64 logical processors for scheduling threads. The Windows API was extended to permit new or modified applications to extend their operations across multiple processor groups through appropriate API calls. This is the case for SQL Server since release 2008 R2.

The HPE Integrity Superdome X nPar can expose a large number of logical processors, depending on the size of the partition and whether Intel Hyper-Threading is enabled. For optimal performance, the MC WBEM providers adjust the size of processor groups to match a socket or a blade, depending on the total core count.

An additional restriction is that a NUMA node must be fully contained within a processor group. This means a processor group cannot contain a partial set of the processors within a socket. Within a BL920s blade server, each processor socket is an individual NUMA node. Therefore, the logical processors presented by a single socket must be fully contained by a processor group. A processor group can extend over multiple sockets, and processor groups can extend over multiple blades within an nPar, as long as the processor count does not exceed the limit of 64 logical processors.
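The constraints above can be worked through numerically. The following Python sketch is an illustration of the arithmetic only, not the algorithm used by the MC WBEM providers. It assumes two processor sockets per BL920s blade (inferred from the per-blade figures in Table 7), fills a group with whole blades when one blade fits within 64 logical processors, and falls back to whole sockets otherwise. The function name and parameters are hypothetical.

```python
def processor_group_limit(cores_per_socket, hyper_threading,
                          sockets_per_blade=2, group_limit=64):
    """Return (logical processors per blade, group maximum, blades per group).

    A socket (NUMA node) is never split across groups. Whole blades are
    preferred; if a single blade exceeds the group limit, the group is
    built from whole sockets instead.
    """
    per_socket = cores_per_socket * (2 if hyper_threading else 1)
    per_blade = per_socket * sockets_per_blade
    if per_blade <= group_limit:
        blades = group_limit // per_blade
        return per_blade, blades * per_blade, float(blades)
    sockets = group_limit // per_socket
    return per_blade, sockets * per_socket, sockets / sockets_per_blade


if __name__ == "__main__":
    for cores, ht in [(15, True), (14, False), (18, True)]:
        state = "HT-on" if ht else "HT-off"
        print(cores, state, processor_group_limit(cores, ht))
```

For example, 18 cores with Hyper-Threading on gives 72 logical processors per blade, which exceeds 64, so the group holds a single socket (36 logical processors, half a blade). This simple rule agrees with most entries of Table 7; the footnoted Gen8 entry for 6 cores with Hyper-Threading off is lower in the table because it is additionally bounded by the supported blade count per nPar.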
HPE Superdome X supports processors with different core counts per socket, but Windows cannot exceed 64 logical processors per processor group. Table 7 shows the processor group size limits depending on processor cores. Hyper-Threading provides a boost over cores by doubling the number of logical processors, but neither of the two logical processors (threads) is as powerful as the one core from which they are carved.

Table 7. Processor group size limits

| Gen8 | 6 cores HT-on | 6 cores HT-off | 10 cores HT-on | 10 cores HT-off | 15 cores HT-on | 15 cores HT-off |
|---|---|---|---|---|---|---|
| Processors per blade server | 24 | 12 | 40 | 20 | 60 | 30 |
| Processor group maximum | 48 | 48(*) | 40 | 60 | 60 | 60 |
| Number of blade servers per group | 2 | 4 | 1 | 3 | 1 | 2 |

(*) Bounded by supported blade count per nPar.

| Gen9 | 4 cores HT-on | 4 cores HT-off | 10 cores HT-on | 10 cores HT-off | 14 cores HT-on | 14 cores HT-off | 18 cores HT-on | 18 cores HT-off |
|---|---|---|---|---|---|---|---|---|
| Processors per blade server | 16 | 8 | 40 | 20 | 56 | 28 | 72 | 36 |
| Processor group maximum | 64 | 64 | 40 | 60 | 56 | 56 | 36 | 36 |
| Number of blade servers per truncated group | 4 | 8 | 1 | 3 | 1 | 2 | 0.5 | 1 |

(*) Bounded by supported blade count per nPar.

Software advisory notes

Finding customer advisories
For the most up-to-date information about issues on this platform, go to: hpe.com/support. Then enter Superdome X in the “Enter a product name or number” box. After clicking the “GO” button you will be taken to the HPE Integrity Superdome X page; select the “top issues” tab, and click the “Advisories, bulletins & notices” tab. This provides a list of the most recent advisories.

General known issues

Boot messages
The following messages will be seen while a Superdome X server is booting:
lpc_ich 0000:20:1f.0: I/O space for ACPI uninitialized
lpc_ich 0000:20:1f.0: I/O space for GPIO uninitialized
lpc_ich 0000:20:1f.0: No MFD cells added
-And-
iTCO_wdt: failed to get TCOBASE address, device disabled by hardware/BIOS
These messages indicate hardware on auxiliary server blades is not initialized.
Only the hardware on the monarch server blade is initialized; on an auxiliary server blade, this hardware is disabled. These messages are expected and can be ignored. There will be one set of these messages for every auxiliary server blade, that is, seven sets on an eight-blade system.

Suspend/resume

The hibernate/suspend/resume functionality is not supported on HPE Integrity Superdome X. If a hibernation operation is initiated on the system by accident, power cycle the system from the OA and reboot it in order to recover.

Issues related to the Windows environment

Network interface cards not named appropriately

When you perform a new installation of Windows on an nPar with recent partition firmware (7.6.0), the network interface adapters are not named correctly under Windows, for example “Ethernet Instance 200” instead of “Mezzanine Enc 1 Bay 1 Slot 1 Function 0.” Using HPE System Management Homepage (SMH) to display the information reported by the HPE Mission Critical WBEM Providers for Windows Server x64 Edition helps identify the cards. You can then change the names in Windows or click the “Change to Consistent Device Naming” button to have the names changed automatically. Refer to the following customer advisory:
h20564.www2.hpe.com/hpsc/doc/public/display?docId=emr_na-c04945139

Boot controller issue: Windows may fail to boot with STOP 0x7B

In certain reconfiguration scenarios, Windows may fail to boot with a blue screen STOP 0x7B because it cannot load the boot driver for the boot controller. This is documented in a customer advisory available at the following link:
h20564.www2.hpe.com/hpsc/doc/public/display?docId=emr_na-c04945837

If that failure occurs, it is possible to recover using Windows Safe boot mode.
For more information, refer to the Microsoft knowledge base article support.microsoft.com/en-us/kb/3024175

Potential slow SQL Server log file initialization phase

A very slow SQL Server log file initialization phase may be observed on HPE Integrity Superdome X systems running Windows Server 2012 R2 with the QLogic QMH2672 adapter. This has been observed with the default maximum transfer size setting of 512 KB and a specific 8 MB workload. The workaround is to change the maximum transfer size from the default of 512 KB to 2 MB. You can use the following command in Windows to make this change:

    C:\cpqsystem\pnpdrvs\qlfcx64 -tsize /fc /set 2048

For more information, see the following advisory:
h20566.www2.hpe.com/hpsc/doc/public/display?docId=emr_na-c04936705

Intel Ethernet Thermal Sensor Monitor Service reports a failure to start in Windows when the server is configured with an HPE Ethernet 10Gb 2-port 560M or 560FLB Adapter

On a Superdome X server configured with an HPE Ethernet 10Gb 2-port 560M or 560FLB Adapter, Event ID 7024 is recorded in the Windows System log each time the server is restarted, with the following error:

    The Intel Ethernet Thermal Sensor Monitor Service terminated with service-specific error 0 (0x0).

This is caused by an Intel service that is installed with the adapter but is not used on a Superdome X partition, so the service can be safely removed. HPE Mission Critical WBEM providers 1.3 removes this IETS service during installation if it detects it. For more information, check the following advisory:
h20564.www2.hpe.com/hpsc/doc/public/display?docId=emr_na-c04879760

Resources

Information about the HPE Integrity Superdome X
hpe.com/servers/SuperdomeX

The related links on that page lead to data sheets, documents, technical support, and manuals. The titles and links for the Superdome X manuals are listed below.
HPE Integrity Superdome X Server documentation is accessible through the “manuals” tab under the following URL:
h20564.www2.hpe.com/portal/site/hpsc/public/psi/home/?sp4ts.oid=7161269

HPE Integrity Superdome Onboard Administrator documentation
• HPE Integrity Superdome X and Superdome 2 Onboard Administrator User Guide
• HPE Integrity Superdome X and Superdome 2 Onboard Administrator Command Line Interface User Guide for HPE

Other useful resources

HPE 3PAR Windows Server 2012 and Windows Server 2008 Implementation Guide
h20628.www2.hpe.com/km-ext/kmcsdirect/emr_na-c03290621-14.pdf

HPE Verified Reference Architecture for Microsoft SQL Server 2014 on HPE Superdome X
h20195.www2.hp.com/V2/GetDocument.aspx?docname=4AA6-1676ENW&cc=us&lc=en

HPE Reference Architecture for Microsoft SQL Server 2014 mixed workloads on HPE Integrity Superdome X with HPE 3PAR StoreServ 7440c Storage Array
h20195.www2.hpe.com/V2/GetDocument.aspx?docname=4AA6-3436ENW&cc=us&lc=en

Microsoft Performance Tuning Guidelines for Windows Server 2012 R2
msdn.microsoft.com/en-us/library/windows/hardware/dn529133

Learn more at hp.com/go/integrity

© Copyright 2015–2016 Hewlett Packard Enterprise Development LP. The information contained herein is subject to change without notice. The only warranties for Hewlett Packard Enterprise products and services are set forth in the express warranty statements accompanying such products and services. Nothing herein should be construed as constituting an additional warranty. Hewlett Packard Enterprise shall not be liable for technical or editorial errors or omissions contained herein.

Intel and Intel Xeon are trademarks of Intel Corporation in the U.S. and other countries. Microsoft, Windows, and Windows Server are either registered trademarks or trademarks of Microsoft Corporation in the United States and/or other countries.

4AA5-7519ENW, April 2016, Rev. 5