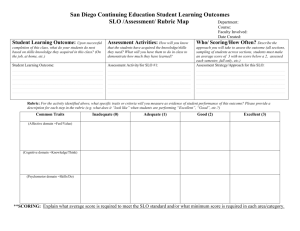

Student Learning Outcomes Assessment Handbook
