DE FINETTI WAS RIGHT: PROBABILITY DOES NOT EXIST It is

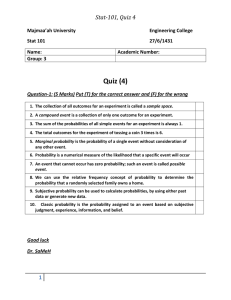
