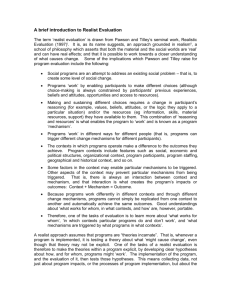

Realist Evaluation - Community Matters
