Rectangular Full Packed Format for Cholesky's Algorithm

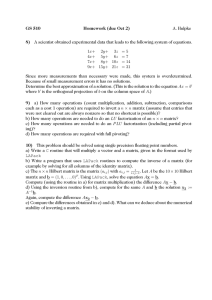
