Pipeline and Batch Sharing in Grid Workloads


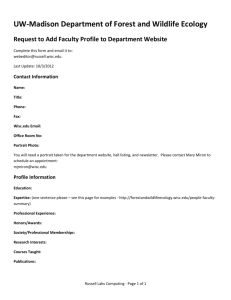

Douglas Thain, John Bent, Andrea Arpaci-Dusseau, Remzi Arpaci-Dusseau, and Miron Livny
WiND and Condor Projects
6 May 2003
www.cs.wisc.edu/condor

Goals
› Study a diverse range of scientific apps
  Measure CPU, memory and I/O demands
› Understand relationships between apps
  Focus is on I/O sharing

Batch-Pipelined workloads
› Behavior of single applications has been well studied
  Sequential and parallel
› But many apps are not run in isolation
  End result is the product of a group of apps
  Commonly found in batch systems
  Run 100s or 1000s of times
› Key is sharing behavior between apps

Batch-Pipelined Sharing
[Figure labels: batch width, shared dataset, pipeline sharing, shared dataset]

3 types of I/O
› Endpoint: unique input and output
› Pipeline: ephemeral data
› Batch: shared input data

Outline
› Goals and intro
› Applications
› Methodology
› Results
› Implications

Six (plus one) target scientific applications
› BLAST - biology
› IBIS - ecology
› CMS - physics
› Hartree-Fock - chemistry
› Nautilus - molecular dynamics
› AMANDA - astrophysics
› SETI@home - astronomy

Common characteristics
› Diamond-shaped storage profile
› Multi-level working sets
  Logical collection may be greater than that used by the app
› Significant data sharing
› Commonly submitted in large batches

BLAST
[Figure labels: search string, blastp, matches, genomic database]
BLAST searches for matching proteins and nucleotides in a genomic database. It has only a single executable and thus no pipeline sharing.

IBIS
[Figure labels: inputs, analyze, forecast, climate data]
IBIS is a global-scale simulation of earth's climate used to study the effects of human activity (e.g. global warming). It is only one app and thus has no pipeline sharing.

CMS
[Figure labels: configuration, cmkin, raw events, configuration, cmsim, geometry, triggered events]
CMS is a two stage pipeline in which the first stage models accelerated particles and the second simulates the response of a detector. This is actually just the first half of a bigger pipeline.

Hartree-Fock
[Figure labels: problem, setup, initial state, argos, integral, scf, solutions]
HF is a three stage simulation of the non-relativistic interactions between atomic nuclei and electrons. Aside from the executable files, HF has no batch sharing.

Nautilus
[Figure labels: initial state, nautilus, intermediate, bin2coord, coordinates, rasmol, physics, visualization]
Nautilus is a three stage pipeline which solves Newton's equation for each molecular particle in a three-dimensional space. The physics which governs molecular interactions is expressed in a shared dataset. The first stage is often repeated multiple times.

AMANDA
[Figure labels: inputs, physics, corsika, raw events, corama, standard events, ice tables, mmc, noisy events, geometry, mmc, triggered events]
AMANDA is a four stage astrophysics pipeline designed to observe cosmic events such as gamma-ray bursts. The first stage simulates neutrino production and the creation of muon showers. The second transforms the output into a standard format, and the third and fourth stages follow the muons' paths through earth and ice.

SETI@home
[Figure labels: work unit, setiathome, analysis]
SETI@home is a single stage pipeline which downloads a work unit of radio telescope "noise" and analyzes it for any possible signs that would indicate extraterrestrial intelligent life. It has no batch data but does have pipeline data, as it performs its own checkpointing.

Methodology
› CPU behavior tracked with HW counters
› Memory tracked with usage statistics
› I/O behavior tracked with interposition
  mmap was a little tricky
› Data collection was easy. Running the apps was the challenge.
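
Once I/O has been traced, each file's traffic can be attributed to one of the three I/O types defined earlier in the talk (endpoint, pipeline, batch). The sketch below is only an illustration under stated assumptions, not the authors' tool: the trace record layout, the function name `classify_io`, and the classification rules (a file read by more than one pipeline instance and never written counts as batch; a file both written and read counts as pipeline; everything else counts as endpoint) are all hypothetical.

```python
from collections import defaultdict


def classify_io(trace):
    """Sum traffic per I/O role for a hypothetical trace.

    Each record is (pipeline_id, stage, path, op, nbytes), with op being
    "read" or "write". This record layout is an assumption for illustration.
    """
    trace = list(trace)  # the trace is scanned twice
    readers = defaultdict(set)  # path -> {(pipeline_id, stage)} that read it
    writers = defaultdict(set)  # path -> {(pipeline_id, stage)} that wrote it
    for pid, stage, path, op, _ in trace:
        (readers if op == "read" else writers)[path].add((pid, stage))

    def role(path):
        reading_pipelines = {pid for pid, _ in readers[path]}
        # Assumed rule: read-only input shared across pipeline instances.
        if len(reading_pipelines) > 1 and not writers[path]:
            return "batch"
        # Assumed rule: ephemeral data that is produced and then consumed.
        if writers[path] and readers[path]:
            return "pipeline"
        # Everything else: unique input or final output.
        return "endpoint"

    totals = {"endpoint": 0, "pipeline": 0, "batch": 0}
    for _, _, path, _, nbytes in trace:
        totals[role(path)] += nbytes
    return totals


# Hypothetical two-stage pipeline, loosely modeled on the CMS slide.
sample_trace = [
    (0, "cmkin", "config0", "read", 10),
    (0, "cmkin", "events0", "write", 100),
    (0, "cmsim", "events0", "read", 100),
    (0, "cmsim", "geometry", "read", 50),
    (1, "cmsim", "geometry", "read", 50),
    (0, "cmsim", "out0", "write", 20),
]
io_totals = classify_io(sample_trace)
```

In this sample, the `geometry` file is read by two pipeline instances and so counts as batch traffic, while `events0` is written by one stage and read by the next and so counts as pipeline traffic.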

Resources Consumed
[Figure: real time (hours, left axis) and memory and I/O (MB, right axis) for SETI, BLAST, IBIS, CMS, HF, Nautilus, and AMANDA]
› Relatively modest. Max BW is 7 MB/s for HF.

I/O Mix
[Figure: traffic (MB, log scale) split into endpoint, pipeline, and batch I/O for SETI, BLAST, IBIS, CMS, HF, Nautilus, and AMANDA]
› Only IBIS has a significant ratio of endpoint I/O.

Observations about individual applications
› Modest buffer cache sizes sufficient
  Max is AMANDA, needs 500 MB
› Large proportion of random access
  IBIS, CMS close to 100%, HF ~ 80%
› Amdahl and Gray balances skewed
  Drastically overprovisioned in terms of I/O bandwidth and memory capacity

Observations about workloads
› These apps are NOT run in isolation
  Submitted in batches of 100s to 1000s
› Large degree of I/O sharing
  Significant scalability implications

Scalability of batch width
[Figure labels: Storage center (1500 MB/s), Commodity disk (15 MB/s)]

Batch elimination
[Figure labels: Storage center (1500 MB/s), Commodity disk (15 MB/s)]

Pipeline elimination
[Figure labels: Storage center (1500 MB/s), Commodity disk (15 MB/s)]

Endpoint only
[Figure labels: Storage center (1500 MB/s), Commodity disk (15 MB/s)]

Conclusions
› Grid applications do not run in isolation
› Relationships between apps must be understood
› Scalability depends on semantic information
  Relationships between apps
  Understanding different types of I/O

Questions?
› For more information:
  Douglas Thain, John Bent, Andrea Arpaci-Dusseau, Remzi Arpaci-Dusseau, and Miron Livny, Pipeline and Batch Sharing in Grid Workloads, in Proceedings of High Performance Distributed Computing (HPDC12).
  http://www.cs.wisc.edu/condor/doc/profiling.pdf
  http://www.cs.wisc.edu/condor/doc/profiling.ps
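
The scalability slides compare a storage center (1500 MB/s) with a commodity disk (15 MB/s) as more I/O types are kept off the shared server. As a rough back-of-the-envelope sketch, the supportable batch width can be estimated by dividing the server bandwidth by the per-job traffic that still reaches it. This linear model, the function names, and the split of a job's traffic across endpoint, pipeline, and batch I/O are assumptions for illustration only. The 7 MB/s total matches the peak bandwidth the slides report for HF, but the split is invented and is not a measured mix for any application.

```python
def shared_demand(mix_mb_s, eliminated=()):
    """Per-job bandwidth (MB/s) that still hits the shared server.

    mix_mb_s maps an I/O role to its rate; roles in `eliminated` are assumed
    to be served locally and therefore cost the shared server nothing.
    """
    return sum(rate for role, rate in mix_mb_s.items() if role not in eliminated)


def max_batch_width(server_mb_s, mix_mb_s, eliminated=()):
    """Largest number of concurrent jobs under a simple linear model."""
    demand = shared_demand(mix_mb_s, eliminated)
    if demand <= 0:
        return float("inf")  # nothing reaches the server in this model
    return int(server_mb_s // demand)


# Hypothetical per-job traffic split; only the 7 MB/s total echoes the slides.
job_mix = {"endpoint": 0.5, "pipeline": 4.0, "batch": 2.5}

servers = {"storage center": 1500, "commodity disk": 15}
scenarios = {
    "all traffic": (),
    "batch elimination": ("batch",),
    "pipeline elimination": ("pipeline",),
    "endpoint only": ("batch", "pipeline"),
}

for server_name, bandwidth in servers.items():
    for scenario_name, eliminated in scenarios.items():
        width = max_batch_width(bandwidth, job_mix, eliminated)
        print(f"{server_name:15s} {scenario_name:22s} {width} jobs")
```

Under these invented numbers, a commodity disk saturates at 2 concurrent jobs when it carries all traffic and at 30 when it carries only endpoint I/O, which illustrates why the talk ties scalability to knowing which type each I/O belongs to.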