Assessment Facilitators Group Meeting Minutes September 26, 2014

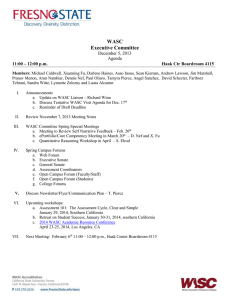


Attending: Melinda Jackson, Robin Love, Diane Wu, Carol Reade, Julie Sliva Spitzer, Elaine Collins, Lynne Trulio, Hilary Nixon, Dennis Jaehne, Cami Johnson, Brandon White, Jinny Rhee, Minnie Patel, Scot Guenther, Cheryl Allmen, Demerris Brooks (DOUBLE CHECK)

Meeting convened at 12:10 pm.

Welcome and introductions of group members and guests Brandon White, Chair of the Program Planning Committee, and Cami Johnson, Chair of the WASC Steering Committee.

WASC Accreditation Updates: Cami Johnson updated the group on the purpose of the Inventory of Educational Effectiveness Indicators (IEEI) spreadsheet required by WASC, and answered questions about the level of detail needed in the descriptive information for this spreadsheet. She also reminded the group about the next steps in the WASC accreditation process: a conference call with campus leadership on November 12 to identify “lines of inquiry” for follow-up, and an onsite campus visit on April 14-16, 2015. AF group members should plan to be available to meet with the WASC onsite team during those dates, if needed. Cami also announced a plan for a student work showcase during the WASC campus visit, and asked group members to suggest appropriate student research, scholarship, creative activities, or other achievements to be included in this showcase.

Discussion of new assessment form used in 2013-14 reports: Group members reported that the new form was more work for chairs and/or department assessment coordinators than the previous report template (partly because it was new). Some questioned the relevance of the graduation and retention rates included in Part B of the new form, as these seemed disconnected from the assessment of student learning. Melinda Jackson clarified that graduation and retention rates are considered “indirect” assessment measures, while the assessment of specific Program Learning Outcomes (PLOs) is considered a “direct” measure.
She suggested that both types of assessment data are important, and that programs may find it useful to track trends in their graduation and retention rates on an annual basis, particularly given the new budget model, which gives departments more control of and responsibility for their individual budgets. Scot Guenther mentioned that some chairs in the College of Humanities needed help accessing the Required Data Element (RDE) numbers from the Institutional Effectiveness and Analytics (IEA) office, but that becoming more familiar with these numbers proved valuable to them once they were able to review the data.

Discussion of plan to provide feedback on annual assessment reports: The group discussed whether to follow the same model as last year, in which college AFs provided feedback to the programs in their colleges based on the WASC PLOs rubric. An alternative model, in which cross-college teams would each consider a set number of programs, was discussed, but the group ultimately decided to continue using the previous model.

ACTION: AFs will begin reviewing annual assessment reports from the programs in their colleges, with the goal of providing written feedback to all programs by the end of the Fall 2014 semester. Deadline: December AF meeting (December 19, 2014).

Proposal to present case studies of assessment in different programs at each AF group meeting this year.

ACTION: College of Social Sciences will present on the Environmental Studies programs at our next meeting in October; College of Engineering will present in November.

The group discussed how assessment reports should be organized for departments with multiple programs. One option is to combine the reports for all programs into a single document, with each program listed sequentially. Another option is to require a separate assessment report for each program, although this may create a burden for departments with multiple programs.
Dennis Jaehne suggested that the end-user experience should serve as a guide. Since assessment reports should be listed separately for each program on the UGS program records webpage, it is desirable to have a separate report for each program, so that users can find the information they are seeking as easily as possible. After some discussion, the group agreed to table this issue.

ACTION: Revisit this question at a future meeting, and make a decision in order to provide clear guidance for 2014-15 assessment reports.

Some questions have come up regarding requirements for the assessment of minors. Melinda Jackson clarified that if a minor is required as part of a degree program, then the way(s) in which the minor contributes, at least in part, to fulfillment of the PLOs should be made clear in the program planning process. The group discussed the implications of this, and it was further clarified that this need not involve detailed documentation of direct assessment at the level of courses within the minor, but simply an explanation of how the (required) minor contributes to the learning outcomes of the program (e.g., breadth of knowledge). Required minor courses should also be mapped to the PLOs.

Cheryl Allmen presented an update on the progress in Student Affairs assessment over the last year, including (FILL IN DATA ON NUMBER OF PROGRAMS ETC.)