advertisement

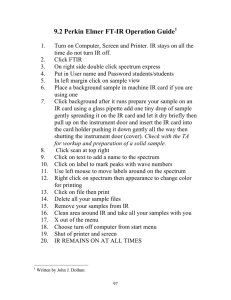

>> Vani Mandava: So welcome, everyone. It's with great pleasure that I introduce Ben Chidester, who is an intern on the Microsoft Research Outreach team this summer. He's a Ph.D. student at the University of Illinois at Urbana-Champaign, advised by Professor Minh Do. His research interests lie in image processing and statistical learning, and his work focuses on applications in the biomedical imaging domain. He's done some great work with us this summer, applying his problem on Azure, applying deep neural networks and trying some different implementations on Azure. His talk today is titled "Cell Classification of FT-IR Spectroscopic Data for Histopathology Using Neural Networks." Welcome, Ben.

>> Ben Chidester: Thank you. Thanks for the introduction, Vani, and thanks for joining. So Vani introduced the title already. There are a lot of things in there that I want to go through a little bit at a time, to introduce what all the terms mean. As a brief outline of what this talk will include: first I'll talk about the application of FT-IR, or Fourier transform infrared, spectroscopy for histopathology, some of the current challenges with that work, and the motivation for using deep neural networks with this FT-IR data. I'll also talk about implementing the DNNs on Azure and what Azure brings to the table. And then finally, culminating all these different steps, is actually classifying cells from FT-IR data to aid in the process of histopathology.

So to begin, I'll start with just describing a bit about what Fourier transform infrared spectroscopy is. It's a type of chemical imaging that uses infrared light. Infrared light is emitted from a source and it passes through a beam splitter which allows -- you have a translating mirror here. So you can change the phase of the light that's returning to the original source.
And they interfere with one another and pass through the sample, they're detected, and this detector records what's called an interferogram, which, if you take the Fourier transform, can give you a measure of absorption. As this infrared light passes through the sample, it resonates with some of the vibrational frequencies of functional groups of molecules, and then it's absorbed. Otherwise it passes through. So we get a spectrum here of, for different frequencies of infrared light, how much is absorbed and how much passes through. And then for each pixel, or each spot in the sample, we have a spectrum, and if we put them all together we get a 3-D volume. If I look down one pixel through the 3-D volume, I'll see the spectrum. And at each frequency I have an image, which is an absorption map. So for every frequency I'll have a different image. So that's the way that FT-IR works. In the end you have not one single image but actually this 3-D volume.

The resolution of FT-IR is about five microns. So in each one of these spectra we're seeing the response of possibly a pure region of one cell, but possibly several cells overlapping. This spectrum actually tells us a lot about the molecular, chemical composition of what's inside the sample. So that's where the power comes in.

So, normal histopathology. If you go to a doctor to look at a tumor, to see if it's cancerous or not, the doctor would do a biopsy and extract a core from the site, and the core is sliced very thinly into different slices. Each one of these slices is then stained, using something like H&E staining, and from this a pathologist will observe the structure under a microscope, and from the structure will decide whether it's cancerous, or the grading of the cancer. So they're looking for things in this structure of the data.
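As an illustration of the 3-D volume described in the talk (this is not code from the talk), the sketch below builds a tiny synthetic cube and shows the two views the speaker mentions: looking down through one pixel to get its spectrum, and slicing at one frequency to get an absorption map. The function names, the nested-list layout `cube[row][col][band]`, and all sizes and values are assumptions made for the example.

```python
# Toy FT-IR data cube: cube[row][col][band] holds an absorbance value.
# Sizes and values are invented for illustration only.

def make_cube(rows, cols, bands):
    """Build a small synthetic cube whose values encode their own position."""
    return [[[row * 100 + col * 10 + band for band in range(bands)]
             for col in range(cols)]
            for row in range(rows)]

def pixel_spectrum(cube, row, col):
    """Look 'down' through the volume at one pixel: one value per band."""
    return list(cube[row][col])

def band_image(cube, band):
    """Slice the volume at one frequency band: a 2-D absorption map."""
    return [[pixel[band] for pixel in row] for row in cube]

cube = make_cube(rows=2, cols=3, bands=4)
spectrum = pixel_spectrum(cube, 1, 2)   # spectrum at row 1, column 2
image = band_image(cube, 0)             # absorption map for band 0
```

In a real dataset the last axis would hold the measured absorbance at each frequency band rather than these placeholder numbers.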
So with FT-IR we're proposing to replace this staining stage. Instead of doing the staining, we actually image each slice and we have this 3-D volume of spectra, and then from the spectrum at each pixel I can tell a lot about the molecular composition, and I can actually create a map of the different cell types that are found there. Some of the key players are things like epithelium or collagen or fibroblasts. From this, a pathologist could look at this map and make a diagnosis about cancer or the grading of cancer. And what we'd like to do is automate this task of labeling using machine learning.

So that's the current process. But this hasn't really seen much clinical use yet because of a couple of different drawbacks. One of the main ones is that the imaging is very slow. Imaging a core could take about a day, and if you're trying to see several patients a day, this is kind of prohibitive. We want to see more like maybe 30 minutes for a patient's core, not a day. The other issue is that knowing what the cell label should be requires a lot of insight from a pathologist, or someone who is trained to look at the spectrum and say what this cell type is. And so the features that are currently being looked at for doing this classification, these metrics, are designed by pathologists. Another problem is that these features are specific to each tissue type. The spectrum for a certain cell type may change from breast to prostate tissue. So if we want to extend it to a new tissue type, then we have to create new features each time and tune them and engineer them and see which ones work the best.

So this is what my project is looking at: this pipeline of classifying this data. I have my spectrum as input, I'd have some sort of feature extraction and classification stage, and then at the output I would have a cell label.
So what we're proposing is to look at deep learning for doing this classification process. In deep learning, I no longer have the feature extraction stage. I actually just have a neural network, and I can feed into my neural network just the individual data points themselves, the absorptions at each frequency, as input nodes to a network, and I can train this network and get out the class label.

Neural networks have a lot of advantages. One of the key ones for us is learning the features automatically. I no longer need the pathologist to tell me what things are important or what kind of metrics I should be looking for. I can actually try to learn these on my own without any prior knowledge. And it's also been shown to provide very high classification accuracy in a lot of problems like speech and image classification. But we also face some challenges with using deep learning. If anyone's worked with deep learning, you know it requires a significant amount of computational resources and parameter tuning. And what is also a challenge is that the more data the better, which is a good thing but also can be a bad thing. If you have enough data it can work well, but if you're limited on data it can be a problem.

So helping with this computational resource constraint and parameter tuning is where Azure comes into play. Using Azure for data science problems, we can actually create lots of different virtual machines in the cloud, which I can access through the Azure portal or through SSH. I can have Windows or Linux machines. I can have storage, and I can store on my online storage accounts a virtual hard disk containing my data, or blobs, Azure's type of file storage. And I also have access to APIs in Python and different languages to access these virtual machines and my data. In terms of parallelizing or distributing this task, there are kind of two approaches that are used, or that we could take. So let's see.
The simplest approach would be what we could call embarrassingly parallel, which is the terminology used in this area. Basically, on each virtual machine I just have a neural network, and I train them independently of each other. And maybe I have a head node that kind of organizes and distributes the workloads. When one virtual machine is done it reports back, and the head node gives it a new job. A more sophisticated method would be something that's very I/O intensive: maybe I'm training one network and I'm distributing the work across many virtual machines, possibly asynchronously, and they're all kind of doing different tasks and reporting back to the head node. So this is the more sophisticated approach, and this is the simpler approach. The I/O intensive one might be the ideal, but I think just doing this embarrassingly parallel approach, there's lots to be gained, as a lot of the work and time that's spent in deep learning is in tuning these hyperparameters, which we can do without the need for this more intensive parallelization.

Also, if you look at the libraries out there, the limitation is that not many libraries allow for this kind of intense parallelization. There's a lot on this slide, but if you work with deep learning maybe you're familiar with some of these libraries. There are a couple I'd like to point out, and the one in particular I'm using is Theano, or Pylearn2, which is built on top of Theano. It offers a lot of deep learning networks already implemented. It's quite actively maintained, and as new features in deep learning are coming out they're pretty good about adding them into the code base. Other good ones are Caffe and Torch7, Caffe especially if you're interested in doing convolutional neural networks, which is something I may turn to in the future. I definitely would recommend looking at those as well. But mostly I picked Pylearn2 because it's very easy to use and it's in Python, which is something I'm familiar with.
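The embarrassingly parallel search described in the talk can be sketched roughly as follows. This is a minimal illustration, not the speaker's setup: a local thread pool stands in for the pool of virtual machines, `train_and_score` is a hypothetical placeholder that returns a made-up score instead of training a network, and the learning-rate values are assumptions. Only the ranges of layers and nodes echo numbers mentioned in the talk.

```python
from concurrent.futures import ThreadPoolExecutor
from itertools import product

def train_and_score(config):
    """Hypothetical stand-in for training one network on one machine.

    Returns a fake, deterministic 'validation accuracy' so the sketch
    runs without any data or deep learning library.
    """
    layers, nodes, learning_rate = config
    return 0.5 + 0.01 * layers + 0.0001 * nodes - learning_rate

def grid_search(layer_counts, node_counts, learning_rates, workers=5):
    """Head-node role: hand each configuration to a free worker."""
    configs = list(product(layer_counts, node_counts, learning_rates))
    with ThreadPoolExecutor(max_workers=workers) as pool:
        # Each job is independent, so no communication between workers is needed.
        scores = list(pool.map(train_and_score, configs))
    best_score, best_config = max(zip(scores, configs))
    return best_config, best_score

best_config, best_score = grid_search([2, 3, 4], [50, 500], [0.01, 0.1])
```

Because the jobs never talk to each other, the same pattern carries over to separate machines: only the configuration goes out and only the score comes back.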
And it's pretty easy just for getting started with. If you're interested in knowing more about these libraries, we can talk afterward.

So I'll describe my setup in Azure, how I'm using Azure to do this deep learning training. If you start with the portal in the cloud, I can create my virtual machine through the portal, and I can SSH into it, make a Linux machine, for instance, and I can install all of my favorite Python libraries or whatever libraries you may want to include. And I can create a storage account, which I hook to the VM and where I store my FT-IR dataset. So that's what I currently have operating. Once I have this VM created, I just copy it and can make more instances of the same VM and connect each one of them to the same dataset. I access all of them through SSH, and using Python scripts I can kind of delegate different tasks or different networks to each of the machines.

So I want to show a little bit of my Azure setup. Let's see. The portal is really easy to access. It's just manage.windowsazure.com. But I don't seem to have the server. Let's see. Oh. Lost connectivity. Let's try again. Okay. Here we go. So I can load up the portal and it shows all of the items that I have. I can look at my virtual machines, and here I have five virtual machines running. I work in a group at Illinois, CIG, so I call them CIG Pylearn and then the instance number. I can look at the dashboard for one of these, and currently it's not doing too much. This is an eight-core machine and it has 14 gigabytes of memory. With Azure for Research you can get up to 32 cores, which I'm using currently, ranging in different sizes. And I can also have my storage accounts. Whoops. Here we go. So I have a storage account called CIG General Store.
And on here I have my containers, virtual hard drives, and I can see all the hard disks for the operating systems of the virtual machines, and this top one, BRC 961, which is the FT-IR dataset that I'm using. On here it's really easy to create new virtual machines or create new storage accounts. Very easy to manage everything. The other great thing about this is that I don't have to worry about actually managing all the hardware of a cluster or server. Our group at Illinois has an old server that we got from another group, so it's basically not usable. It's so old that it's obsolete in terms of what's currently out there. But with Azure I have access to the most recent hardware, and I don't have to worry about maintaining the hardware or backing things up. So it's pretty nice in that sense.

Okay. So now I'll talk about actually doing cell class labeling using FT-IR. The dataset that we have for our experiments has about 97 samples. If you can see, each one of these is a sample, like a slice taken from a core, from a biopsy. Each sample is about 200 by 200 pixels, and each pixel is a spectrum, so about 2 million spectra, of which about 500,000 are labeled. And this is just one image for one frequency, the response, but we would have an image for each different frequency band, so 501 bands. There are seven different cell types that we're looking at. The ones that are labeled were labeled by a specialist, and you'll notice there are some holes in the data. Those are not missing cells or anything. It's that sometimes the cells may be constituted of different cell types or may be hard to identify. So the ones we have labeled are those where it's considered certain which cell type it is, so we use them for training. I split it up into about 70 percent training, 10 percent validation, and 20 percent testing. And this is the setup that I am using.
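A 70/10/20 split like the one mentioned in the talk could be sketched as below. This is an assumption-laden illustration: the talk does not say whether the split was made per spectrum or per sample, nor how it was randomized, so the per-index shuffle, the fixed seed, and the name `split_indices` are all hypothetical.

```python
import random

def split_indices(n_items, train_frac=0.7, valid_frac=0.1, seed=0):
    """Shuffle item indices and cut them into train/validation/test lists.

    Whatever is left after the train and validation cuts becomes the test set.
    """
    indices = list(range(n_items))
    random.Random(seed).shuffle(indices)  # fixed seed keeps the split repeatable
    n_train = round(n_items * train_frac)
    n_valid = round(n_items * valid_frac)
    train = indices[:n_train]
    valid = indices[n_train:n_train + n_valid]
    test = indices[n_train + n_valid:]
    return train, valid, test

# Small stand-in count; the talk mentions roughly 500,000 labeled spectra.
train, valid, test = split_indices(1000)
```

If neighboring pixels from the same sample are strongly correlated, splitting by sample instead of by spectrum may give a more conservative accuracy estimate.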
So I extract the spectra from the 3-D volume and I just store all the spectra in a big 2-D matrix. Each row is a spectrum, and across the row is the response for different frequencies, and then I just feed this spectrum into the neural network. This is actually a little bit misleading, because there's not one node at the output but actually seven, one for each of the classes, each node representing the probability of that class. For the experiments, I use two to four layers. But this is kind of preliminary work, so there's no reason not to go for more. I'll be looking to try more layers and different configurations. I'm also just using five to 500 nodes per layer, but there's no reason we can't go higher. I'm just using the entire spectrum for the input layer, so 501 nodes. If you're familiar with deep learning, there are a lot of different activation functions you can use. It's another part of the tuning. I'm using rectified linear units, and softmax regression for the output. And then at the output we'd be getting just one label per spectrum, which together would constitute an entire cell map.

Here are some results I've gotten from some of the networks that did the best, using the rectified linear units for activation and then some different configurations of layers and nodes. This is just the number of nodes per layer, for the first layer, second layer, and so on, and the accuracy is close to 90 percent. But I think there's a lot of room for improvement. That's part of the deep learning work: continuing to search different sets of hyperparameters, different configurations, and different learning rates to get better results. So that will be something I'll be looking toward in the future, improving these results. Let's see.

Okay. So in conclusion, with FT-IR spectroscopy there's a lot of potential to provide quantitative, not just qualitative, morphological and molecular information for histopathology.
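To make the network shape mentioned in the talk concrete (501 inputs, hidden layers of rectified linear units, seven softmax outputs), here is a minimal forward-pass sketch in plain Python. It is not the Pylearn2 model from the talk: the weights are random and untrained, the hidden sizes 100 and 50 are arbitrary picks, and the input spectrum is random numbers, so the predicted class is meaningless beyond showing the data flow.

```python
import math
import random

def relu(values):
    """Rectified linear unit applied element-wise."""
    return [max(0.0, v) for v in values]

def softmax(values):
    """Turn raw scores into probabilities that sum to 1."""
    shift = max(values)  # subtract the max for numerical stability
    exps = [math.exp(v - shift) for v in values]
    total = sum(exps)
    return [e / total for e in exps]

def dense(inputs, weights, biases):
    """Fully connected layer; weights[j] are the incoming weights of node j."""
    return [sum(w * x for w, x in zip(row, inputs)) + b
            for row, b in zip(weights, biases)]

def init_layer(n_in, n_out, rng):
    """Small random weights and zero biases (untrained)."""
    weights = [[rng.gauss(0.0, 0.01) for _ in range(n_in)] for _ in range(n_out)]
    return weights, [0.0] * n_out

def forward(spectrum, layers):
    """ReLU on every hidden layer, softmax on the output layer."""
    activations = spectrum
    for weights, biases in layers[:-1]:
        activations = relu(dense(activations, weights, biases))
    weights, biases = layers[-1]
    return softmax(dense(activations, weights, biases))

rng = random.Random(0)
sizes = [501, 100, 50, 7]  # 501 bands in, 7 cell types out; hidden sizes assumed
layers = [init_layer(n_in, n_out, rng) for n_in, n_out in zip(sizes, sizes[1:])]
spectrum = [rng.random() for _ in range(501)]  # one row of the 2-D spectra matrix
probs = forward(spectrum, layers)
predicted_class = probs.index(max(probs))
```

Applying `forward` to every row of the spectra matrix and taking the most probable class per pixel would give the kind of cell map the talk describes.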
And there's still a great need in histopathology for more accurate diagnosis, so we're hoping that FT-IR can aid greatly in that. Using deep neural networks allows for accurate cell classification, which we're hoping to continue to improve, without the need for doing feature engineering or having domain knowledge. And with Azure I was able to parallelize this parameter search, which greatly expedites the process of searching for hyperparameters.

So to acknowledge just some people who were helping: I'd like to thank Vani, my mentor, and Harold, just for the opportunity to intern at Microsoft, and some of the staff I talked with. Trishul was really helpful, with great insight. Other interns like David, thanks for their helpful conversations. And my advisor back at Illinois, and the group we work with at Illinois, Rohit Bhargava and other members of his group. So that concludes my talk. Thanks for your attention, and if you have any questions feel free to ask.

>>: Can you talk about future direction?

>> Ben Chidester: Yeah, I don't think I have a slide for it in here, actually. So let's see. I think in the future -- that's not it. Let's just go back to the spectroscopy picture. One thing that we're hoping to do is try to take more advantage of the spatial information that we have, so not just labeling each spectrum individually but trying to make use of the entire 3-D volume. That's one thing. And also, if I go back to this histopathology slide, the ultimate goal is really to automate this whole process. So maybe we get to the cell map, and then from the cell map, using morphological features, we do machine learning on top of this to create a diagnosis or grading of cancer, or to label pixels as cancerous. Not necessarily to replace the pathologists, but there's a great need for a second opinion.
So there can often be a lot of inconsistency between pathologists in diagnosis, or even a single pathologist doing a subsequent diagnosis of the same sample can give different diagnoses. So we're hoping that an automated histopathology process could actually aid a doctor by giving a second opinion. But that's the future goal, the direction of it. Any other questions?

>>: Just kind of a curiosity: how long does it take to go through the process of labeling, once the neural network has been trained, the single spectra from the cores?

>> Ben Chidester: Once it's trained it's quite fast. It's mostly the training that takes a long time. Training the networks can take a few hours, or several hours, usually, per configuration. That's what I've found so far. But labeling is pretty quick, yeah.

>>: Do you use the I/O intensive training?

>> Ben Chidester: No, I'm not using that. I'm not using this method. I'm just using the simple embarrassingly parallel one.

>>: Different virtual machines that try to train different types of --

>> Ben Chidester: Exactly. Each machine has its own configuration of neural network. It trains on its own. Projects like ADAM do this other style of parallelization. Or BeliefNet [phonetic] by Andrew, I think, who does something like this. But I'm not doing that, yeah.

>>: Another question about the images. So when you do an FT-IR image it's not a single slide, right, it's 3-D? When you do a biopsy it's a 3-D mass or not, or is it like --

>> Ben Chidester: Yeah -- I didn't get that. Let's see, where was it here?

>>: [indiscernible] does that mean that it -- if I have a volume from a CT scan, I would say every slide is -- so when you make a 3-D volume from a CT scan it just describes the organ in 3-D, but here it's not the case, right? This is for a cell in 2-D.

>> Ben Chidester: It actually would be like 4-D technically. It should be 4-D. Because for each slice in the core you have a 3-D volume. And you'd have, so the fourth dimension is --

>>: In that sense, how are you classifying it?

>> Ben Chidester: I'm only considering one slice of the core, not the whole thing. Yeah. So I'm using just that one slice, imaging it, which gives you the 3-D volume, and then doing the labeling from there.

>>: You said 97 samples. Is it 97 slices?

>> Ben Chidester: Slices from the cores, exactly. Thanks for your attention.

[applause]