University user perspectives of the ideal computing environment and SLAC’s role

Bill Lockman

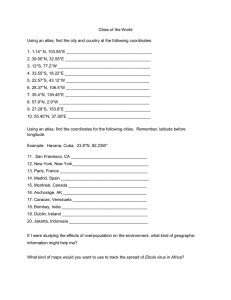

July 17, 2009, SLUO/LHC workshop, Computing Session

Outline:
• View of the ideal computing environment
• ATLAS computing structure
• T3 types and comparisons
• Scorecard

My view of the ideal computing environment

• Full system support by a dedicated professional
  • hardware and software (OS and file system)
• High bandwidth access to the data at desired level of detail
  • e.g., ESD, AOD, summary data and conditions data
• Access to all relevant ATLAS software and grid services
• Access to compute cycles equivalent to purchased hardware
• Access to additional burst cycles
• Access to ATLAS software support when needed
• Conversationally close to those in same working group
  • Preferentially face to face

These are my views, derived from discussions with Jason Nielsen, Terry Schalk (UCSC), Jim Cochran (Iowa State), Anyes Taffard (UCI), Ray Frey, Eric Torrence (Oregon), Gordon Watts (Washington), Richard Mount, Charlie Young (SLAC)

ATLAS Computing Structure

• ATLAS world-wide tiered computing structure where ~30 TB of raw data/day from ATLAS is reconstructed, reduced and distributed to the end user for analysis
• T0: CERN
• T1: 10 centers world wide. US: BNL. No end user analysis.
• T2: some end-user analysis capability @ 5 US centers, 1 located @ SLAC
• T3: end user analysis @ universities and some national labs.
• See ATLAS T3 report: http://www.pa.msu.edu/~brock/file_sharing/T3TaskForce//final/TierThree_v1_executiveFinal.pdf

Data Formats in ATLAS

| Format | Description | Size (MB)/evt |
|---|---|---|
| RAW | data output from DAQ (streamed on trigger bits) | 1.6 |
| ESD | event summary data: reco info + most RAW | 0.5 |
| AOD | analysis object data: summary of ESD data | 0.15 |
| TAG | event level metadata with pointers to data files | 0.001 |

Derived Physics Data: ~25 kb/event, ~30 kb/event, ~5 kb/event

Possible data reduction chain

(possible scenario for “mature” phase of ATLAS experiment)

T3g

T3g: Tier3 with grid connectivity (a typical university-based system):
• Tower or rack-based
• Interactive nodes
• Batch system with worker nodes
• ATLAS code available (in kit releases)
• ATLAS DDM client tools available to fetch data (currently dq2-ls, dq2-get)
• Can submit grid jobs
• Data storage located on worker nodes or dedicated file servers
• Possible activities: detector studies from ESD/pDPD, physics/validation studies from D3PD, fast MC, CPU intensive matrix element calculations, ...

A university-based ATLAS T3g

• Local computing a key to producing physics results quickly from reduced datasets
• Analyses/streams of interest at the typical university:

| performance ESD/pDPD at T2 | # analyses |
|---|---|
| e-gamma | 1 |
| W/Z(e) | 2 |
| W/Z(m) | 2 |
| minbias | 1 |

| physics stream (AOD/D1PD) at T2 | # analyses |
|---|---|
| e-gamma | 2 |
| muon | 1 |
| jet/missEt | 1 |

• CPU and storage needed for first 2 years: 160 cores, 70 TB

A university-based ATLAS T3g

Requirements matched by a rack-based system from T3 report:

The university has a 10 Gb/s network to the outside.
Group will locate the T3g near campus switch and interface directly to it.

• 10 kW heat
• 320 kSI2K processing

Tier3 AF (Analysis Facility)

Two sites expressed interest and have set up prototypes
• BNL: Interactive nodes, batch cluster, PROOF cluster
• SLAC: Interactive nodes and batch cluster

T3AF: University groups can contribute funds / hardware
• Groups are granted priority access to resources they purchased
• Purchase batch slots
• Remaining ATLAS may use resources when not in use by owners

SLAC-specific case:
• Details covered in Richard Mount’s talk

University T3 vs. T3AF

University, advantages:
• Cooling, power, space usually provided
• Control over use of resources
• More freedom to innovate/experiment
• Dedicated CPU resource
• Potential matching funds from university

University, disadvantages:
• Limited cooling, power, space and funds to scale acquisition in future years
• Support not 24/7, not professional. Cost may be comparable to that at T3AF
• Limited networking and networking support
• Access to databases
• No surge capability

T3AF, advantages:
• 24/7 hardware and software support (mostly professional)
• Shared space for code, data (AOD)
• Excellent access to ATLAS data and databases
• Fair share mechanism to allow universities to use what they contributed
• Better network security
• ATLAS release installation provided

T3AF, disadvantages:
• A yearly buy in cost
• Less freedom to innovate/experiment by university
• Must share some cycles

Some groups will site disks and/or worker nodes at T3AF, interactive nodes at university

Qualitative score card

| | University T3g | T3AF |
|---|---|---|
| Full system support by a dedicated professional | no | generally yes |
| High bandwidth access to the data at desired level of detail | variable | good |
| Access to all relevant ATLAS software and grid services | variable | yes |
| Access to compute cycles equivalent to purchased hardware | yes | yes |
| Access to additional burst cycles (e.g., crunch time analysis) | generally not | yes |
| Access to ATLAS software support when needed | generally yes | yes |
| Cost (hardware, infrastructure) | some | being negotiated |

• Cost is probably the driving factor in hardware site decision
• hybrid options are also possible

A T3AF at SLAC will be an important option for university groups considering a T3

Extra
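Worked example (not from the original slides): the per-event sizes on the "Data Formats in ATLAS" slide and the ~30 TB/day RAW figure on the "ATLAS Computing Structure" slide can be combined into a rough daily-volume estimate per format. The sketch below assumes decimal units (1 TB = 10^6 MB) and that every RAW event is kept in every format. Both are simplifying assumptions, and the function and constant names are illustrative only.

```python
# Per-event sizes in MB, copied from the "Data Formats in ATLAS" slide.
SIZE_MB_PER_EVENT = {"RAW": 1.6, "ESD": 0.5, "AOD": 0.15, "TAG": 0.001}

RAW_TB_PER_DAY = 30.0   # "~30 TB of raw data/day" from the computing-structure slide
MB_PER_TB = 1_000_000   # assumption: decimal units, 1 TB = 10^6 MB


def events_per_day(raw_tb_per_day=RAW_TB_PER_DAY):
    """Number of events implied by the daily RAW volume."""
    return raw_tb_per_day * MB_PER_TB / SIZE_MB_PER_EVENT["RAW"]


def daily_volume_tb(raw_tb_per_day=RAW_TB_PER_DAY):
    """Daily volume in TB for each format, assuming no event selection."""
    n_events = events_per_day(raw_tb_per_day)
    return {fmt: n_events * size / MB_PER_TB
            for fmt, size in SIZE_MB_PER_EVENT.items()}


def days_of_storage(capacity_tb, fmt):
    """Days of unfiltered data in one format that fit in capacity_tb."""
    return capacity_tb / daily_volume_tb()[fmt]


if __name__ == "__main__":
    print(f"events/day: {events_per_day():.3g}")
    for fmt, tb in daily_volume_tb().items():
        print(f"{fmt}: {tb:.4g} TB/day")
    # 70 TB is the T3g storage figure quoted for the first 2 years.
    print(f"AOD days in 70 TB: {days_of_storage(70.0, 'AOD'):.1f}")
```

Under these assumptions, the 70 TB quoted for a T3g would hold only about 25 days of unfiltered AOD, which is consistent with the slides' emphasis on reduced datasets (pDPD, D1PD, D3PD) for university-scale analysis.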