Summary of Physics Analysis Perspectives and Detector Performance Interests
Peter Loch


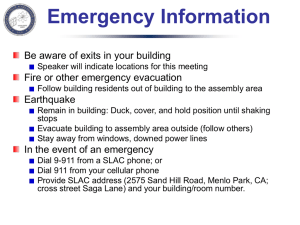

SLUO LHC Workshop, SLAC, July 16-17, 2009 (talk: July 17, 2009)
University of Arizona, Tucson, Arizona, USA

This Talk

Scope
- Some input from what I heard yesterday
  - West Coast physics analysis interest (Jason, Anna, …)
  - German analysis facility at DESY (Wolfgang)
- More emphasis on first-data performance evaluation
  - Underlying event & pile-up
  - Fast access to systematics of hadronic final-state energy scales: jets, taus, missing Et
- Some focus on a possible analysis facility role for SLAC
  - Resources and critical mass
  - Meetings & educational efforts

Biased views
- "Small" university group experience
  - Arizona is locally below critical mass for most studies of interest
- But excellent and long-standing connections to CERN and ATLAS (PL)
  - Personal connections to CERN for > 20 years
  - Arizona has been an ATLAS member for ~15 years
  - Well-established collaborations with INFN Pisa, INFN Milan, MPI Munich, CERN, ATLAS Canada, …
    - Sometimes limited to an intellectual-input role so far!
    - Long-standing (continuing) and short-lived (need-driven) collaborations
  - Some personal connections to theorists/phenomenologists
    - West Coast (SLAC: Peskin; Oregon: Soper; Washington: S.D. Ellis), FNAL/CERN (Skands, Mrenna), BNL (Paige), LPTHE/Paris VI (Salam/Cacciari), …
    - Not limited to a client role!

Critical Mass & Resources
What does critical mass mean?
- Sufficient local expertise "around the corner"
  - Detector, software, physics analysis
- Peers facing similar problems and/or challenges
  - Low communication thresholds for new graduate students etc.
- Common group interests
  - A significant number of experts and soon-to-be experts work closely together on related topics, e.g., Ariel's Jet/MET/b-tag group
  - Fast senior and peer review of new ideas and general progress
    - Less time lost with "wrong approaches"
  - Willingness to share new ideas at early stages and to "help out"
- Collaborative work environment
  - Usually not available to university groups (some exceptions!)

(Computing) Resources
- Locally available computing (CPU power, storage) is often limited for university groups
  - Historically no or little dedicated funding for computing requested
    - "Hardware group" tag with funding agencies
  - Different visions of the group's contribution to ATLAS
    - Distributed computing resources often deemed sufficient (Grid etc.; see next slide!)
- Funding crunch at state level does not help
  - Recently implemented new charges to groups at state universities
    - Cooling & power for larger systems (not so much an issue for Arizona so far!)
    - Loss of "free" operation support (loss of the system manager may become a problem for Arizona)

Physics Analysis Resources
ATLAS Computing Model for Physics Analysis (long term)
[Graph from Jim Cochran, this meeting]

Physics Analysis Aspects
- Mostly needs critical mass
  - Intellectual guidance, exchange and progress review are essential
  - Assuming we want to keep significant analysis activity in the US/on the West Coast
    - I think we should (entry point for new graduate students, already significant investments, …)!
- Resources provided by ATLAS distributed computing
  - Data extraction and compression by the official production system…
    - Managed by ATLAS physics groups
  - …or on a Tier2 "around the corner" for different filters
    - Reflecting local (US ATLAS etc.) group interest
- Local refined and final analysis needs far fewer resources
  - DnPD as simple as ROOT tuples
  - A Tier3g cluster, desktop or laptop can be sufficient, depending on the analysis
  - May have a somewhat relaxed time scale
    - Not always true, in particular for early data (competition with CMS, conferences, graduation deadlines, …)
- But what about performance?

On the Side…
Tier3 in US ATLAS
- Tier3s are not a US ATLAS resource
  - They are a local group resource!
- US ATLAS spends money to help with setting up Tier3s…
  - Help with dataset subscriptions, some software support (my understanding)…
  - Hardware and configuration recommendations to simplify the support role
  - …but they are not running it for you!
- Tier2s or larger groups may offer to host a smaller group's computing
  - At a price: $ or fractional use of the equipment by the host are being discussed
  - Helpful if there is no or little local computing support
  - Contractual limitations in base programs may require funding agency agreements/permissions
- Requests for American Recovery & Reinvestment Act (ARRA 2009) funds by universities
  - Not only computing
    - Arizona: ~25% requested for computing, the rest for replacement of obsolete electronic lab equipment
  - Not in direct competition with US ATLAS Tier1/2 funding
  - Invitation to apply fully supported by US ATLAS, including per-group recommendations for the scale of computing hardware to acquire! Thanks to Jim!
  - Unique chance for university groups to get ready for analysis of first data!

Performance Tasks for First Data
- Need a flat jet response rather quickly
  - Basis for first physics analyses, e.g., the inclusive jet cross-section
  - Systematics can dominate the total error already at 10-200 pb^-1
    - Depending on final state, kinematic range considered, fiducial cuts, …
- Requires understanding of the hadronic response (Jason, Ariel, …)
  - Minimum bias: single charged hadron response of the calorimeter; pile-up with tracks and clusters
  - Di-jets: underlying event with tracks and clusters; direction-dependent response corrections
  - Prompt photons: absolute scale by balancing, missing Et projection fraction, etc.
- Initial focus on simple approaches
  - Least number of algorithms
  - Least numerical complexity
  - Realistic systematic error evaluation (conservative is OK for first data!)
- But also try new or alternative approaches!
[Figures (plots from CERN-OPEN-2008-020): a 1.6 < pT < 2.4 GeV panel comparing "single" simulations with "extracted from minbias"; jet response R for calorimeter signals vs. pT]

JES Systematics (Example)
[Figures from K. Perez, Columbia U.]
- Systematic error evaluations for the JES
  - Require understanding of the effect of the calorimeter signal choice in real data
    - Towers, noise-suppressed towers, topological cell clusters
    - Corresponding algorithm tuning (mostly noise selection criteria)
  - Need to consider at least two different final states
    - Can be di-jets, photon+jet(s), single track response
  - May need repeated partial or full event re-reconstruction
    - Need calorimeter cells to study different signals
    - Dataset with the most details: ESD or PerfDPD
- Considerations for MC-based calibrations
  - Study the change of response from calibrated (jet) signals for different hadronic shower models
    - Calibration determined with one model, applied to simulated signals from another

JES Systematics Resource Needs (1)
- Iterative reconstruction with quick turn-around
  - Large (safe) statistics to focus on systematic effects
    - Can be several 100k ESD events for each final-state pattern (photon triggers, jet triggers, MET triggers, combinations, …)
    - >1M for minimum bias!
    - CPU ~1 min/event, disk size ~1.5 MB/event
    - Need to save at least 2 iterations
  - Change configurations from the default reconstruction
    - Run on cells from the default reconstruction
    - Need for cell signal re-reconstruction (RAW data) not obvious in the jet context
- Maintenance of configurations
  - Book-keeping
  - Potentially several rounds to explore parameter space(s)
  - Semi-private production scenarios feeding back to the official reconstruction
- Highly efficient access to significant resources
  - Speed is an issue: maybe not quite within the 24-48 hour gap between data taking and reconstruction, but close!
  - Local CPU and disk space
    - Cannot afford dependence on grid efficiency
    - Need clear tracebacks in case of problems (reports/output files lost on the grid?)
  - Database access
    - Latest conditions data need to be available locally, e.g., from CAF etc.

JES Systematics Resource Needs (2)
- Changing hadronic shower models in G4 (Geant4)
  - Not clear that appropriate resources are available in the production system…
    - Especially after data taking starts!
  - …but an important part of the evaluation of systematic uncertainties!
    - All jet calibrations will likely use some MC input, admittedly with different sensitivities to hadronic shower models
    - Long term fully MC based?
- Big project
  - Setting up the full simulation, digitization, and reconstruction chain
  - Severe disk space requirements if truth-level detector information is turned on (probably needed)
    - ~2.4 MB/event → 5.7 MB/event, 8% increase in CPU
- Dedicated resources preferred
  - Especially if not part of the standard ATLAS production
  - May not be taken on by other international institutions
    - Maybe MPI Munich (resource limitations?)

Potential Role of SLAC
- Physics analysis
  - Clear potential to provide critical mass as an analysis facility
  - Involvement of West Coast institutions required
    - See Wolfgang's talk: an analysis facility needs a community to fill it with life (providing expertise, attendees, …)
    - Does not seem to be a big problem! Physics interests for initial data are well aligned on the West Coast, e.g., lots of common interest in various Standard Model hadronic final states, minbias/pile-up, b-jets, W/Z production, …
- SLAC Tier2 can be a resource to extract user data
  - Needs some organization to handle requests: just the grid? Analysis groups? Individuals?
  - Extension of the Tier2 to allow some interactive/local batch analysis capabilities?
    - Depends on final university Tier3 funding
  - Hosting computers for West Coast (and other) institutions?
- Meeting and office space
  - Accommodate visitors working on specific aspects of an analysis
  - Provide meeting space for groups working on the same/similar projects
  - Seems to be possible
  - Certainly desirable from my standpoint!
  - Some coordination with LBL?
  - Analysis facility extension to an analysis center?
  - Going to SLAC instead of CERN saves $$$ for Arizona!
    - Reduce the amount of time grad students spend at CERN through better preparation!

Role in Performance Evaluation
- Bigger task
  - Needs critical mass and significant resources
  - Would need dedicated computing resources for a unique contribution to ATLAS
- Critical mass not a problem at all (cf. Ariel's group)
  - SLAC meetings have been a significant source of new ideas in hadronic final-state reconstruction
  - Already two very productive US ATLAS Hadronic Final State Forums (2008 and 2009)
    - ~30 attendees in a very informal environment: low threshold for contributions & discussions
    - Want to continue with 1-2 meetings/year
    - New convenership for the HFSF; PL would like to continue to be involved in the organization
  - Several concepts seriously worked on today were initially discussed at these meetings
    - Latest: data-driven jet calibration, some focus on jet substructure
  - Workshops have a "real work" portion with an educational perspective
    - First access to ATLAS jet simulations for some attendees, thanks to a huge effort by Ariel's group (Bill L., Stephanie M. etc. may remember!)
- Computing resources: a wish-list?
  - Dedicated task- or project-assigned computing resources need an extension of the Tier2 center
    - Don't believe typical Tier3 computing does the job: infrastructure and maintenance levels very similar to a Tier2 may be required
    - Something for Richard to work on!
  - Resource needs not completely out of scope, even for re-reconstruction
    - But also needs organization, local batch management, disk space management, i.e., $$
  - SLAC seems to be able to provide the infrastructure, in principle!
    - How can potential clients help? How much do funding agencies allow us to help?
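The claim that resource needs are "not completely out of scope, even for re-reconstruction" can be checked with simple arithmetic. The Python sketch below is a back-of-envelope estimate, not an ATLAS tool. It takes the per-event figures quoted on the JES resource slides (~1 min CPU and ~1.5 MB per ESD event, at least 2 saved iterations; 2.4 → 5.7 MB per simulated event and +8% CPU with truth information). The sample sizes are assumptions: 300k events per trigger stream stand in for "several 100k", and 1M minimum-bias events is the quoted lower bound. A 1000-core farm with perfect parallelism and decimal units are also assumed. The names `Sample`, `campaign_cost` and `truth_info_overhead` are hypothetical.

```python
"""Back-of-envelope sizing of a JES-systematics re-reconstruction campaign.

Per-event figures are the ones quoted in the talk. The sample sizes and the
core count used in the example are hypothetical placeholders.
"""
from dataclasses import dataclass

CPU_MIN_PER_EVENT = 1.0   # ~1 min CPU per ESD event (quoted)
ESD_MB_PER_EVENT = 1.5    # ~1.5 MB per ESD event (quoted)
ITERATIONS_KEPT = 2       # "need to save at least 2 iterations" (quoted)


@dataclass
class Sample:
    """One final-state pattern (trigger stream) to be re-reconstructed."""
    name: str
    n_events: int


def campaign_cost(samples, cores):
    """Return (CPU hours per pass, wall-clock hours per pass, disk TB kept).

    Assumes perfect parallelism on `cores` job slots (no queue waits, no
    failed jobs) and decimal units (1 TB = 1e6 MB).
    """
    if cores <= 0:
        raise ValueError("cores must be positive")
    n_total = sum(s.n_events for s in samples)
    cpu_hours = n_total * CPU_MIN_PER_EVENT / 60.0
    wall_hours = cpu_hours / cores
    disk_tb = n_total * ESD_MB_PER_EVENT * ITERATIONS_KEPT / 1e6
    return cpu_hours, wall_hours, disk_tb


def truth_info_overhead(n_events, mb_without=2.4, mb_with=5.7, cpu_increase=0.08):
    """Extra disk (TB) and CPU scale factor when truth-level detector info is kept."""
    extra_tb = n_events * (mb_with - mb_without) / 1e6
    return extra_tb, 1.0 + cpu_increase


if __name__ == "__main__":
    # Hypothetical campaign: "several 100k" taken as 300k per trigger stream,
    # minimum bias at its quoted lower bound of 1M events.
    samples = [
        Sample("photon triggers", 300_000),
        Sample("jet triggers", 300_000),
        Sample("MET triggers", 300_000),
        Sample("minimum bias", 1_000_000),
    ]
    cpu, wall, disk = campaign_cost(samples, cores=1000)
    print(f"CPU per pass: {cpu:,.0f} h; wall clock on 1000 cores: {wall:.1f} h")
    print(f"Disk for {ITERATIONS_KEPT} iterations: {disk:.1f} TB")
    extra, cpu_factor = truth_info_overhead(1_000_000)
    print(f"Truth info for 1M simulated events: +{extra:.1f} TB, CPU x{cpu_factor:.2f}")
```

With these placeholder inputs, one pass costs about 31,700 CPU-hours. That is roughly 32 hours of wall-clock time on 1000 cores, close to the 24-48 hour window quoted on the resource slide. Keeping two iterations needs about 5.7 TB, and truth information adds about 3.3 TB per million simulated events. Real throughput would be lower once job failures, queueing and I/O are included.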
Personal opinion: I see a clear potential for a very significant contribution to the timely improvement of hadronic final-state reconstruction in ATLAS, with SLAC hosting the intellectual and resource center for the related activities.

Educational Role
- Education beyond software tutorials (e.g., "ATLAS 101" lectures)
  - I like DESY's approach of "lower-level" schools, e.g., focusing on detector principles and reconstruction approaches, plus selected (early) physics & performance topics
  - Mostly aimed at new graduate students and post-docs
    - Also a good way for senior collaborators to refresh their knowledge
  - Good opportunity to capture knowledge within the collaboration
    - Original designers or other specialists can lecture on detector physics in the context of the actual ATLAS designs
    - Preserve knowledge potentially lost when people leave ATLAS: not everything is written down!
- SLAC could help organize and host such schools
  - Need to involve external experts to help with lectures, e.g., ATLAS calorimetry, some physics topics, …
  - Probably need modest financial support
    - Not clear; I imagine mainly for the lecturers' travel and some local infrastructure
    - Scope of support? Possibly from the US ATLAS project??
- Helps grad students in smaller groups get into ATLAS faster
  - Education beyond the local expertise is useful for full physics analysis
  - Can improve and accelerate understanding of data
    - Knowledge of detector principles is often a weak point in analyses