>> Helen Wang: Good morning everyone. It is... many of you already know him and have collaborated with...

advertisement

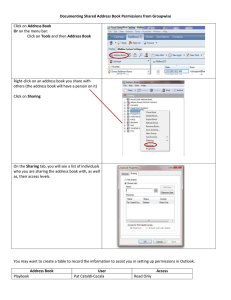

>> Helen Wang: Good morning everyone. It is my great pleasure to introduce Serge Egelman; many of you already know him and have collaborated with him. Serge is a postdoc at Berkeley in David Wagner's group. There is a group of researchers and professors there collaborating with Intel, among others. Today Serge is going to tell us about his recent work with his colleagues on security and privacy permission systems on mobile devices. >> Serge Egelman: Thanks for the introduction, and thanks a lot for having me. Most of the talk today is about permissions. I say mobile devices, but honestly I think most of this is applicable to other types of platforms as well. Most of this was inspired by work that several of my colleagues did on malware on mobile devices. A little over a year ago they analyzed malware found on mobile devices in the wild, and they found that the majority of it is aimed at monetizing information collected off of devices. In terms of turning this into information that is useful for users, they found that a lot of it sends premium SMS messages, whereas most legitimate applications don't use the SMS permission. So if we want to give advice to users, we might say that the SMS capability signals potential malware: if you see apps that request the ability to send SMS, be wary and don't use them. So the question is: given the way most mobile platforms are currently designed, is it really possible for users to follow advice along these lines, looking at potentially harmful permissions and screening apps based on them? To give an example, I am going to show this on Android. We've done a lot of this research on Android, but again, I think a lot of it is applicable to other platforms. Anyway, we go to the Android Market. Say we want to find a clock app; we search for clocks and it brings up a whole bunch of different apps.
We find the one that looks appealing based on the title or the price, and we click it. It brings up a description and lots of other information: the title, the manufacturer, some screenshots. If we like this one, we click download, and it brings us to another screen. This screen gives the user the choice, first and foremost, to accept and download, but below that it lists the permissions the app is requesting. And of course, with limited screen real estate, you can only really show three permissions at a time, so the user has to scroll down, and all the way at the bottom they find that yes, this app wants to send SMS messages. So now they have to go back to the description page, go back again to the search results, and find another app. We click this one; it brings up the description page again. We click download, and now we find that it doesn't have any offending permissions. It took 10 steps just to figure out which apps are requesting the SMS permission. One possible improvement is to redesign the market: if SMS is the permission users care about most, we could add a little icon to annotate the results, and now this goes from 10 steps to one. To give another example of this problem: say users hear that one of their existing apps is sending premium SMS messages, maybe because they have some fraudulent charges on their bill, and they want to look at the apps on their phones. They go to the main screen and click the menu, which brings up all of their apps. They have to scroll down, find the Settings app, and click it, which brings up a whole bunch of different settings panels, so they have to scroll down again, find Applications, click that, and then click Manage Applications. Now it shows a list of applications, so maybe they look at the flashlight one.
They want to look at that one first, so it brings up the settings for the flashlight app, and then they have to scroll down just to see which permissions it's requesting. The problem is that because permissions are granted at install time, the only time the user is made aware that an app can send SMS messages is when they click the install button. When the app actually sends SMS messages, they have no way of knowing, so it takes a 12-step process to figure out, post hoc, which app was sending them. There must be a better way, and we've done some thought experiments on this. One example, just off the top of my head: the first time an app sends an SMS message, you could show a pop-up box that displays where the message is going and what the text is, and if the user doesn't click cancel in time, the message is sent. Or maybe you could just have a little icon appear in the status bar. These are simple methods that would result in more awareness of what the phone is doing and how it's using permissions. The talk today is about this notion of choice architecture and how these design decisions can have profound impacts on security and privacy. We're going to look at the problems with the current approaches, at some improvements we've come up with for how best to use permission mechanisms in different contexts and for different types of permissions, and at what sorts of permissions and risks users actually care about. To give a brief bit of background, the idea of choice architecture was popularized by Thaler and Sunstein in their book Nudge; it's a pop behavioral economics book and a quick, good read. The idea here is all about framing: when people are presented with options, how you frame those options can have profound effects on which options they ultimately choose. It's a form of soft paternalism.
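Both SMS fixes floated in the talk, flagging SMS-requesting apps directly in the search results and showing a cancelable notice the first time an app sends an SMS, can be sketched in a few lines of Python. This is a hypothetical illustration, not how Android works: `App`, `render_results`, `SmsGate`, and the `ask_user` callback are invented for the example, and only the permission strings `android.permission.SEND_SMS` and `android.permission.INTERNET` are real Android identifiers.

```python
from dataclasses import dataclass, field
from typing import Callable, FrozenSet, List, Set, Tuple

# Real Android permission names; every other name here is hypothetical.
SEND_SMS = "android.permission.SEND_SMS"
INTERNET = "android.permission.INTERNET"


@dataclass(frozen=True)
class App:
    title: str
    price: float
    permissions: FrozenSet[str] = field(default_factory=frozenset)


def render_results(results: List[App]) -> List[str]:
    """Search results with an '[SMS]' marker standing in for the proposed icon,
    so SMS-capable apps are visible without opening each install screen."""
    return [f"{app.title} ${app.price:.2f}" + (" [SMS]" if SEND_SMS in app.permissions else "")
            for app in results]


# ask_user(app, destination, text) -> True if the user pressed Cancel before
# the countdown expired. A real phone would show a dialog; here it is a callback.
AskUser = Callable[[str, str, str], bool]


class SmsGate:
    """Cancelable notice the first time each app sends an SMS, plus a log
    that could back a status-bar indicator."""

    def __init__(self, ask_user: AskUser) -> None:
        self.ask_user = ask_user
        self.acknowledged: Set[str] = set()          # apps whose first SMS went through
        self.sent: List[Tuple[str, str, str]] = []   # (app, destination, text)

    def send_sms(self, app: str, destination: str, text: str) -> bool:
        if app not in self.acknowledged:
            if self.ask_user(app, destination, text):  # notice shows destination and text
                return False                           # cancelled: nothing is sent
            self.acknowledged.add(app)                 # not cancelled in time: allowed
        self.sent.append((app, destination, text))
        return True


results = [App("Clock A", 0.99, frozenset({SEND_SMS, INTERNET})),
           App("Clock B", 0.00, frozenset({INTERNET}))]
print(render_results(results))  # ['Clock A $0.99 [SMS]', 'Clock B $0.00']

gate = SmsGate(ask_user=lambda app, dest, text: app == "Clock A")
print(gate.send_sms("Clock A", "12345", "SUBSCRIBE"))  # False: user cancelled
```

One design choice worth noting: an app is only marked as acknowledged once a send goes through, so a cancelled first attempt means the notice appears again on the next attempt rather than silently allowing it.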
To give an example, the popular mobile platforms confront users with varying numbers of permissions. On one end you have Android, which has 165 different permissions. I'll say right now that the number 165 fluctuates throughout the talk, because we've done this work on various versions of Android, and the number of permissions has gone from 120-something to 170 in the most current version; so roughly 165 to 170, depending on which version of Android you are using. Windows Phone is sort of in the middle with 16 permissions, and iOS is on the other end of the spectrum with only two permissions that users are prompted for: location and pop-up dialogs. The problem with these differing numbers is that, on one end of the spectrum, if you prompt users with 165 different permissions, that will quickly result in habituation, and they will start ignoring everything because they see so many requests so frequently. On the other end of the spectrum, if you're not telling users what apps are doing with their data, that will quickly result in outrage once it becomes public that a lot of data is being collected, potentially against their interests. We've actually already seen this: there have been several stories in the news about iPhone apps collecting data that users were completely unaware of, for instance their contact lists; there was the whole Path fiasco with address books being uploaded. There needs to be some amount of transparency, but too much can be counterproductive. >>: [inaudible]. >> Serge Egelman: Yes. >>: So any application can theoretically access your camera without asking the user? >> Serge Egelman: Correct. There is a vetting process.
I'm going to get into this in a minute, but there is a vetting process that apps are supposed to go through, and it's completely opaque, so who knows what Apple does to vet applications. There are terms of service that say what you can and cannot do with data collected from the iPhone. For instance, collecting address book data was completely against the terms of service, and for an application being downloaded by millions of people, that behavior should have been caught in the vetting process. The fact that it wasn't says something about the accuracy of the vetting process. To look at some specific current problems, my colleagues and I did a study this past year on Android permissions; again, I think some of the shortcomings here apply to other platforms. We did this in two parts. First we did an online survey. The main thing was that we wanted to recruit existing Android users and make sure they actually had an Android device, and we did this using AdMob, which is a mobile advertising service. We commissioned a bunch of AdMob ads that showed up on Android devices, and people basically took the survey on their device. We had a little over 300 respondents. First, we showed each respondent three different permissions, randomly drawn from a set of 9 or 10, with multiple-choice questions that showed the actual text of the permission (in this case, granting internet access) and asked what ability the permission grants. The answer here would be loading advertisements and sending your information to the application's server; these other ones aren't really applicable. People who answered "I don't know" we just filtered out. There were five multiple-choice answers, none of which were mutually exclusive. Yes? >>: Can I go back?
So this list doesn't give the best answer if I'm a technical person. >> Serge Egelman: Why not? >>: Because sending information to the application's server is just one perspective. It should be that this app can connect to any server on the internet. >> Serge Egelman: The question, though, is which of these this permission allows. >>: It doesn't give me the best answer I could choose as a technical person. I don't know if that would have impacted people's responses; that is my question. >> Serge Egelman: I'm not sure that was the case. I don't think it impacted any of the responses, because most of the people didn't have very strong technical backgrounds. Anyway, we gave people three of these, randomly drawn from a set of nine, and what we found was that while the percentage correct was significantly higher than the expected value for random guessing among the five responses, it was still very poor: people got 0.6 questions out of three correct on average. Only eight participants out of the 300 were able to correctly define all three permissions. Because comprehension was so poor, we followed this up with a laboratory experiment to get some qualitative data on why it was so low. We recruited 24 participants from Craigslist, again existing Android users, and had them come in with their own Android devices, so we did the experiment on various Android devices. We had them install two different applications: we gave them scenarios in which they were to find applications in the market, and we observed whether they paid any attention to the permissions screens during installation. In the third part of the experiment, we asked them about an application they use frequently on their phone.
We asked them what permissions it uses and had them go into the settings panel, like I showed you, so they could actually see the permissions, and then had them describe those permissions, to see whether they could define them correctly even when the permissions were right in front of them. In the last part, we asked a specific question based on that list of permissions: can this particular app send SMS messages? We found that the majority of them were incorrect. This slide shows what they looked at during the installation processes: only a minority actually looked at the permissions, and only about 40% were even aware that permissions existed. In terms of takeaways, we found that most of the problem comes from the fact that people don't actually look at the permissions, because the screen comes too late in the process. Another explanation is that people were simply habituated: of the people we didn't observe looking at the screen, some said they are aware of permissions but don't look at them because they see them all the time. Many said they actually thought it was a license agreement, so clicking through was just a formality. We have some suggestions on how this could be improved. First, only prompt for the permissions that are really important, that people actually care about, and that carry some likelihood of actual harm. Second, the information needs to come earlier in the process. Right now the permissions appear after the user has already clicked install, and various cognitive biases might prevent the user from going back in the process and deciding to find another application. >>: I think there's something that has been left out of this.
We did studies along some of these lines ourselves, and I think the issue is the way you talk about permissions: as something negative, something to minimize, something to reduce, and so on. What we found was that for many users, not all of them but quite a large number, the way they read the word "permissions" is as "capabilities." More capabilities means better, so the more permissions an app has, the better off you are, and if you can get a free application with every permission under the sun, like 154 in the case of Android, then you've basically won the lottery. >> Serge Egelman: I'm going to get to this in a minute. >>: Negative versus positive framing may color the interpretation of these results quite a bit. >> Serge Egelman: Yes, I agree, and that's the result we found later on. Stewart? >>: You said this comes too late in the process; does that presuppose that what you're trying to do is make an install decision, rather than letting users deny the permission when it's requested? >> Serge Egelman: Well, I am going to contradict what I just said later on. The assumption here is that we want to do this at installation time, and that's the assumption I'm going to contradict later. But if we assume we want to do this at installation time, we shouldn't show it after the user clicks install. There is a choice-supportive bias: the user clicks install, then sees the permissions, and they might agree that these are bad and not feel comfortable granting them, but at the same time they don't want to second-guess their previous choice or decide they made a bad decision, so they go ahead with it. That's one of the problems. If we agree that we are going to do this at installation time, which I'm not agreeing to, we should show it earlier. >>: Question. Looking at the results, it seems like a lot of people just don't know what the permissions are. >> Serge Egelman: Yes.
>>: And I recently observed how my dad uses his Android phone, and it made me wonder whether we should even give users permissions to decide on. So I look at the problem from a different angle, trying to solve as much of it as we can at the app market, because there's got to be a significant fraction of users who have no idea what permissions are. >> Serge Egelman: Yes. This is a larger point that I wasn't planning on getting into in this talk, but what we found in this experiment, and this is in the paper, is that the vast majority of users were making decisions based on app reviews rather than permissions, and the reviews were actually a pretty reasonable proxy for app misbehavior. People tend to trust reviews and felt they were pretty accurate, and in many cases where permissions were overreaching, that tended to be reflected in the reviews. As a more general point, I think the problem of malware on mobile markets is really nonexistent; instead, the problem we are trying to solve is grayware: apps that do offer a positive value proposition to most users but use data in secondary ways that might make some people uncomfortable. The objective then becomes making those uses of data a lot more transparent and giving people choice. Anyway, the second problem was comprehension. Among the small fraction of participants who did pay attention to these screens, and also when we showed participants permissions screens and asked them to define the permissions, we found that almost everyone was unable to define them. When we explicitly asked whether an application they use frequently sends SMS messages, 64% were unable to say correctly whether it did or not. One of the big problems we found was confusing the category with the actual permission.
Here's a screenshot of one version of Android. You can see it has category labels in bigger, bold text right above the actual permission; for instance, "Hardware controls" is the category for recording audio. When we asked people what this meant, they really only read the "Hardware controls" part and thought, well, maybe it has access to the camera, maybe it can record audio, maybe it can vibrate the phone or turn the flashlight on, so these category labels created a lot of ambiguity. The other problem we found was that, given the length of the permission list, understanding the actual scope of any one permission required understanding the full set. For instance, for reading SMS or MMS messages, there are actually two different permissions for reading sent versus received messages, and unless you are aware that both exist, you're not entirely sure what the scope of this permission is. Our suggestion from this was that the descriptions could be improved, possibly by eliminating the categories, and that the list of possible permissions could be narrowed, so that users don't have to understand 160 or 170 different permissions in order to know what capabilities an app is requesting. In this study we looked at install-time warnings, but what about other mechanisms? For instance, on the iPhone, and I think on Windows Phone as well, there are pop-up messages asking whether you want to grant access to your location, which is a different type of mechanism altogether. And of course, mechanisms for granting permissions apply to more than just smartphones. To recap: there are install-time warnings, which have been used extensively in Android because they are adaptable to many different permissions. The permissions are granted a priori, before the user even opens the application, so the advantage is that you can grant future abilities.
You don't really have to do much from a platform developer's standpoint: you just need to read in the manifest and populate these fields in the installer. One of the problems is limited real estate: if you are prompting for all of these at install time, you can really only show three at a time on this particular Android device, and if there are more than three permissions, you are relying on the user to scroll down. Then there are questions of what order the permissions appear in. They are also easy to overlook, as I mentioned before, but the biggest problem is that they lack context: because they are granted a priori, the user doesn't understand in what situations they are actually going to be used. One improvement on this might be runtime warnings. The advantage of runtime warnings is that you can add contextual information: the user clicks a button and then sees the warning, so they know what they were trying to do when it appeared and have more context with which to make a better decision. You could even put some of that contextual information in the warning itself; here's a mockup for pairing a device, which shows the name of the device. It's a lot more dynamic when you do it at runtime than ahead of time with an install-time warning. But runtime warnings have drawbacks as well. Habituation is a big problem: if you are constantly showing warnings for everything, users will eventually just swat them away. Users often see them as a barrier to their primary task, and I probably don't have to go into the problems with UAC here. Another approach, which Apple uses in addition to its two runtime warnings, is to curate the market.
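The contrast between the two mechanisms just described can be sketched as two small functions: one that takes a manifest's permission list and splits it into installer screens of three (the limit on the device mentioned in the talk), and one that builds a runtime prompt carrying context known only at the moment of the request, such as the device being paired. The function names, the permission labels, and the prompt wording are all hypothetical choices for illustration.

```python
from typing import List


def install_screens(manifest_permissions: List[str], per_screen: int = 3) -> List[List[str]]:
    """Split the manifest's permission list into screens of `per_screen` entries,
    mimicking an installer that fits only three permissions before scrolling."""
    return [manifest_permissions[i:i + per_screen]
            for i in range(0, len(manifest_permissions), per_screen)]


def runtime_warning(app: str, action: str, detail: str) -> str:
    """Build a runtime prompt that includes context only available at request time,
    such as the name of the device being paired."""
    return f'"{app}" wants to {action}: {detail}. Allow?'


# Illustrative permission labels, in manifest order.
perms = ["Full internet access", "Read contact data", "Coarse location",
         "Fine location", "Send SMS messages"]
screens = install_screens(perms)
print(len(screens), screens[-1])   # 2 ['Fine location', 'Send SMS messages']
print(runtime_warning("HeadsetApp", "pair with a Bluetooth device", "Living Room Speaker"))
```

In this example the SMS permission lands on the second screen, so a user who never scrolls never sees it; the runtime prompt, by contrast, can name the specific device because it is built when the request actually happens.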
Every app that's uploaded has to go through some sort of vetting process. In principle this should be a great idea, because it hides everything from the user: we are just telling them what's safe and what's not, and the user doesn't have to expend any cognitive effort deciding whether or not to install a program. Of course, it's very resource intensive: if you're hiring a team of people to manually vet apps, it can get quite costly, and it doesn't scale very well. The other problem is that it's completely opaque; who knows what they are actually looking for? In the example I mentioned before, Path was uploading contact lists even though this was expressly against the terms of service. We have no idea what the actual process was, but there was obviously some sort of flaw. There have been several other emerging approaches, which I guess fall into the category of trusted UI. Yes? >>: Apple does [inaudible]; they also curate for [inaudible] harsh language and questionable content, that sort of thing, [inaudible] type of stuff, and presumably that is actually important to some of their users. >> Serge Egelman: Yes, but it's also a huge source of frustration for developers, because their terms of service just say "offensive." Well, what's offensive? It's a completely subjective process. >>: And the developers, I'm sure, must be frustrated. I was looking at the [inaudible] publication on iOS recently, and it has every warning you can imagine, because you can download books with harsh language and all of these other characteristics, so the book reader gets blamed for that. >> Serge Egelman: Yes. >>: It is rather silly. >> Serge Egelman: There have been several papers on trusted UI.
Most recently, there is the work Helen did on access control gadgets at Oakland this year. The idea behind trusted UI is that you integrate the permission-granting mechanism into the user's workflow, using buttons drawn by the OS. The application developer specifies a placeholder and the OS draws the button, so nothing can be drawn on top of it, and it's a trusted path to granting the permission. It's part of the user's workflow, so we are not adding extra steps; it's not an impediment to the primary task, and it can't be spoofed. Well, it can be spoofed, but there is no incentive to spoof it, because spoofing it doesn't grant the permission. Here's a mockup of one for sending SMS messages: the OS draws the destination number on the button, and it's up to the user to click the button, which sends the SMS message. Another advantage of trusted UI is that you can have more complex gadgets, like choosers for selecting subsets of the data. Maybe you have a calendar app that wants to add a bunch of entries to your system calendar; when the prompt appears, the user can go in and do finer-grained editing of what they approve and what they don't. Of course, trusted UI is not a panacea either, because you can't easily grant abilities in the future. It also can't really handle asynchronous requests, things the user didn't initiate. That's a big difficulty, so you can't use trusted UI for everything. I'm sure you could design objects in the OS with callbacks, where specific sensor events trigger the permission, and maybe there's a button the user clicks to grant it, but that sort of defeats the spirit of trusted UI, since it's no longer part of the user's workflow. >>: [inaudible] scheduled? >> Serge Egelman: Yes, that's exactly what I'm talking about. But it's no longer really part of the user's workflow.
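The trusted-UI idea above, where the app only supplies a placeholder, the OS draws the button with the real destination, and the action happens only when that OS-drawn button is clicked, can be modeled roughly as follows. This is a hypothetical sketch, not the access control gadget design from the cited paper: the class and method names are invented, and Python cannot enforce the process isolation a real OS would provide; the model only shows that the grant is tied to a click on the OS-rendered button and is used once.

```python
import secrets
from dataclasses import dataclass
from typing import Dict, List, Tuple


@dataclass(frozen=True)
class SmsPlaceholder:
    """What the app declares: where the button goes and what it would send."""
    app: str
    destination: str
    text: str


class TrustedSmsGadget:
    """Hypothetical OS-side model of a trusted 'send SMS' button.

    The app never renders the label or triggers the send itself; in a real OS,
    a look-alike button drawn by the app would simply never reach on_user_click.
    """

    def __init__(self) -> None:
        self._buttons: Dict[str, SmsPlaceholder] = {}
        self.outbox: List[Tuple[str, str, str]] = []

    def draw_button(self, placeholder: SmsPlaceholder) -> str:
        handle = secrets.token_hex(8)      # opaque id held by the OS input system
        self._buttons[handle] = placeholder
        return handle

    def label(self, handle: str) -> str:
        # Rendered by the OS, so the destination shown is the one that will be used.
        return f"Send SMS to {self._buttons[handle].destination}"

    def on_user_click(self, handle: str) -> bool:
        placeholder = self._buttons.pop(handle, None)
        if placeholder is None:            # unknown or already-used button
            return False
        self.outbox.append((placeholder.app, placeholder.destination, placeholder.text))
        return True


os_ui = TrustedSmsGadget()
button = os_ui.draw_button(SmsPlaceholder("ChatApp", "+15550100", "running late"))
print(os_ui.label(button))          # Send SMS to +15550100
print(os_ui.on_user_click(button))  # True: one click sends one message
print(os_ui.on_user_click(button))  # False: the grant is not reusable
```

The single-use handle mirrors the limitation raised in the talk: each grant is bound to one user action, which is exactly why this mechanism struggles with future or asynchronous requests.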
It's now part of a separate task they are doing to schedule this permission request, and that sort of goes against the spirit. >>: I think it's exactly in the spirit, because the user schedules it and no one else can schedule it. For the things that [inaudible], there are no [inaudible]; it's not the user scheduling it. The scenario where the schedule is not clear to the user, or [inaudible] permanent access, is the scenario where there is no way for the user to [inaudible], at least not in a permanent way. >> Serge Egelman: I don't know; I think there's a much larger discussion there. We will take that offline. So there are several different mechanisms you could use to grant permissions, but I think one of the big shortcomings, and the reason we see problems in a lot of systems, is that everyone uses just one mechanism for every permission. In Android, all of it is done through install-time permissions, and install time certainly has cases where it's effective, but… >>: [inaudible]. >> Serge Egelman: That's weird. The preview for the next slide is really screwed up. So anyway, we did a thought experiment where we looked at all of the permissions available on all of the mobile platforms. We combined them, merging redundancies and getting rid of things that users don't really have any business being confronted with, for instance multicast or turning on debugging; users probably don't care about those sorts of things. We came up with 83 unique permissions. Then, over the course of several weeks, we sat down, went through each of these permissions, and decided what would be the most effective mechanism for granting it and why. To give an example, maybe you have a security app that permanently disables a device if you report it stolen: you go to some website and it fries your device.
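The consolidation step described above, merging permissions from several platforms, collapsing redundant ones, and dropping capabilities users shouldn't be asked about, can be sketched as a mapping to canonical labels followed by a set difference. The mapping below is a tiny hypothetical excerpt: the Android and Windows Phone identifiers are real, but the canonical labels and which entries are included are illustrative choices, and the iOS entry is written directly as its canonical label. It does not reproduce the talk's count of 83, which came from doing this by hand across all platforms.

```python
from typing import Iterable, List

# Hypothetical, heavily abbreviated mapping from platform-specific names to one
# canonical label per capability.
CANONICAL = {
    "android.permission.ACCESS_FINE_LOCATION": "location",
    "android.permission.ACCESS_COARSE_LOCATION": "location",
    "ID_CAP_LOCATION": "location",
    "android.permission.INTERNET": "internet",
    "ID_CAP_NETWORKING": "internet",
    "android.permission.CHANGE_WIFI_MULTICAST_STATE": "multicast",
    "android.permission.SET_DEBUG_APP": "debugging",
}

# Capabilities end users have no real business being asked about.
NOT_USER_FACING = {"multicast", "debugging"}


def unique_user_facing(*platform_lists: Iterable[str]) -> List[str]:
    """Merge permission lists from several platforms, collapsing redundant
    names (unknown names pass through unchanged) and dropping non-user-facing ones."""
    merged = {CANONICAL.get(p, p) for plist in platform_lists for p in plist}
    return sorted(merged - NOT_USER_FACING)


android = ["android.permission.ACCESS_FINE_LOCATION",
           "android.permission.ACCESS_COARSE_LOCATION",
           "android.permission.INTERNET",
           "android.permission.CHANGE_WIFI_MULTICAST_STATE",
           "android.permission.SET_DEBUG_APP"]
windows_phone = ["ID_CAP_LOCATION", "ID_CAP_NETWORKING"]
ios = ["location"]
print(unique_user_facing(android, windows_phone, ios))  # ['internet', 'location']
```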
One of the things we looked at was risk level. This is pretty severe: you wouldn't want a random application breaking your device without your consent. Is this reversible? No; the whole point is that it can't be undone. Is there incentive for widespread abuse? Probably. So the consensus is that, given the available mechanisms, the only real way of doing this is at install time. You're not going to have a trusted UI element on the device to break it in real time, because you don't possess the device, and you're not going to have a runtime warning asking the person who stole your device whether they really want to erase it. Another example, on the other end of the spectrum… Yeah? >>: [inaudible] abuse; why would somebody want to do that? What's the… >>: Blackmail. >> Serge Egelman: Yeah, blackmail. Changing the time is at the other end of the spectrum. Risk level: really just an annoyance. Can you undo it? Yeah, absolutely; you go back and change the time. Is there incentive for widespread abuse? Maybe you can think of some: James Bond foils a nefarious plot where someone updates the time on everyone's cell phones as part of a heist. But realistically, it's unlikely you'll see a lot of malware whose main attack vector is changing the time on people's phones. The consensus here is that this is so minor and revertible that maybe we should just grant it implicitly whenever an app wants to change the time, perhaps with some audit mechanism so users can see which application changed it. From this we came up with the idea of the implicit access permission mechanism. For things that are very minor and either revertible or just an annoyance, we want to minimize habituation, so there's no point asking about them before they are granted. Instead, why not just let them through and make it easy for users to figure out which app is doing it?
In the case of changing the time, maybe we have an audit mechanism that annotates the settings page with which app most recently changed the time, and you could do the same for the wallpaper, showing which app changed it last, so that if an offending app is doing things against their wishes, users can identify the app and remove it. Of course, this isn't to say that these permissions for wallpaper or time shouldn't exist anymore. I can still imagine them being declared in the manifest file so that people can audit what applications are actually doing; it's just that we are not confronting the user with these decisions. From this we came up with a sort of hierarchy of permission-granting mechanisms, from least desirable to most desirable. In the absolute best case, we are not going to confront users at all; we just make it easy to undo the bad things that happen. At the other end of the spectrum, if there is really nothing else we can do, we are forced to use an install-time warning and just hope for the best. >>: UI [inaudible], so in the previous scenario I want to talk about changing the time and such, because the UI [inaudible] disturb the workflow of the user at all, right, [inaudible]. >> Serge Egelman: Say you have a couple of permissions that need to go into effect at once; with trusted UI you have a series of button presses. >>: [inaudible] you just [inaudible] the system UI [inaudible] the application has to be [inaudible] trusted by UI. >> Serge Egelman: Sure, but if you're going to have compound permissions… >>: [inaudible] capability. >> Serge Egelman: No, I understand. But if you want a couple of things to happen all at once, so, you know… >>: [inaudible] what is that called? >>: Composition. >>: Composition. So I think that, but I do think that there is one benefit.
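The implicit-access idea with a settings-page audit trail, where low-risk changes go through without a prompt but the user can see which app made the latest change and undo it, might look like the following minimal sketch. `AuditedSettings` and its methods are hypothetical names; for simplicity it tracks only the most recent change per setting, so `revert` undoes one step.

```python
from typing import Any, Dict, Tuple


class AuditedSettings:
    """Hypothetical sketch of implicit access with an audit trail.

    Low-risk, revertible changes (time, wallpaper) are applied without a prompt,
    but the settings page can say which app made the most recent change, and the
    user can undo it and then remove the offending app.
    """

    def __init__(self, **initial: Any) -> None:
        self.values: Dict[str, Any] = dict(initial)
        self._last: Dict[str, Tuple[str, Any]] = {}   # setting -> (app, previous value)

    def change(self, app: str, setting: str, value: Any) -> None:
        self._last[setting] = (app, self.values.get(setting))
        self.values[setting] = value                    # granted implicitly, no dialog

    def annotation(self, setting: str) -> str:
        """Text the settings page could show next to the setting."""
        if setting not in self._last:
            return f"{setting}: no recorded change"
        return f"{setting}: last changed by {self._last[setting][0]}"

    def revert(self, setting: str) -> str:
        """Restore the value before the most recent change; return the app responsible."""
        app, previous = self._last.pop(setting)
        self.values[setting] = previous
        return app


settings = AuditedSettings(wallpaper="beach.png", clock_offset_min=0)
settings.change("ThemeApp", "wallpaper", "neon.png")
print(settings.annotation("wallpaper"))          # wallpaper: last changed by ThemeApp
print(settings.annotation("clock_offset_min"))   # clock_offset_min: no recorded change
print(settings.revert("wallpaper"), settings.values["wallpaper"])  # ThemeApp beach.png
```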
>>: [inaudible] understands conceptually that we do not, may not need to know that the time [inaudible] needs to change for some things. >>: But the user does not need to do anything. The application just uses the system UI to change the time rather than directly invoking the API. I think there is a benefit to your approach, nevertheless. The benefit is not for the user; the benefit is reduced application development costs. >> Serge Egelman: Sure. I still disagree; in practice, I think there are a lot of cases where trusted UI is just going to annoy the user and disturb the flow. >>: [inaudible] trusted UI is not to… >> Serge Egelman: Okay, some of the things… >>: [inaudible] to be different. The application already needs to ask for the camera. The application already needs to ask… >> Serge Egelman: Sure. Changing the wallpaper: say you have an app that does themes on the phone. It's going to change the wallpaper, the skins… >>: [inaudible] system UI, trusted UI. >> Serge Egelman: Sure, but then it's not… >>: It's exactly like [inaudible] already implementing [inaudible]. >>: This should be taken offline. >> Serge Egelman: Yeah, let's take this offline. So anyway, we came up with this flowchart for how to choose permission mechanisms. Yeah? >>: Unrelated to that discussion on trusted UI, I think there are at least two mechanisms missing here. One is user-initiated configuration of permissions, and another is an admin push of permissions, where you might get an enterprise app from your enterprise administrator, who sets appropriate default permissions… >> Serge Egelman: I think the latter is out of scope, because it doesn't involve the end user. In that case you could sort of lump it in with implicit access, right, because it's stuff that happens without any end-user involvement at all.
We’re going to present this at HotSec in two weeks. We came up with this flowchart for deciding when to use which permission mechanism. To give an example, take granting internet access: can this be undone? Well, not really. You can't get the bits back. Is it severe? If it's abused, is it going to cause real harm, or is it just an annoyance? Well, probably just an annoyance. If you are not roaming internationally and you have an app that is using a lot of data, there is only so much that can happen. So we want implicit access here with an audit mechanism, so that if we do find that we are over our bill one month, we can look and see which application is hogging all of our bandwidth. Say we are roaming, though; this changes the severity. Did the user initiate it? In many cases, no. The request to transmit data isn't really part of the user's flow. Can this be altered? Yes, it could be altered: you could have some sort of firewall that the user configures, but that's unlikely in those cases. Does this need to be granted ahead of time? Not really, so this would be a case for a confirmation dialog, maybe a runtime warning when the application wants to transmit data while the user is roaming internationally. The other example is permanently disabling the device. >>: There's one thing: when you are talking about [inaudible] giving permission, it seems like considering one permission at a time is not the right way to do it, right? Internet access by itself is not that bad, but what else can go bad there? If you have access to the camera and internet access, well, things might go bad, right? >> Serge Egelman: Yeah, this is a larger question. Maybe people bring this up with internet access, but actually, we've been crawling several application markets and looking at what permissions applications are requesting, and 90% of apps request internet access, and from the user’s perspective it's sort of expected that applications are accessing the internet.
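A rough sketch of the flowchart's decision procedure, as we read it from this walkthrough: the question order (can it be undone, is it severe, did the user initiate it, can it be altered, must it be granted ahead of time) follows the talk, but sending both the user-initiated and the alterable branches to trusted UI is our assumption, and `Request`, `Mechanism`, and `choose_mechanism` are hypothetical names rather than part of any platform or of the published flowchart.

```python
from dataclasses import dataclass
from enum import Enum


class Mechanism(Enum):
    # Listed from most desirable (no prompt) to least desirable.
    IMPLICIT_WITH_AUDIT = "grant implicitly, log for later audit"
    TRUSTED_UI = "trusted UI in the user's own flow"
    CONFIRMATION_DIALOG = "runtime confirmation dialog"
    INSTALL_TIME_WARNING = "install-time warning"


@dataclass(frozen=True)
class Request:
    undoable: bool           # Can the effect be reverted afterwards?
    severe: bool             # Worse than an annoyance if abused?
    user_initiated: bool     # Does it follow directly from a user action?
    alterable: bool          # Could the user narrow it, e.g. pick a subset?
    needs_prior_grant: bool  # Must approval exist before the app runs?


def choose_mechanism(r: Request) -> Mechanism:
    """Ask the questions in order; the first one that offers an exit wins."""
    if r.undoable or not r.severe:
        return Mechanism.IMPLICIT_WITH_AUDIT
    if r.user_initiated or r.alterable:  # assumption: both lead to trusted UI
        return Mechanism.TRUSTED_UI
    if not r.needs_prior_grant:
        return Mechanism.CONFIRMATION_DIALOG
    return Mechanism.INSTALL_TIME_WARNING


if __name__ == "__main__":
    examples = {
        "internet access, at home": Request(False, False, False, False, False),
        "internet access, roaming": Request(False, True, False, False, False),
        "permanently disable device": Request(False, True, False, False, True),
    }
    for name, req in examples.items():
        print(f"{name}: {choose_mechanism(req).value}")
```

Run as a script, it maps the talk's three examples (internet access at home, internet access while roaming, permanently disabling the device) to implicit access with audit, a confirmation dialog, and an install-time warning, respectively.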
When we ask users what the risks of using the camera are, it’s sort of implicit that one of the risks is uploading what is taken with the camera. So, permanently disabling the device: you can't undo this. It's very severe. It is not really initiated by the user who is holding the device. Can you do it for a subset of data? Well, not for this purpose, if you want to brick the phone. Does this have to be done ahead of time? Yes. So the only way you're going to be able to do this easily is with an install-time warning. We looked at the 83 permissions that we started with and at how many of them could be lumped into each category, and we found that the majority of them could actually just be granted without approval ahead of time, with some audit mechanisms added. This has the potential to greatly reduce the number of user interactions and therefore prevent habituation. About a quarter of them could be done with trusted UI and, you know… >>: [inaudible] how many of these implicit access ones can also be [inaudible] trusted UI? That would be interesting. >> Serge Egelman: And I agree that a lot of the implicit access could be done with trusted UI. >>: [inaudible] actually can be done with trusted UI without prompting the user, without the user knowing anything. >> Serge Egelman: Yeah, okay. One caveat is that this is just categorizing the set of permissions, so it doesn't say anything about how frequently users are going to be interacting with each of them, and this is actually future work, some joint work with Jian [phonetic] that I'm going to get to in a little bit. We are planning on doing a field study, and as a secondary thing we are piggybacking on it by hooking the API calls, so that we can actually measure how frequently each of these permissions is typically, well, not granted, but used. >>: [inaudible] Android people [inaudible]?
>> Serge Egelman: Yeah, so my co-author on this, Adrienne, is… >>: Adrienne is. >>: I see. Because I mean the Android permission system is still broken. >> Serge Egelman: Yes, and actually it's funny. Google hasn't been very receptive to this. The Mozilla people have actually been listening to a lot of this, more so than the Google people. I don't know. I guess you guys must be familiar with Boot to Gecko. It's [inaudible] creating a whole new mobile platform based on the Gecko browser engine, and so… >>: [inaudible]. >> Serge Egelman: Yeah. Right. >>: [inaudible] compatibility issues. >> Serge Egelman: Yeah. So this comes to the last question, which is which permissions are actually important to users. We've done a series of studies on this. In one, which I presented recently at WEIS, we did a willingness-to-pay study to try to get at which permissions are important. We did this on Mechanical Turk. We showed different screenshots of fictitious apps along with the permissions that those apps requested and their prices. We counterbalanced the different fictitious apps so that we could control for price and permissions requested. We had almost 500 participants, a third of whom were Android users, all US-based. We had four conditions, and we asked people which of these apps they would purchase if they had to purchase one of them. The cheapest, at $0.49, was the app that requested the most permissions: internet access, GPS, and recording audio. We chose these three permissions for a few reasons. First, for the app that we described, internet access would be required, and internet access, as I said, is requested in practice by almost every app anyway. Then we looked at record audio and location.
We looked at location because it's been the subject of most of the mobile privacy research for the past 10 or 15 years, so we figured this would be a good starting point to look at how much people actually care about location relative to other permissions. Then we looked at record audio because we asked about a couple of permissions during the initial lab study that I talked about, and we found that recording audio against a user's wishes was one of the most severe ones people stated. So we decided to contrast that with location. The next cheapest one, at $0.99, requested only internet access and audio. Then at $1.49, location and internet access, and finally, with the fewest permissions, $1.99. So the task looked like this. Again, these are fictitious apps. They look different: the names are different, the manufacturers are different, the descriptions and the screenshots are all different, but we counterbalanced these, so what we really did was control for just the price and the permissions. We found that 25% of our participants stated a willingness to pay more money for fewer permissions. To get to Ben’s point, it's surprising here that people were paying $1.49. We were surprised at the number of people who were in the middle two conditions, and the reason, from the exit survey, was that they thought some of these additional permissions signaled desirable features. If it requested location, that's good; they wanted to pay extra for the location. We found that this effect held for about half of our participants, so the request for location was just as likely to signal desirable features as an undesirable encroachment on personal privacy. >>: Did users actually have to pay somehow? >> Serge Egelman: No. >>: Or just express a willingness? >> Serge Egelman: Yes, it was a stated willingness to pay. Oriana [phonetic]? >>: [inaudible] correlate to [inaudible] test the user [inaudible] because using the user example… >> Serge Egelman: We measured that actually.
After we asked them to pick an app, we asked how interested they were in this app, because we were originally thinking that if there was a correlation between their willingness to pay and desirability, we would toss out the people who were just uninterested, but we found no significant correlation whatsoever, so we ended up using all of our participants. Again, this was stated willingness, so this is probably an upper bound, but it's still interesting that a statistically significant portion of our users were willing to pay three to four times as much to get fewer permissions. >>: Can you give some more details? >> Serge Egelman: Yes. It's the difference between the first condition and the last condition. >>: Internet access, GPS, and what was the third one? >> Serge Egelman: Recording audio. In our exit survey we also asked about several other permissions. We had, I think, about 15 permissions that we asked people about, and we had them rank them in terms of concern. We ordered those on the right. We had also done this in our initial survey on AdMob, but we found some differences between these rankings, and there are several confounding factors here. One is that we used different recruiting mechanisms: this one was AdMob, this one was Mechanical Turk. We did these over different time periods, and we also didn't ask about exactly the same permissions in both cases. One thing that was interesting is that, of the permissions we asked about, location was consistently towards the bottom. Based on the discrepancies and the confounding factors here, we decided we should do another survey looking at concerns over all of the permissions that we could find. We took all of the Android permissions; at that time I guess there were about 170. We used the 16 Windows Phone permissions.
We were going to incorporate iOS as well, but we had already covered that set with these. Then we merged redundancies and got rid of extraneous ones, and we were left with 54 total permissions. We did this as a card-sorting exercise on a whiteboard. To give an example of redundant ones, there are two different permissions for reading received versus sent SMS messages. That doesn't really need to be two permissions. What's the difference between power off and reboot? Not that much. The same goes for force-stopping applications versus killing processes, and so on. So we decided we could merge these into single permissions. Ben? >>: So given all of these, how did they really [inaudible] the permissions in Android? It looks like somebody was just randomly inventing these things and [inaudible]? >> Serge Egelman: I've heard different stories. The story that I've heard most consistently is that it was forced upon the developers by the legal team. >>: [inaudible]. >> Serge Egelman: I don't know about the number of permissions, but in terms of doing it the way that they do, where they show everything ahead of time, it's no wonder many users said this looked like a license agreement. It's because in the initial version of Android, the lawyers decided this is how it was going to be done. I don't know how they came up with 170-something or how they decided what would be a permission and what wouldn't. I can't really answer that. Another example of getting rid of extraneous ones would be enabling multicast: how many users are going to be qualified to answer that question? So we got rid of that. We did the survey, again on Mechanical Turk, with over 3,000 participants. From these 54 different permissions we wrote down possible negative outcomes, and we came up with 99 possible outcomes that corresponded to these permissions.
From these 99, we randomly chose 12 to show each participant, and we had them rate these. We weren't interested in a baseline level of concern; that's sort of impossible to determine without, well, context, and you can't really determine it without these things actually happening to most users. But we wanted to rate these relative to each other so we could figure out which permissions are the most concerning and which are the least concerning. We used a five-point Likert scale, worded as "How would you feel if an app … without asking you first?", and we showed 12 of these concerns and had people rate them. What we found was that the high-concern risks mostly came down to financial loss: sending premium SMS messages, which is how most malware monetizes itself; making expensive calls to international or 1-900 numbers; and destroying the device or destroying data, such as doing a factory reset or deleting contacts. Incidentally, I was just talking to Helen about this: for a lot of these big concerns, like data loss, you could potentially revert to a previous state if we had really good, well-integrated cloud backup and recovery, so in the future you might not need permissions surrounding these if we could make it really easy to get your data back. Yeah? >>: [inaudible] recovery medium in these scenarios are easily done. There may be other damages associated, where your contacts are being sent to other people… >> Serge Egelman: We were surprised to find that in the middle of the list was the privacy stuff, like information disclosure. The reason it was in the middle of the list is that it had very high variance. The 25% of the permissions at the top were universally concerning, so stuff with financial loss and data loss.
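The rating design described here (each participant sees 12 of the 99 outcomes, rated on a five-point Likert scale, with outcomes compared relative to each other) can be sketched as follows. This is an illustrative Python sketch, not the study's analysis code: the function names are hypothetical, we assume 5 means most upset, and we summarize each outcome by its mean rating (broad concern) and its variance (polarization, as with the privacy items in the middle of the list).

```python
import random
import statistics
from collections import defaultdict
from typing import Dict, Iterable, List, Tuple

NUM_OUTCOMES = 99     # negative outcomes derived from the 54 permissions
PER_PARTICIPANT = 12  # outcomes shown to each participant


def assign_outcomes(rng: random.Random) -> List[int]:
    """Draw 12 distinct outcome IDs (0..98) for one participant."""
    return rng.sample(range(NUM_OUTCOMES), PER_PARTICIPANT)


def summarize(responses: Iterable[Tuple[int, int]]) -> Dict[int, Tuple[float, float]]:
    """Map each outcome ID to (mean rating, population variance).

    Ratings are assumed to be 1..5, with 5 meaning most upset.
    A high mean marks a broadly concerning outcome; a high variance
    marks a polarizing one.
    """
    by_outcome: Dict[int, List[int]] = defaultdict(list)
    for outcome, rating in responses:
        if not 1 <= rating <= 5:
            raise ValueError(f"rating {rating} is outside the 1..5 scale")
        by_outcome[outcome].append(rating)
    return {
        outcome: (float(statistics.mean(r)), float(statistics.pvariance(r)))
        for outcome, r in by_outcome.items()
    }
```

Sorting the summary by mean gives the relative ranking; sorting by variance surfaces the polarizing middle band that the talk suggests clustering or personas for.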
At the bottom was the low-concern stuff: changing settings, which can easily be undone, and sending stuff only to other computers, for instance, sending your location just to an application’s servers to provide location-based services, where no human sees it. People really did not care about that at all, or about sending other data like that, such as unique identifiers. As long as no human sees the resulting data, people don't really care. Then there is the middle 50%, and it wasn't that people ranked these in the middle; it was that these were very polarizing and high variance. I think a lot more work needs to be done on maybe clustering these, maybe coming up with personas, you know, developing better algorithms to decide, for the ones in the middle, which users are likely to care about them and which are not. Again, one of the points I made earlier, and this was echoed here, is that people don't really care about location. Well, I misstated that. It's not that they don't care about it; they care about it less than many other things that their devices could potentially be doing. Mobile privacy has been studied for at least 15 years now, but location has been the sole focus of that, and compared to all of these other things that could have profound privacy consequences, people care about location a lot less. We asked about location sharing in four different contexts. Sharing location with members of the public was the most concerning, but it still ranked 52nd out of 99 concerns. Next came sharing with advertisers and with friends, and at the bottom, with app servers: as long as no human sees it, people didn't care at all about location. So this brings me to the last point, which is context and how context impacts a lot of these decisions. Consider two different requests: maybe Twitter wants your phone number, and this is how a request might currently appear, where there is no other information.
It's just saying this application wants this type of data; allow or don't allow. But maybe we're going to add some context. If we say that it's for advertising purposes, obviously this is going to have a huge impact on whether users allow the request or not. This is work I'm currently doing with Jian, where we are looking at the impact on users if we give a rationale for the request. The initial idea was basically to add a UI to TaintDroid, so that if we know the data is going to known advertisers, we can put that in these warnings to make them more contextual. There are a lot of variables. This is work in progress, so I don't really have that much data on this, but some of the things that we are looking at are whether saying that the data goes to a first party versus a third party makes a difference, and whether data that is only viewable on the device versus leaving the device makes a difference. Actually, to the latter question, we can say no; we've already looked at that. We did an initial pilot on Mechanical Turk with 100 users, and we had them view a screenshot of a request, saying, for instance, "You are using Facebook and you see this screen; what would you do and why?" We did this for four different apps. In the control condition we had Facebook requesting the address book with no other information. We then specified that the purpose was application features and that the data will leave the device. Another condition was application features, and the data will not leave the device. Then advertising, where the data will leave the device, and analytics, meaning data analysis, where the data will leave the device. From this preliminary pilot, first, we found that people had no idea what was meant by analytics, so we probably shouldn't specify that, or maybe just group it under advertisers, or under first party versus third party.
There was no distinction between data remaining on the device or going to the cloud: for application features, people didn't care whether the data was going to be processed in the cloud or on the device. But across all of these conditions, despite our specifying a rationale in a word or two, 60% of our participants, regardless of condition, still wanted more detail. So we did a follow-up. We had five different conditions here, similar to before. We had a control where we didn't specify any other information. We had what we called the app functionality condition, which is shown in the picture here, where we specified both the purpose, which was application features and customization, and the sharing policy: data will not be shared with third parties. We had an ad condition where the purpose said advertising. Yeah? >>: Who specifies this purpose? Is it the app [inaudible] that specifies the purpose and the sharing? Because it seems like there's no way to enforce this, right? >> Serge Egelman: There are certainly policy ways of enforcing it. Privacy policies are enforceable. >>: Right, but who would enforce that? Is that the mobile OS? >> Serge Egelman: If people are lying in these, then that's enforceable by the FTC. >>: [inaudible] illegal [inaudible]. >> Serge Egelman: Yes. Policy. >>: Okay. >> Serge Egelman: I mean, this is sort of a thought experiment. Forgetting the limitations of the technology, if we were going to engineer it this way and we found it effective, then the question would be what steps we would need to take to make this possible. So we had the advertising condition, where we said the purpose was advertising and the data would be shared with third parties, and a condition where we got rid of the purpose box altogether and just specified sharing with first parties or third parties.
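One way to keep the purpose and sharing-policy fields standardized, with the platform rather than the developer supplying the wording, is to let apps choose only from platform-defined phrases. The Python sketch below is hypothetical: the enums, the prompt wording beyond the phrases quoted in the talk, and `warning_text` are our own illustration of the study's conditions, not the actual mockups. Omitting both fields reproduces a bare control prompt.

```python
from enum import Enum
from typing import Optional


class Purpose(Enum):
    APP_FEATURES = "application features and customization"
    ADVERTISING = "advertising"


class Sharing(Enum):
    NOT_SHARED = "Data will not be shared with third parties."
    SHARED = "Data will be shared with third parties."


def warning_text(app: str, data: str,
                 purpose: Optional[Purpose] = None,
                 sharing: Optional[Sharing] = None) -> str:
    """Build a prompt only from platform-defined phrases.

    The app picks enum values, never free text, so the wording stays
    the same across apps.
    """
    lines = [f"{app} would like to access your {data}."]
    if purpose is not None:
        lines.append(f"Purpose: {purpose.value}.")
    if sharing is not None:
        lines.append(sharing.value)
    lines.append("[Allow] [Don't allow]")
    return "\n".join(lines)


if __name__ == "__main__":
    print(warning_text("Facebook", "address book"))  # control-style prompt
    print(warning_text("Facebook", "address book",
                       Purpose.APP_FEATURES, Sharing.NOT_SHARED))
```

Because developers cannot pass arbitrary strings, explanations like the "save the puppies" example raised later in the Q&A simply cannot appear in the prompt.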
We also had four within-subject conditions where we just varied the application and the type of data: we showed Facebook requesting the address book, Yelp asking for location, Skype asking for the phone number, and a MyCalendar app wanting access to the calendar. We chose these apps because they were the most popular apps on Google Play that requested these permissions. The preliminary findings are that when no information was given, in the control condition, people assumed that data would not be shared, which was really surprising. There was no statistically significant difference between the control condition and the conditions that said data would not be shared with third parties. That surprised us because we are cynical: I tend to think that when no information is given about the sharing policy, it can be assumed the data is going to everyone. This wasn't the case for most users. We also found that there wasn't really a difference based on whether or not we showed the purpose. It really came down to who the data was being shared with and why. In the exit survey, regardless of the condition they were in, everyone wanted more information about why the data was being shared and with whom. If it wasn't being shared, they cared a lot less. Our next step is a lab study, and this is a new mockup that we've been working on. About half of our participants wanted information about who the data is being shared with, so the idea now is that we may want something expandable: at a high level it gives you a brief synopsis, and users who want more information could click. If the data is shared with other companies, they could click that, and something expands stating the name of the company, its contact information, why that company is requesting the data, and what that company does.
These were all the things that people wanted, so we want to look in the laboratory at whether people would expand these and be better able to answer questions about what's happening with their information. This is an iterative design process, and once we've honed the designs we are planning to do a field study where we instrument people's phones with these warnings and look at how they interact with them and at what point habituation takes effect. At some point people are probably going to swat these away without paying much attention. Another thing we are looking at is scoping: the box for remembering this decision, and under what circumstances people would want to select it. Will they want to revisit the decision if the sharing policy changes, or if the purpose changes? Do they want the decision to hold for this one application, for all types of permissions, or for a specific subset of permissions? These are the questions we're looking at now. >>: In the Yelp case, the location is used mostly for the main purpose, like finding restaurants… >> Serge Egelman: Yes. >>: And those are shared with [inaudible]… >> Serge Egelman: Yes. >>: And then, first, it's kind of hard to automatically [inaudible], and second, it's also hard to figure out which ones [inaudible]. So what is your take on that? >> Serge Egelman: This would need to be put into the warning. "Primary purpose" is probably not the best wording, but, you know, for the purpose it might say both, application-specific features and advertising. That's why we are doing these iterative design experiments: to figure out the best wording to communicate that. >>: [inaudible] to see which component of the code is asking for permission access?
As soon as the location is accessed by a third-party [inaudible] within the code, we know which [inaudible], so that way we can differentiate [inaudible], but there's a question of whether the first-party component is requesting the location and that's also shared with other parties, which then makes these warnings [inaudible]. >>: Why don't you throw some random explanations into the mix, like "to prevent global warming" or "to save puppies"? [laughter]. >> Serge Egelman: Yeah, that's a good idea. We… >>: [inaudible] that they will do whatever to save the puppies. >> Serge Egelman: The issue is… >>: That's a good control condition. >> Serge Egelman: Yes, and there is a famous behavioral economics study on this: if you give any explanation, people generally comply with the request regardless of how ridiculous the explanation is. The point is, going back to a previous question, that the text we put there is standardized. One thing I want to test in the near future is that iOS 6 is planning to have developer-specified text next to the warning explaining why location is being requested, and my hypothesis is that if developers are specifying this and there is no standard text, it's quickly going to lose all meaning for most users. So the platform developer needs to specify the appropriate text that will appear here, and under that constraint, I don't think most platform developers would allow anything like preventing global warming or saving puppies in their set of permissible texts. So the conclusion is that a lot of the current choice architectures for mobile privacy and security are failing users because the requests are going unnoticed, there are way too many of them, and they are difficult to understand. At the same time, users really do express an interest in knowing what apps are doing, and there's a lot we can do to help design platforms that accommodate that. That's it. [applause]. Thanks.
>>: I'm trying to figure out: asking people at the time of install what permissions they are going to run, have you looked at it at all? Do people have any understanding? Of the 10 or 20 apps you've got on this, do you understand how many of these have any of the permissions you are now making such a big deal about? "Oh, I don't want to share permission for ad purposes." Do you have any idea, of the 20 that you've already got, how many… >> Serge Egelman: No. The answer is no. In that first study that I talked about, we had people open an application that they use frequently and claimed to be familiar with, and while they were looking at the permission screen we asked, "Can this send SMS messages?", and 64% couldn't answer that question, even when looking at the list of permissions the app was requesting and drawing on their knowledge of the app from having used it. >>: [inaudible] "I don't want to allow my application for advertising." Well, I've already done it with 20 others. So why is it such a… >> Serge Egelman: Sure. And I think, again, advertising is a little more nuanced, but generally speaking, if a lot of the data usage were made more transparent to users, maybe with more interactive notification icons, so that when location is being requested and the GPS icon appears, the user could click on it if they wanted and see which application is using the GPS and maybe a short explanation of why, I believe that people might actually interact with that and make some effort. Right now the mechanisms just don't exist for the people who are interested in learning more. >>: [inaudible] privacy posture. Like, I actually have no idea what my privacy posture is, so how big a deal is it to have a delta [inaudible]? I go from no idea to no idea, right? >> Serge Egelman: Well, we know that people express concern.
We know that they are currently not being served, and so the goal is to show incrementally that we are getting closer to aligning with their stated preferences. Certainly we know that stated preferences are kind of bogus to begin with, but there is some grain of truth when people express a desire to keep certain data private. >>: So if you look at this list of [inaudible] permissions, which is great, [inaudible] purpose, what is the downside of the story of people who delete their applications? What about the [inaudible] and asking why? >> Serge Egelman: We've actually done that. I don't have the data off the top of my head. Adrienne just ran a survey and I haven't looked at the results yet. We designed a very qualitative survey asking people whether they have uninstalled applications before and why they uninstalled them, but I don't have the data off the top of my head. I can send it to you once I have it. >>: [inaudible]. >> Serge Egelman: I'm not sure. This, so we started… >>: [inaudible] user [inaudible]. >> Serge Egelman: Yeah, yeah, I'm not sure if we've submitted that anywhere yet. I haven't seen the results, so I would hope that she's submitted it. >> Helen Wang: Any last questions? Okay, thank you very much. >> Serge Egelman: Thank you. [applause].