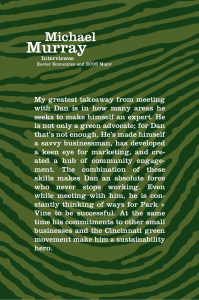

22018 >>: Hello. Hi, everyone. I'm (inaudible) from the research and software engineering group, and it's my pleasure to introduce Dan Marino today. He is no stranger. He spent two internships with us hacking on compilers. And he's graduating from UCLA. He works with Todd Millstein at UCLA, and he did his undergraduate at UC Berkeley and then he worked in the industry for five years before he decided that he was getting paid too much and he went back to graduate school. And so in graduate school, he's been working on different aspects of programming languages. And today he's going to be talking about how to give semantics to concurrent programming languages. Dan. >> Dan Marino: Thanks. And thanks everybody for coming. All right. So parallel programming is hard. And here is just one illustration why that is the case. You take this simple C++ program that has two variables and exactly two threads. Thread T here sets the variable X to be some newly allocated memory. And then sets the init flag to true. And thread U over here tests that init flag and only if it's set to true, then it goes ahead and dereferences X. And so the question we want to ask ourselves from this parallel program is can this crash? And in particular, can we get a null dereference, a segmentation fault here at statement D. And if you don't spend a lot of time thinking about the low level details of concurrent languages or about memory models, then you probably would say this program looks fine. But in fact, it can crash. So if parallel programming is so hard, as a programmer, I might think, you know, forget this concurrency stuff. I'm just going to continue writing my sequential code. I've gotten good at that, so I'll just do that instead. But there's a problem with this. And that, you know, comes back to Moore's Law, right?
So we have this graph showing the transistor count doubling every two years or so, and that's continued all the way up to the present day, but the architectural landscape is changing and these transistors are getting used in a new way. So five years back or more, we started hitting physical constraints that stopped us from being able to use these transistors for making deeper pipelines and increasing clock speeds. And instead, we saw the number of cores on a chip increasing. And so instead of having this sort of familiar model of a CPU talking to some number of levels of cache and then talking to memory, architectures started changing and they started looking more like this where you had many CPUs and maybe many different caches. And eventually they all sort of can talk to the same shared memory here at the top. And so this sort of fundamental change down at the architecture level trickles up to the programmer. And so this programmer who has become accustomed to having his programs run faster, if he just waits a year for faster processors to come out or to be able to add new features without sacrificing performance or to have his algorithms work on bigger problems simply by waiting, that's not going to work anymore. He's not going to get this sort of free lunch that he's gotten from Moore's law in the past, unless he can start thinking about his program in a parallel way and allow it to scale in this way. Okay. So if our programmer's going to be forced to think about -- to code in parallel, let's see how he might reason about this example program we had. Okay. How could these different threads be interleaved? Well, it could be that thread T runs entirely first and then followed by thread U. And in that case, then, we'll set X to this newly allocated memory. We'll set init to true. This will see init equal to true and then dereference X. Everything looks okay because X has already been initialized.
Oh, but we could have the case of this interleaving where actually C executes in between A and B. In that situation, well, when C executes, init is still going to be false, its initial value, and so D won't be executed at all. Again, no program crash. Looks okay. And you can apply the same reasoning if you sort of look at this other interleaving. So okay, that was kind of hard, especially for a four-line program to have to reason about that. But the program looks okay. But I said that this program could crash. So what's the flaw here? And the thing is this program (inaudible) sort of considering all the stuff that's going on underneath the covers across this hardware/software stack. And in fact, when the optimizing compiler and hardware get their hands on this program, they choose to do their optimizations looking only at one thread at a time. And in fact, they might say, hey, statement B doesn't depend on statement A. They access different variables. There's no sort of data dependence or control dependence there. It might be faster to go ahead and reorder those. So if either the compiler or the hardware does that, then we end up with this situation where this interleaving that's suggested here could happen. And indeed, that would cause a null pointer dereference because C would see init equal to true before X had been initialized. So why, I mean, why do we want to do these reorderings anyway? It's because there's, you know, been a lot of research and we've come to rely on our compilers to give us code that performs well by performing optimizations such as common subexpression elimination and register promotion and smart instruction scheduling. And all of these different optimizations have the potential to reorder accesses. Similarly, in the hardware, we have out-of-order execution and things like store buffers to try and hide the latency of cache misses for instance. These same features also will cause memory accesses to be reordered.
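
The interleaving argument above can be checked mechanically. The following is a minimal sketch, not code from the talk: it models the four statements A to D as single letters, enumerates every interleaving that preserves each thread's own order, and collects the schedules in which D dereferences X before A has run. The names `State`, `step`, `explore`, and `crashing_schedules` are hypothetical, and D is modeled as doing nothing when C saw init equal to false.

```cpp
#include <cassert>
#include <cstddef>
#include <string>
#include <vector>

// Model of the four-statement example (labels follow the talk):
//   thread T:  A: X = new ...;   B: init = true;
//   thread U:  C: if (init)      D:   use *X;
struct State {
    bool x_set = false;     // has A run?
    bool init = false;      // has B run?
    bool saw_init = false;  // did C observe init == true?
    bool crashed = false;   // did D dereference X before A ran?
};

// Apply one statement to the modeled program state.
void step(char op, State& s) {
    switch (op) {
        case 'A': s.x_set = true; break;
        case 'B': s.init = true; break;
        case 'C': s.saw_init = s.init; break;
        case 'D': if (s.saw_init && !s.x_set) s.crashed = true; break;
        default: assert(false && "unknown statement label");
    }
}

// Enumerate every interleaving of t and u that keeps each thread's own
// order, recording the schedules that end in a crash.
void explore(const std::string& t, const std::string& u,
             std::size_t i, std::size_t j, State s, std::string trace,
             std::vector<std::string>& crashes) {
    if (i == t.size() && j == u.size()) {
        if (s.crashed) crashes.push_back(trace);
        return;
    }
    if (i < t.size()) {
        State next = s;
        step(t[i], next);
        explore(t, u, i + 1, j, next, trace + t[i], crashes);
    }
    if (j < u.size()) {
        State next = s;
        step(u[j], next);
        explore(t, u, i, j + 1, next, trace + u[j], crashes);
    }
}

// t_order is "AB" for the program as written, "BA" after the reordering.
std::vector<std::string> crashing_schedules(const std::string& t_order) {
    std::vector<std::string> crashes;
    explore(t_order, "CD", 0, 0, State{}, "", crashes);
    return crashes;
}
```

Calling `crashing_schedules("AB")` explores the six interleavings of the program as written and finds no crash, which matches the programmer's reasoning. Calling `crashing_schedules("BA")` models thread T after the compiler or hardware has swapped A and B, and it reports the schedule B, C, D, A as the one that dereferences X too early.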
And so what that means is that when we were in the sequential world, the programming language had the same strong sequential semantics that you expected, but now that we're coding in this parallel world, we still want these performance optimizations that we've come to rely on, but then we have to have these weak semantics at the programming language level where we have to reason about our memory accesses potentially being reordered. And so the result of this current approach of allowing all of these optimizations and then putting up with weak semantics is that we have nasty bugs. We all know that concurrency bugs are difficult to track down because they may only happen intermittently on certain code paths and certain interleavings and they can have serious consequences. I just have a couple of examples up here of situations where concurrency bugs were expensive or very expensive and even caused death in the case of a radiation therapy machine. And so as we see an increasing number of parallel programs because our programmers are being forced to code in this parallel style, and we're seeing increasing core counts, we can only extrapolate this and think we're going to see even more of these nasty bugs as we move forward. And I think that we can definitely do better here. And I think we can do better by taking kind of a holistic approach and we can allow programmers to develop these fast and safe concurrent programs by exploiting cooperation among these different layers of the hardware and software stack. So I'm going to talk about two main projects today. Going to spend most of my time talking about DRFx which is -- it is -- it gives straightforward programming language level semantics to a concurrent programming language while still allowing efficient implementation by taking advantage of cooperation across this stack. And I'm also going to talk -- that's what I'm going to spend most of my time talking about.
I'll also later talk a little bit about LiteRace which was a tool I built for -- that uses sampling in order to have a high-performance data race detector. And a data race is a common kind of concurrency bug. Okay. So first I'm going to talk about DRFx and the first thing we're going to do is define what a memory model is. So one way to define a memory model is it defines the order in which memory operations can execute or the order in which they can become visible to other threads in the system. And so as you can see from this definition, a memory model is actually essential in order to describe the behavior of a concurrent program. Because if you don't know what memory writes are visible to what reads in different threads, then you can't say how your program is going to behave. So one memory model is sequential consistency. It was introduced by Leslie Lamport back in 1979. And the idea is that memory operations appear to occur in some global order that is consistent with the program order and they appear that way to all threads in the system. So it's basically interleaving semantics. And this is in fact what our programmer who mistakenly thought that program couldn't crash earlier, he was using this sort of SC memory model. And so that's basically thought of as the most intuitive memory model that we can provide but it disallows a lot of these optimizations that I mentioned earlier. So the current state of the art for programming language memory models are these data race free models or DRF0 models. And they give this intuitive sequentially consistent behavior that we want for a certain class of programs. Data race free programs. But for other programs it provides much weaker semantics. So the idea with DRF0 is that programmers are encouraged to use and in fact often do use sort of high-level synchronization constructs in order to communicate between threads like locks and signal and wait.
And so the compiler agrees not to sort of do anything crazy with its optimizations with respect to these operations. And the compiler also can communicate that down to the hardware using fences and translates these into atomic operations and the hardware is restricted from reordering around those operations. And a program is considered data race free if the programmer has included enough of these synchronization operations so that no two conflicting accesses, that is accesses to the same memory location where at least one is a write, so that no two conflicting accesses to shared memory can actually occur concurrently. So if you have enough synchronization, if you have a well synchronized program, then your program is data race free, and we get this SC behavior that we want under these DRF models. But we get weak or no semantics for racy programs, so the Java memory model is an example of one of these DRF0 models. And it provides a much more complicated semantics for your language or for your program if it contains data races. C++, the new C++0x memory model is kind of an extreme version of a data race free model where they give absolutely no semantics to a program that contains data races. So just going back to our example program here, you actually can make this a data race free program. For instance, in the new C++0x, you can do that by specifying that this init flag is atomic, with the atomic keyword there. And that indicates it's being used for synchronization. And then the compiler and the hardware are prevented from performing these problematic reorderings that we saw earlier. Okay. So what's the problem with the DRF0 memory model? Well, basically it's very difficult to determine whether or not a particular program has a data race.
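
The data-race-free version of the example, with the init flag marked atomic, can be sketched as follows. This is an illustrative reconstruction rather than the slide's code: in the standard as finally published (C++11) the flag is spelled `std::atomic<bool>`, and the function name `run_once`, the value 42, and the -1 sentinel are assumptions made for the sketch.

```cpp
#include <atomic>
#include <thread>

// Returns the value thread U read through x, or -1 if U saw init == false.
int run_once() {
    int* x = nullptr;               // plain (non-atomic) shared pointer
    std::atomic<bool> init{false};  // flag used for synchronization
    int observed = -1;

    std::thread t([&] {
        x = new int(42);            // A: initialize x
        init.store(true);           // B: sequentially consistent store (the default)
    });
    std::thread u([&] {
        if (init.load()) {          // C: sequentially consistent load (the default)
            observed = *x;          // D: reached only after A is visible
        }
    });

    t.join();
    u.join();
    delete x;                       // x was set by T; join() makes that visible here
    return observed;
}
```

Because the store and load on `init` are atomic, the compiler and hardware may not move A after B, so whenever thread U observes `init == true` it also observes the initialized `x`. A call to `run_once()` therefore returns either 42 or -1, depending on scheduling, and never dereferences a null pointer.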
There are for instance type systems for race freedom, but they're limited in their applicability because they generally apply to lock-based code, limited styles of synchronization or if they're -- if they support more, (inaudible) analyses tend to be conservative or if they support different -- any variants of synchronization, then they don't scale well to large programs. So that's in the static world. In the dynamic world, it's not enough because with testing, you're only testing certain paths of your program. You don't get full coverage. And so you may miss some interleavings, you may miss some paths, and so you won't find all the data races in your program. Furthermore, dynamic analyses tend to be very expensive for detecting races, slowing down your program by 8x or much more than that in some cases. And it's easy to introduce a data race. You can see that this is actually true by just -- I made a quick search of some Bugzilla databases and found bug reports that were unresolved and had "race condition" in their description. And here from Mozilla, we have 122 of them. In MySQL, we have 12. So these are real bugs that happen all the time. And so we end up with this really -- for these programs, we end up with this really weak semantics or no semantics at all for our programs. So sort of a summary of this is that if we have weak or no semantics for racy programs, and it's easy to unintentionally introduce data races, well, that's problematic for debuggability because our programmers need to assume that they're seeing non-SC behavior for all their programs because they're not sure whether or not there's a race in there. It's problematic for safety. [Indiscernible] Boehm and coauthors showed how reasonable compiler optimizations that are sequentially valid, when combined with a program that has a data race, can actually cause your code to take a wild branch and jump to start executing arbitrary code.
And that's exactly -- you know, that's one of the reasons why in the C++0x memory model they give no semantics to a racy program because this kind of thing might happen. Now, in Java, they didn't have the luxury of saying we're not going to give any semantics because they have these safety guarantees they need to maintain. So they have this, you know, rather complex description of what program behaviors are allowed in the presence of a data race, but it turns out that it's so complicated that it's really very difficult to tell if a particular compiler optimization is even admitted by the model. And in fact Ševčík and Aspinall showed that certain compiler optimizations that were explicitly intended to be allowed by the model in fact were not. And so it's difficult to reason with these kinds of memory models. So our solution to this was the DRFx memory model. And in this memory model, we take the approach that a data race is a programming error. And as such, we should feel free to terminate a program at runtime if a data race is detected. Okay? And by allowing ourselves this freedom and as I'll show you by using cooperation between the compiler and the hardware, we get another model that has good debuggability. We're going to give sequential consistency for all executions. It's a safe programming model because we're going to halt a program before any non-SC behavior is exhibited. And it's easy to determine what compiler optimizations are allowed. Basically all sequentially valid optimizations are going to be allowed with a few restrictions that I'll describe later. And so with this hardware compiler codesign and the ability to throw an exception, we end up with strong end-to-end guarantees from the source language down to the actual executions of compiled code with a nine percent slowdown on average. So let's take a look at what the memory model guarantees. Okay?
The first part of the guarantee is if the programmer gives you a data race-free source program, then all executions of that program will be sequentially consistent. However, if there's a race in the program, the program may be terminated with a memory model exception. So with the picture the way it looks so far, it seems like we need some precise runtime data race detection. But that's slow if you try and do that in software and hardware solutions are very complex. But we actually have an escape hatch here. If your program has data races, we don't have to raise an exception. We can go ahead and allow the program to continue executing as long as we can guarantee that the behavior we're seeing is sequentially consistent. So we take advantage of that in order to simplify the runtime detection process. >>: [Indiscernible] may be raising? An exception may be raised [indiscernible]? >> Dan Marino: May be raised. >>: Okay. >> Dan Marino: Yeah. We have two options. If the original program has a data race and we're running it, if that data race is going to result in SC behavior, then we -- or non-SC behavior, then we absolutely must throw a memory model exception. If that data race is in -- somehow is exhibited but doesn't cause non-SC behavior, if we can somehow guarantee that we're still going to get sequential consistency, then we don't need to raise it. So let's take a look at how we do this. Here's our example program from earlier without that [indiscernible]. So this is a racy program, we've got a race on both X and on the init variable. And here's that problematic interleaving. And this would have been done by the compiler. This reordering of these accesses. So our insight was that the compiler could actually communicate to the hardware regions where it performed optimizations. Regions in which it might have reordered accesses in order to optimize. It can do that using some new kind of instruction called a region fence.
It tells the hardware where these code regions are. Now, the hardware's responsibility then is just to detect conflicts, that is, two accesses to the same memory location where at least one is a write, between regions that execute concurrently. So in this problematic interleaving, these regions definitely execute concurrently and so the hardware needs to raise a memory model exception. If, on the other hand, the execution looks like this, well, then, you know, we have this reordering that occurred, but it's not actually causing any problems because you know, it completes entirely before this other region with which it races starts executing. And so we don't -- we still have a data race that's exhibited in this program. There's no happens-before relation between these regions. There's no synchronization operation forcing them to execute in one order or the other. But nonetheless, we can guarantee SC behavior and so we don't need to raise any exception. And so this is the simplification. We don't have to do full race detection. We only need to do data race detection on concurrently executing regions that were communicated by the compiler to the hardware. So we rely on this cooperation and so there's a set of requirements that the compiler and the hardware need to obey in order to get the strong guarantees that we're looking for. So for its part, the compiler partitions each thread into regions by using the region fence instructions and it puts synchronization operations in their own region. And so this stops -- in a well-synchronized program, this sort of stops any regions that have conflicting accesses from executing concurrently and so we won't raise any exceptions at runtime. It also agrees to optimize only within a region. So it's limited in its scope by these regions that it puts in.
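
The conflict check described above can be modeled in software. The sketch below is only an illustration of the rule "raise an exception when two regions from different threads overlap in time and contain conflicting accesses"; it does not represent the simulated hardware mechanism. The `Region` record, its logical timestamps, and the function names are all hypothetical.

```cpp
#include <algorithm>
#include <set>

// A code region delimited by region fences, as observed at run time.
// start/end are hypothetical logical timestamps for the half-open
// interval [start, end) during which the region executed.
struct Region {
    int thread;
    long start;
    long end;
    std::set<int> reads;   // addresses read inside the region
    std::set<int> writes;  // addresses written inside the region
};

// Two regions execute concurrently if their time intervals overlap.
bool overlaps_in_time(const Region& a, const Region& b) {
    return a.start < b.end && b.start < a.end;
}

bool intersects(const std::set<int>& a, const std::set<int>& b) {
    return std::any_of(a.begin(), a.end(),
                       [&](int addr) { return b.count(addr) > 0; });
}

// Conflict: same address touched by both regions, at least one a write.
bool conflicting_accesses(const Region& a, const Region& b) {
    return intersects(a.writes, b.writes) ||
           intersects(a.writes, b.reads) ||
           intersects(a.reads, b.writes);
}

// A memory model exception is needed only when conflicting regions from
// different threads actually ran concurrently.
bool must_raise_exception(const Region& a, const Region& b) {
    return a.thread != b.thread &&
           overlaps_in_time(a, b) &&
           conflicting_accesses(a, b);
}
```

With address 0 standing for X and address 1 for init, a writer region `{0, 0, 10, {}, {0, 1}}` and a reader region `{1, 5, 15, {0, 1}, {}}` overlap and conflict, so an exception is required. If the reader instead runs over `[10, 20)`, the same data race exists but the regions are serialized, so the execution is still sequentially consistent and no exception is needed.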
But the nice thing is that it can perform all of the sequentially valid optimizations that it wants to within that region, except that it can't introduce speculative reads or writes because that could cause the hardware to raise an exception in an otherwise race-free program. For its part, the hardware needs to treat these fences as memory barriers, essentially not optimizing across fences. But otherwise it can do all the reorderings it usually does. And it has to perform this conflict detection. So those -- that set of requirements, we've formally specified them and used them to establish DRFx guarantees. >>: [Indiscernible]. >> Dan Marino: So the region fence -- so the requirements are that synchronization -- synchronization operations definitely have to be in their own region, right? So in that sense, they are related to synchronization. But they don't -- but region fences can be added in places other than right where synchronization operations are. And I'm going to show you later why you might want to do that. Okay? Why it would be useful to actually put in one of these fences even when there is no synchronization. >>: And so [indiscernible] still be races [indiscernible]? >> Dan Marino: Yes. The region fences don't do any additional synchronization. All they -- yeah. They're just limiting where that synchronization is. >>: [Indiscernible]. >> Dan Marino: It is a limitation, but it also stops the hardware from moving memory accesses across it. >>: I see. So you said the compiler [indiscernible]? >> Dan Marino: Yes. That's correct. And the hardware needs to -- okay. So whatever region fences the compiler comes up with, the hardware also needs to -- will detect conflicting access -- first of all, that's why everything is in a region. Right? There's nothing that's not in a region. So everything is in a region. And the hardware will always detect conflicts between concurrently running regions.
And so if [indiscernible] performs that reordering within there, it would still notice that it conflicted with another region that was executing concurrently. >>: So the hardware [indiscernible] region of its own. >> Dan Marino: It won't insert the region, because the compiler has already inserted regions. So its response -- and so the compiler is inserting regions. Every line of code that will be in the program is in a region. And as long as -- the basic idea is that as long as there are no conflicts between the concurrently executing regions, then you actually have a region serializable execution. And it doesn't matter whether or not it was the compiler that reordered those things. The only thing is that the hardware is only allowed to do those reorderings within the regions that the compiler inserted. >>: So if [indiscernible]. >> Dan Marino: That's correct. Okay. So yeah, we formalize these requirements for the compiler and the hardware. And then we use those to establish that indeed, these -- if the compiler and the hardware meet these requirements, then we get these end-to-end guarantees that we want to give the programmer. And that is that a data race-free source level program implies that there will be no memory model exception raised at runtime for any execution of that program; and furthermore, if an execution goes through without raising a memory model exception, then that execution is sequentially consistent with respect to the source program. Okay? So I claimed that this restricted form of race detection was efficient and simple. And it is. And in fact, there is an analogy here with hardware transactional memory. In hardware transactional memory, we have these transactions that are running concurrently and you have to detect conflicts between them and then roll back and reexecute a transaction if conflicts are detected.
And we actually do something very similar here, but it's actually simpler because some of the difficulty in implementing hardware transactional memory is doing checkpointing and having to roll back transactions. Here we don't have any rollbacks. We actually are able to terminate the program because we can guarantee there's a data race. Also, another source of difficulty in implementing transactional memory is because the programmer's controlling the boundaries of transactions, they can be arbitrarily large, but the hardware only has bounded resources to work with so there has to be some sort of fall-back mechanism. Here we actually -- our compiler's inserting the region fences and so we can actually bound the size of these regions. And that's exactly what we do. And this is why you might want to put in region fences where, you know, there aren't synchronization operations. And so this -- by bounding the number of memory locations that are accessed in any particular region, we ease the job of the hardware, and the disadvantage to this is that we are still -- we're limiting the compiler to optimize only within regions. We're sort of further limiting its scope by bounding these regions. What we would like is if the hardware wasn't necessarily required to limit the scope of its optimizations, so we actually distinguish these bounding region fences from the region fences we insert for synchronization so that the hardware can actually coalesce these regions at runtime if the conservative compiler analysis -- if it has resources available either because it has a lot of resources dedicated to conflict detection or because the compiler was conservative in its bounding analysis. >>: [Indiscernible]. >> Dan Marino: Because the compiler has to agree not to reorder accesses across the regions. Otherwise we can't get the end-to-end guarantee. We can get the sequential consistency from a compiled program but not from the original source [indiscernible]? >>: Okay. [Indiscernible].
>> Dan Marino: So this is the case for our implementation. That brings me to the next slide. So we took LLVM and we do this naïve bounding analysis. And for this analysis, we did put a region fence at every loop back edge. So essentially, you have a single iteration of a loop. Now, this is not -- this is naïve. And you could do something else. You could unroll the loop and have several iterations be in a region and then, you know, loop that way. So we just haven't implemented these more advanced features to make the analysis -- well, not even the analysis but just make the insertion of region fences better. And, yeah. And so -- >>: So those fences that the compiler [indiscernible] you just mentioned really are [indiscernible] in the sense that the compiler's actually telling the hardware you may put [indiscernible] because I didn't reorder across. >> Dan Marino: Yes. >>: Doesn't mean the [indiscernible] has to put it. >> Dan Marino: That's exactly right. So the hardware is still able to do reordering as long as it has resources available to do this conflict detection over the larger region. Yeah. So that's -- I mean, that maybe will explain why we don't see such a huge performance loss when we are doing this sort of naïve thing of putting a fence at every back [indiscernible] because the hardware is still able to do all the reordering it wants across those loop iterations as long as it has the resources. Okay. So that's what we did for the compiler based on LLVM. For the hardware, we simulated this in FeS2 and we used a lazy conflict detection and did this region coalescing that we're talking about when possible. And we measured the performance on these parallel benchmarks, PARSEC and SPLASH-2. And PARSEC in particular is supposed to capture, you know, the types of programs we'll be running on multicore machines, the emerging workloads for those kinds of machines. So these are the results we saw. On average, a nine percent slowdown.
The blue portion of this bar roughly corresponds to the overhead that was caused by lost optimization opportunities in the compiler. So because the compiler is being restricted to these regions, it's not able to do all of the optimization it normally would and so that's what the blue portion of the bar represents. The red portion is from the lost hardware optimization and the additional cost that the hardware has to incur from doing this hardware conflict detection, this sort of race detection. So if we were to improve the way in which we were inserting these bounding fences, I think that there's a lot of hope that we can get this blue section of the bar to shrink further than it is now and actually bring these overheads down. >>: [Indiscernible] you don't think it's the case that the [indiscernible]? >> Dan Marino: If there was a data race in that loop, then you might be more likely to raise a memory model exception at runtime because you're doing conflict detection over larger regions. And you may have broken it by doing some of these optimizations, but we're going to catch it. >>: [Indiscernible] these programs may have such bugs. >> Dan Marino: They may have such bugs. I think we did encounter a race in one or two of these benchmarks that seemed to be an intentional race that was in there, but for the most part, these are race-free programs, and so these -- you know, there just really is lost optimization potential. >>: [Indiscernible]. >> Dan Marino: If you [indiscernible]. Yeah. We haven't done that yet. Okay. So for a summary of this DRFx stuff, this idea of having the compiler communicate to the hardware regions where it might have performed optimizations and then doing a lightweight form of data race detection, along with this freedom to raise an exception and terminate a program on a racy execution gives us a memory model that's easy to understand both for programmers and compiler writers.
The programmers get understandable SC behavior for all programs, unless of course they're terminated, in which case they know they definitely have a data race in their program. And the compiler is allowed to perform all its favorite sequentially valid optimizations, with a few restrictions, without tricky reasoning like you have to do in the Java memory model. And it's efficient, you know, because it admits all these optimizations and because the detection that we have to do in hardware is actually fairly simple. And so we saw this -- we only saw a nine percent slowdown on average. So -- which I think is a reasonable price to pay for an understandable memory model. >>: [Indiscernible]. >> Dan Marino: Yes, you do. And I think that that still would be a problem that you would want to address. I mean, I think it's still worth looking for data races even when you have a memory model like this. Perhaps even more so because you don't want to have these kind of [indiscernible]s in the field. I mean, there are compromises that you could think of. You could use DRFx for development and then say if you think that it's better that your program limp along in the field, you know, not do this conflict detection. I don't know that I'd recommend that because that would just make that more difficult to debug. But you know, that is -- you know, that's an interesting point in this. But I don't know that it's any worse than saying that your program will branch to arbitrary code and start executing it, either. So . . . All right. So if there are no other questions on the DRFx portion so far? Yes? >>: [Indiscernible] synchronization option [indiscernible] do you include in that [indiscernible] synchronization [indiscernible]? >> Dan Marino: Well, we would include all of your low-level sort [indiscernible] interlocked operations. Those would need to be on their own in addition to library calls to something like lock and unlock and things like this. >>: [Indiscernible] if I have data race, right?
Because these things [indiscernible]. >> Dan Marino: So I think that we can do -- so there's two different approaches you can take here. You can say that the libraries are not subject to DRFx and sort of isolate them and say that you just put them in their own region. You could also choose to actually only put lock and unlock -- sorry -- only consider the lowest level machine instructions as being -- as being synchronization operations and then insist that those lock and unlock actually be coded in a way that they would have no, you know, benign races or races that rely on people, you know, considering the underlying machine's memory model to get correct, that you actually had to have -- use atomic operations. In our tests, these threads -- these programs were using pthreads and we did not recompile the pthreads library, and we put those calls in regions. Okay. And so I'm going to move on to talk about LiteRace which uses a sampling approach in order to make data race detection lightweight. So we don't have DRFx hardware. DRFx requires hardware support, so what can we do in the meanwhile? Well, one thing we can do is we can try and find data races using software tools. And you know, we want to find data races for multiple reasons. First of all because of exposure to these weak memory model effects that we were talking about all through DRFx, these reorderings and unintuitive behaviors. But even if you had sequentially consistent semantics, data races still can indicate bugs because oftentimes they indicate some violation of a high-level consistency property. For instance, if you don't have mutex locks inserted to protect the state of an object so that it stays consistent. So you still want to find data races even if you have SC, and especially when you don't have SC. And as I mentioned earlier, static techniques are rarely used.
You know, it's an active area of research and there's good work in type systems for avoiding races in lock-based code, but for code that doesn't use locks, you're sort of left out in the cold. And so that's where dynamic race detection has some advantages. It's able to easily support any kind of synchronization down to low-level compare-and-swaps. And when they report a race, dynamic race detectors are capable of doing that with no false positives. On the downside, dynamic race detection has low coverage because, you know, it can only run on the test cases that you give it, and only on certain interleavings. And so you can miss bugs. And in addition, there's also very high overhead for doing dynamic race detection. Precise detectors, you know, the fastest ones slow down an application by 8x, and some of them out there are 50x or 100x. And so as a result, developers and testers are unlikely to use these tools. And so with LiteRace, the goal was to sort of keep these advantages that dynamic detection has and mitigate some of these disadvantages. The idea is that if we can make it lightweight enough, then we can increase the coverage by running it all the time, maybe even during beta deployment. And we also want to maintain this feature of data race detection that we're able to support all the different synchronization primitives. It's also very important that we don't give false positives because the last thing we want to do is send developers on a wild goose chase looking for a race that doesn't exist. That's not the way to get people to use your tool. And we found that this sampling approach was actually effective here. We were able to run it on x86 binaries.
We ran it on Apache and Firefox, on the Concurrency Runtime (ConcRT) for .NET, and on the Dryad distributed application framework, and ended up finding 70 percent of the races that were exhibited in a particular execution with only a two percent sampling rate, and that translated into a 28 percent slowdown versus a seven and a half x slowdown for the fastest full data race detector we could build. So here's what that architecture looked like. We take an x86 binary and we instrument it to log events of interest, which in this case are memory accesses and synchronization operations. Then we generate at runtime a log of these events, which we then analyze postmortem, after the execution, using happens-before race detection to report races. The idea of using sampling then, of course, is to reduce the number of events that we have to analyze, and that should speed up this part of the code and also the detection process. So sampling has been shown to be effective in other contexts. So in profile-guided optimization, for instance, this has been very effective, and in ASPLOS '04, the SWAT tool was introduced, which found memory leak bugs using sampling very effectively. But it's not clear that you can apply this technique easily in the domain of race detection. And the problem is that most accesses don't participate in a race, and furthermore, by definition, we need to capture two accesses, the two racing accesses, in order to do this. And at the same time, we want to avoid these false positives. And I'll explain why we have to be careful with that in a moment. So let's take a look at what happens-before race detection looks like. So here's a graph of two threads, and program order creates happens-before edges within a thread. And then synchronization operations induce happens-before edges across threads.
And here we have a properly synchronized program that uses locks to make sure that you're not writing X at the same time that you're reading X. And we know that there's no race here because we can actually draw a path between these two accesses in our happens-before graph. If we had an unprotected access outside, then we have a data race because we can't draw a path between them. So we can see why dynamic happens-before race detection is very, very expensive, and that's because we have to do some extra work at every synchronization operation and every memory access. So we want to use sampling to get rid of some of these. And you notice that if we miss one of these memory accesses, well, we might miss this data race here, for instance, if we didn't log this read of X. But we're already missing bugs. We're in a dynamic analysis. We're only testing some paths. So if this doesn't happen too often, we'll be okay with that. However, if we were to not log one of these lock operations, for instance, well, that can actually affect the happens-before graph. And so we can end up reporting a false race between these things that are actually properly synchronized. So the bottom line is we use sampling for the memory accesses but we do not sample synchronization operations. We log every synchronization operation that happens. So how are we going to do sampling? We want to find both of these accesses and have a low sampling rate. So the first thing that I tried was, well, why don't I see how random works. It actually wasn't nearly as bad as I expected it to be. With a ten percent random sample rate, we detected around 25 percent of the races that were exhibited during an execution. And if you bump that up to 25 percent, we found 45 percent of the races. But I should point out that, you know, if you're logging a quarter of the memory accesses in your program, you're going to slow down your program a whole lot.
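The asymmetry described above, where dropping a memory access can only hide a race but dropping a synchronization operation can invent one, can be illustrated with a small vector-clock detector. This is a minimal sketch and not LiteRace's actual implementation: the log format, the function name `find_races`, and the event labels are all assumptions made for the example.

```python
from collections import defaultdict

def find_races(log, num_threads):
    """Return pairs of access labels that are unordered by happens-before.

    Hypothetical log format: (thread, op, target, label), where op is
    "acquire", "release", "read" or "write", listed in execution order.
    """
    # One vector clock per thread; each thread starts at time 1 for itself.
    clocks = [[0] * num_threads for _ in range(num_threads)]
    for t in range(num_threads):
        clocks[t][t] = 1
    lock_clock = {}               # lock -> clock at its most recent release
    history = defaultdict(list)   # variable -> earlier accesses
    races = set()

    for thread, op, target, label in log:
        vc = clocks[thread]
        if op == "acquire":
            released = lock_clock.get(target)
            if released is not None:
                # Acquire joins the releasing thread's clock: a cross-thread edge.
                clocks[thread] = [max(a, b) for a, b in zip(vc, released)]
        elif op == "release":
            lock_clock[target] = list(vc)
            vc[thread] += 1
        else:
            is_write = op == "write"
            for other, other_vc, other_write, other_label in history[target]:
                conflicting = other != thread and (is_write or other_write)
                ordered = other_vc[other] <= vc[other]
                if conflicting and not ordered:
                    races.add((other_label, label))
            history[target].append((thread, list(vc), is_write, label))
    return races

# A properly locked write of X by thread 0 followed by a locked read by thread 1.
full_log = [
    (0, "acquire", "L", "acq0"), (0, "write", "X", "write_x"), (0, "release", "L", "rel0"),
    (1, "acquire", "L", "acq1"), (1, "read", "X", "read_x"), (1, "release", "L", "rel1"),
]
print(find_races(full_log, 2))                                          # no race
print(find_races([e for e in full_log if e[3] != "read_x"], 2))         # still no race
print(find_races([e for e in full_log if e[3] != "rel0"], 2))           # false race
```

Dropping `read_x` from the log leaves the report empty, so sampling memory accesses can only cause missed races. Dropping `rel0` removes the only cross-thread edge, so the detector reports the properly synchronized pair as a race, which is why every synchronization operation has to be logged.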
So this is not really great. And in fact, we can do a whole lot better. We end up sampling only around two percent of memory accesses and still find 70 percent of the races in the program. Yeah? >>: [Indiscernible] do the sampling? >> Dan Marino: So sampling is based on this cold path hypothesis. And we consider code to be cold if it hasn't been executed before in a particular thread. Okay. And that was important. It turns out that we did this per thread. And so the hypothesis was, especially if you have a well-tested program, that the races between hot paths are already going to have been found and fixed, and that we want to focus our effort on these per-thread cold paths. >>: Is it possible to locate all the synchronization of the [indiscernible]? >> Dan Marino: So -- well, I mean, the cost of logging all of the synchronization operations plus the two percent of the memory accesses ended up being a 28 percent slowdown on average over our benchmarks. It varied a bit. >>: So only 28 percent, most of [indiscernible]. >> Dan Marino: I would say that that is true. This has been a while. I think I did break down exactly what the synchronization cost was versus the memory, but I would say that most of it was [indiscernible]. Right. So here are those results that we got. These first two columns were calculated by running a special version of the tool that actually logged all accesses, so that means it's actually performing full data race detection, but noting along the way which of those accesses would have been sampled by our sampler. We then find all of the distinct pairs of racing instructions that were exhibited during that execution and see the percentage of those that would have been found if we only used the memory accesses that would have been sampled. So that's how we get this number of an average 67 percent of the races exhibited during a particular execution found by our sampler.
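A per-thread cold-path sampler and the detection-rate measurement described above might be sketched as follows. The talk does not give the sampler's actual policy, so the thresholds `cold_limit` and `hot_period`, the class name, and the input format of `detection_rate` are assumptions chosen only to make the idea concrete.

```python
from collections import defaultdict

class ColdPathSampler:
    """Decide per (thread, function) whether to log memory accesses.

    Assumed policy: the first `cold_limit` executions of a function in a
    given thread count as cold and are always logged; after that only one
    execution in every `hot_period` is logged.
    """

    def __init__(self, cold_limit=10, hot_period=1000):
        self.cold_limit = cold_limit
        self.hot_period = hot_period
        self.counts = defaultdict(int)   # (thread, function) -> executions seen

    def should_sample(self, thread, function):
        key = (thread, function)
        seen = self.counts[key]
        self.counts[key] = seen + 1
        return seen < self.cold_limit or seen % self.hot_period == 0


def detection_rate(race_instances, sampled_accesses):
    """Fraction of distinct static races a sampler would have found.

    race_instances: (static_pair, access_a, access_b) tuples taken from a
    full log; a static race counts as found if at least one dynamic
    instance has both of its accesses in sampled_accesses.
    """
    all_pairs = {pair for pair, _, _ in race_instances}
    found = {pair for pair, a, b in race_instances
             if a in sampled_accesses and b in sampled_accesses}
    return len(found) / len(all_pairs) if all_pairs else 0.0
```

Because the counters are keyed by thread as well as by function, code that is hot in one thread is still treated as cold the first time another thread runs it, which is the per-thread notion of coldness described in the talk.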
And then this is the effective percentage of memory accesses that were logged in each of those applications. And then of course over here, we ran the regular tool without doing the full logging to see what its performance would be, and for this full logging slowdown, we took out all of the overhead of deciding whether we were sampling or not and just went as fast as we could using the same infrastructure to log all the memory accesses and synchronization operations. So doing that full logging, we slowed down seven and a half x as opposed to just a 28 percent slowdown for the LiteRace version. >>: How do you know how many races -- how do you know all the races? >> Dan Marino: I know all the races that are exhibited during a particular execution. >>: So like a separate tool. >> Dan Marino: Yes. It was a tool that logged all memory accesses and all synchronization operations. And so from that I can do happens-before race detection and exhaustively find all the races that were exhibited during that execution. >>: [Indiscernible]. >> Dan Marino: Well, you know, you have to argue with a developer to tell them that they're [indiscernible]. You know, we did find something that at least we could get people to admit were performance problems because of these races, like missing -- scheduling something because of a race or something. >>: [Indiscernible] safe to use even though they're [indiscernible] -- >> Dan Marino: I don't think so. I think that we should [indiscernible] for having absolutely data race-free programs. That's why DRFx seems like a reasonable memory model to me. >>: [Indiscernible] funny [indiscernible] how many you find [indiscernible] and then there isn't nothing [indiscernible] no one really cares [indiscernible] races. >> Dan Marino: Yeah. I wish that we could change that perception.
I really do think that it's going to get worse as we get more and more concurrency going on. Many of these data races actually exhibit real problematic behavior. >>: Are you saying like if just in the real executions, almost never observe the bad behaviors [indiscernible]? >>: I think the problem is that developers think [indiscernible]. >>: It means like race detection are not useful [indiscernible]. So I'm kind of wondering like how many races are [indiscernible]. >> Dan Marino: Yeah. Excellent question. And it's something that we've struggled with in analyzing this because diving into a code base like this that's large and then trying to determine whether some race report is potentially benign, you know, my initial reaction, because it's just my attitude towards this, is you shouldn't be programming with data races so this isn't a benign race. But you know, looking at that with, okay, when I really reason about this, do I think it can exhibit bad behavior? That's difficult to do. And so I would like to know how many of these were quote/unquote benign or intentional. >>: [Indiscernible]. >> Dan Marino: For sure. >>: So [indiscernible]. So what should I do to write this lock? >> Dan Marino: Well, I mean, that's a difficult question. That's a practical issue that you have to deal with now. You know, I mean, I would say long term, you want to take away the incentive for doing something like that. You want to, you know, have your hardware or your compiler be smart enough that it can make a high-performing lock for you without you saying exactly, you know, to use these operations. >>: [Indiscernible] take over responsibility [indiscernible] synchronization. >> Dan Marino: [Indiscernible] and I don't think that when it comes to systems code that that's always going to be feasible. I think you're always going to have someone who needs to get their hands in at the low level and do some of this stuff.
And for that, you know, I don't think that this necessarily addresses that, but I mean, ideally, you want to be able to verify that -- I think you have a tool for verifying fences -- you have the right fences in. So I mean, low-level verification would be great. I think that the bottom line is that you need to have the developer explicitly annotating this kind of behavior in a readable way so that tools are able to reason about it and help with that. I think that that's key. Yeah? >>: So [indiscernible] races found [indiscernible], tens or hundreds? >> Dan Marino: For most of these executions -- so let me distinguish. First of all, a particular static data race, what I'll call a static data race between two lines of code, may exhibit itself many times during an execution. And so I think for some of these you're talking about definitely hundreds if you count repetitions. If you just count the number of static races, which is what I'm doing here, because generally the sampling version, you know, only found a couple of instances of one that was exhibited a lot of times in the full log, then you know, I think that for ConcRT and Dryad, it was down around 15, you know, that kind of order of magnitude. >>: [Indiscernible]. >> Dan Marino: It's static. >>: So did you take a look at any [indiscernible] and see if they were for instance [indiscernible]. >> Dan Marino: I did not see if any of them were [indiscernible] reports for Firefox. For ConcRT, we did track one of them and go to the developer, and they were convinced that it was benign in a sense: a task that was ready to be scheduled wouldn't be picked up for some delayed amount of time because of the race, but they were willing to live with that. >>: Did you get an understanding that with that performance [indiscernible]. >> Dan Marino: I also think that people don't like to fix races because it's not always easy to do.
You don't know exactly what synchronization you want to use without being very coarse [indiscernible] causing a lot of performance [indiscernible]. Yeah? >>: Is there any way that you can test your [indiscernible] model [indiscernible] to see which ones [indiscernible] actually still [indiscernible]? >> Dan Marino: That's interesting, to see whether the happens-before races were very far apart so that they wouldn't cause any problem? That would be an interesting way to combine this, yeah. >>: You don't have that -- >> Dan Marino: I don't have that information. So I'm not going to describe all these different samplers that we used, but I just wanted to show this because it's kind of a cool graph. These cold path hypothesis samplers are these two on the left. The bar shows the percentage of data races that they found in executions and the dot shows the percentage of memory accesses that were sampled. So you want a dot closer to the bottom on a tall bar. So these obviously are doing pretty well. Just for fun, I did this uncold path sampler, which logged everything except for the accesses that would have been logged by a cold path sampler, to see what happened. And obviously, 99 percent of the memory accesses were logged and we still only found a third of the races that were exhibited in the program. And I don't have any really deep idea of exactly why this is the case, but it is good empirical evidence that this cold path hypothesis is reasonable. So just real quickly, you know -- yes? >>: [Indiscernible]? >> Dan Marino: No. Because of this idea that a static race may exhibit itself multiple times during an execution, yes, exactly. >>: Since it's static, why would -- if you have a hot path race, then this would not [indiscernible]. >> Dan Marino: Lower frequency [indiscernible]. So I'm going to just quickly sort of position this among some other related work on both DRFx and LiteRace.
Obviously DRFx is a continuation of all the work that's been done in memory models, both at the hardware level and the programming language level. And you know, what we wanted to do was give as strong a guarantee as we could while still allowing a lot of these optimizations, and do it by using this idea of race detection. There's also been a lot of work in hardware and software race detection, but these solutions either weren't high performing enough to use in DRFx or, if they sacrificed precision, they didn't do it in a way that we could sort of harness to still give strong guarantees. So we had to sort of do something different there. Obviously LiteRace is a software race detection tool and it was the first one that used sampling in order to make things lightweight. There was work back in the early '90s on detecting SC violations that we were inspired by for the DRFx work, where in the hardware they detected races in in-flight instructions in the processors and then terminated the [indiscernible] race or terminated the program if that happened. And so our insight here was, okay, that only gives you SC for the compiled binary. How are we going to raise that all the way up to the programming language level to give an end-to-end guarantee? So the idea of regions was key there in order to allow that to happen. There's also been other work on memory model exceptions. We're not the first to suggest that it would be nice if we could terminate a program when there was a data race; that's been suggested in the context of full data race detection, and then in work that was concurrent with ours, this conflict exception work by Lucia, et al. They do something very similar where they detect concurrent conflicts between regions, but they don't do this bounding that we do. And in fact, that allows them to give a slightly stronger guarantee, which is serializability of synchronization-free regions of code.
The trade-off for that is that they have to handle this sort of unbounded detection scheme, and it makes the hardware more complex. So I'm running a little long so I'll try and quickly finish up here, but I'm going to discuss a little about some ongoing work and future work. So I wanted to pose a question: Is SC actually too slow? In the DRF0 models and the DRFx work, we're all sort of working under this assumption that, you know, if you try and do SC, then you can't do all of these optimizations and your code is going to perform horribly. But then you notice that in the hardware community, there's been all this architecture research that shows how you can really reduce the gap between a model like SC and a really weak memory model like release consistency. And it's not just in the research. In fact, x86 provides a variant of TSO, which in many senses is a very strong memory model. It's not as strong as SC but it has a straightforward operational semantics that's recently been described. It's easier to understand than, for instance, the Java memory model. And clearly it's giving stronger guarantees than the C++0x memory model because it doesn't behave arbitrarily in the face of data races. So if the hardware community is able to do this, maybe the compiler is where we're going to lose all of our performance. So you know, what's going to happen if we try and make an SC-preserving compiler? And so the idea here was I'm going to take LLVM, which is a state-of-the-art optimizing C compiler, and then check every transformation, every optimization that it does, for its potential to reorder accesses to shared memory. Then I disabled the passes or modified them to be SC-preserving, because you only have to disable the pass, for instance, for shared variables. You can still allow reorderings of local variables. So that's what I did when I was modifying these passes.
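Why a pass has to be restricted on shared variables can be seen by enumerating every sequentially consistent interleaving of a tiny program before and after eliminating a redundant load. This is an illustrative sketch only and is not taken from LLVM or from the modified compiler in the talk; the instruction encoding, the variables `X` and `Y`, and the registers `a`, `b`, `c` are hypothetical.

```python
def interleavings(first, second):
    """Yield every merge of two instruction lists that keeps program order."""
    if not first or not second:
        yield list(first) + list(second)
        return
    for rest in interleavings(first[1:], second):
        yield [first[0]] + rest
    for rest in interleavings(first, second[1:]):
        yield [second[0]] + rest

def sc_outcomes(writer, reader, registers):
    """Final register values reachable under sequential consistency."""
    outcomes = set()
    for schedule in interleavings(writer, reader):
        memory = {"X": 0, "Y": 0}
        regs = {}
        for op, dst, src in schedule:
            if op == "store":       # shared variable dst := constant src
                memory[dst] = src
            elif op == "load":      # register dst := shared variable src
                regs[dst] = memory[src]
            elif op == "copy":      # register dst := register src
                regs[dst] = regs[src]
        outcomes.add(tuple(regs[r] for r in registers))
    return outcomes

writer = [("store", "X", 1), ("store", "Y", 1)]
# Original reader: a = X; b = Y; c = X
original = [("load", "a", "X"), ("load", "b", "Y"), ("load", "c", "X")]
# After eliminating the second load of X: a = X; b = Y; c = a
optimized = [("load", "a", "X"), ("load", "b", "Y"), ("copy", "c", "a")]

regs = ("a", "b", "c")
new_behaviors = sc_outcomes(writer, optimized, regs) - sc_outcomes(writer, original, regs)
print(new_behaviors)  # {(0, 1, 0)}
```

The extra outcome `a=0, b=1, c=0` cannot occur in any interleaving of the original program: seeing `Y == 1` means the store to `X` has already happened, so the later load of `X` must return 1. Reusing the earlier value is valid for a sequential program or a thread-local variable, which is why the restriction only needs to apply to accesses that may be shared.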
And this included things like loop invariant code motion, global value numbering, which does a form of common subexpression elimination, and many other passes. So I took this new compiler, which I should distinguish from other work on SC compilers that actually insert fences to make sure that your program behaves SC on a particular architecture, because that is not what this is doing. This is just not performing any non-SC optimizations itself. And then I measured the slowdown of this restricted compiler when running on stock hardware over the fully optimized version of LLVM. And these are the results that I saw over the PARSEC and SPLASH benchmarks. On average, 2 or 2 and a half percent slowdown. Now, for this [indiscernible] application, we see a 35 percent slowdown, which is pretty severe. But for most applications it looks okay. In fact, you know, in some cases, the compiler appears to have been stepping on its toes a little bit, and we get slightly better performance when we disable some of these optimizations. So is two and a half percent too much to pay for an understandable memory model? I would say absolutely not. 35 percent might be, though. So if you are really interested in writing facesim, then maybe that's going to be a problem. So I was thinking, you know, hardware manages to close the gap by performing optimizations optimistically under the assumption that there are no data races and then recovering if there's a potential race encountered at runtime. And it does this by monitoring cache traffic. So it says, you know, if no one has accessed this cache line since I got it, then everything is okay. My optimization was okay. Otherwise I squash the computation and reexecute.
If we can change the hardware so it actually exposes this mechanism to the compiler, then we can do the same kind of thing in the compiler, where we optimistically optimize and then, if we notice a race, we roll back and execute unoptimized code. And that actually allowed us to reduce the overhead on facesim by half just by applying this technique to a single optimization pass, the common subexpression or global value numbering pass, actually, in LLVM. So just before concluding here, I want to point to this quote by Sarita Adve and Hans Boehm, who both have a lot of experience working in this area of memory models. Sarita was very instrumental in the Java memory model and Hans in the C++0x memory model. And in their Communications of the ACM article recently they said: We believe a more fundamental solution to the problem will emerge with a co-designed approach, where future multicore hardware research evolves in concert with the software models research. And this is exactly what we were doing in DRFx, and I think to a very good effect. And I think that we can continue to do that into the future. In the near term, I would like to think about ways of adding additional flexibility to the DRFx memory model. You know, is there a way that we can support sort of explicit benign races in the program without allowing those to sort of bleed in and violate the safety guarantees that we want to provide? You know, or is there sort of an analog to the C++ low-level atomics that gets us more information about exactly how synchronizations are being used? In terms of this SC-preserving compilation, this is a surprising result, this thing that on average we're only seeing a two percent slowdown by disabling all of these optimizations, so we need to keep on doing this on some more benchmarks and sort of see exactly which optimizations are important in what situations.
And additionally, I'd like to be able to sort of formally capture exactly what kind of optimizations these interference checks allow, which is the [indiscernible] I was talking about that allows the compiler to do the thing similar to the hardware: to optimize optimistically and then to roll back if interference was encountered. And then, you know, more long term, I think that this holistic approach of considering all levels of a programming stack is going to be really important. And I think we need to allow programmers to express the intended concurrent behavior of a program or the intended synchronization discipline, to express that in a reason-readable format at the high level. You know, for many applications, hopefully that takes the form of some kind of declarative description of the parallelism they want to see, but for some low-level system stuff, you know, we may still need to have low-level synchronization disciplines like read-copy-update. But hopefully we can communicate exactly how we're intending this discipline to work so that our compilers and static analysis tools can take advantage of it. In the case over here of the more high-level parallelism descriptions, the compiler can take care of generating high-performing low-level parallel code. And over here, you know, hopefully we can flag discipline violations and bugs that the systems programmer was unaware of. And I also think there's more opportunity here at the interface between the architecture and the compiler for getting higher performance out of our parallel programs. For instance, you know, maybe having the compiler somehow distinguish local variable accesses from shared variable accesses to the architecture so that it's able to perform more aggressive optimizations on local things, just like the compiler can. Or somehow communicate memory-sharing patterns to get us better performance.
And in short -- and I think this applies not just to multicore architectures but to all the future architectures that we're going to see. So together, I think with this holistic approach across the stack, and hopefully harnessing some cooperation, we can get programmers to develop fast and safe concurrent systems. And that's it. [Applause.] >>: [Indiscernible]. >> Dan Marino: Well, I would -- so what I'm worried about are the programmers that are not intending to write programs that way and are actually writing racy programs unintentionally. And that's where I think that -- now, I mean, if your question is if I had static techniques that allowed me to guarantee race-free programs while still being expressive and high-performance, I say that that is great. But it's proved very difficult to try and achieve that. And so I think that whether it's DRFx or some other technique, this idea of leaning on the hardware a little bit to let us do this, you know, more easily, is reasonable. >>: So if the programmers [indiscernible], they should use better [indiscernible] or something, but if they have races in their programs, [indiscernible] then seems like LiteRace would be a better attack to find those [indiscernible] and change the programs. >> Dan Marino: Although -- I mean, I agree. Although, the problem with that is that you're still not guaranteeing that you're going to find all the races. >>: But even if you guarantee [indiscernible] consistency, if the programmers did not realize that there were races in the program to begin with, the [indiscernible], right? >> Dan Marino: That's true. And yet, I mean, the nice thing there though is at least you can reason in that natural intuitive way about what you're seeing happen. You know, you can say, okay, this must have happened before this, which happened before this, and then got interleaved this way, as opposed to thinking, this statement could have arbitrarily executed early.
That's why I'm seeing this value in this register. So it really does become a debugging nightmare when you have these weak memory model effects. >>: Thank you. [Applause]. >> Dan Marino: Thanks.