
37007

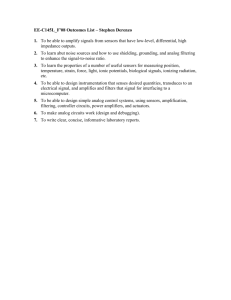

Lenin Ravindranath Sivalingam: Okay. We'll get started. It's my pleasure to introduce Robert back here. As you know, he interned here with us two times, I think in 2011 and '12. He was one of our most successful interns, and the paper that came out of his time at MSR is one of the best papers around. He's been very prolific as a grad student. He just told me he also has a new paper, so he works pretty much across the stack in thinking about continuous mobile vision. I'll hand it to Robert at this point. Thank you.

>> Robert LiKamWa: Thanks. Just to correct the record, it was 2012 I was at Microsoft Research Asia. Oh, no, sorry: '11 was Asia, '12 was here, and '13 was here as well. So, yeah, I had a great time, too. As he mentioned, I'm Robert LiKamWa, and I'm from Rice University. I work in mobile systems, and in my research I've had the luxury of operating across three traditional domains (computer architecture, operating systems, and machine learning), all to advance what I consider to be the frontier of personal computing: mobile systems. It's fun to take a step back, look at the frontier of personal computing, and take a philosophical lens. It's always been input that drives computing. The more the computer can sense, the more it can serve us. Sense more, serve more. In fact, at the dawn of personal computing, with the personal computer, the game changers were the keyboard and the mouse, with which the computer could sense the user's desires and serve content. With smartphones, we allow it to sense our location and our social networks, and it can serve us richer content as well. And with wearable devices, we allow it to sense our activity and our biometric information, and it can serve us personal information. But the question is always: what's next?

What is the ultimate form of input that we can provide to our personal computing devices? My answer to that question is vision. This is the ultimate form of input. We as humans use vision to drive our interactions with the world around us: recognizing different faces, different objects in a room.

Computers similarly need that vision in order to connect to the world.

Imagine this busy workplace environment. If computers had vision, they could look out for important people and remind us to tell them important things.

If computers had vision, they could keep a social log of the people we've been talking to, day in, day out, and remind us: hey, I haven't chatted with Fred very much recently; maybe I should catch up with him over a coffee. If computers had vision, they could maintain a visual search engine of all the objects in our lives. So I could say, hey, Siri, where are my keys?

Hey, Cortana, where are my keys? And it would tell me, hey, they're on your dresser, go grab them and get out the door. So this would be a great future, where you could continuously observe from a mobile device to provide vision services, what we call continuous mobile vision: showing the computer what we can see so that it can serve us more effectively. But the question is always: why can't we do this today? I mean, we have high-resolution image sensors that can see the world, right? And we have vision algorithms that work very, very well today. But there are two critical barriers, one physical and the other social. The physical one is energy efficiency, and I spend the bulk of my time working on this problem, so the bulk of this talk will also be about energy efficiency. But moving forward, I'm also very interested in the privacy aspects related to continuous mobile vision.

And these barriers impact many different form factors, whether it's the phone, smart glasses, or the personal drones that will fly around. To study these barriers, I like to tear apart systems and rip out the guts. So here's Google Glass. We shattered it apart, took out the cage for the battery, and hooked up wires so we could connect it to a Monsoon power monitor. We took some thermal measurements as well, just to see what's going on. It should come as no surprise that because Google Glass has a very small wearable battery, 2,100 milliwatt-hours, it dies in 40 minutes when you try to do any vision task on it. My apologies. Okay.

So this is not only a problem because the battery is small and you have to recharge it; it's also unsafe. Every watt of power that you draw turns into heat: every watt translates into 11 degrees Celsius of surface temperature differential. That means when you're doing video chat, which raises you to three watts, the surface reaches 56 degrees Celsius, which is above the threshold for burning skin. So wearable technology is really hot stuff. It's face-melting technology. But this is really a problem, because it means power consumption is inconvenient, uncomfortable, and unsafe. And I'm not just picking on Google Glass here; any of the other solutions will also have to face these problems, either dissipating that heat properly or, better, not generating that power in the first place. So towards efficient vision, we need to set an ambitious goal. I set ten milliwatts, which is the average idle power consumption of smartphones today. That's what we need it to be for these devices to be always on, all the time.

Sharrod.

>> So are you telling me that Google Glass didn't have the protections built in to prevent the application developer from burning your skin?

>> Robert LiKamWa: Yes.

>> Okay.

>> Robert LiKamWa: However, these numbers are with the device not attached to a human. Right? This is in free space. So it could be that the human is a nice cooling agent, which is nice in colder climates. But anyway, we need to set this ambitious goal of ten milliwatts, which would completely eliminate these temperature concerns altogether and free us from the outlet. That small wearable battery would last an entire week, and it opens the door to energy harvesting from solar or from WiFi to power these devices continuously. So ten milliwatts would be really great, if we could achieve it. But if we look at where we stack up today, you can see we have a long way to go to bridge this gap. To bridge that gap, we need to study the entire vision system stack, from the top all the way to the bottom: from the applications, to the operating system, to the sensor itself. The problem is that all of these elements have been provisioned for high-quality photography and high-quality videography, and we should instead provision for vision, rethinking the vision system around the task at hand. Because I've considered things at the application, operating system, and sensor levels, this also conveniently serves as the outline for my talk today. So let's begin with applications. Thinking of vision in the future, you don't just have one of these applications running. You have tens of them running, from different third-party developers, providing social analytics or remembering what food we ate, and the list could go on forever. So we need to provide scalable support for all of these applications running at the same time.

We need concurrent library framework support. If we look at today's application support for vision applications at a very high level, it looks like this: your application pulls frames from the camera service and does things with each frame; for example, it can provide a social logger here. But if you look at what developers actually do today, they don't write the vision algorithms themselves. Instead, they call into vision libraries like OpenCV using vision headers, and at runtime the application binds to that vision library, so it becomes a single process that pulls in the frames, scales them down, and detects the faces in them. Yes.

>> What are vision headers?

>> Robert LiKamWa: So headers to the library.

>> Oh, header to the library.

>> Robert LiKamWa: Library headers, yes. The calls generate vision primitive objects for the application, so the vision library is the one doing all these duties, and the application can use these primitive objects to do its tasks and run. That works very well for a single application. But how does that work when you have multiple applications running at the same time? Well, you need to maintain these key objectives. First, application isolation: one application should not impact the correctness of any of the other applications. Second, we need to be developer transparent. Yes?

>> One thing: you showed that gap from 2,000 milliwatts to ten milliwatts, right? Are there any estimates out of [indiscernible] about how much humans spend on [indiscernible]? Like, how much is the energy budget in terms of calories or whatever?

>> Robert LiKamWa: Yeah. How many hamburgers per --

>> Exactly. How many hamburgers per --

>> Robert LiKamWa: I calculated that once a long time ago. I don't remember now, though. I will calculate it again for you sometime.

>> Because that would be sort of the upper bound, right?

>> Robert LiKamWa: Not necessarily. We could do better than humans if we have things that humans don't have access to, including the cloud, for example. Right? There's an extent to which the human is not optimized. We can optimize beyond a human. We as a human race can optimize beyond a human.

>> Okay.

>> Robert LiKamWa: Our calculators can calculate much faster than I can do a square root. Okay. So, developer transparency: developers shouldn't have to worry about the guts of the system. They should only be focused on writing their application against the very high-level primitives. And we need to provide sufficient performance and efficiency so that we don't burn the user every time they use these applications. Yes?

>> So you set the goal at ten milliwatts, right? And then you went into supporting multiple applications. Wouldn't you want to do it for one app first before you decide to do it for all these other applications? I mean, a lot of times, I don't know how many applications run at the same time [indiscernible] algorithms. Do you have a sense of that, for Google Glass or anything else?

>> Robert LiKamWa: Right. Yes. So your question is: why don't we optimize for one first and then optimize for many? And you raise another interesting point, which is that today, on our smartphones, we only run one application at a time in the foreground anyway, so we should only optimize for one. But in the future I'm positioning, we have a wearable device that's always on, always looking out, and you don't want to have to context switch between these applications as a user. That would be a big burden on the user of this continuous device. So for this future, whatever support we design for one application needs to map to all the other applications as well. And I will get into how we optimize single-app performance later.

>> So your ten milliwatts [indiscernible] is cumulative across all [indiscernible].

>> Robert LiKamWa: Yes.

>> Okay. And what's Facebook here?

>> Robert LiKamWa: Maintaining another social log experience.

>> My life bits.

>> Robert LiKamWa: Yeah.

>> [Indiscernible].

>> Robert LiKamWa: Recognizing faces, remembering that you've seen people before.

>> If you see somebody, you want their Facebook update. You don't want [indiscernible].

>> Robert LiKamWa: Right.

[Laughter]

>> Robert LiKamWa: Could be. Could be. So let's move forward. Today's camera service is typically used as the boundary. That's great because it preserves application isolation: the camera service provides a separate frame to each application, and the applications can operate on those frames independently. But while that's great for application isolation, performance and efficiency are poor, because there's a lot of redundant computation and memory across the system. Even though the application on the left has already taken that frame, scaled it down, and found a face in it, the other applications have to do those exact same things, creating a lot of redundant operations in the system and leading to poor efficiency and performance. The natural thing to do, then, would be to reduce that redundancy, following the systems principle of eliminating redundancy: have a single computation and a single instance in physical memory, sharing these common primitives among the applications at the library layer. That would be great for performance and efficiency, but it would have poor application isolation: if one application maliciously modifies that single instance in physical memory, the modification would spread to all the other applications as well.

So this would fail to provide application isolation and would propagate accidental and malicious errors. Instead, what I suggest is using library calls as the boundary. Our key idea is to virtualize the vision library calls, turning the procedure calls into remote procedure calls and thereby centralizing those function calls, so that we can gain efficiency and performance by understanding the redundancy in the system. Built on that key idea, I present our Starfish design. David has a question.

>> That's just [indiscernible], right?

>> Robert LiKamWa: Excuse me?

>> That's LRPC for local --

>> Robert LiKamWa: Local -- LRPC, either local or lightweight remote procedure calls. That's right. So, on the same device, yeah. On that key idea we present our Starfish design: a split-process library solution for efficient, transparent service to multiple applications. It consists of two components: a Starfish Library, which uses those vision headers to intercept the library calls, and a Starfish core service, which receives those library calls to execute, track, and share the different library call computations happening in the system.

>> I have a question. So this idea of memoizing these core calls [indiscernible], in particular in the context of sensors, for example in [indiscernible], which also did very similar things in the context of wearable sensors and so on. Where does this fall into that scope?

>> Robert LiKamWa: Sure. So your question is that a lot of people have studied memoizing, or providing services for multiple applications simultaneously.

>> Particularly using the memoization trick, and in particular in the context of sensors on wearable devices.

>> Robert LiKamWa: Yeah. So this is specifically for library solutions, where people are doing library processing. In ACE, which is a context-sensing work, right, the service is basically providing data. It's almost sensor data, whether fused or native from the sensors. What we're doing is abstracting the library processing itself. As a developer writes code using these vision library calls, that's the layer we're using.

It's about boundaries, basically. We're using the library calls as that boundary.

>> [Indiscernible]?

>> Robert LiKamWa: The idea is general to any sensor processing. However, with vision, we typically see a lot of redundancy between different applications needing the same primitives for their operations. Okay. I'm going to move a little bit forward, then. This works basically how you would expect: on the first call, the Starfish Library sends the call to the core service, and the core service checks whether it has seen this call before. Because it hasn't, it uses the vision library to execute the call, which takes 20 to 200 milliseconds depending on the call. After that, it stores the results and gives them back to the application. When a subsequent call comes in from another application, the core service has now seen the call before, so it can skip the execution altogether, avoiding that overhead and simply retrieving the results. That works not only for that application but for any subsequent application that comes along; now we have only the overhead of that single execution. So this split-process design works really nicely to minimize processing overhead, but it comes with its own challenges. We have to preserve developer transparency and library transparency: we want to support unmodified code.
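The hit/miss flow just described amounts to memoizing library calls in the core. Below is a minimal Python sketch under explicit assumptions: a call is keyed by function name, a SHA-256 digest of the input frame, and the remaining parameters; the cache is unbounded; and `toy_resize` and `MemoizingCore` are hypothetical stand-ins. The talk does not say how Starfish decides it has "seen this call before", so the keying scheme is illustrative only.

```python
import hashlib


def toy_resize(frame: bytes, step: int) -> bytes:
    """Hypothetical stand-in for an expensive vision call (20-200 ms in the talk)."""
    return frame[::step]


class MemoizingCore:
    """Hypothetical core service that executes each distinct call once."""

    def __init__(self, functions):
        self.functions = functions   # function name -> callable
        self.cache = {}              # (name, frame digest, params) -> result
        self.executions = 0          # how many times a real call ran

    def handle(self, fn_name: str, frame: bytes, *params):
        # Assumption: a content digest identifies "the same frame". A real
        # system might key on a frame sequence number from the camera service.
        key = (fn_name, hashlib.sha256(frame).hexdigest(), params)
        if key not in self.cache:            # miss: run the library once
            self.executions += 1
            self.cache[key] = self.functions[fn_name](frame, *params)
        return self.cache[key]               # hit: skip execution entirely


if __name__ == "__main__":
    core = MemoizingCore({"resize": toy_resize})
    frame = bytes(range(256))
    for _ in range(3):                       # three apps issue the same call
        core.handle("resize", frame, 2)
    print(core.executions)
```

Returning the cached object directly is only safe here because `bytes` is immutable; with mutable results, the core would need to copy or write-protect them to keep one application from corrupting another's view.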

This comes for free if we use the vision library headers as the boundary. We also want secure application service. Here, that means not only that one application should not impact the correctness of the other applications, but also that one application developer should not be able to infer anything about other developers' calls from the way their results come back from the core service.

>> So, on mobile devices today, I see these sorts of common functionalities that your mobile device or wearable would offer being provided by the hardware and/or OS manufacturer. For example, taking a picture on an iPhone is something that's hardware optimized: the OS provides a generic interface for other applications to use, and beneath that the hardware is optimized because it uses image sensor processors and whatnot.

Similarly, on wearables that track my physical activity or my location, again, there's a high-level interface that applications have, and the hardware or the OS optimizes underneath. So tell me, what is your vision here? Is the vision that some third-party library developer is going to provide that library, or --

>> Robert LiKamWa: So your question is who is the author of -- architect of for example Starfish, if it's to be implemented in a real system, right?

>> It's probably that, but really, you know, what is the natural evolution of these devices? Is it not natural for devices to provide hardware support and the high level interface to applications? Are we not already going to get there anyway because we've gotten there with phones and we've gotten there with physical activity trackers?

>> Robert LiKamWa: Sure. So, okay. Let me decode your question. Sure. So your question is about the natural evolution of sensor services: having a high-level application interface and low-level hardware optimizations. I believe this is a system optimization that would take place in the operating system itself, almost as a library accelerator of some sort, providing efficient concurrent service to multiple applications. That's where I see this sitting in that framework: applications don't have to worry about the guts of concurrency, and yet they get all the efficiency and performance benefits that come with it. So I think it falls into that model of providing nice abstractions with nice mechanisms underneath. Okay. So -- yes.

>> I also don't quite understand why you're worried about secure applications, basically delaying the cache retrieval. I mean, today, caches don't worry about anything like this, right? If I have an application [indiscernible], it's awesome. Everybody's happy. I mean, the [indiscernible]. Why are you worrying about this?

>> Robert LiKamWa: Well, increasingly, OSes are starting to worry about it. Not commercially, but in the literature, cache timing attacks are a threat.

>> Nobody is actually thinking about delaying cache retrievals [indiscernible]. Is it really a concern?

>> Robert LiKamWa: It is in Azure, in datacenters.

>> [Indiscernible] when the local, when they simulate local -- when they have SSDs locally instead of HDDs, they simulate the latency.

>> That's different. That's different. They're not separating applications. That's not separating applications. If you get a cache hit today on your PC because some applications [indiscernible] and it's in the memory, it works for you, right?

>> So Chrome actually does this [indiscernible].

>> Yeah, but that's in private data. It's explicit more or less that you don't want to remember anything --

>> [Indiscernible].

>> Robert LiKamWa: By the way, just to --

>> [Indiscernible] that's wrong. That's the answer. Just because it's being done, doesn't mean it's right. Think like that.

>> I think this is such a second level worry --

>> Robert LiKamWa: By the way, yes, I think it's also a second level worry.

>> [Indiscernible] second level worry to me too.

>> Okay, there you go. And I will do -- I will gladly [indiscernible].

[Laughter]

>> Robert LiKamWa: All right. Let's move forward, because this is not a -- it's basically a paragraph in the paper. It's a design decision we made to preserve application isolation, but it's not a significant pillar of the work, if you will. Instead, this last element is the doozy here.

>> [Indiscernible] I think it should be right.

>> Robert LiKamWa: Thank you, Victor.

[Laughter]

>> [Indiscernible].

>> [Indiscernible].

>> Robert LiKamWa: Well, I think it's -- okay. Anyway. I just want to move forward a little bit. This last challenge is actually very important, because there's a lot of overhead in argument passing and copying across these boundaries now that we've split everything into different processes. If we don't take care of argument passing, we create overhead that reduces the impact of our work. I think most of the people in this room know about argument passing, but for the people on the remote connection: your application and the core sit in different process spaces, different virtual address spaces. To communicate across them, you can use shared memory. The kernel can provide this shared memory, and now the application can write a frame into it; the Starfish core can copy the frame out, perform the processing to extract the face, and copy the face into the shared memory; then the application can copy the face out of the shared memory so that it can operate on it. This shared memory provides nice asynchronous access across these boundaries, which is really great, except that copying in and out of it is very expensive: a 3.5-millisecond overhead for each of these operations. Yes?

>> I'm confused now. So the motivation for this is to save power. That was the first slide, right?

>> Robert LiKamWa: That's right.

>> And then it's not performance because clearly, you're going to delay access.

>> Robert LiKamWa: Yes, right.

>> To the cache.

>> Robert LiKamWa: That's right.

>> So why are you telling us that now you're saving performance?

>> Robert LiKamWa: Because performance and efficiency are tightly coupled. That 3.5-millisecond cost is CPU cycles that we want to reduce.

>> So I should interpret that number as energy cost.

>> Robert LiKamWa: Right. Exactly, yes. So we want to minimize this [indiscernible] copy overhead.

>> How big is the image, just to get an idea?

>> Robert LiKamWa: 640 by 360 color images on Google Glass. It's a weird perspective, right? But it's 640 by 360 color image on Google Glass for these numbers. Now -- yes?

>> We're missing something here. Why are you copying these entire frames? Even in the networking stack, you just pass around pointers, right?

>> Robert LiKamWa: Oh, you can't just pass around pointers, because there are different address spaces. To copy your object into that memory, you have to allocate the shared memory, then copy your object in.

>> It's analogous to zero [indiscernible].

>> Robert LiKamWa: Yes, it is, actually. That's what I was about to get to: this has been studied for many different designs, even in the literature, I'd say, and much of it requires object redefinition. For example, in the network stack, or in lightweight RPC and Sun RPC, you design around the idea of zero copy, so your buffer is there in the first place and you write into that buffer. It's also been -- yes, Peter.

>> So, one question I have: if [indiscernible] included in the OS itself, right, then the application wouldn't really get the frame. It would simply say, whenever you see a face, give me the face, right? Then the app wouldn't get the whole frame every time. [Indiscernible] passing it back and forth, right? You would just get notifications [indiscernible] I see these faces, and then the OS would have to incorporate all these optimizations that you are talking about, but some of these would go away, like this one, right? All the apps would not be getting the frames. Some of the overhead would go away directly because you just get these notifications, right?

>> Robert LiKamWa: Yeah. I would concede that if you design your library solution around registering for a service that returns these objects, for example, then some of these problems go away, but some of these mechanisms would need to be embodied in that service as well. So this would need to be restudied in that kind of framework.

>> So you're assuming that the OS doesn't change.

>> Robert LiKamWa: Right.

>> Right? And then you --

>> Robert LiKamWa: I have designed this in user land. So, yes.

>> Correct.

>> Robert LiKamWa: Okay. So this has also been revisited for code offload to the cloud, because you want to reduce the overhead of network transmissions, which are very expensive. MAUI or CloneCloud, for example, use object tracking to reduce the number of copies they have to make over the network stack. But we have the luxury of being on the same physical device, so we can optimize for shared memory instead. So, building on wisdom from the literature, we designed three techniques to reduce all of these deep copies down to zero or one per RPC call. The first mechanism is very simple: a protected shallow copy. Once the core, for example, gets a handle on the shared memory, the data is in the right virtual address space, so we can just do shallow copies out of the shared memory to create the object. But we also have to protect it with copy-on-write to maintain application isolation. We can do that on the core and on the application itself, which eliminates two of these deep copies right off the bat. The second: because we control the vision library execution, we can intercept calls to malloc, interposing on malloc so that data is written directly into shared memory. Instead of returning newly allocated memory, malloc returns a shared memory segment, so the library writes directly into shared memory, and we can just do shallow copies when marshaling the object. The final one: we maintain an argument table on the Starfish application side. It tracks all the input and output arguments, linking the objects to their shared memory pointers, so that if the library has seen an object before, it doesn't have to copy it into shared memory again.

And this is actually quite useful for vision because you usually have streams of functions. One function's input is usually a previous function's output.

So it's typical to have seen an incoming object before, from a previous library call. All together, with all of these techniques, we're able to reduce those four deep copies to zero or one. There's a copy if the argument hasn't been seen before and isn't yet in the argument table, but otherwise we eliminate these deep copies entirely, which gives us great efficiency, as I'll show in the evaluation later.

That rounds out the split-process challenges and our solutions to them, which together realize the Starfish design. Now, I'd like to point out that while we designed Starfish for concurrent service, efficiency, and performance, it's also a nice centralized point to do some interesting things for privacy, such as access control. A Scanner Darkly is a nice work from Suman Jana at UT Austin that provides access control at a finer-grained level for the vision features themselves, and the Starfish core service would be a great place to enact those permission mechanisms. It would also be a great place to exploit hardware heterogeneity, whether through cloud offloading or hardware acceleration, because it has a bigger picture of all the operations happening, can collect knowledge of the tasks in the system, and can then map them much better to the hardware.

So it's a nice centralization point for that as well. These are some opportunities we see moving forward from the Starfish design, even though we designed it for concurrent service. But to validate our design decisions and evaluate the potential of Starfish to work across multiple applications, we first had to prototype it. So we built Starfish on the Android operating system with OpenCV, because it's a mainstream vision library, and we tested it on our Google Glass because we already had it hooked up to our battery monitor, but it would really work on any Android phone as well. If we look at the way the system works today, application developers typically use the OpenCV framework, which receives frames from the Android camera service through the `onPreviewFrame` callback, converts them to an OpenCV representation, and provides them to the application. So the application developer writes the `onCameraFrame` callback, where it gets that OpenCV frame and can perform calls on it through the vision library via the framework itself. Starfish sits directly on top of that, replacing the framework and the core service as well, so it doesn't touch the developer code; it just operates where OpenCV was performing its duties. This works not only for one application but for multiple applications in the foreground and background, all running happily on this device with concurrent service. That gives us a basis to evaluate our design decisions and the scalability of how well Starfish supports multiple applications running at once. To look at our design, we first looked at a per-call basis, at the resize call, to see the overhead there. Natively, the call takes about 21 milliseconds on that 640 by 360 frame. Once we apply Starfish without the argument-passing mechanisms, we incur a lot of overhead on both the input side and the output side, and we actually double the cost of the original call. After we apply our mechanisms, we can really slash that: through the argument-passing memory optimizations, we reduce it to three milliseconds of overhead. You may notice a notch here: executing the function takes less time with the Starfish mechanisms, and that's because we're hacking the malloc call. It doesn't have to allocate memory; the memory is already there as the shared memory segment, and the library can write directly into it. So we're actually saving time on the execution itself.

>> So just to understand, [indiscernible] application change?

>> Robert LiKamWa: No. It requires them -- a slight application change. They need to redirect through our framework instead of the OpenCV framework, but otherwise -- which could be done statically, actually, but we actually modified the applications.

>> So I saw that you're passing these pointers now, so the [indiscernible] is to keep track of the pointers, you know, from [indiscernible].

>> Robert LiKamWa: The application is maintaining that, and that's in the library, the Starfish Library.

>> Oh, the Starfish Library, I see. I see.

>> Robert LiKamWa: That's how we're providing it. So that was the first call. But for the subsequent calls, the native library has no concept of first or second and so it also consumes about 21 milliseconds. But once we provide Starfish, we can slash that down to six milliseconds of overhead.

And so all together, this works really well even for two applications when the native function execution takes over ten milliseconds, but it works even better when you have multiple calls into the same resize operation over and over again. And so to see that --
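Using the numbers quoted in the talk (about 21 ms per native resize, about 3 ms of Starfish overhead on the first call, and about 6 ms for each later identical call), a back-of-the-envelope model shows why sharing pays off once the call is expensive enough. The linear cost model and the function names are assumptions made for illustration, not measurements.

```python
def native_ms(n_apps: int, t_exec_ms: float) -> float:
    """Every application executes its own copy of the call."""
    return n_apps * t_exec_ms


def starfish_ms(n_apps: int, t_exec_ms: float,
                first_overhead_ms: float = 3.0, repeat_ms: float = 6.0) -> float:
    """Assumed model: one real execution plus overhead, then cheap repeats."""
    if n_apps <= 0:
        return 0.0
    return t_exec_ms + first_overhead_ms + (n_apps - 1) * repeat_ms


def break_even_exec_ms(n_apps: int, first_overhead_ms: float = 3.0,
                       repeat_ms: float = 6.0) -> float:
    """Call cost above which sharing beats native execution (n_apps >= 2).

    Solves n * t = t + first_overhead + (n - 1) * repeat for t.
    """
    return (first_overhead_ms + (n_apps - 1) * repeat_ms) / (n_apps - 1)


for n in (1, 2, 5):
    print(n, native_ms(n, 21.0), starfish_ms(n, 21.0))
# 1 21.0 24.0
# 2 42.0 30.0
# 5 105.0 48.0
```

With two applications the model breaks even at about 9 ms per call, in line with the remark that sharing already pays off for two applications when the native call takes over ten milliseconds.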

>> [Indiscernible]?

>> Robert LiKamWa: Yeah. But across multiple different applications. What we really want to evaluate is multiple applications, not just on a per-call basis but on an application-by-application basis. So for this, we evaluated multiple combinations of face detection, face recognition, object recognition, and homography detection, all running at the same time, but for this talk, I'll talk about one particular benchmark, which uses multiple instances of a face recognition application all running at the same time. When you run one application, we have a little bit of overhead here, from the native power consumption to the Starfish power consumption. But once we add more and more applications, the benefits become clear: while natively we would incur more and more power consumption, Starfish draws about single-application power no matter how many applications you add. It does rise slightly, but much more slowly than the original native execution. And so with this, we can provide a great concurrent library service to all these applications at once. But the problem is that that one watt is still very high. As Victor mentioned earlier, we should be optimizing that single-application power as well. So we need to dig down and characterize further where that power is going. We consider 450 milliwatts of it system overhead, because Android and Google Glass were not particularly designed for continuous mobile vision. There are going to be other system services running, telephony, for example, and other things, but we did look at what's fundamental to the vision tasks at hand here. 150 milliwatts of that is in processing. You can see that from the slope of the native execution here. But the remainder, about 500 milliwatts, is actually in image sensing itself. The act of capturing that image and providing it to an application is very expensive. And so we have to do --

>> But 150 milliwatts even seems very low to me. Is it a reasonable kind of face recognition that you're running and at what frame rate?

>> Robert LiKamWa: Oh, we're running at 0.3 frames per second, so about one frame every three seconds.

>> And what kind of face recognition algorithm are you running?

>> Robert LiKamWa: We are running -- we're using the hardware -- we're using the hardware to provide our face detection and then we're using face recognition using Eigenfaces.

>> So that's a very low --

>> Robert LiKamWa: Very small database.

>> What I'm saying is [indiscernible] far less than using a state-of-the-art recognition algorithm, and it would easily take at least a watt and Android [indiscernible] to increase recognition at 0.3 frames a second. So how representative do you think that number is for processing?

>> Robert LiKamWa: It's going to depend on your algorithm, like you're saying, and it's going to depend on the --

>> [Indiscernible] vision is working very well and all that, surely you're not [indiscernible] Eigenfaces, which [indiscernible]. You're probably talking about [indiscernible] networks and so on, right? I mean, that would take far more than --

>> Robert LiKamWa: We'll actually get there, to deep networks as well.

>> But [indiscernible].

>> Robert LiKamWa: Not 100 percent.

>> That's not the baseline --

>> Robert LiKamWa: No.

>> It will take you a few watts, maybe.

>> Robert LiKamWa: It will take you 400 millijoules per frame, actually, in order to do object recognition --

>> [Indiscernible]?

>> Robert LiKamWa: No. Using NVIDIA's Tegra K1, it took 400 millijoules per frame to do object recognition.

>> [Indiscernible].

>> Robert LiKamWa: That's right. But that's if you duty-cycle it.

>> All right.

>> Robert LiKamWa: Okay. In any case, one way or the other, we have to focus on these fundamental elements, and we see that image sensing itself is very expensive and we need to reduce it, because it's still keeping us very far from our goal. It turns out that this high power consumption of image sensing is due to the fact that the operating system is, again, provisioned for high-quality photography and videography, not for vision. We don't need the high-resolution images of high-quality photography and videography. Vision can actually handle lower-resolution images. In fact, GoogLeNet, for example, which was the state of the art for object recognition in 2014, takes only 0.1 megapixels as the input to its service. So vision doesn't need high-quality images. It would be great if image sensing were energy proportional. That is, we don't mind spending a lot of energy per frame if we're getting a high-resolution or high-frame-rate frame coming out. But on the other hand, if we want a low-resolution, low-frame-rate capture, we should pay a fraction of that price. It turns out this is not true in today's image sensors. Instead of the nice green line of energy proportionality there, the curve looks fairly flat, as we're still consuming a lot of power even as we move farther to the left, lowering the resolution of the frame. So to characterize and understand this, we tore apart five different image sensors from two different manufacturers to see what's going on here.

And we got a power waveform that looks something like this. You can see there's an analog line, a digital line, and a PLL line, but what's really interesting here, in power over time, is the shape: there's an active period where pixels are actively read out, one after another, after which there's an idle period where the sensor waits until the next frame is captured. And because energy is power times time, the area under the curve gives us some nice intuition into what's actually going on here. At high resolution, you get a wider active period.

And at lower resolution, you're getting a shorter active period. But the rest of that time is being filled up with that gray idle power. And so that's why, even as you move all the way to the left side of that graph, you're still consuming a lot of that idle power consumption and so that's what's actually limiting the energy proportionality of your image sensor.

Sharrod.
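The area-under-the-curve argument can be written as a two-level power model: the sensor draws active power while pixels are read out and idle power for the rest of the frame period. The sketch below assumes readout time is pixel count divided by a fixed pixel rate; the parameter values (300 mW active, 250 mW idle, 50 Mpixel/s) are hypothetical, chosen only to show the shape of the curve, and are not the measured values from the teardown.

```python
def avg_sensor_power_w(pixels: float, fps: float, p_active_w: float,
                       p_idle_w: float, pixel_rate_hz: float) -> float:
    """Average power of a sensor that is active during readout and idle otherwise."""
    period_s = 1.0 / fps
    t_active_s = pixels / pixel_rate_hz
    if t_active_s > period_s:
        raise ValueError("readout does not fit in the frame period")
    energy_j = p_active_w * t_active_s + p_idle_w * (period_s - t_active_s)
    return energy_j / period_s


P_ACTIVE, P_IDLE, RATE = 0.30, 0.25, 50e6  # hypothetical values

full = avg_sensor_power_w(5e6, 10, P_ACTIVE, P_IDLE, RATE)   # 5 MP at 10 fps
small = avg_sensor_power_w(1e5, 3, P_ACTIVE, P_IDLE, RATE)   # 0.1 MP at 3 fps
ideal = avg_sensor_power_w(1e5, 3, P_ACTIVE, 0.0, RATE)      # idle power removed
print(f"{full:.3f} W, {small:.3f} W, {ideal:.4f} W")  # 0.300 W, 0.250 W, 0.0018 W
```

Shrinking the frame fifty-fold and cutting the frame rate more than threefold barely moves the average power in this model, because the idle term dominates; setting the idle power to zero, which is what a standby mode approximates, makes power proportional to pixels read per second.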

>> So aren't you assuming in this analysis that the object in question fills up the large majority of the frame? Like, are you not also helping me remember where my keys are, which might be sitting on a table? So my head may not be right in front of the keys.

>> Robert LiKamWa: So what you're pointing out is a motivation for our work: if only a low-resolution image sufficed for everything, then we should just create a sensor that operates over here. And such sensors do exist.

Instead, we want to use the same sensor to capture high quality images and low quality images but also pay the energy price associated with that quality.

>> Okay. But the other place I was going to go was that I also want to be able to remember, you know, that Robert is over there and my keys are over there, roughly at the same time. Right? So I think your motivating application was to tell me both that Robert is over there and that my keys are over there on the other side of the view.

>> Robert LiKamWa: So what this opens the door to is putting your device into modes where you capture a wider view and see more things, but also target lower-resolution frames and pay an energy price that's proportional to that capture. So it allows you to do hierarchical algorithms, for example, that you trigger based on what you see in frames of different quality. Do you have a question?

>> My question is, can you define what you meant by idle power? What's happening in that time window you're showing in gray?

>> Robert LiKamWa: Functionally, not much is happening there. There is going to be some exposure time where it's collecting light and converting it, but for most of this time the sensor is just staying on: it's leakage, it's static power, which seems wasteful. It is wasteful, frankly. And because that idle power is so wasteful, we looked into the image sensors and asked what we can do there. It turns out that image sensors have a standby pin with which you can put your sensor into standby and then wake it up very quickly. That's intended for the case where you're going to wake up your phone while your camera app is on: you can keep the sensor in low power until you wake up the application. But the OS doesn't use this low-power mode during its actual capture mechanism. Because it assumes you have high-quality frames coming in at a high frame rate, it doesn't expect to spend time in that idle state anyway, so it assumes the sensor is always on. Instead, we need to use that pin properly, and so we have two driver mechanisms that we enable to provide this aggressive power management. The first is to turn off the sensor when you're not using it, putting it in that standby mode. That's a very good way to immediately eliminate the energy that would have been spent in that gray region. Now, you do have to turn on the sensor to expose it to light so that it can collect photons, turn them into pixels, and read them out in that purple region there. You can see how much energy you would save in between. The other optimization we do is to optimize the pixel clock frequency. This should be very familiar to people who work on processor clock optimizations: with a slower clock, you have lower power, but it takes more time. With a faster clock, it takes less time, but it consumes more power.
And so you can do your Calculus I and figure out where the minimum of that trade-off is, and we did, and we found that the optimal clock frequency is actually a function of the pixel count and the exposure time. These two mechanisms are fairly simple, but they're also very, very effective at creating energy proportionality. Before, no matter where you went in pixel count or frame rate, you were still consuming a lot of power, from that red down to that yellow at about 280 milliwatts.

Meanwhile, after we apply our driver-based management techniques, the plot gets a lot more colorful. It goes into the greens, the teals, the blues, the dark blues as you go farther and farther down in pixel count and frame rate, saving a lot of power proportionally. That means that for a 0.1-megapixel frame at three frames per second, we go from 280 milliwatts all the way down to 20 milliwatts, an order of magnitude in savings. So this energy proportionality actually really works.

Aleck.
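The claim that the optimal pixel clock depends on pixel count and exposure time falls out of a simple model. Assume, hypothetically, that while the sensor is awake it draws a fixed power plus a term proportional to clock frequency, P(f) = P0 + c*f; that it is awake for the exposure time t_exp plus a readout time N/f for N pixels; and that it sits in standby otherwise. Then the energy per frame is E(f) = (P0 + c*f)(t_exp + N/f), and setting dE/df = c*t_exp - P0*N/f^2 to zero gives f* = sqrt(P0*N / (c*t_exp)). The sketch evaluates this with made-up parameter values.

```python
import math


def frame_energy_j(f_hz: float, pixels: float, t_exp_s: float,
                   p0_w: float, c_w_per_hz: float) -> float:
    """Energy per frame under the assumed model P(f) = p0 + c*f, awake for t_exp + N/f."""
    awake_s = t_exp_s + pixels / f_hz
    return (p0_w + c_w_per_hz * f_hz) * awake_s


def optimal_clock_hz(pixels: float, t_exp_s: float,
                     p0_w: float, c_w_per_hz: float) -> float:
    """Closed-form minimizer of frame_energy_j: sqrt(p0*N / (c*t_exp))."""
    return math.sqrt(p0_w * pixels / (c_w_per_hz * t_exp_s))


# Hypothetical parameters: 0.1 MP frame, 10 ms exposure, 50 mW base, 1 mW per MHz.
N, T_EXP, P0, C = 1e5, 0.010, 0.050, 1e-9
f_star = optimal_clock_hz(N, T_EXP, P0, C)
print(f"optimal clock ~ {f_star / 1e6:.1f} MHz")  # optimal clock ~ 22.4 MHz
for f in (f_star / 2, f_star, f_star * 2):
    print(f"{f / 1e6:6.1f} MHz -> {frame_energy_j(f, N, T_EXP, P0, C) * 1e3:.3f} mJ")
```

In this model, longer exposures push the optimum toward slower clocks and larger frames toward faster ones; a real driver would also have to respect the sensor's supported clock range and the frame-rate deadline, which the sketch ignores.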

>> So if you looked at other smartphone OSs, do they all have this property that they don't turn the camera off into standby mode or is this just sort of an artifact of Google Glass?

>> Robert LiKamWa: Well, we saw this with Android, and we saw this with Linux as well with OpenMAX, and so everything that we have seen has been along these lines. We have not torn down iOS. I'm not familiar with it, nor with the Windows stack either. So --

>> Seems like such an obvious optimization. I'm surprised that the OS developers didn't...

>> Actually, a related question: doesn't 280 milliwatts sound very high for the state of the art, especially at three frames per second? Nowadays, at least in the last couple of years, [indiscernible] 50-milliwatt processors that do ten frames per second very comfortably at higher megapixel rates. So the hardware seems to be improving too, and --

>> Robert LiKamWa: But not the sensor hardware and there's actually a fundamental reason there. And I'll get there as well.

>> But that measured 280 milliwatts is an extremely high number for an imager today.

>> Robert LiKamWa: But that's because --

>> [Indiscernible].

>> Robert LiKamWa: Okay. Sure.

>> Is that representative of [indiscernible]?

>> Robert LiKamWa: The truth is I'm not sure. I haven't hooked up today's image sensors to these power monitors, but I have a feeling that they won't be, and I can explain that in just a few more slides.

>> That's fine.

>> So between these two [indiscernible], do we know which one contributed how much?

>> Robert LiKamWa: It depends on your exposure time. It depends on your environment, but about half and half is what we saw when we applied them one by one. So we've come a long way now from the stock two watts, reducing it by a factor of three using this concurrency service, Starfish, and another factor of three using this sensor management at the driver level. But there's still a long way to go to reach our goal of 10 milliwatts, and there's something very troubling that we see: the sensor itself is still too expensive at 20 milliwatts. Maybe it's surprising that I'm focusing on that purple segment when the red segment, the compute overhead, is so much bigger. But I think the compute overhead is not as fundamental, right? Along with Moore's law, which will continue for another five years, there's Gene's law, which says that compute power dissipation decreases exponentially as our transistors get denser. And that's not only because of density but also because specialization and integration have been pushing the compute power profile further down. So in five years, I expect it to go down an order of magnitude, if not more.

However, on the sensing side, there is something troubling: the analog readout consumes over 70 percent of the active power, so even if we eliminated the idle power entirely, the analog readout would still be far too expensive for what we're trying to do here. And even with today's image sensors, we're still going to have that problem, because ADC efficiency is fundamentally limited by this thermal slope here. kT/C noise limits the resolution to which you can read out your bits with a given energy: plotting energy per sample on this axis against the effective number of bits, you can see it's bounded by this thermal slope. So future improvements in ADC power efficiency are limited to less than one order of magnitude. It's not about the ADC itself but about how you use it: using it wisely. Right now, reading out the entire dense pixel array is not the wise way to use it; the analog readout is far too expensive. Instead, we should follow the systems principle of processing early and discarding raw data, something we've seen applied well for things like cloud offload.

We should do it here too, except that now our expensive resource isn't the network stack. It's the ADC itself. And so we should be doing vision processing even earlier, inside the image sensor itself. Yes?

>> Quick question. The chart that you showed the stack capture CMOS sensors, does that apply to CMOS or also CCD?

>> Robert LiKamWa: CMOS image sensors, but those are the most efficient, and their ADCs are designed for CMOS.

>> So the chart that you showed works for CMOS?

>> Robert LiKamWa: Yes.

>> Okay.

>> Robert LiKamWa: CMOS and BiCMOS. But yeah. So: process early and discard raw data, even in the analog domain. That's our key idea, but it's much easier said than done. Whenever you move anything into the analog domain, you face two key challenges: design complexity and noisy signal fidelity. In terms of design complexity, a key difference between digital and analog architectures is that there's no bus in the analog domain. You can't just pass data across a bus. Instead, you have to pass it across pre-routed interconnects. And these interconnects have to be carefully designed geometrically, because any wrong move can create congestion and overlap, causing parasitic effects on the signal and the efficiency. This complexity of passing data around limits the extent to which we can do analog computing. Another key difference between digital and analog is that in digital, you can snap your charge to a zero or a one to get your value. In analog, the charge is the value itself, so you can't snap it to anything. You have to deal with the noise inherent in your circuit, including thermal noise, which is very fundamental. For this kT/C thermal noise, you can trade noise off against capacitance: with high capacitance, you get low noise but high energy; with low capacitance, you get high noise but low energy. But the point is that you still accumulate more and more noise as you go through more and more processing, which again limits the extent to which you can do analog computing. We've seen this effect in previous analog architectures as well. A lot of people stay away from the analog domain for these reasons, and even when they do enter the analog domain, you usually see architectures with a DAC and an ADC on either side to maintain signal fidelity. As a result, the ADC consumes over 90 percent of the energy of these designs.
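The kT/C trade-off can be made concrete. The RMS noise voltage sampled onto a capacitor C at temperature T is sqrt(kT/C), while the energy drawn from the supply to charge that capacitor through a swing V is C*V^2. For a fixed signal level, every tenfold increase in capacitance buys 10 dB of SNR and costs ten times the energy. The sketch below assumes room temperature and a hypothetical 0.5 V signal; it is a first-order illustration, not a model of RedEye's circuits.

```python
import math

K_B = 1.380649e-23  # Boltzmann constant, J/K


def ktc_noise_vrms(c_farads: float, temp_k: float = 300.0) -> float:
    """RMS thermal (kT/C) noise voltage sampled onto a capacitor."""
    return math.sqrt(K_B * temp_k / c_farads)


def snr_db(signal_vrms: float, c_farads: float, temp_k: float = 300.0) -> float:
    """SNR when kT/C noise is the only noise source."""
    return 20 * math.log10(signal_vrms / ktc_noise_vrms(c_farads, temp_k))


def charge_energy_j(c_farads: float, v_swing: float) -> float:
    """Energy drawn from the supply to charge C through v_swing (half ends up stored)."""
    return c_farads * v_swing ** 2


for c in (10e-15, 100e-15, 1e-12):  # 10 fF, 100 fF, 1 pF
    print(f"{c * 1e15:6.0f} fF: noise {ktc_noise_vrms(c) * 1e6:6.1f} uV, "
          f"SNR {snr_db(0.5, c):5.1f} dB, energy {charge_energy_j(c, 0.5) * 1e15:7.1f} fJ")
```

Because noise from successive sampling operations accumulates, a multi-stage analog pipeline needs either larger capacitors or a tolerance for lower SNR, which is the trade-off the talk goes on to exploit.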

>> So what do you mean by analog computing? What are you actually trying to do? I mean, directly operate on every pixel's value or how --

>> Robert LiKamWa: So, your question is what am I doing in the analog domain?

>> Yeah, what is it like -- are you trying to execute some kind of filter, some kind of a neural network?

>> Robert LiKamWa: At this point I'm still talking philosophically. I'll get down to what we're physically doing as well. Philosophically first: it's going to depend on what you're doing in that analog domain. We see some key insights for vision tasks that support moving vision into the analog domain. The first is that noisy images are actually okay for vision. Taking convolutional neural networks, for example, which are really great for object recognition, you can see that we can reduce the signal-to-noise ratio very far to the left on this graph, down to 24 dB, before it significantly decreases accuracy. And as we do that, we can also reduce the energy of our computation, since we're admitting more and more noise into our circuits.

So while things do fall apart when there's too much noise, the network can still recognize objects very well at a low SNR. That's a key motivating factor for us, because it opens the door for our architecture development: we can be a little looser with our noise constraints.

The other nice thing is that vision is also well suited to the complexity problem, because vision is highly structured. ConvNets, again, are built from convolution elements and max pooling elements, so they reuse the same types of operations over and over again, and what's even better is that these operations are patch based. They are data-local operations: convolution, for example, applies weights to a patch of pixels to generate a value. Because the computation is data local and repetitive, it also opens the door for architecture development, so we went ahead and pushed forward with our RedEye sensor architecture. Eventually we'll have to decide what we want to do in the analog domain, but this early study lets us look into programmable analog ConvNet execution for the early stages of deep neural networks. We've designed it around a modular form factor for design scalability, so that we can keep the complexity local to the modules, and we have tunable noise so we can trade off accuracy and efficiency in the circuit. Altogether, it reduces the readout energy by a hundred times, which is what we were really going after: reducing that ADC bottleneck. To dive a little deeper, here's what it looks like: pixel data comes in, and we buffer it in analog memory. We do convolutional operations, which are multiply-accumulates in circuits, vertically and horizontally. We do max pooling, which is a winner-takes-all circuit. Then we cycle the result back into the analog memory buffer before doing more operations. So we're able to keep the data in the analog domain through multiple layers of these neural networks before we finally send it through the ADC, quantizing the numbers so we can send them over the system bus to the digital system. This allows us to have a programmable kernel and have that [indiscernible] for use, but we also have to be careful about the complexity of each of these modules. So we structure this as a column-parallel architecture, streaming the pixels in so that we have good data-local access to all the pixels needed by these operations. To zoom in and explain that a little better: for the vertical weighted average, all we need to do is capture the pixels as they come in and buffer them temporally, which gives us vertical access to those pixels; then we apply the weights and we're good. For horizontal access, we need column interconnects to neighboring columns, which let us access those pixel values as well. Now we have patch-based access, we can apply the weights and do the multiply-accumulate, and you can imagine that it works very similarly for the max pooling operation. The point is that with this topology, we're able to keep the complexity local to the modules, so we can just copy these modules across, making the analog design much simpler.
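As a software analogy for the column-parallel dataflow (not a circuit model), the sketch below streams an image in one row at a time. A small line buffer holding the last k rows plays the role of the per-column temporal buffer that provides vertical access, and reading the k-1 neighboring columns stands in for the column interconnects that provide horizontal access. It assumes stride 1, no padding, and a 2x2 max pool, and like most ConvNet frameworks it computes cross-correlation, which is what ConvNet "convolution" usually means. A direct patch-based implementation is included to check that streaming gives the same answer.

```python
from collections import deque
from typing import Deque, Iterable, List

Matrix = List[List[float]]


def direct_conv(img: Matrix, kernel: Matrix) -> Matrix:
    """Reference: apply the kernel to every k x k patch (valid positions only)."""
    kh, kw = len(kernel), len(kernel[0])
    h, w = len(img), len(img[0])
    return [[sum(kernel[i][j] * img[y + i][x + j] for i in range(kh) for j in range(kw))
             for x in range(w - kw + 1)]
            for y in range(h - kh + 1)]


def streaming_conv(rows: Iterable[List[float]], kernel: Matrix) -> Matrix:
    """Same result, but rows arrive one at a time as from a sensor readout."""
    kh, kw = len(kernel), len(kernel[0])
    line_buffer: Deque[List[float]] = deque(maxlen=kh)  # vertical access
    out: Matrix = []
    for row in rows:
        line_buffer.append(row)
        if len(line_buffer) < kh:
            continue  # not enough rows buffered yet
        out_row = []
        for x in range(len(row) - kw + 1):
            # Column x reads its own buffered values plus kw-1 neighbors (horizontal access).
            acc = 0.0
            for i in range(kh):
                for j in range(kw):
                    acc += kernel[i][j] * line_buffer[i][x + j]
            out_row.append(acc)
        out.append(out_row)
    return out


def max_pool_2x2(fmap: Matrix) -> Matrix:
    """Winner-takes-all over non-overlapping 2 x 2 windows."""
    return [[max(fmap[y][x], fmap[y][x + 1], fmap[y + 1][x], fmap[y + 1][x + 1])
             for x in range(0, len(fmap[0]) - 1, 2)]
            for y in range(0, len(fmap) - 1, 2)]


image = [[float((3 * y + x) % 7) for x in range(6)] for y in range(5)]
edge_kernel = [[1.0, 0.0, -1.0], [2.0, 0.0, -2.0], [1.0, 0.0, -1.0]]
features = streaming_conv(iter(image), edge_kernel)
assert features == direct_conv(image, edge_kernel)
pooled = max_pool_2x2(features)
```

Only the last k rows ever need to be held, which is why streaming pairs naturally with a small analog buffer per column.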

>> [Indiscernible], you mean it's not including doing all this stuff.

>> Robert LiKamWa: It doesn't include that [indiscernible] energy.

>> Okay. And the trick there was what again? To release --

>> Robert LiKamWa: We're reducing the samples that we're sending through the ADC.

>> I see. You're observing that you can be quite noisy.

>> Robert LiKamWa: Yes, exactly.

>> So is the output of the analog stage sort of binary, like whether we're going to drop this frame or not?

>> Robert LiKamWa: No. Actually, the output of the analog stage is basically a half-processed ConvNet. At some point in the ConvNet execution, you cut it off and send that out as your analog output, and then it can be processed further in the digital domain. Although what you mention is interesting: if the analog stage had enough knowledge to throw away a frame, that would also be very interesting. Then you wouldn't have to send it through the ADC at all. Moving forward, that's actually something I want to consider as well, and I'll get to that too.

>> So do you have numbers to show accuracy?

>> Robert LiKamWa: Yes, I do. Okay. All right. So the other problem, beyond complexity, is noise in analog circuits.

There's quantization noise, depending on the number of bits that you read out, and we can use a SAR ADC to choose the number of bits that we want to read out. There's also thermal noise. Every time we process on a capacitor, every time you read from or write to that capacitor, there's going to be that kT/C thermal noise. We can trade that off by having programmable capacitance, using charge sharing among multiple capacitors to provide a simulated capacitance that's lower than what our design node would otherwise allow. So even though we're in a 180-nanometer CMOS process, we can have smaller capacitance by sharing the charge among parallel capacitors, reducing the capacitance and saving energy there as well. We laid out some of these modules in Cadence to estimate the energy consumption and the noise, including estimated parasitic noise, in these analog circuits. And we used Caffe, a convolutional neural network framework, and mapped the noise from Cadence into Caffe, both the processing noise and the quantization noise, inserting noise layers into Caffe. We used GoogLeNet as our candidate architecture, because it was the state of the art for recognizing objects in images when we started this work in 2014. We ran GoogLeNet at different depths: if we did one layer of convolution and max pooling and then read that out of RedEye, it consumed this much readout energy; likewise for 2, 3, 4, and 5 layers of convolution and max pooling. Compare that to an image sensor, which, with state-of-the-art ADCs doing a ten-bit readout, would consume one millijoule per frame. We can save a lot of power by running it through RedEye instead. Sharrod.
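Conceptually, noise layers of this kind perturb activations the way the analog circuit would. The Python sketch below is not Caffe code, and its function names are hypothetical: it adds Gaussian noise scaled to a target SNR (24 dB is the figure quoted earlier for ConvNets) and applies a uniform b-bit quantizer as a stand-in for a SAR ADC configured to read out b bits.

```python
import math
import random
from typing import List, Sequence


def add_noise_at_snr(signal: Sequence[float], snr_db: float,
                     rng: random.Random) -> List[float]:
    """Add zero-mean Gaussian noise whose power sits snr_db below the signal power."""
    signal_power = sum(s * s for s in signal) / len(signal)
    noise_std = math.sqrt(signal_power / 10 ** (snr_db / 10))
    return [s + rng.gauss(0.0, noise_std) for s in signal]


def quantize(signal: Sequence[float], bits: int, full_scale: float) -> List[float]:
    """Uniform quantizer over [-full_scale, full_scale]; out-of-range values clip."""
    levels = 2 ** bits
    step = 2 * full_scale / levels
    out = []
    for s in signal:
        code = math.floor((s + full_scale) / step)
        code = min(max(code, 0), levels - 1)          # clip to the ADC's code range
        out.append(-full_scale + (code + 0.5) * step)  # reconstruct at bin center
    return out


def measured_snr_db(clean: Sequence[float], noisy: Sequence[float]) -> float:
    signal_energy = sum(c * c for c in clean)
    error_energy = sum((n - c) ** 2 for c, n in zip(clean, noisy))
    return 10 * math.log10(signal_energy / error_energy)


rng = random.Random(0)
activations = [rng.uniform(-0.9, 0.9) for _ in range(4096)]
noisy = add_noise_at_snr(activations, 24.0, rng)
readout = quantize(noisy, bits=8, full_scale=1.0)
print(f"after noise: {measured_snr_db(activations, noisy):.1f} dB, "
      f"after 8-bit readout: {measured_snr_db(activations, readout):.1f} dB")
```

Running a trained network on such perturbed activations at several SNR and bit-width settings is one way to map out the accuracy-versus-noise curve before committing to circuit parameters.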

>> So this is all with simulation, right? So how close do you think this will be to reality?

>> Robert LiKamWa: Good question. I'll get there.

>> [Indiscernible] the output of RedEye, essentially, as you said, [indiscernible]. Now, many apps can potentially use the raw image sensor data in many different ways.

>> Robert LiKamWa: Yes.

>> But there will be some apps that may not be able to use this.

>> Robert LiKamWa: That's right.

>> Right? I mean, it's a [indiscernible]: pushing more and more functionality down into the hardware limits, as a result, the generality of the apps you can write on top.

>> Robert LiKamWa: Sure. No, no, that's absolutely right. What I actually envision for RedEye is that it would occupy one layer of a stack, because we're getting into 3D-integrated sensors now. One layer of this stack would be for full pixel array readout, another layer could be something like RedEye, and another layer could be a different kind of vision processing element altogether. With these different layers, you could pick and choose which kind of output you actually want. The point is that we want flexibility. If we only want ConvNet output, we should be able to use these types of modules. David.

>> So as you increase depth, it's not always a decreasing sort of energy [indiscernible]?

>> Robert LiKamWa: That's right.

>> And so I guess that has to do with how well your pixel reductions are working, right? So is it the case with convolutional neural networks that you're always going to be able to reduce after every stage?

>> Robert LiKamWa: No. Okay. David's question is whether ConvNets always allow you to reduce at each stage. No, and in fact, this is just GoogLeNet off the shelf, not redesigned for RedEye, and you can see, as David is pointing out, that it dips and then goes back up, because there are actually more samples at the third layer than at the second layer. So what I would like to do is design a ConvNet that understands the analog architecture, which would force it to reduce the number of samples being read out. Now, ConvNets usually do get smaller and smaller in sample size as you go deeper, because they abstract more and more as they sparsify the data deeper in the network.
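The dip-then-rise pattern follows from how the number of values to read out changes layer by layer: spatial downsampling shrinks it, while adding channels grows it. The sketch below applies the standard output-size formula for convolution and pooling, floor((n + 2p - k) / s) + 1, to a hypothetical layer stack loosely shaped like the first stages of an ImageNet-style ConvNet with a 227 by 227 RGB input; the layer parameters are illustrative and are not GoogLeNet's exact configuration.

```python
from typing import List, Optional, Tuple

# (name, kernel, stride, padding, output channels or None to keep channels)
Layer = Tuple[str, int, int, int, Optional[int]]


def out_size(n: int, kernel: int, stride: int, pad: int) -> int:
    """Spatial output size of a convolution or pooling window."""
    return (n + 2 * pad - kernel) // stride + 1


def samples_per_stage(h: int, w: int, c: int, layers: List[Layer]) -> List[Tuple[str, int]]:
    """Number of values needing an ADC conversion if read out after each stage."""
    counts = [("input", h * w * c)]
    for name, k, s, p, out_c in layers:
        h, w = out_size(h, k, s, p), out_size(w, k, s, p)
        c = out_c if out_c is not None else c
        counts.append((name, h * w * c))
    return counts


hypothetical_stack: List[Layer] = [
    ("conv1 7x7/2, 64 ch", 7, 2, 3, 64),
    ("pool1 3x3/2", 3, 2, 0, None),
    ("conv2 3x3/1, 192 ch", 3, 1, 1, 192),
    ("pool2 3x3/2", 3, 2, 0, None),
]
for name, n in samples_per_stage(227, 227, 3, hypothetical_stack):
    print(f"{name:22s} {n:>9,d}")
```

In this made-up stack the readout volume jumps after each convolution and falls after each pooling stage, so where the network is cut off matters; a ConvNet designed with the analog readout in mind could choose channel counts to keep every stage small.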

>> So when you said depth, are you including both the layers? When you said two, it's the first two?

>> Robert LiKamWa: Yes. And in the analog domain before even --

>> Why is that less than just the first one?

>> Robert LiKamWa: Oh, that's the readout energy. This is just the readout energy. The processing energy also comes into play here, and this is a log axis, so don't just add these up in your head, because they don't stack. Going deeper and deeper comes at the expense of doing more processing in the analog domain. So you can see that if you wanted to do something like cloud offloading, you should just do one layer in RedEye, read that out, and send it off to the cloud to do the rest.

But actually, these deeper layers, despite incurring that additional processing, are still very useful, because you're reducing the readout, and that processing is much more efficient than even the state-of-the-art work we see at ISCA, for example, ShiDianNao, which is the state of the art for ConvNet acceleration in digital hardware. We can provide a factor of two savings by doing the processing in the analog domain for the same task. At the same time, where they have to pair an image sensor with their accelerator, we are also the image sensor, so we're reducing the readout too, and in effect improving efficiency by three times overall for readout and processing. So RedEye works very, very well in simulation. But everything we've done has been at the architecture level. To fully realize this and understand how far simulation is from physical truth, we have to actually lay it out. So we are pushing for a tape-out. We are going to do some early test structures on a very small part of it, a seven-by-seven structure, and then eventually scale that out, closer and closer to the 227 by 227 needed for GoogLeNet, and potentially even further for full image sensors. There are some circuit investigations we have to go through while laying these things out in Cadence to actually make this happen. We have to look at the analog memory: it has a less dense footprint than digital memory, so we have to care about it for efficient streaming in spite of the parasitic effects there. And because we're dealing with very, very small capacitances, we have to worry about signal integrity effects too. It's very sensitive to, for example, device mismatch or PVT variation, so we have to simulate all these effects as well.

But we're working very hard toward realizing this as an analog design, through the layout and then the tape-out. All together, that provides our RedEye sensor architecture, which provides programmable analog ConvNet execution, with modules for design scalability because we've localized the complexity, and tunable noise so we can trade off accuracy and efficiency throughout the processing, reducing readout energy by a hundred times. This morning I was informed it was accepted to ISCA, so I'm very excited about that. So we've come a long way, starting from two watts: we reduced it by a factor of three using the Starfish library, another factor of three with sensor management, and another factor of two with our analog vision processing. We've come a long way by looking at the application layer, the operating system layer, and the sensor layer itself, and I haven't done this alone. I've done this with my colleagues at Rice University, with great help from Victor, Matthai, and Bodhi from Microsoft Research all along the way, who gave me very useful intuition toward this efficient vision. Now, we're not there yet, right? We're at 80 milliwatts, compared to the goal of 10 milliwatts, so we have some way to go, but I'm confident that with integration around this hardware-software interface, we can really get there. So that takes me directly into my future directions.

>> So [indiscernible]. The one question I have is: how was the accuracy? Was it --

>> Robert LiKamWa: Oh, the accuracy. So, yeah. Sorry. I have the numbers.

You can reduce it: we can go down to 40 dB of signal-to-noise ratio with our architecture, which is actually the limit to which we can reduce it given our capacitance at our design node, and still maintain exactly the same accuracy as a noise-free GoogLeNet running on a perfect architecture. So in fact, we're getting a hundred percent of the original accuracy, even after doing things in the analog domain and accepting noise. Accuracy, by the way, is top-five task accuracy; that's what we're using as our benchmark. It works quite well, and I'll share the paper, especially now that it's moving forward.

Okay. So we want to explore this hardware/software boundary further for efficiency. ConvNets are a great start. RedEye is a great start, but we need to build flexibility to shift more into that analog domain. As Peter mentioned, I think some kind of control flow in the analog domain would be interesting, so you could discard data before even sending it out through that ADC. We need to be able to do that, but we also then need to deal with the unreliable analog memory, because of its decay and imprecision. This creates challenges not only at the microarchitecture level but, more interestingly to me, at the operating system and programming language level as well. You need to build compilers and runtime support that understand the analog context. If you're going to do something like a subroutine, you're going to need to maintain context. Or if you're going to have multiple applications calling into this thing, you're going to need context switches. And you have to understand that this analog context lives in a memory that will decay. So what does that actually mean for your execution? That's something I really want to investigate as well.
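The concern about decaying analog context can be made concrete with a small model. The sketch below is an illustration only, assuming a saved analog value leaks exponentially from a capacitor, V(t) = V0 * exp(-t / tau). The time constant, the error budget, and the names `decayed_value`, `max_hold_time`, and `can_resume_context` are hypothetical and not part of RedEye. It shows how a runtime might decide whether a context saved during a context switch can still be resumed or has to be recomputed.

```python
import math

# Hypothetical model: a value stored on a leaky capacitor decays as
# V(t) = V0 * exp(-t / tau). Parameters are illustrative, not RedEye's.


def decayed_value(v0: float, elapsed_s: float, tau_s: float) -> float:
    """Return the stored value after `elapsed_s` seconds of leakage."""
    return v0 * math.exp(-elapsed_s / tau_s)


def max_hold_time(tau_s: float, rel_tolerance: float) -> float:
    """Longest hold time before the relative error exceeds `rel_tolerance`.

    Relative error after t seconds is 1 - exp(-t / tau); solving
    1 - exp(-t / tau) <= tol gives t <= -tau * ln(1 - tol).
    """
    if not 0.0 < rel_tolerance < 1.0:
        raise ValueError("rel_tolerance must be in (0, 1)")
    return -tau_s * math.log1p(-rel_tolerance)


def can_resume_context(elapsed_s: float, tau_s: float, rel_tolerance: float) -> bool:
    """Resume a saved analog context only if it is still accurate enough;
    otherwise the runtime would have to recompute it from the input."""
    return elapsed_s <= max_hold_time(tau_s, rel_tolerance)


if __name__ == "__main__":
    tau = 1e-3  # assumed 1 ms leakage time constant
    tol = 0.01  # assumed 1% error budget
    print(f"max hold time: {max_hold_time(tau, tol) * 1e6:.2f} us")
    print(can_resume_context(5e-6, tau, tol), can_resume_context(50e-6, tau, tol))
```

With the assumed 1 ms time constant and a 1% budget, a saved context is usable for only about 10 microseconds, which hints at why a scheduler for analog hardware would need to treat context lifetime as a first-class constraint.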
And then developer tools: now that things are being computed approximately, developers need to be able to understand that. There's a growing body of literature on approximate computing, and I want to build on it with this idea of analog approximate computing, so that developers can understand the accuracy and energy tradeoffs while they're designing their applications. And I'd like to point out for a second that the ADC is fundamental not only to image sensors but to all sensors, because they all operate in the real world, which is the analog domain. So operating in the analog domain, not only for image sensors but also for accelerometers, microphones, and everything else, and maybe even fusing those signals while they're still analog before you actually do the readout, could become very useful as our energy goals become tighter and tighter for the Internet of Things and for energy harvesting.
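The 40 dB figure from the accuracy answer is, presumably, a signal-to-noise ratio, and tooling for exploring accuracy/energy tradeoffs would need some way to emulate a given noise level. The sketch below converts a target SNR in decibels into a Gaussian noise level and injects it into a signal, so a developer could rerun a model at, say, 40 dB and compare accuracy. It assumes additive white Gaussian noise with power measured as the mean of the squared samples; the function names are hypothetical, and this is not RedEye's actual noise model.

```python
import math
import random

# Sketch: inject additive white Gaussian noise at a target SNR (in dB).
# SNR_dB = 10 * log10(P_signal / P_noise), with power = mean of squared samples.


def signal_power(samples):
    return sum(x * x for x in samples) / len(samples)


def noise_std_for_snr(samples, snr_db):
    """Standard deviation of Gaussian noise that yields `snr_db` for these samples."""
    noise_power = signal_power(samples) / (10.0 ** (snr_db / 10.0))
    return math.sqrt(noise_power)


def add_noise_at_snr(samples, snr_db, seed=0):
    """Return a noisy copy of `samples` at the requested SNR (seeded for repeatability)."""
    rng = random.Random(seed)
    sigma = noise_std_for_snr(samples, snr_db)
    return [x + rng.gauss(0.0, sigma) for x in samples]


def measured_snr_db(clean, noisy):
    """Empirical SNR of `noisy` relative to `clean`."""
    error = [n - c for c, n in zip(clean, noisy)]
    return 10.0 * math.log10(signal_power(clean) / signal_power(error))


if __name__ == "__main__":
    clean = [math.sin(2 * math.pi * k / 64) for k in range(10_000)]
    noisy = add_noise_at_snr(clean, snr_db=40.0)
    print(f"sigma = {noise_std_for_snr(clean, 40.0):.5f}")
    print(f"measured SNR = {measured_snr_db(clean, noisy):.2f} dB")
```

Sweeping `snr_db` downward and re-measuring task accuracy at each step is one straightforward way to locate the point where accuracy starts to fall off.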

So moving beyond just imaging, I want to look at all sensors as well. But that's only the first leg of the work. I also want to look into the other elephant in the room, which is privacy: having these always-on imagers is going to threaten the privacy not only of the user but also of the subjects in the room. And so, just as we set an ambitious goal of 10 milliwatts for energy efficiency, I want to ask an audacious question for continuous mobile vision privacy as well. What would it take to use continuous mobile vision everywhere, including in the most private places, including in the bathroom? How would we ever be okay with this?

Well, there's actually a lot of work here in security and privacy research on developing policies, access control policies, to support these ideas. You can see your devices today asking you about access control at the device level: do you want to allow Facebook to use your camera or your microphone? Sure. Do we know what it's actually doing with it? Not really, and that's why security and privacy researchers have been working to give finer-grained access to features extracted from the raw data before it's provided to the application.

There's a nice principle of least privilege, supported by these mechanisms and policies.

But the question is, because you have to do this processing earlier in the stack, how do we provide that privacy efficiently? With feature-based access control, there's going to be a lot of movement of secure data, and that's going to be very inefficient. So I argue that we move it deep down into the sensor itself, even into the analog domain, because there we can provide these access control mechanisms very efficiently, before the data even hits the system bus. That in-sensor processing will give us efficient, performant privacy and also opens the door to other interesting things that are not just security related but also privacy related.
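To make feature-based access control enforced at the sensor boundary concrete, here is a minimal sketch. It assumes the sensor produces a dictionary of named outputs per frame (the raw frame plus extracted features) and that a per-application grant list decides which outputs may leave the sensor. The class and feature names are hypothetical, and the analog, in-sensor enforcement argued for in the talk would look quite different in practice.

```python
from dataclasses import dataclass, field
from typing import Any, Dict, Set

RAW_FRAME = "raw_frame"  # hypothetical name for the unprocessed image


@dataclass
class InSensorPolicy:
    """Per-application allow-list of outputs the sensor may release (deny by default)."""

    grants: Dict[str, Set[str]] = field(default_factory=dict)

    def allow(self, app: str, output_name: str) -> None:
        self.grants.setdefault(app, set()).add(output_name)

    def release(self, app: str, outputs: Dict[str, Any]) -> Dict[str, Any]:
        """Filter the sensor's outputs before anything leaves the sensor.

        Anything not explicitly granted, including the raw frame, is dropped,
        following the principle of least privilege.
        """
        permitted = self.grants.get(app, set())
        return {name: value for name, value in outputs.items() if name in permitted}


if __name__ == "__main__":
    policy = InSensorPolicy()
    policy.allow("gesture_app", "hand_gesture")

    frame_outputs = {
        RAW_FRAME: [[0, 12, 255], [7, 3, 90]],  # stand-in pixel data
        "hand_gesture": "swipe_left",
        "face_count": 2,
    }
    print(policy.release("gesture_app", frame_outputs))
    print(policy.release("unknown_app", frame_outputs))
```

Deny-by-default is the design choice that matters here: an application that was never granted the raw frame simply never receives pixels, no matter what it requests.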

>> I think the right thing to do here is what Steffan mentioned a long time ago: he wants to wear a device around his neck so that if any camera sees it, the camera won't take his picture. Then you could use it in the bathroom.

>> Robert LiKamWa: That's actually what some of these works are proposing, yeah. It's kind of interesting. There's world-driven access control, proposed by Franziska Roesner next door at the University of Washington, for example. So these are the types of things that we want to integrate lower and lower into the stack, maybe not necessarily in the sensor but at least at those lower levels. Those are other things I'd like to investigate for sure.

But at the sensor, you can do interesting things. For example, you can degrade your image or your features so that what you provide to the application cannot be reconstructed into images. So you would provide vision features that are corrupted enough. What does "corrupted enough" actually mean? That's something I need to study more deeply. But it's interesting because the more processing you do in the analog domain before you provide those features, the less able anyone will naturally be to reconstruct the original image, unless they have a very good imagination.
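One simple way to make features "corrupted enough" is to add noise and then coarsely quantize them before release. The sketch below does exactly that, assuming features normalized to [0, 1]. The bit depth and noise level are hypothetical knobs, and discarding information this way does not by itself prove that reconstruction is impossible, which is the open question raised in the talk.

```python
import random
from typing import List, Sequence


def degrade_features(
    features: Sequence[float],
    bits: int,
    noise_std: float,
    lo: float = 0.0,
    hi: float = 1.0,
    seed: int = 0,
) -> List[float]:
    """Add Gaussian noise, clip to [lo, hi], then quantize to 2**bits levels."""
    if bits < 1:
        raise ValueError("bits must be >= 1")
    rng = random.Random(seed)
    steps = 2 ** bits - 1  # number of intervals between quantization levels
    degraded = []
    for x in features:
        noisy = x + rng.gauss(0.0, noise_std)
        clipped = min(max(noisy, lo), hi)
        level = round((clipped - lo) / (hi - lo) * steps)
        degraded.append(lo + level * (hi - lo) / steps)
    return degraded


if __name__ == "__main__":
    feats = [0.05, 0.32, 0.47, 0.66, 0.91]
    print(degrade_features(feats, bits=2, noise_std=0.0))
    print(degrade_features(feats, bits=1, noise_std=0.1, seed=42))
```

Lowering `bits` or raising `noise_std` removes more detail; the open research question is how far these knobs can be pushed before the task itself, rather than just reconstruction, starts to fail.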

>> [Indiscernible]?

>> Robert LiKamWa: Partially. Victor is on my thesis committee.

[Laughter]

>> [Indiscernible].

>> Robert LiKamWa: Part of it will be [indiscernible] okay. But I think the interesting questions moving forward, beyond the work that will be in my Ph.D., are about integrating it with the rest of the environment: the trusted execution environments we're seeing with ARM TrustZone or Intel SGX, which provide a narrow, monitored view of secure data operations.

Well, we want to provide a narrow, monitored view of sensor data access as well. Where is your sensor data going? What's actually happening with it before it reaches the operating system and applications? When executing secure code in the sensor, we need to keep track of what is potentially going to be revealed, and where the threats are, whether from application developers or from external services if we're using the cloud, for example. All together, that software/hardware boundary is a ripe, fertile region for me to explore further in continuous mobile vision. And in doing so, I'm going to create a platform where I can then explore different application spaces with continuous mobile vision.

For example, a natural place to go is natural user interfaces, where we can fuse the physical and digital worlds with vision, so we can have gestural interfaces, for example, or object-based recognition by picking things up. A personal calling of mine is to provide assistance to those who are vision impaired or memory impaired. I want to work with health professionals to understand the needs of these individuals so that they can have a device that helps them in their daily lives, and help those with memory impairments, for example Alzheimer's, have more graceful social interactions by reminding them that this is your son or this is your daughter. One of the future thrusts of personal computing, in my opinion, is lightweight drones that could, for example, take your pictures. Providing lightweight eyes for these lightweight drones would be a very interesting thing to look into, not only for personal computing but also for search and rescue operations and military services. Providing lightweight, efficient vision for these drones is a critical element in making this a reality. Moving forward, I'm excited about the opportunity to work with people across many different domains, in the different application and support layers as well as at the hardware/software boundary. This circle represents where I fall with my personal interests, but you can see it spans all of these domains. Moving forward, my target is to establish a new wave of research to fully support and utilize continuous mobile vision, integrating our devices with our real-world environment and ultimately enhancing our own lives. Thank you.

[Applause]

Lenin Ravindranath Sivalingam: Questions?

>> Robert LiKamWa: Steffan.

>> I have a question. So the thing that strikes me, and actually I think you're right on: part of your work was [indiscernible] this observation that corrupting images does not actually degrade the accuracy of vision algorithms. And I agree with that, because I've noticed it myself too. But explain to me why that's the case. What's going on? For example, with humans, the worse our vision, the less accurate we are. In fact, if we want to put humans on tasks that require high accuracy, we test them for good vision.

>> Robert LiKamWa: Yeah.

>> So what's going on with these algorithms that, when we corrupt the images, they still perform the same? What's happening? Why is that? Help me sort of [indiscernible].

>> Robert LiKamWa: So actually, the visual acuity thing is very interesting, because we humans are very inaccurate with our vision. Even though we think we see a lot at one time, we have a very narrow, focused view of what's going on. In fact, if you look at the data path (I've watched some interesting neuroscience material on this), the path from our eyes to our brain carries much less data than what gets processed in the cortex. So that's interesting in terms of the amount of data that's shipped from our eyes to our brain. And there's early processing done in the lateral geniculate nucleus, which sits between the eyes and the visual cortex. But what you're asking is also interesting, in that if you impair the eye a little bit, if we blur things, for example, then we become unable to recognize different objects.

So I think for some tasks, it's clear that cameras will need a very, very high-resolution image, for example to detect facial expressions. But for coarse things, like, without my glasses, I can still tell that this is a water bottle. So that is surely going to be the case with vision as well. The interesting thing with vision neural networks, and I'm looking into this with a bunch of neural network experts, is that they build a sparse representation of the data, and that's actually what helps them process so quickly: they try to abstract as much as possible in order to generate high-level features that can then be used to reconstruct things, and in generating those high-level features, you don't need as dense or as accurate a pixel array.

>> So just to understand, so you think there are in fact or there will be applications for which [indiscernible] images will lead to degrading --

>> Robert LiKamWa: Absolutely, yes. I think there's going to be a -- yeah, nothing is absolute, so you have to provide for all cases. But the point of all of my work, actually, is that we should provision for the task at hand, not for the best case, right? And then optimize for the common case, so that we work with degraded data at degraded performance first, before we need to trigger these high-quality modes. Yes, back there.

>> So actually, a follow-up on that: when you talk of vision here, you're abstracting it away. So really, when you look at GoogLeNet, it's classifying an image. Can you remind us how many categories it's trying to classify?