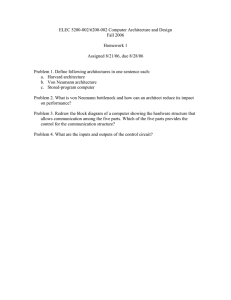

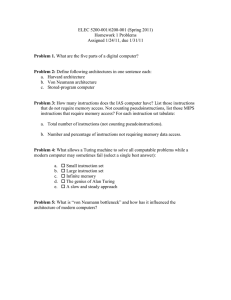

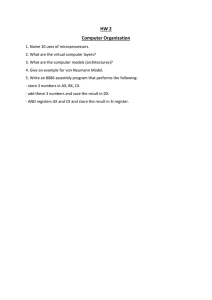

>> Amy Draves: Good afternoon. My name is Amy Draves, and I'm here to introduce George Dyson, who is joining us as part of the Microsoft Research visiting speaker series. George is here today to discuss his book, Turing's Cathedral: The Origins of the Digital Universe. Over 60 years ago, a group of eccentric geniuses gathered to create a universal machine, an idea that had been put forth by Alan Turing. The computer they built gave rise to the digital universe we know today. George Dyson is a science historian as well as a boat designer and builder. He is the author of Baidarka, Project Orion, and Darwin Among the Machines. Please join me in giving him a very warm welcome.

>> George Dyson: Thank you. It's great to be here once again. Can we dim the lights, if there is a way? I'm trying to remember how many times I've been here, for different subjects and different things. I'm here at the end of a -- I was here in Seattle three weeks ago and have been all over the place. I think the high point, or the low point, of my trip was leaving New York City a couple of days ago on the train to Princeton. I had to be in Princeton at 2:00 for a radio interview with NPR in Boston, and I missed the train. I called the publicist and she said, sorry, you've got to do the interview from the train on your cell phone. As you'll see, this story is about the [indiscernible] project to get this very fast, first five-kilobyte computer running, primarily to solve the feasibility question of the hydrogen bomb. So I'm on the train, doing this interview on my cell phone, and that's what the interviewer starts asking me about: why did they need the computer to solve this hydrogen bomb problem? I start trying to explain. I'm standing between the cars, and then I notice everybody in the car is looking at me, like they've probably called the New Jersey Transit police. And I totally lost it.
It was really... Otherwise, everything has gone well. So I think I first came here to Microsoft in 1982 with my sister, Esther. Does anybody here know her? In the old days, 80 percent of the people in the room would have known her. She was a good friend of Bill's in the old days and used to stay at his house and swim in his pool; in a way, she helped him get started. But she doesn't drive, and at that time I lived in Vancouver, so whenever she came here I would be her driver, and I got to see the inside of what was going on here. I think you had 80 people then. Everyone always says, because my father is Freeman Dyson, how hard it must have been to be Freeman's boy and live in that shadow. It was much harder to be Esther's little brother. That's Esther at school. She was so good, and she was a year and a half older, so a year and a half later I would come along and just always be a disappointment to her teachers. And there we are playing a scene from Casablanca. I think there she's 12 and I'm 10. And now she starts writing a newsletter. This is like a blog: 1967, her first newsletter. She's just going to write what she -- I think she has 40 subscribers, or followers. That's Esther's. So that's April of 1967. Just a few months later, she goes off to Harvard at age 15. She's working on the Crimson, the Harvard newspaper that all those famous people once worked on. And that's where she met... well, as you know, a lot of what you have here came from Harvard. Then she got hired by Forbes, got bored with Forbes, and moved over to the other side, to stock analysis. So now it's 1981, and they ask her to report on this new thing called software: is it going to be worth investing in, for Oppenheimer? What is software? Software turns the computer from an engine, raw computer power, into a machine: a computer system that can accomplish something useful.
So she sees that. Then she takes over this newsletter from Ben Rosen, who started Compaq. It started out being just about the semiconductor industry, about what chips were hot, but it turned into really the first newsletter about the personal computer industry. So now they send her to visit Microsoft, in October 1982: "A visit with Microsoft, a nice little software company grows up." You see Microsoft's really moving up. It's now fourth, behind Tandy, VisiCorp, and MicroPro. It's got $11 million in revenue for the year, and it's pulled ahead of Digital Research and Ashton-Tate, who the year before were still ahead. And then she gives her analysis: the company has just released its first applications package and is starting to think about strategy and business plans. This is sort of the beginning of the end. And Microsoft has more than just money. It has about 180 people, including 90 programmers. That was last week. How many do you have now? Anybody know? Many thousands. And it's no longer going to be a language and systems software playground for creative programmers. We suspect that the company might move on to direct end-user sales; it hasn't made this jump yet. There's no way Microsoft can become the success it looks to be without abandoning some of its charm and becoming more structured, more organized, and more market-oriented. It's always a little sad to see a company grow up, but the result can be fairly terrific if the process is handled well. So she called the whole thing, and the rest is history. Those were the big players at the time. Esther started doing a conference, and she had a marvelous ability to bring people together. It was like a high school dance, with guys like Bill on one side of the room and the Wall Street bankers on the other, and Esther got the bankers to invest in the company. So there's Dan Fylstra. Does anybody remember him?
He wrote VisiCalc, or rather he was the co-author, with some dispute, of VisiCalc, which was really the first spreadsheet. And Bill, and Gary Kildall. Anybody remember Gary Kildall? Yeah, Gary Kildall wrote DR-DOS, which in a way became MS-DOS. And John Sculley: thumbs down for John Sculley. John Sculley was brought in to handle Steve Jobs, who was a little outlandish at that time. In fact, this year they were still really buddies. John and Steve were working together, and then, of course, they had a falling out and Steve left. So here we are, all these years later. At that time I wasn't living in a tree house anywhere, but I had lived in a tree house up in Canada. I was living on the ground but watching all this by reading Esther's newsletters. And it drove me to write this book about the long-term evolution of computing. I went to Esther's conferences and was very disappointed. I thought I was going to meet all these people who really thought about the fundamental importance of computing in a serious way, and all they did was argue about whether we should have five-inch floppy disks or three-inch floppy disks, or which file structure would win, or whether to use dBase III or dBase IV. So my revenge was to write this deep, in a way too deep, serious book about the history of computing going back to the 17th century. And one of your people here, Charles Simonyi, who for a while was chief architect, is Hungarian and was actually the person who brought Esther to Microsoft. When we first came here, we went to a Hungarian restaurant somewhere in Bellevue with Charles. He's a huge fan, as all Hungarians are, of Johnny Von Neumann, whose three-dimensional holographic bust is in Budapest, from his 100th birthday.
So Charles was very taken with what I wrote in Darwin Among the Machines about Johnny Von Neumann, and ten years ago he twisted the arms of the people at the Institute for Advanced Study in Princeton to allow me to go look for relics of this project that Von Neumann had done. And that year was very successful. Not only was there lots of stuff in the basement of the Institute for Advanced Study; I found other basements that had stuff in them. Invariably, the best stuff is in a filing cabinet like this one, next to the water heater. The dark, interesting period to me in the origins of the digital universe runs from about 1937 or 1938, before World War II, until about 1957. After Sputnik, everything was very well recorded. But there's a period there where very interesting stuff happened and we don't know exactly what happened. In the bottom drawer of that filing cabinet was all the correspondence back and forth between Johnny Von Neumann and his second wife, Klara, whom he married in 1938, when she came to America. He's in Princeton; she's working, doing the programming for the [indiscernible] at the Ballistic Research Laboratory. They were always writing back and forth, about all the problems in their marriage and the problems in programming these early computational problems. So it was a wealth of material, all in the original envelopes, dated and postmarked: from Los Alamos to Klara in Washington, D.C. In fact, some of it was from when they were out here in Seattle. If you read this book, that's what makes it a living book and a story, because there's that firsthand account, like having the emails: what people were really thinking day to day, as all this exciting stuff was happening, before they could really even see what was happening, because they were in the middle of it.
So I work in a former tavern up in Bellingham, my kayak workshop, and it's a beautiful place to lay out the chapters that became a book and see what's missing. I took ten years to write this book, which is an almost incomprehensibly long time. To put it in perspective, the group working with John Von Neumann conceived, designed, built, programmed, and debugged the machine, solved the important problems, and finished the project in less time than it took me to write about it. But finally, it became a manuscript. My subtitle was A Creation Myth, to remind people that this was not necessarily the truth, but it was the story of how this digital universe came into being. The publishers couldn't have "creation" in the title, so now it's The Origins of the Digital Universe. If you're a writer, there are three things you live in fear of from your publisher. The first is the letters of rejection telling you that it's a great idea, or it's a wonderful manuscript, but we can't publish it; it's not for us. If they accept your book, then several years later you get the letter that I got lots of, saying, where is the manuscript? You're late; we're going to cancel your contract. And if you make it past that hurdle and actually write the book, then another two or three years later you get a letter, an email now, that says, we've come up with a cover design and we think you will like it. And that's almost invariably not the case. It's incomprehensible how they come up with covers that so misrepresent the book you've worked on all these years. In this case, Pantheon's cover is brilliant. I didn't question a thing about it from the moment I saw it.
I just fell in love with the idea of the cover: it's an actual example of how we made that switch from analog to digital at the beginning, with punch cards and then electronics, and it puts Alan Turing on the inside. The meaning behind the title Turing's Cathedral is that this whole structure is like a cathedral where you can't really tell who built it, but somehow the fundamental mathematical ideas of Alan Turing are at the foundation of everything. So putting him on the inside cover works really well. The epigraph is the dedication to Leibniz, who in the 17th century built digital calculating machines and also invented, but did not build, a digital computer that ran with black and white marbles running down tracks that were shifted, effectively exactly the same as a shift register in a microprocessor, but with marbles and gravity instead of pulses of electrons and a voltage gradient. He reminded us it was not made for those who sell oil or sardines. It's not all about ad revenue (although maybe it is); there's something else going on that's of fundamental importance. As some of you saw while sitting here the last few minutes, the book has 80-some pictures. I'm just going to run through them, which gives you the whole story with a little more color than just the black and white in the book. The first picture is simply a view of the digital universe in 1953. It's not a mapping of bits stored somewhere else, the way what you're seeing now is mapped, through various stages, from the bits that are in solid state in my laptop. At the time, the bits were actually stored as spots of electric charge on the face of a phosphorescent oscilloscope tube. So those bits actually were the memory of the computer, and when you watched this computer work, you could actually see the bits changing state and moving around. Fundamentally different.
And then it reversed, so that we used cathode ray tubes to display memory that came from somewhere else. So that's February 1953; the machine had been running for about six months. At that point, and it's easy to remember, there were 53 kilobytes of high-speed, fully random-access memory on the entire planet, which is, what, the size of an email today with no attachments. And a lot was done with it. This particular machine had five kilobytes. This piece of paper came from another basement. Julian Bigelow was hired as chief engineer by Johnny Von Neumann, and somebody threw this piece of paper out and he saved it. At the top, it says "Orders," then "let a word (40 bd) be two orders," where bd is an abbreviation for binary digit, and "each order = C(A)": a command, C, and an address, A. Then it gives the address coordinates. That to me is a fundamental statement. As far as I can tell, that's the origin of the command line: the fact that they would have a command and an address. That was cast in stone, and that's how we still do all our basic programming. And they hadn't invented the word bit yet. There's also an entry in the journal from March 1953: "Stop at 18, 8." 18, 8 is the coordinates of the memory location where the computation stopped. That computation was being done by Nils Barricelli, a Norwegian-Italian viral geneticist who was running not simulations of evolution, but real evolution within this five-kilobyte universe. He was inoculating it with random strings of bits and allowing them to cross-breed and self-replicate and do things like that. The deal was that the bomb people from Los Alamos had first rights to the machine. They would go home at midnight or 2:00 a.m., and then Barricelli came in. That's the first night he starts letting these creatures loose inside the memory. Then the engineers come back in the morning and it's over, back to the thermonuclear hydrodynamic codes.
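Bigelow's note describes a 40-bit word holding two orders, each made of a command C and an address A. As a minimal sketch of that idea, the Python block below packs two command/address pairs into one 40-bit integer and unpacks them again. The split of each 20-bit order into an 8-bit command field and a 12-bit address field is an assumption borrowed from common textbook descriptions of the IAS machine, not something the note or the talk states, and the command codes `LOAD` and `ADD` are hypothetical.

```python
"""Sketch: two (command, address) orders packed into one 40-bit word.

Assumption: each 20-bit order = 8-bit command field + 12-bit address field.
"""

WORD_BITS = 40
ORDER_BITS = 20
COMMAND_BITS = 8                          # assumed width of the command field
ADDRESS_BITS = ORDER_BITS - COMMAND_BITS  # leaves 12 bits for the address


def pack_order(command: int, address: int) -> int:
    """Combine a command C and an address A into one 20-bit order C(A)."""
    if not 0 <= command < (1 << COMMAND_BITS):
        raise ValueError("command out of range")
    if not 0 <= address < (1 << ADDRESS_BITS):
        raise ValueError("address out of range")
    return (command << ADDRESS_BITS) | address


def unpack_order(order: int) -> tuple[int, int]:
    """Split a 20-bit order back into (command, address)."""
    return order >> ADDRESS_BITS, order & ((1 << ADDRESS_BITS) - 1)


def pack_word(left: int, right: int) -> int:
    """Let a word (40 binary digits) be two orders: left half, right half."""
    return (left << ORDER_BITS) | right


def unpack_word(word: int) -> tuple[int, int]:
    """Return the (left, right) orders stored in a 40-bit word."""
    return word >> ORDER_BITS, word & ((1 << ORDER_BITS) - 1)


# Hypothetical command codes, for illustration only.
LOAD, ADD = 0x01, 0x05

word = pack_word(pack_order(LOAD, 100), pack_order(ADD, 101))
for order in unpack_word(word):
    print(unpack_order(order))  # (1, 100), then (5, 101)
```

Keeping the command and address in fixed fields means the machine can decode any order with the same shifts and masks, which is the "command plus address" pattern the note describes.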
It's this beautiful transition between two problems they were working on at exactly the same time: one whose solution could end up destroying all life on earth, and one whose solution could end up possibly creating an entirely new form of life. Alan Turing at age 5, and Johnny Von Neumann at age 7. They grew up, Von Neumann in Budapest and Alan Turing in England, at a very similar time, between World War I and World War II. What we know Turing best for is his paper published in 1936, written in 1935 when he was 23 years old, which we remember as the proof of universal computation: that if you build one machine that can do just a small number of very simple operations, it can do anything any other digital computer can do, if you give it the proper software. A great debate has raged among modern historians over how much credit Von Neumann gave to Turing. Did he read Turing's paper or not? Were Turing's ideas important to Von Neumann? I thought I would just go look. So I went to Johnny Von Neumann's library at the Institute. It's now behind compact shelving, where you have to turn the cranks to get the shelves apart, so you pretty much know no one's been there recently. All the volumes of the Proceedings of the London Mathematical Society are there, and they're all immaculate and perfect, except the volume with Turing's paper in it. It's absolutely unbound from having been read so many times. And that's what the engineers told me. The surviving engineers I spoke with said yes, when we showed up in 1946, Johnny said, read this paper. This is what we're trying to do. We're going to build one of these machines. And such machines already existed. It's very difficult to get people not to say, oh, George is talking about the first computer. I'm not talking about the first computer.
There were lots of them; in fact, if you read the book, 11 computers are mentioned that were ahead of this one. But until then, memory was accessible only at the speed of sound: it was delay-line memory and things like that. Suddenly Von Neumann, or his engineers, figure out a way, and we start getting memory that's accessible at the speed of light, and that was a big transition. And the memory became two-dimensional. The Alan Turing model, as powerful as it is, is a one-dimensional model, where you have this not infinite, but unbounded, strip of tape, and that's where the memory is. Von Neumann had the practical implementation of that, which is two-dimensional: the two-dimensional address matrix that still governs everything today. You have a 64-bit computer; that's a 64-bit matrix. It looks to us as if computing just suddenly came out of thin air after World War II. But in England it was incubating during the war, in secret. On the British side, their lives depended on breaking the codes: the communications between the German high command and the U-boat fleet. If you've read Cryptonomicon by Stephenson, it's a fantastic portrayal of what that was like. England's survival depended on breaking those codes. Alan Turing had this idea in 1936, and only two requests for reprints of that paper came in when he published it. Suddenly it had real implications, because the machine they built, called the Colossus, of which they made 11 copies, was effectively a universal Turing machine.
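Turing's model is a single fixed machine that reads and writes one symbol at a time on a one-dimensional, unbounded tape, with all of its behavior set by a table of rules. The Python block below is a minimal illustration under that reading: `run` plays the part of the fixed machine, and the hypothetical `INCREMENT` table, which adds 1 to a binary number, plays the part of the software. It is an ordinary Turing machine simulator, not a construction of Turing's universal machine, which would read another machine's table from the tape itself.

```python
"""Sketch: a tiny Turing machine with an unbounded one-dimensional tape."""
from collections import defaultdict

BLANK = "_"


def run(program: dict, tape_input: str, state: str = "start", max_steps: int = 10_000) -> str:
    """Run a transition table until it reaches the 'halt' state.

    program maps (state, symbol) -> (symbol_to_write, move, next_state),
    where move is -1 (left) or +1 (right).
    """
    # The tape grows on demand in either direction: unbounded, not infinite.
    tape = defaultdict(lambda: BLANK, enumerate(tape_input))
    head, steps = 0, 0
    while state != "halt":
        if steps == max_steps:
            raise RuntimeError("step limit reached")
        write, move, state = program[(state, tape[head])]
        tape[head] = write
        head += move
        steps += 1
    used = [i for i, symbol in tape.items() if symbol != BLANK]
    if not used:
        return ""
    return "".join(tape[i] for i in range(min(used), max(used) + 1))


# Hypothetical example "software": add 1 to a binary number.
INCREMENT = {
    ("start", "0"): ("0", +1, "start"),   # scan right to the end of the number
    ("start", "1"): ("1", +1, "start"),
    ("start", BLANK): (BLANK, -1, "carry"),
    ("carry", "1"): ("0", -1, "carry"),   # 1 + carry = 0, keep carrying left
    ("carry", "0"): ("1", -1, "halt"),    # 0 + carry = 1, done
    ("carry", BLANK): ("1", -1, "halt"),  # ran off the left end: new leading 1
}

print(run(INCREMENT, "1011"))  # 1100
```

Changing the machine's behavior means changing only the table passed to `run`, never `run` itself.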
By changing the state of the bits in those vacuum tubes, they could imitate what they suspected might be the state of the German machine that was doing the encryption, then run through a whole lot of those permutations in a short period of time and, if all went well, break the codes within enough time to be useful. And they did. A very good argument could probably be made that England won the war, that our side won the war, because of breaking those codes. That's Alan Turing in 1946 getting on a bus to a long-distance running race; he was a long-distance runner. And Johnny Von Neumann in 1952 with the machine built in Princeton. The memory is in those canisters, with a 32-by-32-bit array in each can. There are 40 cans, so they're running 40-bit words in parallel, with one bit of each word in each separate tube. All the tubes have to work perfectly, or it doesn't work. Why did it happen in Princeton? Because Princeton is in the middle of New Jersey. That's the Delaware estuary at the top, where Philadelphia is, and the Raritan estuary leading to New York. There was an Indian footpath between the two, which started being used by people going back and forth between the growing cities of Philadelphia and New York. A settler, Henry Greenland, built a tavern in the middle, so you could stop for a beer or change your horses. That tavern grew into the town of Princeton, and then Princeton University. On the other side of the trail there was still wilderness, and a group of Quakers led by William Penn settled there for the opposite reason: they were trying to get as far away as possible from the corrupting influences of the city. They built a Quaker meeting house, and that land ultimately became the Institute for Advanced Study. You still have these opposing cultures in Princeton, where the university drinks a lot of beer and the institute drinks a lot of tea.
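The memory figures in the talk can be checked with a little arithmetic: 40 tubes, each storing a 32-by-32 array of bits, give 1,024 words of 40 bits, or 40,960 bits, which is 5,120 bytes, the machine's five kilobytes. The Python sketch below also shows a word being read as one bit from the same spot on every tube. The row-major mapping from a linear address to (row, column) and the choice of tube 0 as the most significant bit are assumptions made for illustration.

```python
"""Sketch: the memory as 40 tubes, each a 32 x 32 array of charge spots."""

TUBES = 40        # one tube per bit position of a 40-bit word
ROWS = COLS = 32  # each tube face holds a 32 x 32 array of bits

WORDS = ROWS * COLS            # 1,024 addressable words
TOTAL_BITS = WORDS * TUBES     # 40,960 bits in all
TOTAL_BYTES = TOTAL_BITS // 8  # 5,120 bytes: the "five kilobytes"


def coordinates(address: int) -> tuple[int, int]:
    """Map a linear address 0..1023 to a (row, column) spot on every tube.

    Row-major order is an assumption made for this illustration.
    """
    if not 0 <= address < WORDS:
        raise ValueError("address out of range")
    return divmod(address, COLS)


def read_word(tubes: list[list[list[int]]], address: int) -> int:
    """Read one bit from the same spot on each tube and assemble the word.

    Tube 0 supplies the most significant bit (also an assumption).
    """
    row, col = coordinates(address)
    word = 0
    for tube in tubes:
        word = (word << 1) | tube[row][col]
    return word


# Usage: an all-zero memory with one word set to binary 100...001.
tubes = [[[0] * COLS for _ in range(ROWS)] for _ in range(TUBES)]
tubes[0][1][1] = tubes[TUBES - 1][1][1] = 1
print(WORDS, TOTAL_BITS, TOTAL_BYTES)  # 1024 40960 5120
print(coordinates(33), read_word(tubes, 33) == ((1 << 39) | 1))  # (1, 1) True
```

Because every tube is addressed with the same (row, column) pair, all 40 bits of a word come out in parallel, which is also why a single faulty tube corrupts every word.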
They built this fantastic building in 1939. It was just the right thing at the right time, because things were going terribly badly in Europe, and the Bambergers, who funded this institute, were very strong on rescuing European scholars. Oswald Veblen masterminded this. Some of you may know Paul Erdős, the great mathematician; they got him out of Hungary for $750. They brought Stan Ulam out of Poland for $300. All they needed was some token appointment, and they could get around the visa rules, because there was an exemption for lectureships, and save people from Europe. And they saved a huge number of people. Veblen also hired Norbert Wiener, the founder of cybernetics, when Wiener was writing articles for the Encyclopedia Americana and couldn't get a teaching job. Veblen just had an eye for rescuing people. The place was run by Abraham Flexner, the mastermind who convinced the Bambergers to do this crazy thing, and his idea was the usefulness of useless knowledge: if you just let people work on whatever they want to work on, something good will come of it. It's sort of like how Google gives people 20 percent. He gave them 100 percent straight off. There were two classes of membership at this place: you either got 100 percent for one year, or you got 100 percent for life. That's Bamberger, who funded it. The first mathematics faculty: you've got Einstein, Marston Morse, Oswald Veblen, Johnny Von Neumann, and Oskar Morgenstern, who wrote Theory of Games and Economic Behavior with Von Neumann. Einstein and Kurt Gödel. Gödel, the great logician, I think didn't get enough credit in the origins of digital computing, and in this book I try to give him a little more of the credit he deserves. He worked closely with Von Neumann; his office was above Von Neumann's.
In his incompleteness proof of 1931, if you look at it carefully, it's very much like a computer program: he encodes logical statements with a numerical code, gives them numerical addresses, and manipulates the numerical addresses with arithmetic, which then manipulates the underlying logical statements in a very profound and important way. I think those ideas translated directly into how Von Neumann set up this machine. Von Neumann at age 11, with his cousin Lily, doing math. And in 1915, at an Austro-Hungarian artillery position; he's the kid sitting on the barrel of the gun. He was very comfortable with the military. At a wedding of his cousin in Budapest. Between World War I and World War II, Budapest was a phenomenally rich place: rich in literature, art, mathematics, and tremendous parties. And it changed the world when those people left Hungary. A big part of what we think of as Hollywood was really driven by Hungarian immigrants. I don't know how many people know Béla Bollobás, who comes here. He comes to MSR every year for two or three months. These letters of Klara's that so much of the book is based on are half in Hungarian and half in English; they drift back and forth. Gabby Bollobás, his wife, translated all of those letters into English. A fantastic job. Von Neumann's identity card from the University of Berlin, where he resigned in protest against the Nazis in 1935. He came to America in 1930. Veblen was trying very hard to get Von Neumann for Princeton University, but at that time there were only 23 Jewish students at Princeton, and the gatekeepers made it almost impossible to hire a Jewish faculty member. So Veblen had a marvelous way of getting around the rules. He had this brilliant idea that he would hire half of each of two Hungarian Jewish professors, and that didn't break the rules. So he brought in Von Neumann and Eugene Wigner, each in a half-time position.
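Gödel's trick of turning logical statements into numbers, mentioned at the start of this passage, can be made concrete. In the standard construction, each symbol gets a numeric code, and a formula of symbols s1, s2, s3, and so on is encoded as 2^code(s1) · 3^code(s2) · 5^code(s3) · ..., so operations on formulas become arithmetic on integers, and unique prime factorization guarantees the formula can be recovered. The Python block below is a minimal illustration of that encoding; the symbol table `CODES` is hypothetical and is not Gödel's own.

```python
"""Sketch: Gödel-style numbering, turning a formula into one integer.

The symbol codes are hypothetical; Gödel's 1931 paper used its own table.
"""
from typing import Iterator

CODES = {"0": 1, "S": 2, "=": 3, "+": 4, "(": 5, ")": 6}
SYMBOLS = {code: symbol for symbol, code in CODES.items()}


def primes() -> Iterator[int]:
    """Yield 2, 3, 5, 7, ... by trial division (fine for short formulas)."""
    found: list[int] = []
    candidate = 2
    while True:
        if all(candidate % p for p in found):
            found.append(candidate)
            yield candidate
        candidate += 1


def encode(formula: str) -> int:
    """Encode symbols s1, s2, s3, ... as 2**code(s1) * 3**code(s2) * 5**code(s3) * ..."""
    number = 1
    for prime, symbol in zip(primes(), formula):
        number *= prime ** CODES[symbol]
    return number


def decode(number: int) -> str:
    """Recover the formula by reading off the exponent of each successive prime."""
    if number < 1:
        raise ValueError("not a valid encoding")
    symbols = []
    for prime in primes():
        if number == 1:
            break
        exponent = 0
        while number % prime == 0:
            number //= prime
            exponent += 1
        if exponent == 0:  # every symbol code is at least 1, so no gaps allowed
            raise ValueError("not a valid encoding")
        symbols.append(SYMBOLS[exponent])
    return "".join(symbols)


n = encode("0=0")  # 2**1 * 3**3 * 5**1
print(n, decode(n))  # 270 0=0
```

Once formulas are integers, a statement about formulas can itself be written as a statement about numbers, which is the move Gödel's proof depends on.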
It was ten times what they could make in Europe. They both said yes, came to Princeton, and both stayed, greatly to our benefit. Princeton in the 1930s. The guy who's had so much to drink he's lying on the floor is Percy Robertson, who was teaching quantum physics to Alan Turing at the time. Alan Turing came to Princeton in 1936, essentially to get his Ph.D. under Alonzo Church. Everybody there is Hungarian except Wagner, standing in the middle, from Madison, Wisconsin, and Percy Robertson, who was from Hoquiam, Washington. How he got to that position from Hoquiam, I don't know. Von Neumann and Dick Feynman standing at Los Alamos in 1949. The photo is by Nick Metropolis, who brought us the Metropolis algorithm. It's my favorite picture of Feynman; he's playing James Dean or Marlon Brando. These were pure mathematical physicists who worked completely in the abstract, and suddenly, during the war, they got to work with high explosives and machine shops and things. They were not going to go back to just academic work, and the question was what was next. Obviously, more weapons. Feynman didn't want to work on weapons. But computers were going to be the next big thing. That's Trinity, the first implosion/explosion. So the British had built this series of Colossus machines. In America, we built the ENIAC, the Electronic Numerical Integrator and Computer, at the Moore School of the University of Pennsylvania. It was an incredibly sophisticated machine; we tend to forget how advanced it really was. Effectively, it was a multiple-core processor. It had 20 parallel processors and did its computing in a very different way, one that in some ways is more similar to the way we do it now.
There's a great debate over whose ideas they were, but when Von Neumann walked in there and saw this thing, he immediately saw how you could transform this machine so it could actually run these more sequential codes, which is what he wanted to do. So he wrote a paper, again under much dispute, because he's the sole author on the title page: the First Draft of a Report on the EDVAC, which describes how you can build a much more sophisticated, fully stored-program machine. All the elements of the modern computer, the central processor and memory and all that, the architecture, are in that document, along with how you do an adder and basic things like that. The person in the middle here is Vladimir Zworykin, who I think is also strangely ignored, and who was director of the research laboratories at RCA at that time. He had brought RCA into television and been very successful, and they were doing well. He wanted to go into computing in a big way, and then RCA said no. But the first meetings of Von Neumann's project were all held in Vladimir Zworykin's office. If you look down the list, the fifth person is John Tukey, the statistician who worked with Claude Shannon. He's the guy who finally got up in one of these meetings, somewhere in early 1946, and said, binary digit, come on, let's just call it a bit. He got tired of saying binary digit. Then we had bits. In my view, how do these amazing things happen? They did this whole project with really between one dozen and two dozen people, in about six years, with under a million dollars. How does that happen? All the people helped. So I'm interested not just in the name-brand people whose names get left on the project, but in everybody from the bottom up. An important person there was Bernetta Miller, who was the administrative assistant. She's the person who wrote those contracts with the government, made sure the checks cleared, and that kind of thing.
She was also the fifth woman in the United States to get a pilot's license. Here she is in 1912, demonstrating the Blériot monoplane to the U.S. Army, who at that time only flew airplanes with two wings. They were not going to contract with a defense contractor for a plane with only one wing, because if you shoot out one wing, it's going to crash. So they decided that the way to convince the Army this plane was really safe was to get a woman to fly it, and she had the job of flying this thing for the Army. They wouldn't let her fly in World War I as a combat pilot, so she volunteered on the ground, bringing supplies under fire. Then her eyesight deteriorated, and she ended up being the administrative assistant on this computing project. Emanuel Levies was hired at 16 to work on the ENIAC, just as a high school student, then came to Princeton with Von Neumann and Herman Goldstine, and is still alive and well in Los Angeles. She came to my talk two weeks ago at the Computer History Museum in Mountain View, and they didn't want to put her on stage, because it was being filmed for KQED. But at question time, she came on stage and absolutely stole the show, because, microphone in hand, she could tell stories about Von Neumann and all these other great people; she was there. We're losing the last eyewitnesses, which is why I'm trying to get the story down, because I think it's an important one. We're going to be living in this digital universe for as far as we can see, and we ought to know how it really started. Norman Thompson was doing meteorological codes, trying to predict the weather. He did an amazingly good job: if you get a five-day forecast, it really is his codes, just running with a whole lot more processing and a lot better input data. RCA, under -- I think we lost our sound?
They were building what they called the Selectron, and it's like the dinosaur with feathers. It was a 4,096-bit digital matrix, fully digitally switched inside a vacuum tube, so it's like the missing link between the analog and the digital. The problem is they didn't get it debugged and working in time. By the time they got it working, Von Neumann had moved ahead with something else. But the architecture of the machine they built was designed for these tubes, and in a lot of ways that's why the architecture we ended up with was so well suited when we did get silicon memory and silicon chips. It was already there to plug into; the architecture had been expecting that from the beginning. So that's the ancestor of your USB stick: fully solid-state memory. They did build one machine with Selectrons, the [indiscernible] in Los Angeles, and they had 100,000 hours mean time between failures on those tubes. It really worked well once they got it running. But they were only 256-bit tubes; they had to scale back down. They were too ambitious to begin with. So the Von Neumann group was desperate. There was real pressure from their sponsors; this hydrogen bomb question was imminent and they couldn't wait. They went to Manchester, England, and borrowed the memory tubes that Turing's group in Manchester had developed, what we call the Williams tube, which stores the memory as spots of charge on the face of just standard oscilloscope tubes, with a very high-gain amplifier, a gain of 30,000, at the face of the tube. They aren't calling it ones and zeroes; they're calling it a dot and a dash. And to distinguish between them, whether it was a zero or a one, they had 0.7, about three-quarters of a millionth of a second. The machine itself was a work of, I think, engineering genius.
And that's part of the story I'm trying to tell: how that came to be. Julian Bigelow was a real engineer. I remember going to his house as a kid. He had an airplane engine dismantled in his living room. He worked on real engines. And the computer really was built as a V-40 engine, with 20 cylinders of memory on each side, overhead intake and exhaust valves that are memory registers, and all done with what we would now call positive interlock: no bit moves without going into an intermediate register that signals back, okay, I've got all the bits, you can clear. So you don't lose bits in transit. And that's one reason why we can have these computers that run billions of cycles a second and don't lose bits. It wasn't that big: two feet wide, eight feet long, six feet high. The remains of it are still in the Smithsonian. Julian Bigelow; Herman Goldstine; Robert Oppenheimer, who we remember as being so opposed to the hydrogen bomb but who was actually the director of this institute and supported this project; Johnny Von Neumann; and all the engineers who, as this project was successful, dispersed to other labs, to RCA, IBM, Stanford Research, all the places that built copies of these machines, including about 11 different countries overseas. Some of the women who did the actual programming. There was no place for anybody to live in New Jersey after the war, so Bigelow went up to upstate New York, to an iron mine that was closed down after the war, cut these buildings in half, and brought them down to Princeton, against a lot of protests, and that's where the computer people lived. That's the ancestor of your hard disk. It's two bicycle wheels running a loop of magnetic recording wire, and they were able to get 90,000 bits per second input/output, just using that very crude machine. So that's a 40-bit word with index bits at the start and end of the word.
So all of this was done with vacuum tubes. That's a shift register, so you can physically see how there's that intermediate register that stores the bits before they are shifted back down to the next register. A lot of the work was done by high school kids. All the wiring, everything had to be done multiple times. That's the wiring diagram for one of the shift registers. Jim Palmer with the pipe, Julian Bigelow in the middle, Herman Goldstine, and Willis Ware, who then went to RAND and was a very important part of the beginnings of the internet at RAND. That's sort of another story. They're trying to run what they called Monte Carlo. If you look at what algorithm has solved the most real problems in the real world, it's probably the Monte Carlo algorithm. And that didn't come out of thin air. It came out of the mind of Stan Ulam, who we rescued from Poland for $300. He was working deeply on the hydrogen bomb problem at Los Alamos, and then got a near-fatal case of encephalitis and was put in the hospital, and the doctors told him to stop thinking, which was very difficult. His answer was, okay, I'll stop thinking; I'll just mindlessly play solitaire. He kept playing solitaire, but he couldn't stop thinking. And while playing all these games of solitaire, he started wondering how you could calculate the outcome of a game of solitaire, and then he realized that the game itself was a statistical model of the process you were trying to solve, and that running the model would be a better way to solve it. He had this blinding insight, and that became Monte Carlo: using random numbers in the computer, you follow a statistical chain of events, which solved these neutron diffusion problems that had been very resistant to any other kind of mathematics. And Klara Von Neumann, who came in 1938.
That's her 1939 French driver's license. And Johnny needed somebody to start writing the code. There was a lot of housekeeping. They had nothing that we would term an assembly language or anything like that. It was all done in raw, absolute addressing, raw binary. Somebody had to do all that housekeeping, and he trained her to do that. Most of the early Monte Carlo codes were coded by her. That was his car; I thought it was a Cadillac, but a friend corrected me a couple of days ago: it's actually a LaSalle. He bought a new car every year. Loved to drive fast. That's on their honeymoon to Key West. Stan Ulam with Françoise, who was another great source. She died just this last year. She kept journals and could tell me what people were thinking at the time. You know, why did they really want to build this terrible, horrible weapon? She had lost her entire family in the Holocaust except one brother, and Stan had also lost everyone except one brother. So they had their reasons to want to make sure that if there were going to be such weapons, they would be on our side. Nick Metropolis, his badge photo. Playing the first game of chess against the MANIAC at Los Alamos. They're playing on a six-by-six board with no bishops to make the game simpler. You can see the input/output is still by five-hole paper tape. Johnny Von Neumann on a trip to the Grand Canyon in the 1940s. All the mules are facing this way, and his mule is facing the other way. That's Klara there. At the Ulam household: Françoise, Klara, who is still alive, Stan Ulam, Johnny. And this is a drawing by George Gamow that puzzles me, because it's Stalin with the bomb, and that's Robert Oppenheimer looking like a saint. And Stan Ulam and Edward Teller and George Gamow. What those three physicists are doing, I think, represents somehow different approaches to trying to ignite a thermonuclear reaction, but I don't understand it.
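Ulam's idea, described above, is easiest to see in a toy version of the kind of neutron problem Monte Carlo was first used for. The sketch below is not the Los Alamos code: it assumes a one-dimensional slab, a mean free path of 1, a fixed absorption probability per collision, and forward/backward scattering only, and `slab_transmission` is a hypothetical name. Instead of solving a transport equation, it follows many particles one random event at a time and counts how many get through.

```python
import math
import random


def slab_transmission(thickness, absorb_prob, n_particles=100_000, seed=0):
    """Monte Carlo estimate of the fraction of particles that cross a 1-D slab.

    Toy model (an assumption, not the historical code): each particle enters
    at x = 0 heading in +x, flies an exponentially distributed distance
    (mean free path 1) between collisions, is absorbed at a collision with
    probability `absorb_prob`, and otherwise scatters forward or backward
    with equal probability.
    """
    rng = random.Random(seed)  # fixed seed so runs are reproducible
    transmitted = 0
    for _ in range(n_particles):
        x, direction = 0.0, 1
        while True:
            x += direction * rng.expovariate(1.0)  # free flight to next collision
            if x >= thickness:
                transmitted += 1  # escaped through the far face
                break
            if x < 0.0:
                break  # scattered back out through the entry face
            if rng.random() < absorb_prob:
                break  # absorbed inside the slab
            direction = 1 if rng.random() < 0.5 else -1  # scatter
    return transmitted / n_particles


if __name__ == "__main__":
    # Pure absorption has an exact answer, exp(-thickness), to compare against.
    print(slab_transmission(2.0, 1.0), math.exp(-2.0))
    # With scattering there is no such simple closed form; sampling still works.
    print(slab_transmission(2.0, 0.3))
```

With pure absorption (`absorb_prob=1.0`) the first printed estimate should sit within about a percent of exp(-2) ≈ 0.135. That check is what makes it reasonable to trust the sampled answer once scattering is switched on and no simple formula is at hand.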
Von Neumann and von Braun. Together they conceived the Atlas missile program. And a whole other thread in this story of how we got this numerical world came from Lewis Fry Richardson, the British meteorologist who, during World War One, spent the war hand-calculating a difference-equation model of the atmosphere over northern Europe for one day. And the results were totally wrong. But Von Neumann and Charney, who came in to do these calculations, believed that the principle was right, just that they needed to reduce the noise in the equations. And when they did, and could do it much faster, it started to work. And Richardson, I think, also answered the artificial intelligence question, you know, can machines ever be intelligent. He said they can be intelligent already, so here's a model of a machine that's a nondeterministic circuit, having a will but capable of only two ideas. That's their first trip to run a meteorological calculation. That's the first day they got a 24-hour forecast in less than 24 hours. They're celebrating. And very quickly, it went faster and faster. So if you look at what they did in just five kilobytes, they worked on fundamental problems on five scales, where the mathematics was similar but the time scales were very different. In the middle, we have a scale of seconds in time. So they worked on nuclear explosions, where everything is over in, you know, billionths of a second, and they worked on shock waves, sort of what happens in the next few seconds. And they worked on meteorology, which is sort of in the middle, in the range of hours and days; after a few weeks or months, it becomes climate. And Nils Barricelli showed up and worked on biological evolution: what would happen over millions of years. And Martin Schwarzschild came and ran stellar evolution codes, looking at the evolution of stars over billions of years. You have 26 orders of magnitude in time.
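The "26 orders of magnitude" is quick to check. Assuming the shortest scale is a billionth of a second and the longest is one to ten billion years (the talk says only "billions"), the span is the base-10 logarithm of the ratio of the two durations, and the "middle" of two durations on such a scale is their geometric mean. The helper names below are illustrative, not from the book.

```python
import math

SECONDS_PER_YEAR = 365.25 * 24 * 3600  # Julian year


def orders_of_magnitude(shortest_s, longest_s):
    """Number of powers of ten separating two durations given in seconds."""
    return math.log10(longest_s / shortest_s)


def log_midpoint(shortest_s, longest_s):
    """Duration halfway between two others on a logarithmic scale,
    i.e. their geometric mean, in seconds."""
    return math.sqrt(shortest_s * longest_s)


if __name__ == "__main__":
    # Nuclear explosions (a billionth of a second) vs stellar evolution,
    # taken here as 1e9 and 1e10 years.
    for years in (1e9, 1e10):
        span = orders_of_magnitude(1e-9, years * SECONDS_PER_YEAR)
        print(f"{years:.0e} years: {span:.1f} orders of magnitude")

    # Blink of an eye (a third of a second) vs a 90-year lifetime.
    mid = log_midpoint(1 / 3, 90 * SECONDS_PER_YEAR)
    print(f"log-scale midpoint: {mid / 3600:.1f} hours")
```

This gives about 25.5 and 26.5 orders of magnitude for one and ten billion years, bracketing the figure in the talk. The same midpoint helper applied to a third of a second and a 90-year lifetime gives about 8.5 hours, which is the "working day" that comes up next; the middle only lands there on a logarithmic scale.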
And the good thing about stellar evolution was that you actually had stars up in the sky, so you could check your results against the real-world evolution of stars. And if you compare that with things that we are familiar with: the smallest unit of time that we can perceive is something like the blink of an eye, a third of a second, and the longest is your entire lifetime, 90 years. And exactly in the middle is kind of, you know, a working day at Microsoft. Eight hours. And I don't understand why that is. Why are we right in the middle of the perceivable? Is it because, being in the middle, it's going to be equally perceivable on both sides, or what? To me, there's still some puzzle there I don't understand. And what the computer did, which is what Von Neumann very intentionally set out to do, is to enlarge our perception, and it did that very successfully. It brought us into ranges of time that, before fast digital computers, we really could not reach: we could not calculate what would happen to stars over billions of years, and we couldn't see what would happen in a billionth of a second of a nuclear explosion. And that has just continued to expand. If you go into the world of science today, you'll see people who are working down in the femtosecond range and, you know, looking now at the evolution of the universe over even longer periods of time. Von Neumann was extremely interested in biology. He and Alan Turing both died working on biology. And to sort of hedge his bets on inventing this terrible weapon that could destroy all life on earth, they brought in Nils Barricelli, who was a viral geneticist and ended up spending quite a few later years here at the University of Washington. So there are still people here who remember him. He ate at Ivar's regularly. Took all his students there. Most of the programmers worked in hexadecimal notation, so you have a hexadecimal code for a 40-bit number.
He worked directly in raw binary, and these are outputs of his results. He would run these simulations until they kicked him off the machine, and then he would dump the memory and print it out. Phenomenally interesting stuff. That's Alan Turing in 1951, back in England. He's now working on the Ferranti Mark 1 machine. We remember Turing for his model of deterministic computation. What we forget is that by the time he got to Princeton, he already saw the limits of deterministic computation, and what he worked on in Princeton was a model of nondeterministic computation. He called them oracle machines: machines that followed logical sequences for a certain number of steps and then made intuitive leaps that were nondeterministic. And he strongly believed that no machine could ever have any degree of real intelligence as long as it was deterministic. So for this machine, he insisted it have a source of electronic noise, sort of like Lewis Richardson. And I think that's, in many ways, the future we're living in now, where we're building very large machines that are essentially nondeterministic and start to do other, more interesting things. Julian Bigelow would be a hundred next year. Alan Turing would be 100 this year. Von Neumann dies in 1957. They turn the machine over to the university, which can't get it running. They finally bring Julian Bigelow back; they had fired him, but they bring him back: can he please get this thing running? He gets it running really well with about six people helping. And then they pull the plug. So that's the last entry in all those thousands of pages of log books: 12:00 midnight, July 15, 1958. Off. It was a real tragedy for Julian, who still had problems he wanted to work on. Thanks to Charles Simonyi, I really started digging this stuff out. Actually, this is what I showed to Charles, and that's what prompted him to twist the arms of the trustees and let me spend more time there.
In the bottom of one box in the basement, in the corner, covered in greasy teletype manuals, was a box of punch cards. Those are the actual cards and the source code for one of these universes that Barricelli was running, with a note that said, there must be something about this code that you haven't explained yet. And when I saw that, I thought, that's the last sentence in the book. All I have to do is write, you know, the other 130,000 words. It took eight years. But this takes us back to what Turing was trying to do at the beginning. Alan Turing invented this model of digital computation not to invent the digital computer. That really wasn't what he was thinking about. He was concerned with a very abstract problem in pure mathematics called the Entscheidungsproblem, the decision problem, and since I'm speaking to programmers, I'm going to garble this for you. The question is whether, given a string of logical symbols, there is any systematic way to determine whether that string is a provable formula or not. David Hilbert, who posed that problem, thought the answer would be yes. Turing's instinct was that the answer would be no. The way he got to a firm no was by inventing this abstract machine, what we now call the universal Turing machine, which he was able to prove could do anything that any other machine could do. Yet one of the things this machine could not do is come up with a systematic way of determining, by looking at a string, whether it is a provable formula or not. And the implications for the real world are exactly the world you're all working in today. Again, this is not formally correct: it doesn't mean that you can't tell by looking at a code what the code will do, but it almost translates to that.
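For programmers, the usual way to see this result is the textbook halting-problem form of Turing's diagonal argument (his 1936 paper phrased it in terms of "circle-free" machines rather than halting). The sketch below assumes Python functions can stand in for Turing machines, and `Decider`, `build_contrarian` and `refute` are hypothetical names. Given any candidate decider that claims to predict whether a program halts, it builds a program that asks the decider about itself and then does the opposite, so the decider must be wrong on at least that one input. It illustrates the construction; it is not a formal proof.

```python
from typing import Callable

Program = Callable[[], None]
# Hypothetical "halting decider": returns True if it claims the program halts.
Decider = Callable[[Program], bool]


def build_contrarian(decider: Decider) -> Program:
    """Build a program that does the opposite of whatever `decider` predicts."""

    def contrarian() -> None:
        if decider(contrarian):
            while True:  # decider said "halts", so never halt
                pass
        # decider said "runs forever", so halt at once

    return contrarian


def refute(decider: Decider) -> str:
    """Explain why `decider` is wrong about its own contrarian program."""
    program = build_contrarian(decider)
    if decider(program):
        # Running the program here would hang; the contradiction follows
        # from reading the code, not from running it.
        return "predicted 'halts', but the program then loops forever"
    program()  # safe: the decider predicted "loops", so this returns at once
    return "predicted 'loops forever', but the program just halted"


if __name__ == "__main__":
    print(refute(lambda p: True))   # a decider that always answers "halts"
    print(refute(lambda p: False))  # a decider that always answers "loops"
```

`refute` never runs the looping case; the contradiction comes from reading the code. Rice's theorem extends the same idea to any nontrivial property of a program's behavior, such as "is this code malicious", which is why no inspection-only filter can decide such a property correctly for every program.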
In other words, you cannot have a firewall that keeps out all malevolent code simply by inspecting it. You have to let the code run, and you can never debug your products without letting them run and seeing what happens. To me, that makes the world much more interesting. I think that's why the digital universe, no matter how many trillions of cycles per second we have, is always going to become more interesting at a faster rate than we can figure it all out. Turing left us with this very profound result that's going to be with us forever. And it takes us back to the world of Leibniz. This is a medal that Leibniz designed for the Duke of Brunswick in 1697, and the explanation of it is really that everything in the world can be described in digital code, and that if we understand digital code, we can start to understand everything in the world. And that's all we're really still doing. The last picture in the book is me in front of Fuld Hall, where they started this project. It's 1954. The project is already fundamentally over; they'd done the interesting stuff by the time I showed up. I grew up in this place where most of the people working there were doing very boring stuff. But in this back building, Julian Bigelow was still building this machine, or at least the scrap pieces of it were lying around. To me as a kid, that was by far the most exciting thing. The hardware was still really hardware. And thanks to all the people and the institutions who supported this and let me into their archives and basements and their family secrets to try to put this whole story together. We have a little bit of time for a few questions. Do we have any questions?
>>: You may not be able to answer this, but when I was looking at the photos you had of the big machine, the guy who had the airplane engine in his living room was putting the machine together. In your book, do you go into any of the reasoning he went through? Not so much the physics, but just the general explanation of his reasoning on putting this here and putting that there, and [indiscernible] juxtaposing and all that? Because I think that's fascinating. In other words, what was going through their minds, what was their reasoning on how they put something together, why they would think that this was the right way to do it. >> George Dyson: Yes, I'll repeat the question. The question is, do I go into any detail about why the machine was designed physically the way it was? And yes, according to some critics, I go into way, way, way too much detail, because I think it's a very important question. Every microprocessor you use today is functionally an exact blueprint of that machine. Not necessarily because that machine was so much better; it just happened to be the one that was copied. Once people started copying it, people wrote codes for it. Once you started writing codes for it, you had to follow that design if you wanted to run the codes. We're stuck with it like we are with the genetic code in biology. But Julian Bigelow thought very deeply about this, and there were endless arguments at the beginning. And physically, he was way, way ahead. The architecture of that machine is actually three-dimensional, because he was concerned about the time path between components. We now put things flat on a chip just because a chip is so small that it doesn't really matter.
But in this bigger machine, he saw very clearly that you wanted the structure to actually be three-dimensional, so there was a shorter connection path between the components, to get the very, very high speeds that they got. And it was beautifully designed, which is hard for us to imagine today. That computer required 37 different voltages. A microprocessor just sort of has plus or minus five volts or something. So all these different voltage supplies. A vacuum tube has to have heaters to heat it first, and then it has five or six inputs and outputs. So it was amazingly complex. The wiring was brilliantly done, with copper sheets that supplied all the heater voltages. So it's a sad thing that this computer is not on public display. It's in one of the storage warehouses at the Smithsonian, because it is, I think, a true work of functional art. And it was built by hand. Another question here. >>: Von Neumann, in the EDVAC report, doesn't mention Turing universality. But he clearly knew about it. So I wonder, is there any evidence that he deliberately left it out, or was he thinking of it, or what? >> George Dyson: Yes, he clearly was familiar with Turing and universality. The EDVAC report is a very strange document. There's a historian, David Greer, who is looking into this in great detail, with handwriting analysis and so on, because it was produced under very odd circumstances. And you can take a sinister view that it was pushed out there to avoid any possibility of patents on some of these ideas. >>: It was rumored that Von Neumann was a consultant for IBM at the time. >> George Dyson: It's not a rumor. I found a consulting agreement dated May 1945. >>: So it was a bit hard on Eckert and Mauchly.
>> George Dyson: If you read this book, you'll find several smoking guns that are quite unpleasant, in the sense that Eckert and Mauchly founded their own company, and they had a good computer, the UNIVAC, but they didn't have unlimited government funding. They had a purchase contract for three machines, which would have put them in business. And at the last minute, their security clearance was questioned and they lost the contract. That put them into bankruptcy, and that's what opened the door for IBM. There's no direct chain of who did what, but Eckert and Mauchly were very bitter, and I think they had good reason to be bitter. So I admire Von Neumann tremendously, but I would also be the first to say that there were people who were very annoyed by what happened. >>: It was Goldstine who put out the report with only Von Neumann's name? >> George Dyson: That seems quite clear, yes. Goldstine issued it, and what those circumstances were is very hard to tell. It's a very interesting report, but it's very vague, because at that time you couldn't really talk about electronic architecture. The ENIAC was not declassified until February 1946, and the report came out sooner. So this is a marvelously important period in history. I think some day it may all get sorted out. >>: Are there any documented meetings between Turing and Von Neumann in terms of work they did together? >> George Dyson: Sure. Oh, yeah, Turing was in Princeton for two years. That's why it baffles me. Why do people make this big deal about no contact? They were in the same building. They drank tea in the same common room. They went to the bathroom in the same toilet. >>: Von Neumann research -- >> George Dyson: Yes. And Turing went back, partly because of the war and partly because he was homosexual and Princeton was not a very welcoming place, whereas Cambridge was. And, I mean, it's interesting.
When Von Neumann comes to America, the day he gets off the boat, he says, I'm in America. I'm Johnny. He just loved America from the first day. Turing was never really happy in America, and I think it's clear why he went back. But then he came back during the war. Von Neumann went to England during the war and Turing came to America during the war, and that's sort of completely black. We don't know what they worked on. But Jack Good, who worked with Turing very closely, said that when Turing came back from his trip to America during the war, he talked about, because, you know, they were chess players: what if I had a chessboard and you had bags of gunpowder equidistributed at the intersections on the grid? What algorithm would you use to decide whether, if you ignite one bag of gunpowder, the whole thing goes off? That's really the criticality problem. I think Turing had some input into the computational problems they were doing at Los Alamos, and, in fact, Von Neumann explicitly says that he got his ideas about programming from a trip to England. He actually says that in a written report. >>: How alien would those early programs look to modern programmers? >> George Dyson: Not alien at all. And vice versa. That's what's so interesting: if you brought Turing and Von Neumann back today and showed them your code, they'd completely understand it. Nothing's changed. Von Neumann would be horrified: why did you name this crappy architecture after me? Because they were just interested in solving -- and Bigelow was heartbroken. He saw how to make this design so much better. He just wanted to go on to version two, and we stayed on version one for 60 years. Once it started working, what happened was it became so cheap and so fast that we sort of tolerated it.
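Jack Good's gunpowder puzzle is what is now called a percolation problem. The sketch below assumes every intersection holds a bag and that a burning bag ignites each of its four neighbors independently with probability `p` (bond percolation on a square grid); the ignition probability, grid size and function names are illustrative assumptions, not anything from the talk. A breadth-first search follows the chain reaction from the center bag, and averaging over many random boards shows whether the fire fizzles or takes the board.

```python
import random
from collections import deque


def fraction_burned(n, p, rng):
    """Ignite the center bag on an n x n grid; return the fraction that burns.

    Assumed model: each burning bag ignites each of its orthogonal
    neighbors (up to four, fewer at the edges) independently with
    probability p. Each link between neighbors is tested at most once.
    """
    start = (n // 2, n // 2)
    burned = {start}
    frontier = deque([start])  # breadth-first search over the chain reaction
    while frontier:
        r, c = frontier.popleft()
        for dr, dc in ((1, 0), (-1, 0), (0, 1), (0, -1)):
            nr, nc = r + dr, c + dc
            if 0 <= nr < n and 0 <= nc < n and (nr, nc) not in burned:
                if rng.random() < p:
                    burned.add((nr, nc))
                    frontier.append((nr, nc))
    return len(burned) / (n * n)


def mean_fraction_burned(n, p, trials=200, seed=0):
    """Average burned fraction over many independent random boards."""
    rng = random.Random(seed)
    return sum(fraction_burned(n, p, rng) for _ in range(trials)) / trials


if __name__ == "__main__":
    for p in (0.3, 0.5, 0.7):
        print(p, round(mean_fraction_burned(41, p), 3))
```

For this model the critical value is known exactly: bond percolation on the square lattice has threshold p = 1/2. Below it the burned fraction stays small; above it a large share of the board goes, and the switch sharpens as the grid grows. That sudden change is the criticality question in miniature.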
But Turing and Von Neumann were both thinking about very different forms of computation. >>: Online, someone says: I'd be curious to see the computational logic that you've immersed in as any [indiscernible] designing, piloting your small watercraft or [indiscernible]. >> George Dyson: So the question is, does this thinking about digital design have any relation to designing boats, which is something else I do? No, I would say those are really different things, and I think you know that from your work. Designing code, where things have to be in perfect logical sequence, is very different from, you know, making pottery or something, where you are dealing with form. It's a very different kind of design. There might be some elements where clean, elegant code is similar to a clean, elegant boat design. But very little. So, unfortunately, not. Yes? >>: At the beginning of the lecture, you mentioned your sister. Has she seen the book? What are some of her thoughts? >> George Dyson: Has my sister, Esther, seen the book? Yes, she has seen it. Has she read it? You'll have to ask her. Because they did an audio version, they kept calling me up about how to pronounce Hungarian names I don't know how to pronounce. So finally, I referred them to Von Neumann's daughter, who is still alive, to help with the pronunciation. Esther kept asking if I could send her the audio version, so that might mean she hasn't read it yet. But I was just in New York, and Esther and I had a great day together, and she's zooming around in her orbit doing all kinds of great things. And in a way, it's very satisfying to sort of put these worlds together. And when I gave a talk, our third-grade teacher, one of these teachers who I thought had been so disappointed in me, came in person and said, no, you were just different from Esther, but we weren't disappointed. Yes?
>>: First, from Twitter, on the cover, the response is: it's full of epic win and full of geek awesome. Good choice. >> George Dyson: The cover is great. The cover doesn't mean anything, if you're trying to decode it. When the designers said they had this punch card idea, I gave them one of Barricelli's cards, which I think was tremendously to their credit. And then I got a message back: is it okay if we move the positions of the holes around? I said sure. >>: The question, though, is: as a technology historian, you said you started writing this book around eight, ten years ago, right? And at the time we were in a very unlimited-CPU, unlimited-power mindset in software. Now we've traded that off for this kind of internet of things, sensors becoming the nervous system of the cloud, and the cloud being this culmination of the big machine, right? How do you see that going in terms of your job as a historian of technology, when science fact starts outpacing science fiction? Are we on this technology elbow? How is that making your job harder in that role? >> George Dyson: So the question is, as a writer about technology, how do you deal with the rapid change in technology? And, of course, that's why I'm a historian. If I write about things that happened in 1945, my book won't be out of date by the time it's finished, which would be the case if I were trying to write about modern developments. As to where this is going, I have a very strong belief that we actually are going back to analog computation. And people just won't admit it, because analog is sort of a dirty word that went out with polyester tires and 45 RPM records. But it's a true, provable thing that analog computation can do a lot of things that cannot be done digitally. And if you look at what's going on in the computational universe, you see analog computation all over the place, and I think it's the new wave of the future.
We kind of keep calling it other things, like Web 2.0 or Web 3.0. But the simplest way to describe it is something like Facebook: the complexity is not in the digital code. The complexity is in the architecture. It's like you say, it's in the connections between things. You give every kid in high school a very simple piece of code, and suddenly you have a direct analog computer solving the problem of who is friends with whom, which purely digitally is a very difficult problem to solve. And there are well-known problems, like the traveling salesman problem, that can be solved much better in that way. And the companies that are doing that are the ones that are doing so well. That's what Google is doing. It's easy to collect all the digital data. It's hard to find the network of meaning, so you let people do that. You let them link what they think; you give them 100 results, they click on one, and you know where the meaning is. That's where I think the future is, and it does change the way you think about code. Last question. >>: Can you comment on analog, as one who has helped people with analog computers? They have a habit of going unstable. They have artifacts in them that cause them to go unstable, having nothing to do with the problem you're trying to solve. In fact, I helped a friend who was working on his Ph.D. convert his problem from analog to digital, resolve the problem, and then put it back on the analog computer so it wouldn't go unstable. And I think the message is, these systems are so complex that they will have instabilities that just arise and won't have anything to do with what they were intentionally designed for. >> George Dyson: Yes, that's really more an answer than a question, pointing out that analog systems have a lot more going on in them than you see, which is part of their power. And you have to live with that and work with that. Thank you very much.