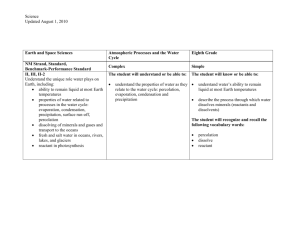

>> David Wilson: Next speaker, his name is Tim Hulshof. He received his Ph.D. in 2013 under the supervision of Remco van der Hofstad, and he'll be talking about sharper thresholds for anisotropic bootstrap percolation. One thing I forgot to say is that he now works as a post-doctoral fellow at UBC and PIMS. >> Tim Hulshof: Thank you. It's on. Thanks for letting me speak here today. Very much appreciate it. I'm going to talk about bootstrap percolation. Indeed, this is a project with Hugo Duminil-Copin, Aernout van Enter, and Robert Morris. And I thought it would be a fun topic to talk about here because it has kind of its roots maybe here a bit at Microsoft or at UBC. I don't really know where this thing got started. But definitely in this particular area of the world. So I thought it would be fun to sort of talk about it here. So it's about bootstrap percolation. I'm sure a lot of you already know what bootstrap percolation is. It's kind of a common model. But for those who don't, let me just include you in the conversation as well. You start with some graph. Let's say it's a square lattice. Square lattices are nice but you can think of other graphs as well if you want. Then you pick some number which is a threshold value and you pick a set which is just a set of sites in the graph and let's center it around the point 0. So it can be any set of sites. If I write it like this, it's kind of hard to see what such a set looks like. So I like to draw it as a little picture. So in this case you could take, for instance, just the nearest neighbors of 0, and the circle there represents the 0. But you don't have to take this neighborhood. You can take other neighborhoods as well. This is one that's been studied quite a lot. It's kind of a remarkable neighborhood. Has interesting properties. But the one that I will mostly be talking about today is this neighborhood, where you have the nearest neighbors plus also two sites, the next nearest neighbors. 
This is what I will call anisotropic bootstrap percolation, this model. So how does the model work? Once you have these things chosen, the next step is to do a site percolation: you take some parameter P and every site in the lattice is infected with probability P. I call it infection. Some people call it activating, because infection has sort of an unpleasant sound to it, but -- >>: Especially these days. >> Tim Hulshof: Especially these days, it's not really like politically correct. But I'm going to say the word infection like 100 times today. I'm going to say the word log about 500 times today. So if nothing else, this talk will at least be half an hour where you heard the guy say log about a million times. So you do a site percolation, and the idea is just to iterate. Any site that's infected stays infected. Nobody gets better in this scenario. If you're healthy and one of the guys you're sitting next to is sick, well, that might still be okay, but if you're surrounded by them, that's not so great. And that's here represented by the fact that in your neighborhood, if you have more than or equal to the threshold value of infected sites around you, you become infected yourself. And so this goes for every site. This is the update rule. And the idea is just to update this as often as you like. Basically until we find a stationary or stable configuration. So that's the description. But maybe it's easier to see how it goes if you look at it. So I've been working on my computer, the grad students at UBC know how much effort this took me, because I'm super bad with computers, really. But I've been making some pictures. And so here, for instance, are some initial configurations, and I sort of have chosen a couple of interesting ones. So what you're going to see if we apply the bootstrap rule, then those sites that have two infected neighbors, which are black in this case, will become black themselves. And so indeed you see that these become black. 
And if you continue this process, at some point you wind up with a set of infected rectangles. These are stable because none of the sites now that are inactive have two or more active neighbors. If you do this for a random configuration and the P is relatively low, you indeed see that this gives you a configuration of disjoint rectangles. If you try and update this any farther, nothing will happen. It can of course happen that you choose your P to be quite high. In which case the whole rectangle gets infected. So this is an actual simulation of such a thing. So it doesn't -- it doesn't look super informative. So my next attempt was, well, I kind of stole this from Hugo. But to then color them according to the time that they became infected and you can actually see what is going on. You see that the blue sites are the ones that became infected first. And then gradually moving to red, those are the sites that became infected last. Here I'm not really doing it on a grid. I have periodic boundary conditions which you can see if you look at it closely. That's because in the beginning I was not even smart enough to simulate things without periodic boundary conditions. Now I know how to do that. But so you see basically you get these blue rectangles and they stop growing for a long time. You can tell because they're surrounded by red things. But one of them actually remained, it kept growing and ate up the whole thing. And so that's how this thing became filled up. If you go and do this on a larger scale, well, you might see more than one such thing grow and they might all grow. If you reduce P a bit, maybe you can bring it down to the point where there was only one such cluster that grew. This is without periodic boundary conditions. So here you can really see that the corners of the thing are the last to be filled up. So this is what I call the standard model. 
It's called the standard model because basically 90 percent of all the papers about bootstrap percolation -- that's not true -- but a lot of papers about bootstrap percolation, especially in the beginning, were about this model and it's the most natural choice. Another model that's been studied quite extensively is the anisotropic model. And so this model has its own properties. Now the threshold value is three, so you need three infected neighbors, but you're given a slightly bigger neighborhood to choose from and this neighborhood is not symmetrical. It's symmetrical along the axis but it's not rotation-symmetric by 90 degrees like the previous one. So you expect somehow the growth is going to also not be symmetrical. Indeed this is of course true. If we do the update on these things, well, you see that the sites that are surrounded by three, they're in the upper left corner, get infected immediately. You also see that the diagonal there, well, there's not enough sites in the neighborhood of any point there, so that will just remain stable. And if you grow these things, one thing that is noticeable is that it grows more readily in the horizontal plane than in the vertical plane. And even the stable configurations, this is stable, we cannot update this any farther, do not necessarily consist of just disjoint rectangles. They can have weird shapes, especially upward facing. If you do an actual simulation, you see these as well. So here, for instance, you have this cluster with this big hook. It won't grow any further than this. And if you want to study the model, this poses some minor challenges. You have to sort of think about how this growth goes. In particular what you need for a rectangle to fill up a line on the top is that there are at least two sites close to that rectangle, close to each other, while on the sides you only need a single site to grow sideways. 
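The update rule and the two neighborhoods described so far can be sketched in a few lines of Python. This is a minimal illustration, not the speaker's simulation code: the names `STANDARD`, `ANISOTROPIC`, `random_configuration` and `bootstrap` are hypothetical, the anisotropic neighborhood is assumed to be the four nearest neighbors plus the two sites at horizontal distance two, and sites outside the box are treated as healthy (no periodic boundary conditions).

```python
import random

# Neighborhoods as offsets (dx, dy) from the site being updated.
STANDARD = [(1, 0), (-1, 0), (0, 1), (0, -1)]   # used with threshold 2
# Assumption: the two extra sites sit at horizontal distance two.
ANISOTROPIC = STANDARD + [(2, 0), (-2, 0)]      # used with threshold 3


def random_configuration(width, height, p, seed=None):
    """Site percolation: each site starts infected independently with probability p."""
    rng = random.Random(seed)
    return {(x, y) for x in range(width) for y in range(height) if rng.random() < p}


def bootstrap(infected, width, height, neighborhood, threshold):
    """Apply the update rule synchronously until the configuration is stable.

    Returns a dict mapping every infected site to the step at which it became
    infected (0 for the initial sites), which is what one would color by.
    """
    time_infected = {site: 0 for site in infected}
    step = 0
    while True:
        step += 1
        newly = []
        for x in range(width):
            for y in range(height):
                if (x, y) in time_infected:
                    continue  # infected sites stay infected
                count = sum((x + dx, y + dy) in time_infected
                            for dx, dy in neighborhood)
                if count >= threshold:
                    newly.append((x, y))
        if not newly:
            return time_infected  # stable: nothing changed in this round
        for site in newly:
            time_infected[site] = step


if __name__ == "__main__":
    start = random_configuration(30, 30, p=0.1, seed=1)
    final = bootstrap(start, 30, 30, ANISOTROPIC, threshold=3)
    print(len(start), "initially infected,", len(final), "infected when stable")
```

Under these assumptions the sketch reproduces the growth rules from the talk on small examples: a diagonal of infected sites fills a square under the standard rule but stays frozen under the anisotropic rule, a rectangle grows sideways with the help of a single site, and it needs a pair of sites on top to grow upward.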
So it's much easier to grow sideways than upwards or downward. So here again you can do simulations. And you see that these clusters are stretched out. And if you go to larger sizes, in fact you see that these clusters get more and more stretched out. The fact is that in the horizontal direction, this particular model grows exponentially faster than in the vertical direction. That's a feature of this model. So people would call this an unbalanced model for this particular reason. So one feature that is fairly easy to see about these two models that I just described, for the standard model but also for the anisotropic model, is that if you take the whole plane of Z2, any P larger than 0 will fill it up. We call this internally spanned. That will later be a more relevant statement. But for a rectangle, internally spanned means if I do the site percolation on that rectangle it will fill up from the inside out, so without the help from the outside. So Z2 always fills up because you always can find somewhere a small, very dense cluster that will grow into a seed and then that thing will keep on growing and growing. It will always encounter something on its boundary. So the entire Z2 is not interesting. But it is still interesting, because what happens on these smaller lattices is that you have the probability of being internally spanned for a rectangle. This actually undergoes some sort of a phase transition, stable phase transition. If you take a sequence LN that goes to infinity, and you take a sequence PN that goes to 0, then if the lim sup [of PN log LN] -- this is an old theorem. There's way better ones in case you're annoyed that I'm showing you something old, this is an old theorem from Aizenman and Lebowitz -- is less than some value lambda minus, then this probability goes to 0 in the limit. And if the lim inf is greater than some lambda plus, then the probability goes to 1. 
So the question then became, of course, are these lambda minus and lambda plus equal, and if so, what are they. So that was answered by Mr. Holroyd. In fact they are equal and this is the famous result, if you know anything about bootstrap percolation you've seen this number, that it's pi squared over 18. So I want to talk a bit about a similar result for anisotropic bootstrap percolation. To express this for anisotropic bootstrap percolation this is not a very nice way of looking at it. So I would rather discuss it as -- I would rather look at P as a function of L. And, of course, we can do that. And so then if we rephrase this, then there should be some critical value PC, which I'm interested in. And we express it as a function of L. So in this case PC should be of order 1 over log L. With this particular pi squared. So we can express it as follows, that PC is pi squared over 18 log L and then there is some little O of 1 there. So you can obtain a similar result for anisotropic bootstrap percolation, and it's almost the same except now the PC has a log-log L squared factor there instead of just that 1 over log L. And also here Aernout and Hugo have found the sharp value of the constant, and it's 1 over 12. And so here we have, essentially, a similar result. The log-log L squared is interesting, though. Very recently, and I think this is a very interesting set of papers and they all appeared in the last, let's say 12 months, maybe a bit more, two of them are still on the arXiv only I believe. Something has become known about the universality of these models. Turns out that these models can be grouped into three groups. They are either subcritical, critical or supercritical. So what does that mean? If they're subcritical, that means they have some PC that is always larger than 0. 
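The two sharp thresholds just quoted can be written out as follows. This is a transcription of the spoken statements, with the notation $p_c(L)$ for the critical probability on an $L \times L$ box chosen here for illustration.

```latex
% Standard (two-neighbor) model, Holroyd's constant:
p_c^{\mathrm{std}}(L) \;=\; \left(\frac{\pi^2}{18} + o(1)\right)\frac{1}{\log L}

% Anisotropic model, constant 1/12:
p_c^{\mathrm{aniso}}(L) \;=\; \left(\frac{1}{12} + o(1)\right)\frac{(\log\log L)^2}{\log L}
```

The only structural difference at first order is the extra $(\log\log L)^2$ factor in the anisotropic case.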
The limit of PC is greater than 0, which means also on Z2 this has a nontrivial phase transition, and these are the models that have great difficulty growing. So, for instance, the threshold value is very high compared to the neighborhood. Then you have the critical ones where PC decays as some negative power of the log. So in the critical case, we have both the standard model and the anisotropic model. They both fit this picture. Then you have the supercritical case, where PC goes as some power of L. For a supercritical model you could think of the standard bootstrap percolation neighborhood where the threshold is just equal to 1. If there were a single site inside of this rectangle it would fill up. So the PC that you would have then, from the probability of finding a single site, is roughly 1 over L squared. So this is interesting. But what is more interesting, maybe, is the third paper, at least in my opinion, this is quite a surprising result. It's again by Bollobás and also in this case Hugo and Rob and Paul Smith, and this also appeared on the arXiv quite recently. It is that this PC of critical models, at least in two dimensions, can really only have two forms. So either it's like the standard model, it's 1 over log L to some power kappa, okay this power kappa is model dependent, or it's log-log L squared over log L to some power kappa, in which case it's like the anisotropic model. And so basically this allows us to put these models into two boxes. You have either the balanced models, similar to the standard model, or you have the unbalanced models, similar to the anisotropic model. And even if we look at the proofs, it really is quite evident that the way you should look at them for the balanced models is really quite similar to how you should look at the standard model and for the unbalanced models it's really quite similar to how you should look at the anisotropic model. 
So this makes the anisotropic model sort of an interesting toy model to understand an entire class. And similar for the standard model, of course. So what is quite cool about this result, by the way, is it also contains that third model over there. That third model is called the Duarte model after the person who came up with it and so far nobody really knew how to do anything with it other than with martingale techniques. It's quite unique in that it was the only model that people knew how to study with martingale techniques. There are some results that don't rely on those. But that also fits into this particular result, which is quite interesting. So this is one feature of these models that I wanted to mention. There's another feature which is called the bootstrap percolation paradox, which is that already in the late '80s, physicists did simulations of what this lambda critical, which I mentioned earlier, the pi squared over 18, should be, and they went up to pretty large numbers. They really did a great job, especially for that time. I think they mention in their paper that they used a Cray computer. So I was curious. This is what a Cray computer looks like. It costs 15 million but you do get a bench with it. So you can sit and wait. Apparently it has about one megaflop, that's a quantity of how fast the computer is, and apparently, also according to Wikipedia, my old PlayStation is like a thousand times faster than this thing. But still they went up to almost 30,000, I don't know how many samples they did at 30,000. And they found this number. That's impressively large. If you did something like that for percolation, bond percolation on Z2, you would certainly find the value PC equals one-half with very good accuracy. Except, of course, that if you know about this, this is nowhere near what the actual value is supposed to be. Pi squared over 18 is much larger. It's twice as large. That's weird. 
And this sparked a lot of interest in bootstrap percolation, I think also among computer scientists and physicists, and people were talking about it and people even said to Stephen Wolfram, the guy from Mathematica, he wrote some book about how cellular automata could solve all the problems in the world, and people were like no, see here's bootstrap percolation and it's awful even though it's really simple. But okay, that's not an explanation of why it is so. So again I'm still going through old results here. But then Mr. Gravner and Mr. Holroyd came up with this particular bound on the PC with an undetermined constant there, but you can see there's a log to the three halves, and log to the three halves of L, for L small, is pretty close to just being the log of L. And there's also a lower bound which they then found together with Rob Morris, which is almost matching, so there's a log-log L cubed there in the lower bound but that's very small. And according to Rob, according to his website at least, and I must admit I haven't read this paper and he has some remarks next to it saying that it's a sketch but I believe him because he's a smart guy, actually there's a constant there. So this is bounded by a constant. I should mention that in the original paper that came up with the lower bound they discussed a related model for which they actually give a precise bound and that also explains a lot of this stuff away. But this alone maybe you should want to contrast to another result about bootstrap percolation, again for the standard model, which is that for the window of the transition, with the free [indiscernible] argument, you can show that it's bounded from above by log-log L over log squared of L, which is much smaller than the correction term over there for small L. You will agree. And so this basically says how sharp is the transition. That's what this result is about. How fast does this thing go up. 
So if you sort of act like a physicist and you say, well, okay I believe in the naturalness of numbers, all constants should be roughly the same, they're in the same model, then you can do some back-of-the-envelope calculation and you can see that if I divide this actual value of PC, as I assume it to be, just with the C replaced with pi squared over 18, if I divide it by the first order term, then I'm actually off by quite a lot. I'm off by 1 over the square root of log L. Say L is 10 to the power of 12. This is about E to the power of 25, so log L is about 25. So that's about 1 over the square root of 25. So that's about one-fifth. So the ratio is 0.8. This is off by quite a lot. And indeed you can forgive those physicists from the late '80s for having missed this. If you draw it as a picture, as I imagine it, and I have drawn it as a picture, what you basically see as you go and draw these curves for different sizes of L, what this P log L is versus the probability of being internally spanned, you see this transition gets sharper and sharper, the window gets smaller. But it moves very slowly towards the actual critical value. There's a huge gap there. And if you were naively saying, okay, I'll go up in factors of 10, you wouldn't see it converge. You would have to like double the size of your thing every time to get a nice sequence of graphs that looks like it actually converges to the right point. So we thought this was very interesting. And we also thought that the anisotropic model is very well suited to do the same thing, because the anisotropic model grows very fast horizontally and so we figured that if there were correction terms they would be even way worse than they would be for bootstrap percolation, the standard model. So we did. And so here is the -- here is the main term. And now I'm going to unveil to you the correction term, which is log-log L times log-log-log L over 3 log L. So it even has the same denominator there. 
And the log-log L and the log-log-log L are basically like the same numbers if L is small. So this is really -- this is really bad. But somehow to our surprise it actually went much better than that. We also found the third term, which is log 9 over 2 plus 1. That's two and a half, let's say. Log-log L over 6 log L. Two and a half over 6 is actually kind of big compared to 1 over 3 or 1 over 12. Student of Aernout's wrote a master's thesis. And also found for us a window. This was already a while ago, Suzanne Berma wrote this down. The window is the same as for the bootstrap. Basically there's a cube there, but that's not so much important. It's much narrower than the correction term. >>: [indiscernible]. >> Tim Hulshof: It's a bound on the window. A big O. I don't know. I didn't read her thesis. But Aernout tells me it's correct. So I believe Aernout. So there's this -- if we write it in a slightly different way we see that actually the third, up to the three terms, there's only two constants that determine it. The first two terms only rely on the C1, 1/12 and C2 in the third term -- actually C2 is really, really small. It's minus, it's a negative number. 0.0032. Because 8 over 3 is quite close to E, actually. So basically this whole thing, the first three terms almost exclusively depend on the first constant. And if we actually start to look now at how do these second and third terms measure up to the first one, well, we see some pretty maybe expected results since what we already knew, but the second term is actually bigger than the first term up until about when L is 10 to the power 2390. That's a bit larger than computers can basically deal with. And the third term is also really large for computer sizes at least. It's bigger than the second and bigger than the first as a result, when it's up to 10 to the 13th. If you really want to see these things vanish, well, that's not even going to happen ever. 
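The relative sizes of the three terms can be checked numerically. The sketch below is an illustration under stated assumptions: the function names are hypothetical, the second term is taken with a minus sign (inferred from the later remark that the two-term values come out negative), and everything is parameterised by log10 of L so that very large L does not overflow.

```python
import math

# C2 as it appears in the talk: one sixth of log(8 / (3e)), a small negative number.
C2 = math.log(8.0 / (3.0 * math.e)) / 6.0


def expansion_terms(log10_L):
    """First three terms of the critical probability for L = 10**log10_L.

    Requires L > e so that log log log L is defined.
    """
    log_L = log10_L * math.log(10.0)
    loglog = math.log(log_L)
    logloglog = math.log(loglog)
    first = loglog ** 2 / (12.0 * log_L)
    second = -loglog * logloglog / (3.0 * log_L)   # sign is an inferred assumption
    third = (math.log(9.0 / 2.0) + 1.0) * loglog / (6.0 * log_L)
    return first, second, third


def crossover_log10_L(lo=100.0, hi=5000.0, iterations=200):
    """Bisection for the log10(L) where the first term overtakes |second term|."""
    def gap(x):
        first, second, _ = expansion_terms(x)
        return first + second   # negative while the second term dominates

    for _ in range(iterations):
        mid = 0.5 * (lo + hi)
        if gap(mid) < 0:
            lo = mid
        else:
            hi = mid
    return 0.5 * (lo + hi)


if __name__ == "__main__":
    print("C2 =", C2)
    print("first term exceeds second from about L = 10 **", crossover_log10_L())
    for exponent in (3, 6, 100):
        print(exponent, expansion_terms(exponent))
```

With these assumptions the crossover lands near an exponent of 2390, consistent with the figure quoted in the talk, and the value of `C2` agrees with the quoted minus 0.0032.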
Because it only vanishes around where L is like 10 to the 10 to the 1400, which is according to Wolfram [indiscernible] googolplex to the power of googol and then some more. So that's numbers, not rival companies. And also the third term is huge until way, way far off. So okay how did we manage to find this? Still have a couple of minutes to tell you. Well, the main idea is that if you want to know what this critical value is, all you need to do is find out the probability of something much smaller to grow large, because once it reaches a certain size, that's X by Y, which we have to figure out of course, then it will keep on growing. So the probability that it's internally spanned is basically equal to the probability that there is such a cluster inside of it which is much smaller. And so basically you just want to treat this like a binomial. And you just say, well, the P should be such that L squared over X times Y, and L squared is much larger than X times Y, so if you take a really large number and divide it by a smaller number, then that's basically the same as saying L squared. And so this is proportional to the inverse of this probability. L squared is proportional to the inverse of the probability. So what we really want to know is the inverse of the probability and this is where all the work goes. And here we see that this probability that X times Y is internally spanned is the exponential of minus two times C1 over P log squared 1 over P, plus 2 times C2 over P log 1 over P. So the main term has log squared 1 over P and the correction term has log 1 over P. If we take 1 over the square root of this, of course we get our value of L and then we can figure out what PC is from that. So if we write it slightly differently, we see that it looks like this. So it's 1 over 6 P log squared 1 over P, plus 1 over 3 P log 8 over 3 times E -- that E is there because I didn't want to write a minus one-third -- and log 1 over P. 
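Written out, the estimate described here reads roughly as follows. This is a reconstruction from the spoken formula, not a copy of the slide: the dots stand for lower-order terms whose precise size is not stated in the talk, and the reading as an exponential is inferred from the statement that one over the square root of the probability gives $L$.

```latex
% Probability that the small X-by-Y seed is internally spanned:
\mathbb{P}_p\big(X \times Y \text{ is internally spanned}\big)
  \;=\; \exp\!\left(-\frac{2C_1}{p}\log^2\frac1p \;+\; \frac{2C_2}{p}\log\frac1p \;+\; \dots\right),
\qquad C_1 = \frac{1}{12}, \quad C_2 = \frac16\log\frac{8}{3e} \approx -0.0032

% Equivalently, with the constants inserted:
  \;=\; \exp\!\left(-\frac{1}{6p}\log^2\frac1p \;+\; \frac{1}{3p}\log\!\Big(\frac{8}{3e}\Big)\log\frac1p \;+\; \dots\right)

% Critical length, from L^2 being proportional to the inverse of this probability:
\log L_c \;=\; \frac{C_1}{p}\log^2\frac1p \;-\; \frac{C_2}{p}\log\frac1p \;+\; \dots
```

The two constants are consistent with each other: $2C_2 = \tfrac13 \log\tfrac{8}{3e}$, and $\log\tfrac{8}{3e} = \log\tfrac83 - 1$ is close to zero because $8/3$ is close to $e$.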
And if we split this up, it's just depending on two numbers, the number 3 and the number 8. Also a number 2 there but that number 2 doesn't do anything. That's universal for these kind of problems. So where do the 3 and the 8 come from? The 3 and the 8 basically result from the way this thing grows. So the 3 comes from the fact that, up to the vertical rotations, the rectangle will grow to the side if it has a site next to it at distance one or distance two. This is distance one, distance two, distance three, so three ways. And it will grow vertically if it has a pair of infected sites right on top of it and again, up to translations, there are basically eight such sites. Now I'm cheating because there's not eight such sites. And actually for much of the work for the lower bound, this will be fine, to pretend like there are eight. But for the upper bound of course we'll have to be a bit careful because it could be that one of these sites is not yet activated at the time that we get there with the rectangle. So indeed we can draw all kinds of annoying things that really make our life hard. Like what if these pairs overlap, that's something you have to bound with the probability. It's not so hard. What if they get activated at some time later than 1, like that light gray rectangle over there, and we can have them at arbitrarily large times. Now, again from a probabilistic point of view, you have to do some work with the combinatorics, this is not so hard, but the problem is at some point they get arbitrarily wide and that turns out to be really annoying if you want to be precise about these things. So that's for you an indication of what is hard about this proof. For the rest, we just did what everybody does, just take all the best ideas from the previous papers about bootstrap percolation and cobbled them together into something that looks okay. But here is where we really had our work, trying to understand how to see how these things grow. 
So like I mentioned, once we have this particular bound, we can find the critical length and it is this thing, and then from here on we still want to calculate PC. How does it happen that here we have an expression that only has two constants and yet we find for PC an expression that has three terms? Well, this is actually quite easy. All you have to notice is that there's one L but there's three times 1 over the P in there. So if we take this particular critical length, here I'm assuming the second term isn't there so I could fit it on the slide for you, so you could read along with me. If we have just the first term, we get the first two terms of PC quite easily, because PC times log L is now some function of 1 over PC, log squared of 1 over PC. And if we write it the other way around, we get 1 over P, which we can express also in this way. We take the right-hand one and plug it into the left-hand one and what we get is this. We expand this out and what we get here is this C1. So that's the 1 over 12, log-log L squared, and we have a thing that looks almost like what we need, but still there's a log-log 1 over PC there. So now we use from the initial bound on the critical length that we can do it like this and so we can just replace that 1 over PC by a log L without paying too much and then we get the first two terms of this thing. So I then went to simulating, because I wanted to see how with our formula this thing actually looks much better. I'm so bad at this stuff that I only came to 500 and then I had to run my computer for an entire night to get this 500. It's really embarrassing. If I had known that this was going to be so hard, I would have -- it's not hard. I'm sure somebody knows how to do this much better than I do. But I wanted to get larger numbers but I didn't. For purposes of illustration the four numbers will still do. What if we take the first order term. It's really bad. It actually goes up at the beginning. 
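The substitution argument for the first two terms can be written as a short worked derivation. This is a sketch reconstructed from the spoken description, keeping only the first term of the critical length as the speaker does, with $C_1 = 1/12$ and $p = p_c$.

```latex
\begin{align*}
\log L &= \frac{C_1}{p}\,\log^2\frac1p
  && \text{(critical length, first term only)} \\
\frac1p &= \frac{\log L}{C_1 \log^2(1/p)}
  && \text{(the same relation, solved for } 1/p\text{)} \\
\log\frac1p &= \log\log L \;-\; 2\log\log\frac1p \;-\; \log C_1
  && \text{(take logarithms)} \\
p\,\log L &= C_1\Big(\log\log L - 2\log\log\frac1p - \log C_1\Big)^{2}
  && \text{(plug into the first line)} \\
&= C_1(\log\log L)^2 \;-\; 4C_1\,\log\log L\,\log\log\frac1p \;+\; O(\log\log L) \\
&= \frac{(\log\log L)^2}{12} \;-\; \frac{\log\log L\,\log\log\log L}{3} \;+\; O(\log\log L)
\end{align*}
```

The last step uses $\log(1/p) = (1+o(1))\log\log L$, which follows from the third line, so replacing $\log\log(1/p)$ by $\log\log\log L$ costs only $o(\log\log L)$. Dividing by $\log L$ gives the first two terms of $p_c$; the third term comes from the $O(\log\log L)$ remainder together with the $C_2$ term that was dropped here.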
That's never a good sign if your PC goes up when you increase the size of your lattice. So actually you can see that at 10 to the power of 100 -- well, we still don't really see convincing numbers there. If you go to the second order term, if we had kept it at that, we would have been even more embarrassed because then I had to just show you this here. They're almost all negative because the second term is bigger than the first one like I explained earlier. So then there's the third order term. And well frankly they don't look so good. At 1 million, it's 0.06 but I'm almost there already at 500. So there's something going on here. There are more terms that we haven't figured out yet. So we can conjecture, and actually we're quite close to proving this, I hope we can make it, but I'm not sure, that actually there's a 1 over P, that that's the third term. And if you do that, then you can easily calculate a couple of other extra terms, namely two extra terms. And they again are just the same numbers repeated and so this already looks good. But let's go for a slightly stronger conjecture, which I'm sure we cannot prove with what we know now, but if we have also this C3, which should exist, then we can also get the 6th term and it's also big, and after this I'm kind of confident to say that you have to go to higher orders of log to get extra terms. Divide by log to some power, which could still be big, admittedly. But if we have this, then with the conjecture at C3 equals 0, I have to pick some number, couldn't make it fit that well. But at C3 equals 0 this kind of looks encouraging because at least now the 500 value is, it seems to be somewhere in between the 500 value of the conjecture and the 500 value and the thousand value. So maybe, maybe we can find some way. I'm going to -- when I have a student, this is the first thing I'm going to make him do is try and figure out how to simulate this stuff and then come back to me with what this C3 could be. 
Anyways, that's I suppose all I have to say. So thank you for listening. [applause]. >> David Wilson: Questions? No questions. >>: I have more of a philosophical question. You have these amazing -- first of all, I'm impressed with your calculations because it's amazing to have this very slowly converging sequence of terms and to get it so precisely. >> Tim Hulshof: It's pretty weird, yeah. >>: It actually starts converging at 10 to the 10 to the 240 or whatever it was. >> Tim Hulshof: 2,000. >>: That's way larger than the atoms in the universe. >> Tim Hulshof: Absolutely. There's no way ever that a computer could -- >>: Makes me wonder can you really speak about convergence? >> Tim Hulshof: That's fair. Philosophical. I have to say, though, I think Ander had a number there that was way bigger still than the number I showed, 1 over 2 to the power 2 to the power something. So philosophically speaking, I'm not sure. >>: But it's kind of interesting here, isn't it? It's intended originally as a physics model. >> Tim Hulshof: Yes. It was a physics model, of course. But it could still be a physics model, it's just that the finite size effects of this thing are absolutely overwhelmingly larger than what you would expect them to be. Or hope them to be. >> David Wilson: Any questions? Let's thank the speaker again. [applause]